Abstract

- When designing an agent-based simulation, an important question to answer is how to model the decision making processes of the agents in the system. A large number of agent decision making models can be found in the literature, each inspired by different aims and research questions. In this paper we provide a review of 14 agent decision making architectures that have attracted interest. They range from production-rule systems to psychologically- and neurologically-inspired approaches. For each of the architectures we give an overview of its design, highlight research questions that have been answered with its help and outline the reasons for the choice of the decision making model provided by the originators. Our goal is to provide guidelines about what kind of agent decision making model, with which level of simplicity or complexity, to use for which kind of research question.

- Keywords:

- Decision Making, Agents, Survey

Introduction: Purpose & Goals

- 1.1

In computational social science in general and in the area of agent-based

social simulation (ABSS) in particular, there is an ongoing

discussion on how best to model human decision making.

The reason for this is that although human decision making is

very complex, most computational models of it are rather simplistic

(Sun 2007).

As with any good scientific model, when modelling humans, the

modelled entities should be equipped with just those properties and behavioural

patterns of the real humans they are representing that are relevant in the

given scenario and no more or less.

- 1.2

The question therefore is “What is a good (computational) model of a human (and

its decision making) for what kind of research question?”

A large number of

architectures and models that try to represent human decision making

have been designed for ABSS. Despite their common goal, each architecture

has slightly different aims and as a

consequence incorporates different assumptions and simplifications. Being aware

of these differences is therefore important when selecting an agent

decision making model in an ABSS.

- 1.3

Due to their number, we are not able to review all existing

models and architectures for human decision making in this paper. Instead we

have selected examples of established models and architectures as well as

some others that have attracted attention. We have aimed

to cover a diversity of models and architectures, ranging from simple production

rule systems (Section 3) to complex

psychologically-inspired cognitive ones (Section

7). In our selection we focussed on

(complete) agent architectures, which is why we did not include any

approaches (e.g. learning algorithms) which focus only on parts of an

architecture.

For each architecture, we outline research questions that have been answered

with its help and highlight reasons for the choices made by the authors.

Using the overview of existing systems, in Section

8 we aim to fulfil our overall goal, to

provide guidelines about which kind of agent decision making model, with which level

of simplicity or complexity, to use for which kind of research question or

application.

We hope that these guidelines will help researchers to identify where the particular strengths of different agent architectures lie, and that the overview will help them decide which agent architecture to pick in case of uncertainty.

- 1.4

The paper is structured as follows: In the next section we provide a

discussion of the most common topics and foci of ABSS. The discussion

will be used to determine dimensions for classifying different agent

decision making models as well as outlining their suitability for particular

research topics.

We then present production rule systems

(Section

3) and deliberative agent

models, in particular the belief-desire-intention idea and its derivatives

(Section 4).

Section 5 is on models that

rely on norms as the main aspect of their deliberative component. Sections 6 and

7 review cognitive agent decision

making models. Section 6 focuses on “simple”

cognitive architectures, while Section 7 has a

closer look at psychologically and neurologically inspired models.

The paper closes with a discussion of the findings and provides a

comparison of the models along a number of dimensions. These dimensions

include the applications and research questions the different models might

be used for. The section also points out issues that are not covered

by any of the architectures in order to encourage research in these

areas.

Dimensions of Comparison

- 2.1

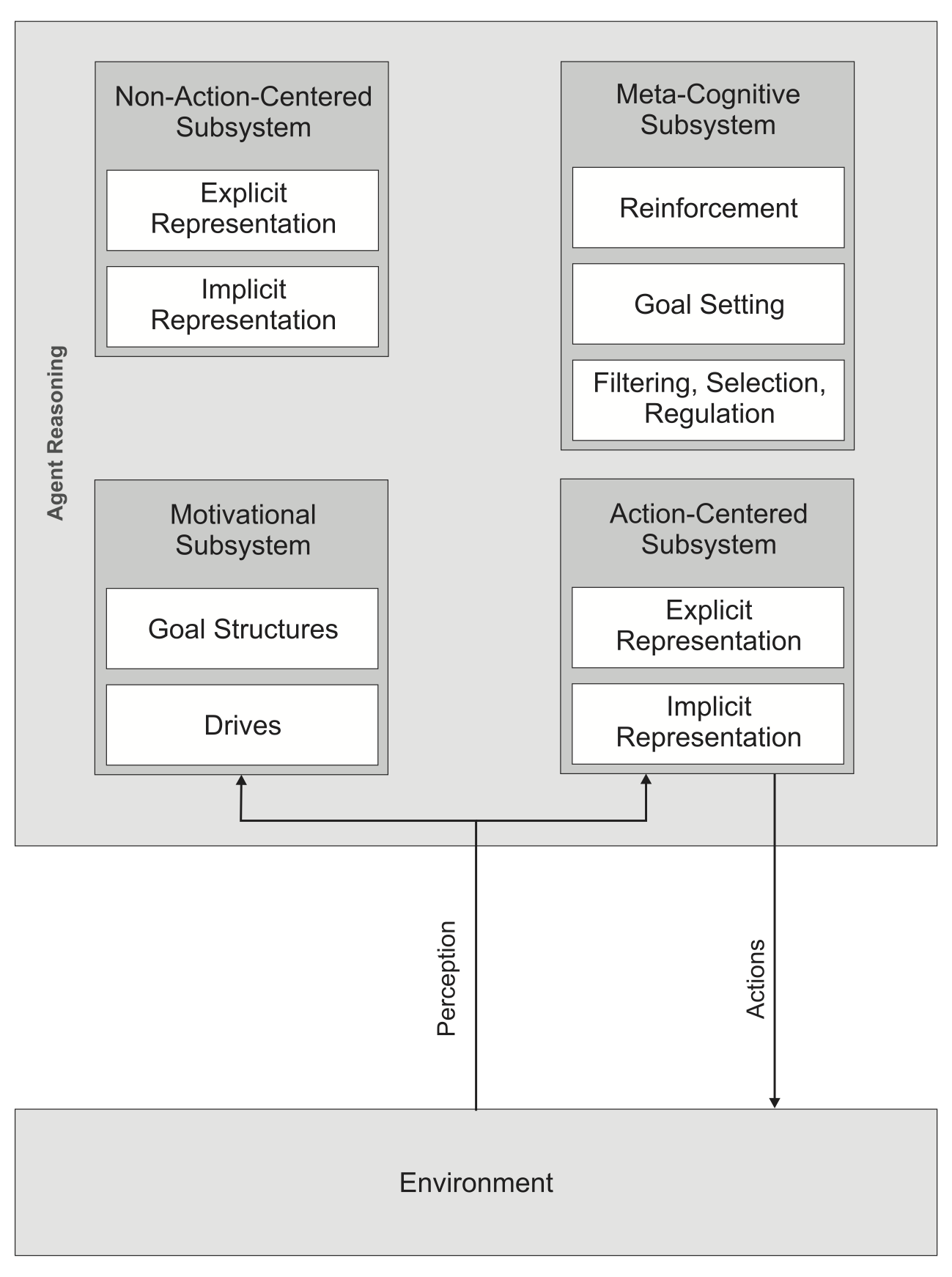

- In order to be able to discuss the suitability of different agent

architectures for different kinds of ABSS, a question to be answered is what

kinds of ABSS exist and are of interest to the ABSS community.

Starting from general ABSS overview articles (e.g. Gilbert (2004),

Meyer et al. (2009) or Helbing & Balletti (2011)) we filter out current research topics

and derive agent properties that might be of interest to

answer typical research questions within these general topics. The derived

properties are then used to define dimensions that are used in the following

sections to compare the different agent architectures.

- 2.2

One of the earlier attempts to categorize ABSS was conducted

by Gilbert (2004, p.6). He outlines five high-level dimensions by which

ABSS in general can be categorised, including for example the degree to which

ABSS attempt to incorporate the detail of particular targets. The last of his dimensions deals with agents (and indirectly

their decision making), by comparing ABSS by means of the complexity of

the agents they involve. According to Gilbert this complexity of agents might

range from “production system architectures” (i.e. agents that follow simple

IF-THEN rules) to agents with sophisticated cognitive architectures such as SOAR

or ACT-R. Looking at the suitability of these different architectures for

different research questions/topics, Gilbert cites Carley et al. (1998), who

describe a number of experiments comparing models of organizations using agents

of varying complexity. In these cited experiments Carley et al. (1998) conclude

that simpler models of agents are better suited if the objective of the ABSS is

to predict the behaviour of the organization as a whole (i.e. the macro-level

behaviour), whereas complex and more cognitively accurate architectures were

needed to accurately represent and predict behaviour at the individual or small

group level.

- 2.3

A slightly different distinction is offered by Helbing & Balletti (2011), who

propose the three categories:

- Physical models that assume that individuals are mutually reactive to current (and/or past) interactions.

- Economic models that assume that individuals respond to their future expectations and take decision in a selfish way.

- Sociological models that assume that individuals respond to their own and other people's expectations (and their past experiences as well). Helbing & Balletti (2011, p.4)

- 2.4

In Helbing's classification, simple agent architectures such as rule-based

production systems would be best suited for the physical models and the

complexity and ability of the agents would need to increase when moving to the

sociological models. In these sociological models, the focus on modelling social

(human) interaction might require that agents can perceive the social network in which they are embedded, that they are able to communicate, or even that they support more complex social concepts such as the Theory of Mind (note 1) or we-intentionality.

- 2.5

Summarising, we identify two major dimensions that seem

useful for distinguishing agent architectures:

- The Cognitive Level of the agents, i.e. whether they are purely reactive, have some form of deliberation, simple cognitive components or are psychologically or neurologically inspired (to represent human decision making as closely as possible), and

- The Social Level of the agents, i.e. the extent to which they are able to distinguish social network relations (and status), what levels of communication they are capable of, whether they have a theory of mind or to what degree they are capable of complex social concepts such as we-intentionality.

- 2.6

Another way of categorizing ABSS is in terms of application areas. Axelrod & Tesfatsion (2006) list:

(i) Emergence and Collective behaviour, (ii) Evolution, (iii) Learning, (iv) Norms, (v) Markets, (vi) Institutional Design, and (vii) (Social) Networks as examples of application areas.

- 2.7

Other candidates for dimensions to distinguish agent architectures

are:

- Whether agents are able to reason about (social) norms, institutions and organizational structures; what impact norms, policies, institutions and organizational structures have on the macro-level system performance; and how to design normative structures that support the objectives of the systems designer (or other stakeholders); and

- Whether agents can learn and if so, on what kind of level they are able to learn; e.g. are the agents only able to learn better values for their decision functions, or can they also learn new decision rules?

- 2.8

The final dimension we shall use is the affective level that an agent is capable of expressing. This dimension results from a discussion of current

publication trends in the Journal of Artificial Societies and Social Simulation

(JASSS) provided by Meyer et al. (2009). Meyer et al. use a

co-citation analysis of highly-cited JASSS papers to visualize the thematic

structure of social simulation publications. Most of the

categories found thereby are similar to those of Axelrod & Tesfatsion (2006). However, they also

include emotions as an area of research. We cover this

aspect with a dimension indicating the extent to which an agent architecture

can be used to express emotions. Research questions

that might be answered with this focus on affective components are, for example,

how emotions can be triggered, how they influence decision making and what a

change in the decision making of agents due to emotions implies for the system

as a whole (note 2).

- 2.9

Summing up, the five main dimensions shown in Table

1 can be used to classify ABSS work in general and

are therefore used for distinguishing agent architectures in this

paper.

Table 1: Dimensions for Comparison

| Dimensions | Explanation |
|---|---|
| Cognitive | What kind of cognitive level does the agent architecture allow for: reactive agents, deliberative agents, simple cognitive agents or psychologically or neurologically-inspired agents? |
| Affective | What degree of representing emotions (if any at all) is possible in the different architectures? |
| Social | Do the agent architectures allow for agents capable of distinguishing social network relations (and status), what levels of communication can be represented and to what degree can one use the architectures to represent complex social concepts such as the theory of mind or we-intentionality? |
| Norm Consideration | To what degree do the architectures allow to model agents which are able to explicitly reason about formal and social norms as well as the emergence and spread of the latter? |
| Learning | What kind of agent learning is supported by the agent architectures? |

Production Rule Systems

- 3.1

Production rule systems, which consist primarily of a set of behavioural “if-then-rules” (Nilsson 1977), are symbolic systems (note 3) (Chao 1968) that have their

origin in the 1970s, when artificial intelligence researchers began to

experiment with information processing architectures based on the matching of

patterns. Whereas many of the first production rule systems were applied

to rather simple puzzle or blocks world problems, they

soon became popular for more “knowledge-rich” domains such as automated

planning and expert systems.

- 3.2

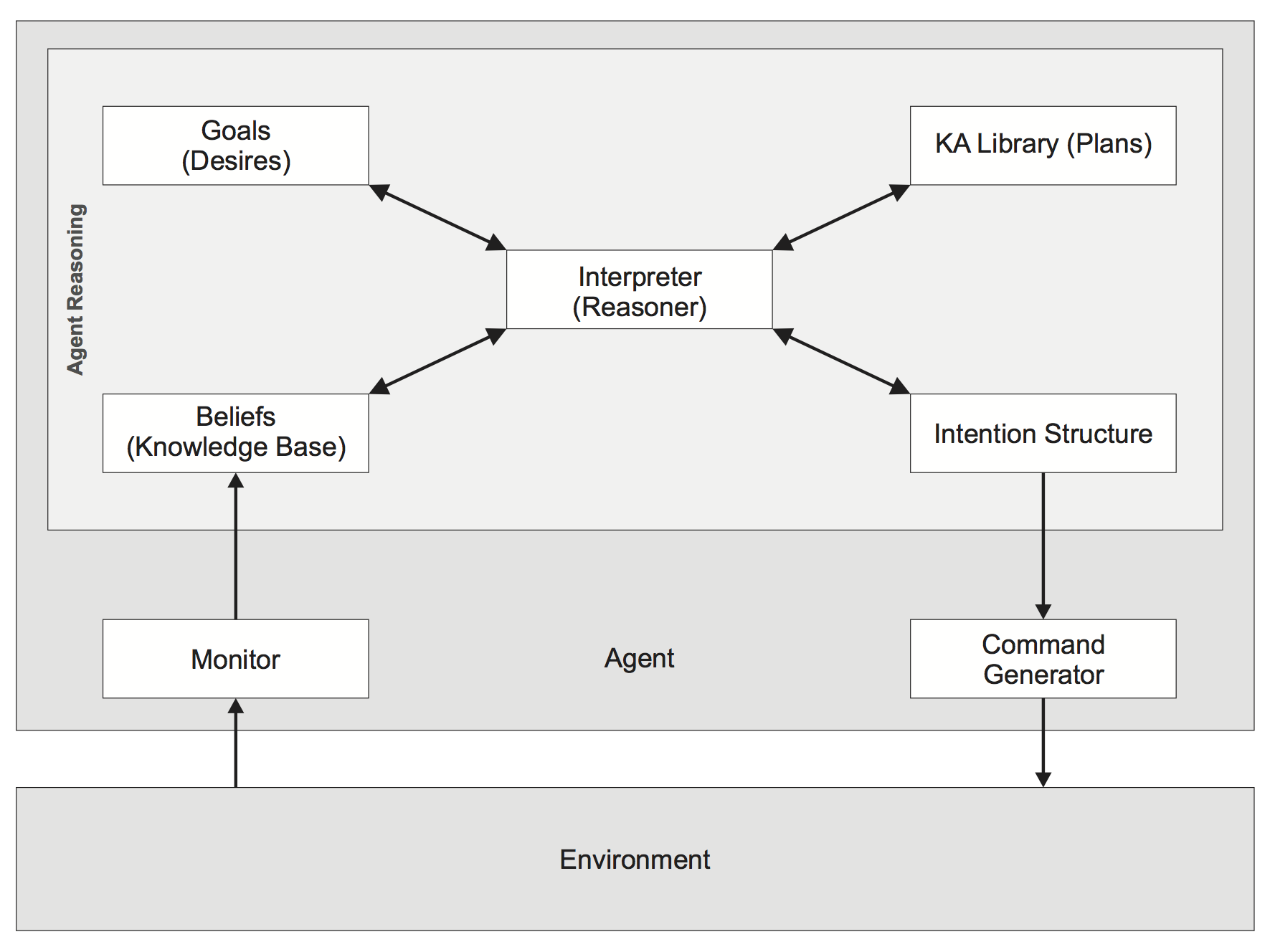

Figure 1 shows the typical decision making cycle of a

production rule system. It determines what actions (output) are chosen by an

agent based on the input it perceives. Production rule systems

consist of three core components:

- A set of rules (also referred to as productions) that have the form Ci → Ai where Ci is the sensory precondition (also referred to as IF statement or left-hand side (LHS)) and Ai is the action that is to be performed if Ci holds (the THEN part or right-hand side (RHS) of a production rule).

- One or more knowledge databases that contain information relevant to the problem domain. One of these databases is the working memory, a short-term memory that maintains data about the current state of the environment or beliefs of the agent.

- A rule interpreter which provides the means to determine in which order rules are applied to the knowledge database and to resolve any conflicts between the rules that might arise.

- 3.3

The decision making cycle depicted in Figure 1 is referred

to as a forward chaining recognise-act cycle (Ishida 1994).

It consists of four basic stages in which all of the above mentioned components

are used.

- Once the agent has made observations of its environment and these are translated to facts in the working memory (note 4), the IF conditions in the rules are matched against the known facts in the working memory to identify the set of applicable rules (i.e. the rules for which the IF statement is true). This may result in the selection of one or more production rules that can be “fired”, i.e. applied.

- If there is more than one rule that can be fired, then a conflict resolution mechanism is used to determine which to apply. Several algorithms, designed for different goals such as optimisation of time or computational resources, are available (McDermott & Forgy 1976). If there are no rules whose preconditions match the facts in the working memory, the decision making process is stopped.

- The chosen rule is applied, resulting in the execution of the rule's actions and, if required, the updating of the working memory.

- A pre-defined termination condition such as a defined goal state or some kind of resource limitation (e.g. time or number of rules to be executed) is checked, and if it is not fulfilled, the loop starts again with stage 1 (note 5).
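The four stages of the recognise-act cycle can be illustrated with a minimal Python sketch. It is not taken from any of the cited systems: the `Rule` fields, the priority-based conflict resolution and the rule that skips productions whose effects are already in working memory are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    """A production C_i -> A_i (all field names are illustrative)."""
    name: str
    condition: FrozenSet[str]   # LHS: facts that must all be in working memory
    additions: FrozenSet[str]   # RHS: facts the action writes to working memory
    priority: int = 0           # assumed conflict-resolution criterion


def recognise_act(rules: List[Rule], facts: Iterable[str], goal: str,
                  max_cycles: int = 100) -> Tuple[Set[str], List[str]]:
    """Run a forward-chaining recognise-act loop and return (memory, fired rules)."""
    memory: Set[str] = set(facts)
    fired: List[str] = []
    for _ in range(max_cycles):  # resource limit, part of the stage 4 check
        # Stage 1: match rule conditions against working memory.
        # Assumption: rules whose effects are already present are skipped,
        # so that a rule cannot fire forever.
        conflict_set = [r for r in rules
                        if r.condition <= memory and not r.additions <= memory]
        # Stage 2: stop if nothing matches, otherwise resolve the conflict
        # (here simply by highest priority).
        if not conflict_set:
            break
        chosen = max(conflict_set, key=lambda r: r.priority)
        # Stage 3: apply the chosen rule and update working memory.
        memory |= chosen.additions
        fired.append(chosen.name)
        # Stage 4: check the termination condition (goal state reached).
        if goal in memory:
            break
    return memory, fired


rules = [
    Rule("see_food", frozenset({"hungry", "food_visible"}),
         frozenset({"moving_to_food"}), priority=1),
    Rule("eat", frozenset({"moving_to_food"}), frozenset({"fed"}), priority=2),
    Rule("wander", frozenset({"hungry"}), frozenset({"wandering"}), priority=0),
]
memory, fired = recognise_act(rules, {"hungry", "food_visible"}, goal="fed")
print(fired)  # ['see_food', 'eat']
```

Even in this toy example, the conflict set has to be recomputed against the whole rule base in every cycle, which hints at why large rule sets become computationally expensive.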

- 3.4

One reason for the popularity of production rule

systems is their simplicity in terms of understanding the link between the

rules and their outcomes. In addition, the existence of convenient graphical

means to present decision processes (e.g. decision trees) has contributed to

their continuing use.

However, production rule systems have often been criticised as

inadequate for modelling human behaviour. The criticisms can be considered in relation to the dimensions introduced in the previous section.

- 3.5

The agents one can model with production rule systems

react with predefined rules to environmental events,

with no explicit deliberation or cognitive processes being available to them.

As such, production rule system agents are typically not capable of affective

behaviour, the understanding of and reaction to norms, the consideration of

social structures (including communication) or learning new rules or updating existing ones.

Of course, since a production rule system is Turing complete, it is in principle possible to

model such behaviours, but only at the cost of great complexity and using many rules.

This is a problem because the more rules in the system, the more

likely are conflicts between these rules and the more computation will be needed to resolve

these conflicts. This can result in long compute

times, which make production-rule systems difficult to use in

settings with large numbers of decision rules.

- 3.6

- With respect to implementation, Prolog, a general purpose logic programming language, and LISP are popular for production rule systems. In addition, several specialised software tools based on these languages are available. Two examples are JESS, a software tool for building expert systems (Friedman-Hill 2003), and JBOSS Drools, a business rule management system and an enhanced rule engine implementation (note 6).

BDI and its Derivatives

- 4.1

- We next focus on a conceptual framework

for human decision making that was developed roughly a decade after the

production rule idea: the belief-desire-intention (BDI) model.

BDI

- 4.2

- The Belief-Desire-Intention (BDI) model, which was originally based on ideas

expressed by the philosopher Bratman (1987), is one of the most popular

models of agent decision making in the agents community (Georgeff et al. 1999).

It is particularly popular for constructing reasoning

systems for complex tasks in dynamic environments (Bordini et al. 2007).

- 4.3

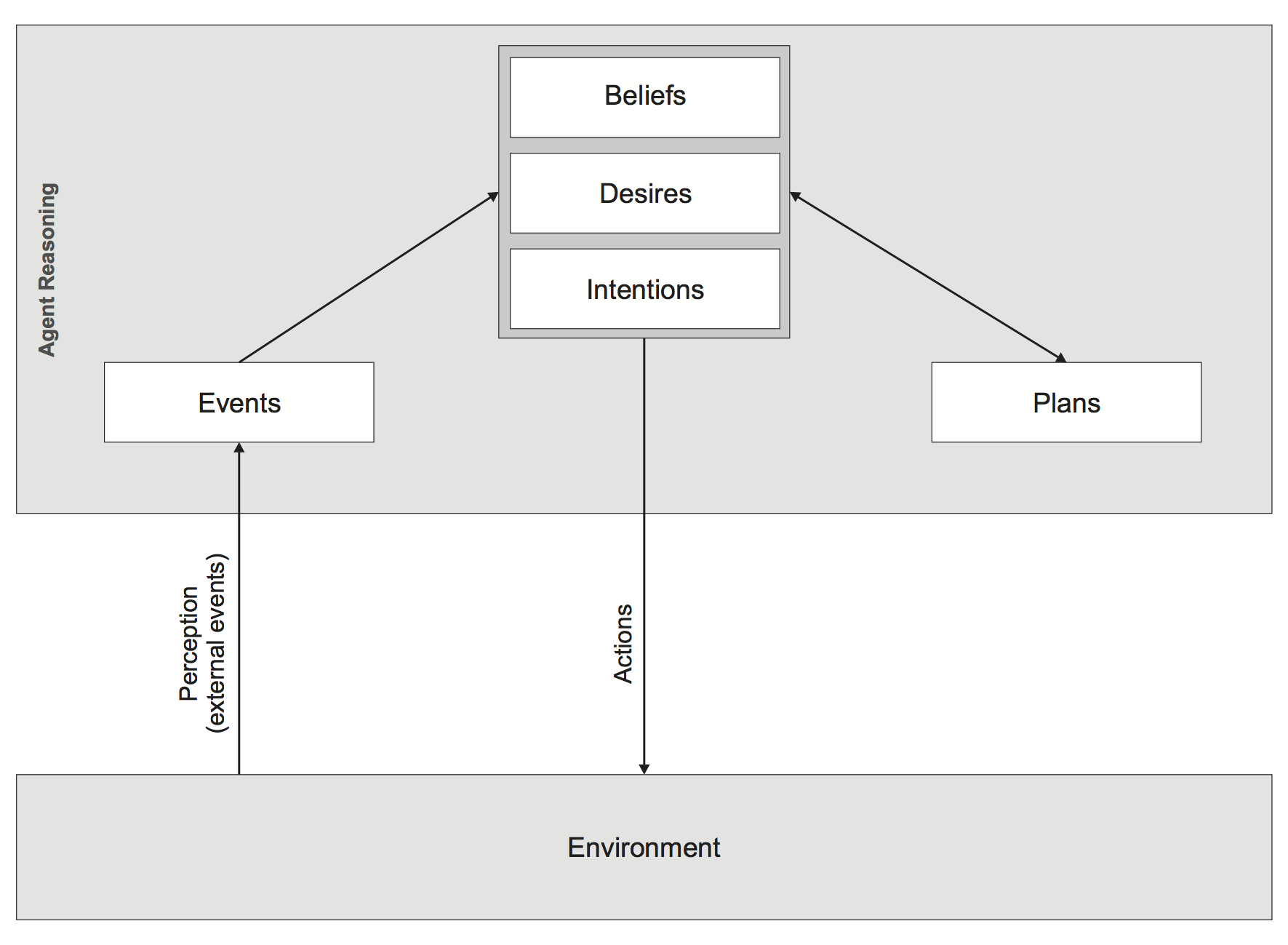

In contrast to the production rule system presented earlier, the basic idea

behind BDI is that agents have a “mental state” as the basis for their

reasoning. As suggested by its name, the

BDI model is centred around three mental attitudes, namely beliefs, desires and,

especially, intentions (Figure 2). It is therefore

typically referred to as an “intentional system”.

- Beliefs are the internalized information that the agent has about the world. These beliefs do not need to correspond with reality (e.g. the beliefs could be based on out-dated or incorrect information); it is only required that the agent considers its beliefs to be true.

- Desires are all the possible states of affairs that an agent might like to accomplish. They represent the motivational state of the agent. The notion of desires does not imply that the agent will act upon all these desires, rather they present options that might influence an agent's actions.

- BDI papers often also refer to goals. Goals are states that an agent actively desires to achieve (Dignum et al. 2002).

- An intention is a commitment to a particular course of action (usually referred to as a plan) for achieving a particular goal (Cohen & Levesque 1990) (note 7).

- 4.4

These three components are complemented by a library of plans.

The plans define procedural knowledge about low-level actions that are expected to

contribute to achieving a

goal in specific situations, i.e. they specify plan steps that define how to do

something.

- 4.5

In the BDI framework, agents are typically able to reason about

their plans dynamically. Furthermore, they are able to reason about their own

internal states, i.e. reflect upon their own beliefs, desires and

intentions and, if required, modify these.

- 4.6

At each reasoning step, a BDI agent's beliefs are updated, based on its

perceptions. Beliefs in

BDI are represented as Prolog-like facts, that is, as atomic formulae

of first-order logic. The intentions to be achieved are pushed onto a stack,

called the intention stack. This stack contains all the intentions that

are pending achievement (Bordini et al. 2007). The agent then searches through

its plan library for any plans with a post-condition that matches the intention on top of the

intention stack. Any of these plans that have their

pre-conditions satisfied according to the agent's beliefs are considered

possible options for the agent's actions and intentions. From these options,

the agent selects the plans of highest relevance to its beliefs and intentions.

This is referred to as the deliberation process.

In the Procedural Reasoning System (PRS) architecture—one of the first

implementations of BDI—this is done with the help of domain-specific meta-plans as well as information

about the goals to be achieved. Based on these goals and the plans'

information, intentions are generated, updated and then

translated into actions that are executed by the agents.
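The reasoning step described above can be sketched in a few lines of Python. This is a simplified illustration rather than the PRS algorithm: the `Plan` fields, the numeric `relevance` score standing in for the deliberation criterion, and the assumption that a chosen plan always succeeds are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Plan:
    """An entry in the plan library (field names are illustrative)."""
    name: str
    achieves: str                  # post-condition matched against intentions
    preconditions: FrozenSet[str]  # must hold according to the agent's beliefs
    steps: Tuple[str, ...]         # low-level actions
    relevance: int = 0             # assumed stand-in for the deliberation criterion


class BDIAgent:
    def __init__(self, plan_library: Iterable[Plan]) -> None:
        self.beliefs: Set[str] = set()        # atomic facts
        self.intention_stack: List[str] = []  # intentions pending achievement
        self.plan_library: List[Plan] = list(plan_library)

    def options(self) -> List[Plan]:
        """Plans whose post-condition matches the top intention and whose
        pre-conditions are satisfied by the current beliefs."""
        if not self.intention_stack:
            return []
        top = self.intention_stack[-1]
        return [p for p in self.plan_library
                if p.achieves == top and p.preconditions <= self.beliefs]

    def step(self, percepts: Iterable[str]) -> Optional[Plan]:
        self.beliefs |= set(percepts)          # belief update from perception
        candidates = self.options()
        if not candidates:
            return None                        # intention stays on the stack
        chosen = max(candidates, key=lambda p: p.relevance)  # deliberation
        self.intention_stack.pop()
        self.beliefs.add(chosen.achieves)      # assumption: the plan succeeds
        return chosen


plan_library = [
    Plan("walk", "at_work", frozenset({"at_home"}), ("walk_to_office",), 1),
    Plan("drive", "at_work", frozenset({"at_home", "has_car"}),
         ("start_car", "drive_to_office"), 2),
]
agent = BDIAgent(plan_library)
agent.intention_stack.append("at_work")
chosen = agent.step({"at_home"})
print(chosen.name)  # walk
```

Here the agent walks because the pre-conditions of the more relevant `drive` plan are not believed to hold; adding `has_car` to the percepts would change the choice.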

- 4.7

The initial applications using BDI were embedded

in dynamic and real-time environments (Ingrand et al. 1992) that required

features such as asynchronous event handling, procedural representation of

knowledge, handling of multiple problems, etc. As a result of these features,

one of the seminal applications to use an implementation of BDI

was a monitoring and fault detection system for the reaction

control system on the NASA space shuttle Discovery (Georgeff & Ingrand 1990).

For this purpose, 100 plans and over 25 meta-level plans were designed, along with over 1000 facts about the reaction control system. This demonstrates the size and complexity of the systems that BDI is

capable of dealing with. Another complex application using BDI

ideas is a network management monitor called the Interactive Real-time

Telecommunications Network Management System (IRTNMS) for Telecom Australia

(Rao & Georgeff 1991). Rao & Georgeff (1995) generalised BDI to be especially appropriate

for systems that are required to perform high-level management and control tasks in

complex dynamic environments as well as for systems where agents are required to

execute forward planning.

Besides these rather technical applications, BDI has also been used for

more psychologically-inspired research. It formed the basis for a computational model

of child-like reasoning, CRIBB (Wahl & Spada 2000) and has also been used to develop

a rehabilitation strategy to teach autistic children to reason about other

people (Galitsky 2002).

- 4.8

A detailed discussion on modelling human behaviour with BDI agents can be found in Norling (2009).

Looking at BDI using the dimensions introduced in Section 2, at the cognitive level, BDI

agents can be both reactive and actively deliberate about intentions

(and associated plans). Hence, in contrast to production

rule systems, BDI architectures do not have to follow classical

IF-THEN rules, but can deviate if they perceive this is appropriate for the

intention. Although BDI agents differ conceptually in this way from production-rule systems, most BDI

implementations do not allow agents to deliberate actively about intentions

(Thangarajah et al. 2002). However, what is different to production rule systems is

that BDI agents are typically goal persistent. This means that if an agent for

some reason is unable to achieve a goal through a particular intention, it is able to reconsider the goal in the

current context (which is likely to have changed since it chose the original

course of action). Given the new context, a BDI agent is then able to attempt

to find a new course of action for achieving the goal. Only once a

goal has been achieved or it is deemed to be no longer relevant does an agent

discard it.
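Goal persistence can be sketched as a loop that keeps a goal alive until it is achieved or no longer relevant, and re-evaluates the available courses of action after each failure. The function and parameter names below are hypothetical; the callbacks stand in for whatever plan selection and execution machinery a concrete BDI implementation provides.

```python
from typing import Callable, List, Optional


def pursue_goal(goal: str,
                options_for: Callable[[str], List[str]],
                execute: Callable[[str], bool],
                still_relevant: Callable[[str], bool],
                max_attempts: int = 10) -> Optional[str]:
    """Return the plan that achieved the goal, or None if the goal was dropped."""
    tried: List[str] = []
    for _ in range(max_attempts):
        if not still_relevant(goal):
            return None                  # goal discarded: no longer relevant
        # Reconsider the goal in the current context on every attempt.
        options = [p for p in options_for(goal) if p not in tried]
        if not options:
            return None                  # no untried course of action is left
        plan = options[0]
        tried.append(plan)
        if execute(plan):
            return plan                  # goal achieved, so it can be discarded
    return None


attempts: List[str] = []


def execute(plan: str) -> bool:
    attempts.append(plan)
    return plan == "cycle"               # in this toy world only cycling works


result = pursue_goal("at_work",
                     options_for=lambda goal: ["drive", "cycle", "walk"],
                     execute=execute,
                     still_relevant=lambda goal: True)
print(result, attempts)  # cycle ['drive', 'cycle']
```

A production rule agent, by contrast, would simply fire whichever rule matches next, with no record that the failed action was meant to serve a goal.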

- 4.9

A restriction of the traditional BDI approach is that it assumes that agents behave in line with (bounded) rationality (Wooldridge 2000).

This assumption has been criticised for being “out-dated”

(Georgeff et al. 1999), resulting in several extensions of BDI, some of which

will be discussed below. Another point of criticism is that the traditional BDI model

does not provide any specification of

agent communication (or any other aspects at the social level)

and that—in its initial design—it does not provide an explicit mechanism

to support learning from past behaviour (Phung et al. 2005). Furthermore normative

or affective considerations are not present in the traditional BDI model.

- 4.10

BDI itself is a theoretical model, rather than an implemented architecture.

Nevertheless, several

implementations (and extensions) have been developed since its origins in

the mid-1980s. One of the first explicitly embodying the BDI

paradigm was the Procedural Reasoning System (PRS) architecture

(Georgeff & Lansky 1987; Ingrand et al. 1992).

PRS was initially implemented by the Artificial Intelligence Center at SRI

International during the 1980s. After it had been applied to the reaction control system of the NASA Space Shuttle Discovery, it was developed further at other institutes and re-implemented several times, for example as the Australian Artificial

Intelligence Institute's distributed Multi-Agent Reasoning (dMARS) system

(d'Inverno et al. 1998; d'Inverno et al. 2004), the University of Michigan's C++

implementation UM-PRS (Lee et al. 1994) and their Java version called

JAM! (note 8) (Huber 1999). Other

examples of BDI implementations include AgentSpeak (Rao 1996; Machado & Bordini 2001),

AgentSpeak(L) (Machado & Bordini 2001), JACK Intelligent Agents

(Winikoff 2005), the SRI Procedural Agent Realization Kit (SPARK)

(Morley & Myers 2004), JADEX (Pokahr et al. 2005) and 2APL (Dastani 2008).

eBDI

- 4.11

- Emotional BDI (eBDI) (Pereira et al. 2005; Jiang & Vidal 2006) is one extension of the BDI

concept that tries to address the

rational agent criticism mentioned above. It does so by

incorporating emotions as one decision criterion into the agent's decision making

process.

- 4.12

eBDI is based on the idea that in order to model human behaviour properly, one

needs to account for the influence of emotions (Kennedy 2012). The eBDI approach, initially proposed by Pereira et al. (2005), was implemented by Jiang (2007) as part of her PhD work and extended to emotional maps for emotional negotiation models.

Although the idea of incorporating emotion into the agent

reasoning itself had been mentioned before eBDI by Padgham & Taylor (1996),

eBDI is the first architecture that accounts for emotions as mechanisms

for controlling the means by which agents act upon their environment.

- 4.13

As demonstrated by Pereira et al. (2008), eBDI includes an

internal representation of an agent's resources and capabilities, which,

according to the authors, allows for a better resource allocation in

highly dynamic environments.

- 4.14

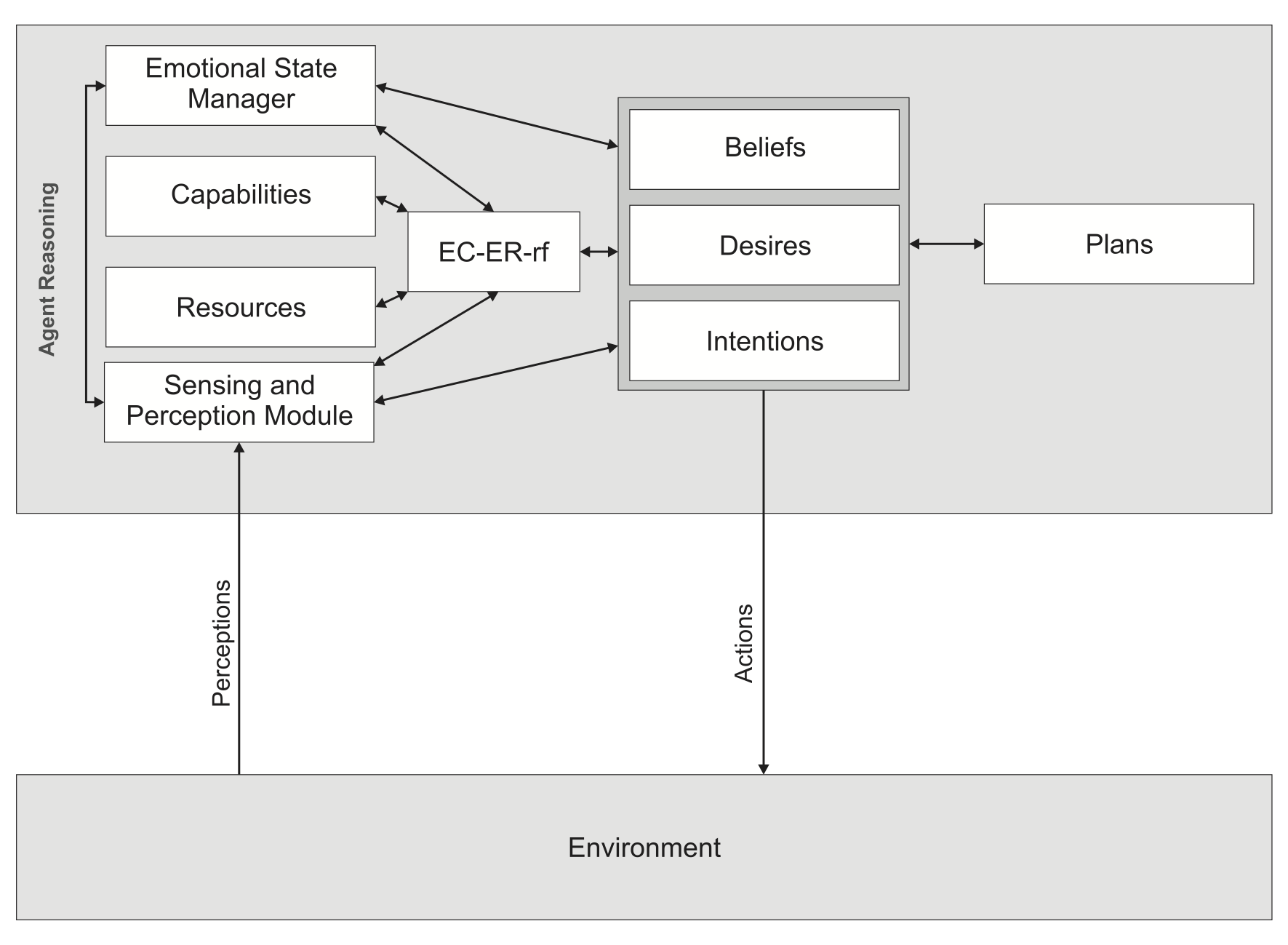

The eBDI architecture is

considered to be a BDI extension by Pereira et al. (2005). They chose to extend the

BDI framework because of its high acceptance in the agent

community as well as its logical underpinning. The eBDI architecture is depicted in

Figure 3.

- 4.15

eBDI is centred around capabilities and resources as the basis for the internal

representation of emotions. Capabilities are abstract plans of actions which the

agent can use to act upon its environment.

In order to become specific plans, the abstract plans

need to be matched against the agent's ideas of ability and opportunity. This is done

with the help of resources, which can be either physical or virtual. The agents in

eBDI are assumed to have information

about only a limited part of their environment and themselves.

This implies that an agent might not be aware of all its resources and

capabilities. In order for an

agent to become aware of these capabilities and resources, they first need to

become effective. This is

done with the help of the Effective Capabilities and Effective Resources

revision function (EC-ER-rf) based on an agent's perceptions as well as

various components derived from the BDI architecture.

In addition to capabilities and resources, two further new components are

added: a Sensing and Perception Module and an

Emotional State Manager. The former is intended to

“make sense” of the environmental stimuli perceived by the agent. It filters

information from all perceptions and other sensor stimuli and—with the help

of semantic association rules—gives a meaning to

this information. The Emotional

State Manager is the component responsible for controlling the resources and

the capabilities used in the information processing phases of the architecture.

- 4.16

None of the papers describing the eBDI architecture (i.e.

Pereira et al. (2005); Jiang et al. (2007); Jiang (2007); Pereira et al. (2008)) give a precise specification of

the Emotional State Manager, but they provide three

general guidelines that they consider fundamental for the component:

- It should be based on a well defined set of artificial emotions which relate efficiently to the kind of tasks the agent has to perform,

- extraction functions that link emotions with various stimuli should be defined, and

- all emotional information should be equipped with a decay rate that depends on the state of the emotional eliciting source (Pereira et al. 2005).
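Since the eBDI papers leave the Emotional State Manager unspecified, the following Python sketch only illustrates how the three guidelines could fit together. Everything concrete in it is an assumption: the two emotions, the stimulus table used as an extraction function, the exponential form of the decay and the two decay rates.

```python
import math
from typing import Dict, Iterable, Tuple

# Hypothetical extraction function: stimulus -> (emotion, elicited intensity).
EXTRACTION: Dict[str, Tuple[str, float]] = {
    "obstacle": ("fear", 0.8),
    "energy_source": ("joy", 0.6),
}


class EmotionalStateManager:
    """Toy emotional state with source-dependent decay (assumed design)."""

    def __init__(self, slow_rate: float = 0.1, fast_rate: float = 1.0) -> None:
        # Guideline 1: a fixed, well-defined set of artificial emotions.
        self.intensity: Dict[str, float] = {
            emotion: 0.0 for emotion, _ in EXTRACTION.values()
        }
        self.slow_rate = slow_rate   # decay while the eliciting source persists
        self.fast_rate = fast_rate   # decay once the source has disappeared

    def update(self, stimuli: Iterable[str], dt: float = 1.0) -> None:
        stimuli = list(stimuli)
        present = {EXTRACTION[s][0] for s in stimuli if s in EXTRACTION}
        # Guideline 3: the decay rate depends on the state of the source.
        for emotion in self.intensity:
            rate = self.slow_rate if emotion in present else self.fast_rate
            self.intensity[emotion] *= math.exp(-rate * dt)
        # Guideline 2: extraction functions link stimuli to emotions.
        for s in stimuli:
            if s in EXTRACTION:
                emotion, strength = EXTRACTION[s]
                self.intensity[emotion] = max(self.intensity[emotion], strength)


esm = EmotionalStateManager()
esm.update(["obstacle"])   # fear is elicited by the obstacle
esm.update([])             # obstacle gone: fear decays at the fast rate
print(round(esm.intensity["fear"], 3))
```

The resulting intensities could then be passed to the reasoning process as an additional input, in the spirit of the EC-ER-rf.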

- 4.17

Both the Sensing and Perception

Module and the Emotional State Manager are linked to the EC-ER-rf, which

directly interacts with the BDI component in the agent's reasoning steps. On

each level it adds an emotional input to the BDI process that selects the

action that the agent executes. Detailed information on the

interaction of EC-ER-rf and the BDI module is given in

Pereira et al. (2005); Pereira et al. (2008) and Jiang (2007).

- 4.18

The authors of the eBDI framework envision it

to be particularly useful for applications that require agents to be self-aware.

They demonstrate

this with several thought examples such as a static environment

of a simple maze with energy sources and obstacles and a hunting scenario.

One larger application using eBDI is an e-commerce negotiation protocol presented

by Jiang (2007).

- 4.19

eBDI is similar to BDI,

in that at the cognitive level it allows for reflective agents, but

does not provide any consideration of learning, norms or social relations.

As indicated by its name, however, it does account

for the representation of emotions on the affective level.

- 4.20

To our knowledge the only complete implementation of the eBDI architecture is

by Jiang (2007), who integrated eBDI with the OCC model,

a computational emotion model developed by Ortony et al. (1990). In the future work

section of their paper Pereira et al. (2005) point out that they intend to implement

eBDI agents in dynamic and complex environments, but

we were not able to find any

of these implementations or papers about them online.

BOID

- 4.21

- The Beliefs-Desires-Obligations-Intentions (BOID) architecture is an extension

of the BDI idea to account for normative concepts, and in particular

obligations (Broersen et al. 2002; Broersen et al. 2001).

- 4.22

BOID is based on ideas described by Dignum et al. (2000). In addition

to the mental attitudes of

BDI, (social) norms and obligations (as one component of norms) are required to

account for the social behaviour of agents. The authors of BOID argue that in order

to give agents “social ability”, a multi-agent

system needs to allow the agents to deliberate about whether or not

to follow social rules and contribute to collective interests. This deliberation

is typically achieved by means of argumentation between obligations, the actions

an agent “must perform” (for the social good), and the

individual desires of the agents.

Thus, it is not surprising that the majority of work on BOID is within the

agent argumentation community (Dastani & van der Torre 2004; Boella & van der Torre 2003).

- 4.23

The decision making cycle in BOID is very similar to the BDI one

and differs only with respect to the agents' intention (or goal) generation. When generating goals, agents also account for internalized social obligations (note 9). The outcome of this deliberation depends on the agent's attitudes towards social obligations and its own goals (i.e. which one it perceives to be the highest priority) (note 10).
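The effect of such priorities on goal generation can be illustrated with a small Python sketch. It is not the formal BOID deliberation procedure: the agent type labels, the strict priority ordering and the pairwise conflict sets are simplifying assumptions.

```python
from typing import FrozenSet, List, Set


def generate_goals(desires: List[str],
                   obligations: List[str],
                   conflicts: Set[FrozenSet[str]],
                   agent_type: str) -> List[str]:
    """Adopt candidate goals in priority order, skipping conflicting ones.

    agent_type is an illustrative label: a "social" agent considers its
    obligations first, a "selfish" agent considers its own desires first.
    """
    order = {
        "social": [obligations, desires],
        "selfish": [desires, obligations],
    }[agent_type]
    goals: List[str] = []
    for source in order:
        for candidate in source:
            compatible = all(frozenset({candidate, g}) not in conflicts
                             for g in goals)
            if candidate not in goals and compatible:
                goals.append(candidate)
    return goals


desires = ["keep_money", "go_to_party"]
obligations = ["pay_tax"]
conflicts = {frozenset({"keep_money", "pay_tax"})}

print(generate_goals(desires, obligations, conflicts, "social"))
# ['pay_tax', 'go_to_party']
print(generate_goals(desires, obligations, conflicts, "selfish"))
# ['keep_money', 'go_to_party']
```

Both agents share the same desires and obligations; only the ordering decides which side of the conflict becomes a goal.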

- 4.24

With regard to the dimensions of

comparison, BOID has the same properties as BDI (and therefore

similar advantages and disadvantages for modelling ABSS), but in contrast to BDI,

it allows the modelling of social norms (i.e. it differs on the norm dimension). In

BOID, these norms are expressed solely in terms of obligations and other

aspects of social norms are neglected.

- 4.25

So far, most work on BOID has concentrated on the

formalisation of the idea (and in particular the deliberation process). As a

result of this rather formal focus, at present no separate implementation of the

BOID architecture exists and application examples are limited to the process in

which agents deliberate about their own desires in the

light of social norms.

BRIDGE

- 4.26

- The BRIDGE architecture of Dignum et al. (2009)

extends the social norms idea in BOID and aims to provide “agents with

constructs of social awareness, 'own' awareness and reasoning update”

(Dignum et al. 2009).

- 4.27

-

It was first mentioned as a model for agent reasoning and decision making in the

context of policy modelling. The idea is that one needs to take into account

that macro-level policies relate to people with different

needs and personalities who are typically situated in different cultural

settings. Dignum et al. (2009) reject the idea that human behaviour is typically (bounded) rational

and economically motivated, and advocate the

dynamic representation of “realistic social interaction

and cultural heterogeneity” in the design of agent decision making. They

emphasise that an architecture needs to take into account those

features of the environment an agent is located in (such as policies) that

influence its behaviour from the top down.

- 4.28

-

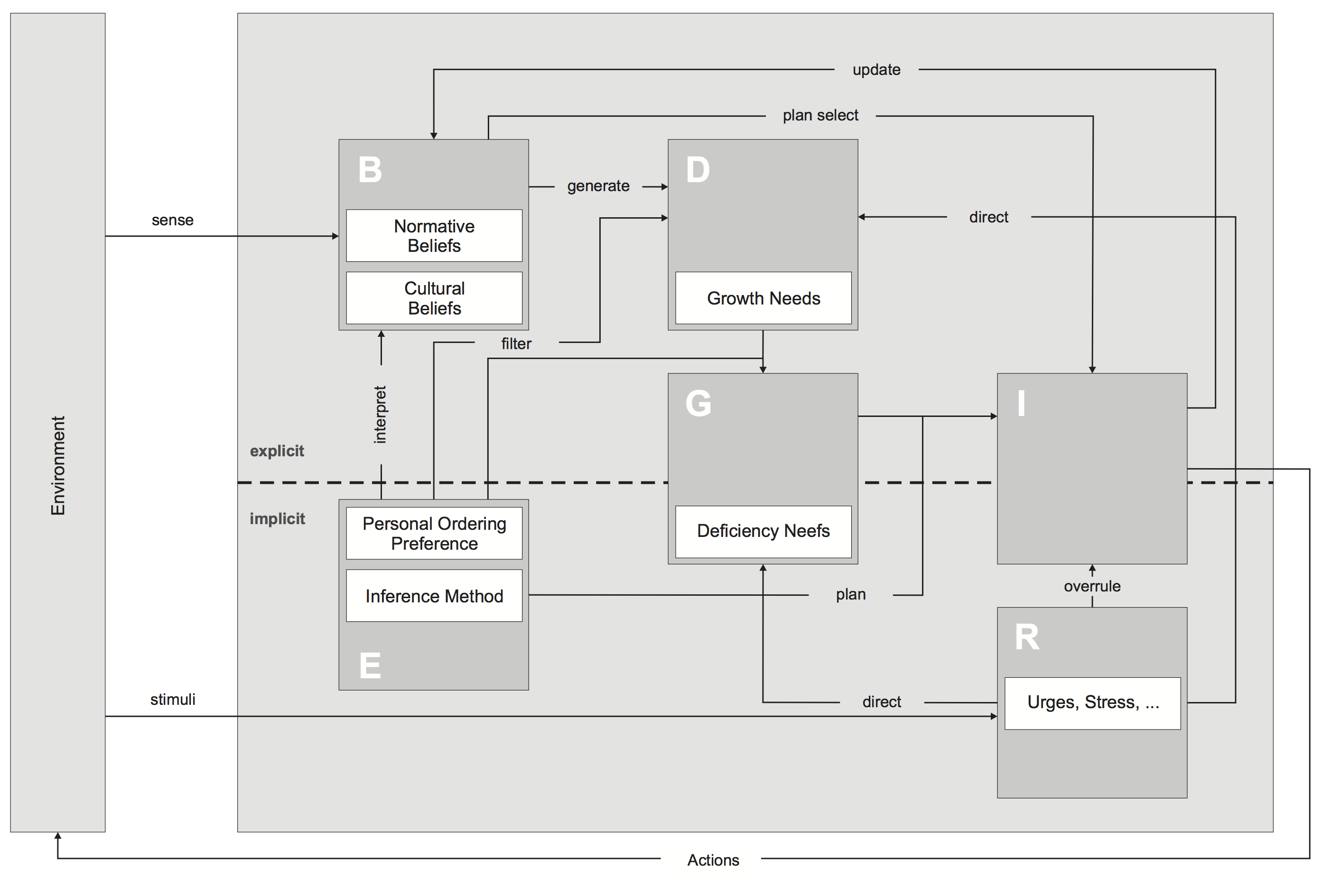

According to Dignum et al. (2009), one of the main reasons for basing the BRIDGE

architecture (Figure 4) on BDI

was its emphasis on the deliberation process

in the agent's reasoning. The architecture introduces

three new mental components (ego, response and goals) and

modifies the function of some of the BDI components.

Ego describes an agent's priorities in decision making with the help of different filters and ordering preferences. It also includes the personality type of the agent, which determines its choice of mode of reasoning (e.g. backward or forward reasoning).

Response refers to the physiological needs of the entity that is represented by the agent (e.g. elementary needs such as breathing, food, water). It implements the reactive behaviour of the agent to these basic needs. Additionally the response component is used to represent fatigue and stress coping mechanisms. Items in the response components directly influence goals and can overrule any plans (e.g. to allow for an immediate change of plan and the execution of different actions in life-threatening situations).

- 4.29

-

In contrast to other work on BDI, Dignum et al. (2009) distinguish goals

and intentions. To them, goals are generated from desires (as well as from the

agent's ego and response) and deficiency needs. Intentions are the

possible plans to realise the goals. The choice of goals (and therefore

indirectly also intentions) can be strongly influenced by response factors such

as fatigue or stress. Agents can change the order in which goals are chosen in

favour of goals aimed at more elementary needs.

The component of desires is extended in the BRIDGE architecture to also

consider ego (in addition to beliefs). They are complemented by maintenance

and self-actualization goals (e.g. morality) that do not go away by being

fulfilled. Beliefs are similar to the classical BDI idea, with the only

adaptation being that beliefs are influenced by the cultural and normative

background of the agent.

- 4.30

-

In the reasoning cycle of a BRIDGE agent, all

components work concurrently to allow continuous processing of sensory

information (consciously received input from the environment) and other

“stimuli” (subconsciously received influences). These two input streams are

first of all interpreted according to the personality characteristics of the agent

by adding priorities and weights to the beliefs that result from the inputs. These

beliefs are then sorted. The sorted beliefs function as a filter on the desires

of the agent. Based on the desires, candidate goals are selected and ordered

(again based on the personality characteristics). Then, for the candidate

goals, the appropriate plans are determined with consideration of the ego

component. In addition, desires are generated from the agent's beliefs based on

its cultural and normative setting. As in normal BDI, in a last step, one of the

plans is chosen for execution (and the beliefs updated accordingly)

and the agent executes the plan if it is not overruled by the response component (e.g. fatigue).
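
The following Python sketch walks through one pass of this cycle in a heavily simplified form. It is an illustration under our own assumptions, not part of Dignum et al. (2009): the components run sequentially rather than concurrently, the ego and personality are collapsed into a single table of belief weights, and all names (`bridge_cycle`, `weights`, `response_alert`) are hypothetical.

```python
def bridge_cycle(percepts, weights, desires, plans, response_alert=None):
    """One pass of a much simplified BRIDGE-style reasoning cycle."""
    # Interpret inputs: attach a personality-dependent weight to each belief and sort.
    beliefs = sorted(percepts, key=lambda b: weights.get(b, 0.0), reverse=True)
    # The sorted beliefs filter the desires: only desires supported by a belief
    # become candidate goals, in the order of the beliefs that support them.
    candidate_goals = [goal for b in beliefs for goal in desires.get(b, [])]
    # Determine a plan for the highest-ranked candidate goal that has one.
    chosen = next((plans[g] for g in candidate_goals if g in plans), None)
    # The response component can overrule the chosen plan (e.g. fatigue).
    return response_alert if response_alert is not None else chosen


weights = {"food_is_scarce": 0.9, "market_is_open": 0.4}
desires = {"market_is_open": ["trade"], "food_is_scarce": ["store_food"]}
plans = {"trade": "go_to_market", "store_food": "fill_granary"}
percepts = ["market_is_open", "food_is_scarce"]

normal = bridge_cycle(percepts, weights, desires, plans)
tired = bridge_cycle(percepts, weights, desires, plans, response_alert="rest")
```

In the first call the more heavily weighted belief determines the plan; in the second, the response component overrules whatever plan was chosen.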

- 4.31

-

In terms of our dimensions, although it is based on BDI, the BRIDGE

architecture has several differences. In contrast to, for example, eBDI, BRIDGE

does not explicitly represent emotions, although using the ego component, it is

possible to specify types of agents and their

different emotional responses to various stimuli. Furthermore, some social concepts are

accounted for. These concepts include a social interaction consideration, the

social concept of culture as well as a notion of self-awareness (and resulting

differentiation between oneself and other agents). On the norm dimension,

Dignum et al. (2009)

envision that BRIDGE can be used to model reactions to policies, which can be understood as a normative

concept. One of the key ideas of Dignum et al. (2009) is that the components they

introduced on top of BDI (e.g. culture and ego) influence social norms and their

internalization by agents. BRIDGE

nevertheless considers norms solely from an obligation perspective.

- 4.32

-

To the best of our knowledge, the BRIDGE architecture has not yet been implemented.

In Dignum et al. (2009) the authors mention their

ambition of implementing BRIDGE agents in an extension of 2APL in a Repast

environment. Currently the BRIDGE architecture has only

been applied in theoretical examples such as to help conceptualise the

emergence and enforcement of social behaviour in different cultural settings

(Dignum & Dignum 2009).

Normative Models

- 5.1

-

In Section 4.3, we introduced the BOID architecture as a

first step towards the integration of (social) norms in the agent decision

making process. This section extends this notion of normative architectures,

and moves away from intentional systems such as

BDI to externally motivated ones. In BDI, the agents act because of a

change in their set of beliefs and the establishment of desires to achieve a

specific state of affairs (for which the agents then select specific intentions

in the form of plans that they want to execute). Their behaviour is purely

driven by their internal motivators such as beliefs and desires. Norms are an

additional element to influence an agent's reasoning. In contrast to beliefs and

desires, they are external to the agent, established within the society/environment

that the agent is situated in. They are therefore regarded as external motivators

and the agents in the system are said to be norm-governed.

- 5.2

-

Norms as instruments to influence an agent's behaviour have been

popular in the agents community for the past decade with several works

addressing questions such as (Kollingbaum 2004):

- How are norms represented by an agent?

- Under what circumstances will an agent adopt a new norm?

- How do norms influence the behaviour of an agent?

- Under what circumstances would an agent violate a norm?

- 5.3

-

As a result of these works, several models of normative systems

(e.g. Boissier & Gâteau (2007); Dignum (2003); Esteva et al. (2000); Cliffe (2007); López y López et al. (2007)) and

models of norm-autonomous agents (e.g.

Kollingbaum & Norman (2004); Castelfranchi et al. (2000); Dignum (1999); López y López et al. (2007)) have been

developed.

However, most of the normative system models focused on the norms

rather than the agents and their decision making, and most of the

norm-autonomous agent-architectures have remained rather abstract. This is why we have selected

only three to present in this paper.

- 5.4

-

The Deliberate Normative Agents of Castelfranchi et al. (2000)

is a cognitive research-inspired abstract model of norm-autonomous agents.

Despite being one of the earlier works, their norm-aware

agents do not limit social norms to being triggers

for obligations, but also allow for a more fine-grained deliberation process.

- 5.5

-

The EMIL architecture (EMIL project consortium 2008; Andrighetto et al. 2007b) is an

agent reasoning architecture designed to account for the internalization of norms by agents.

It focuses primarily on decisions about which norms to accept and internalize

and the effects of this internalization. It also introduces the idea

that not all decisions have to be deliberated about¹¹.

- 5.6

-

The NoA agent architecture presented in

Kollingbaum & Norman (2004); Kollingbaum (2004); Kollingbaum & Norman (2003)

extends the notion of norms to include legal and social norms

(Boella et al. 2007).

Furthermore, it is one of the few normative architectures that presents a

detailed view of its computational realisation.

Deliberate Normative Agents

- 5.7

- The idea of deliberate normative agents was developed before BOID. It is based

on earlier works in cognitive science (e.g. Conte et al. (1999); Conte & Castelfranchi (1999)) and

it focuses on the idea that social norms need to be involved in the decision making process of an agent.

Dignum (1999) argues that autonomous entities such

as agents need to be able to reason, communicate and negotiate about

norms, including deciding whether to violate social norms if they are

unfavourable to an agent's intentions.

- 5.8

-

As a result of the complexity of the tasks associated with social norms,

Castelfranchi et al. (2000) argue that they cannot simply be implicitly

represented as constraints or external fixed rules in an agent architecture.

They suggest that norms should be represented as mental objects that

have their own mental representation (Conte & Castelfranchi 1995) and that interact

with other mental objects (e.g. beliefs and desires) and plans of an agent.

They propose the agent architecture shown in

Figure 5¹².

- 5.9

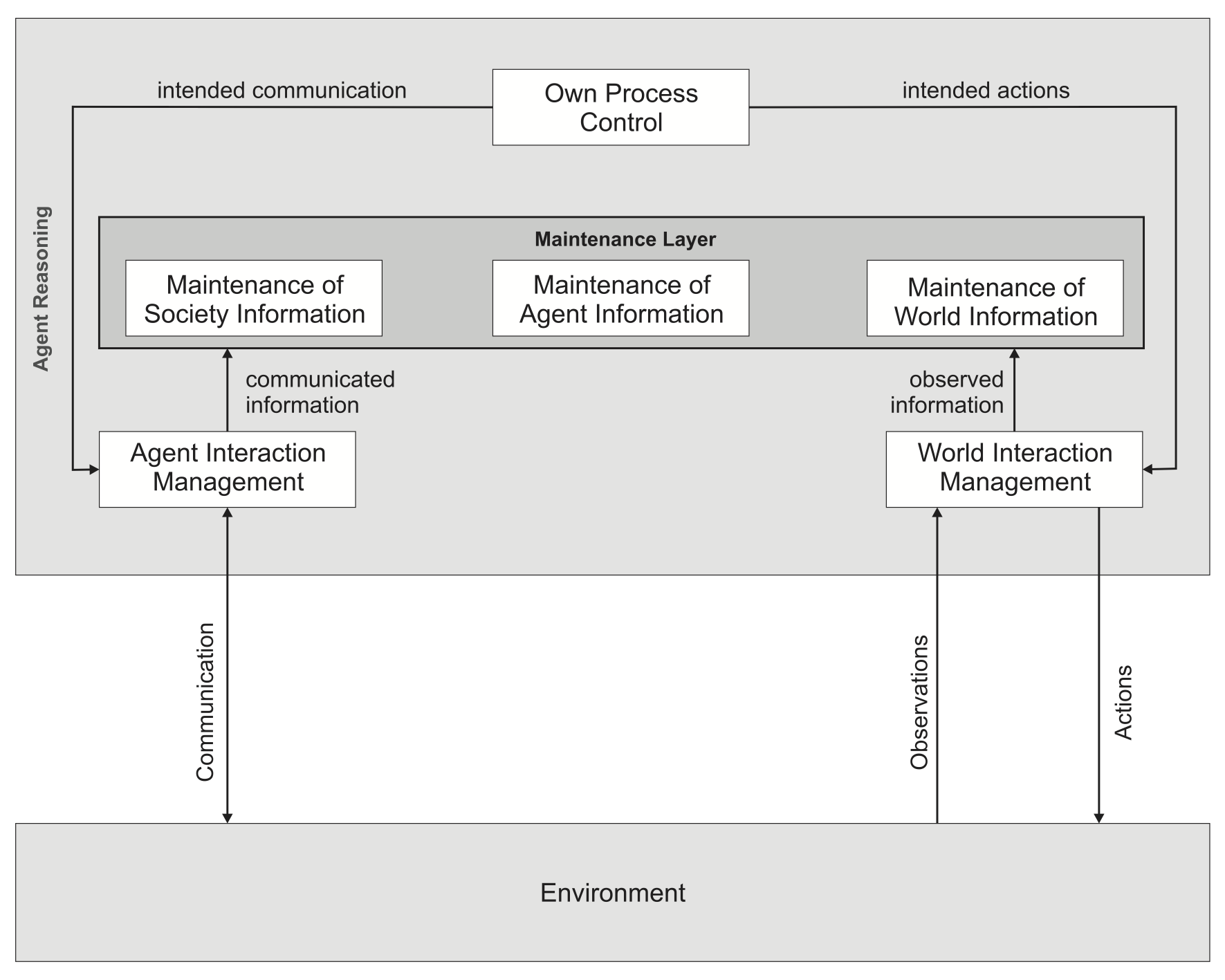

-

The architecture consists of 6 components which are loosely grouped into 3 layers. These layers

are: (i) an interaction management layer that handles the interaction of an agent with other agents (through communication) as well as the general environment; (ii) an information maintenance layer that stores the agent's information about the environment (world information), about other agents (agent information) and about the agent society as a whole (society information); and (iii) a process control layer where the processing of the information and reasoning takes place.

- 5.10

-

To reflect semantic distinctions between different kinds of information,

Castelfranchi et al. (2000) distinguish three different information levels: one

object level and two meta levels. The object level includes the information that the

agent believes. All the information maintenance layer components are at this object level.

The first meta-level contains information about how to handle

input information based on its context.

Depending on its

knowledge about this context (e.g. the reliability of the information source), it then

specifies rules about how to handle the information. In the examples

given by Castelfranchi et al. (2000), only reliable information at the interaction

management level is taken on as a belief at the

information maintenance layer. There is also meta-meta-level reasoning (i.e.

information processing at the second meta-level). The idea behind this second

meta level is that information (and in particular norms) could have effects on

internal agent processes. That is why meta-meta-information

specifies how the agent's internal processes can be changed and under which

circumstances.
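
A first meta-level rule of the kind mentioned in the example (only reliable information is taken on as a belief) could be sketched as follows. The numeric reliability scores, the `min_reliability` threshold and the function name are assumptions introduced for illustration; Castelfranchi et al. (2000) do not prescribe this representation.

```python
def accept_as_beliefs(incoming, source_reliability, min_reliability=0.5):
    """Adopt incoming information as beliefs only if its source is reliable enough.

    incoming:           iterable of (source, content) pairs from the
                        interaction management layer.
    source_reliability: context knowledge used by the first meta-level.
    min_reliability:    hypothetical threshold; unknown sources count as 0.0.
    """
    return {
        content
        for source, content in incoming
        if source_reliability.get(source, 0.0) >= min_reliability
    }


incoming = [
    ("neighbour", "queueing_is_expected"),
    ("stranger", "queue_jumping_is_fine"),
]
source_reliability = {"neighbour": 0.9, "stranger": 0.2}

beliefs = accept_as_beliefs(incoming, source_reliability)
```

Under this reading, a change to `min_reliability` itself is an example of the kind of adjustment to internal processes that the second meta-level would govern.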

- 5.11

-

At its core, the agent reasoning cycle is the same

as the BDI reasoning cycle. Based on their percepts (interaction management

layer), agents select intentions from a set of desires (process control layer)

and execute the respective plans to

achieve these intentions.

However, the consideration of norms in the architecture adds an additional

level of complexity, because the norms that an agent has internalized can

influence the generation as well as the selection of intentions. Thus, in addition

to desires, social norms specifying what an agent should/has to do can generate

new norm-specific intentions. Norms can also have an impact by providing

preference criteria for selecting among intentions.
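
The two roles that norms play here, generating intentions and ranking them, can be separated in a short Python sketch. The dictionary layout of a norm (`prescribes`, `preference`) and the additive scoring are hypothetical choices made for illustration only.

```python
def rank_intentions(desires, norms):
    """Return candidate intentions, most preferred first."""
    # Generation: desires and norm prescriptions both contribute candidates.
    candidates = list(desires)
    for norm in norms:
        for action in norm.get("prescribes", []):
            if action not in candidates:
                candidates.append(action)

    # Selection: each norm may raise or lower a candidate's preference score.
    def score(action):
        return sum(norm.get("preference", {}).get(action, 0) for norm in norms)

    # sorted() is stable, so equally scored candidates keep their original order.
    return sorted(candidates, key=score, reverse=True)


desires = ["drive_fast", "take_train"]
norms = [
    {"prescribes": ["yield_to_pedestrians"]},
    {"preference": {"drive_fast": -1, "take_train": 1}},
]

ranked = rank_intentions(desires, norms)
unconstrained = rank_intentions(desires, [])
```

Without norms the agent simply keeps its desires in their original order; with norms, a new norm-specific intention appears and the ordering of the existing candidates changes.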

- 5.12

-

Norm internalization is a separate

process in the agent reasoning cycle. It starts

with the recognition of a social norm by an agent (either through observation

or via communications).

This normative information is evaluated based on its context and stored in

the information maintenance layer. It is then processed in the

process control layer with the help of the two meta-levels of information. In

particular, the agent determines which norms it wants to adopt for itself and

which ones it prefers to ignore, as well as how it wants its behaviour to be

influenced by norms. Based on this, meta-intentions are created that

influence the generation and selection of intentions as outlined above.

- 5.13

-

Evaluating the deliberative normative agents against our dimensions, they exhibit

similar features to BOID agents, but enhanced on the

social and the learning dimensions. Not only does the deliberative

normative agent architecture include an explicit separate norm internalization

reasoning cycle, but its notion of (social) norms goes beyond obligations.

Castelfranchi et al. (2000) furthermore recognize the need for communication in

their architecture. Concerning the learning dimension, agents have limited

learning capabilities, being able to learn new norm-specific intentions.

EMIL-A

- 5.14

- The EMIL-A agent architecture¹³

(Andrighetto et al. 2007b) was developed

as part of an EU-funded FP6 project which focused on “simulating the two-way

dynamics of norm innovation”

(EMIL project consortium 2008).

- 5.15

-

This phrase refers to the idea of extending the classical micro-macro link

usually underlying ABMs to include both top-down and bottom-up

links (as well as the interaction between these two).

EMIL-A models the process of agents learning about norms in a society, the internalization

of norms and the use of these norms in the agents' decision making.

- 5.16

-

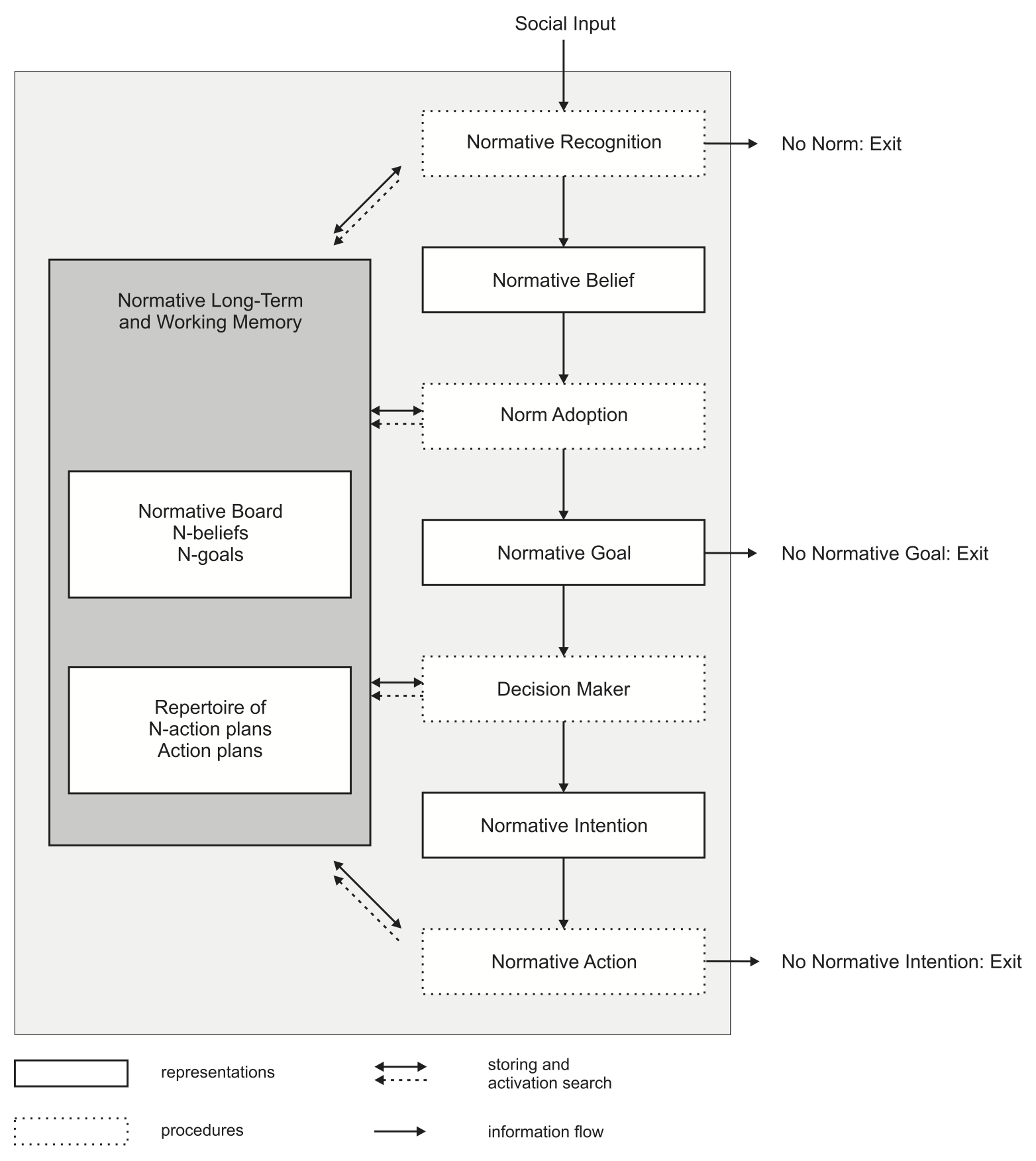

Figure 6 shows the normatively focused part of the EMIL-A architecture.

The authors make a distinction between

factual knowledge (events) and normative knowledge (rules). The agent has a

separate interface to the environment for each of these knowledge types.

- 5.17

-

The agent also has two kinds of memory,

one for each knowledge type:

(i) an event board for facts and events, and (ii) a normative frame for inferring and storing rules from the event board.

- 5.18

-

In addition to these components, the EMIL-A architecture consists of:

- four different procedures:

- norm recognition, containing the above mentioned normative frame,

- norm adoption, containing goal generation rules,

- decision making, and

- normative action planning

- three different mental objects that correspond to the components of

BDI:

- normative beliefs,

- normative goals, and

- normative intentions

- an inventory:

- a memory containing a normative board and a repertoire of normative action plans, and

- an attitudes module (capturing the internalized attitudes and morals of an agent) which acts on all procedures, activating and deactivating them directly based on goals (Andrighetto et al. 2007a).

- 5.19

-

The first step of the normative reasoning cycle is the recognition of a norm using

the norm recogniser module.

This module distinguishes two different scenarios:

(i) information it knows about and has previously classified as a norm and (ii) new (so far unknown) normative information.

- 5.20

-

In the former case, the normative input is entrenched on the normative board

where it is ordered by salience. By 'salience' Andrighetto et al. (2007a)

refer to the degree of activation of a norm, i.e. how often the respective norm

has been used by the agent for action decisions and how often it has been

invoked. The norms stored on the normative board are then considered in the

classical BDI decision process as restrictions on the goals and intentions of

the agent. The salience of a norm is important, because in case of

conflict (i.e. several norms applying to the same situation), the norm with the

highest salience is chosen.
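
A normative board with salience-based conflict resolution might look as follows in Python. This is a sketch under stated assumptions rather than the EMIL-A implementation: salience is reduced to a single counter of recorded uses and invocations, and the class and method names are hypothetical.

```python
class NormativeBoard:
    """Stores recognised norms together with a salience counter."""

    def __init__(self):
        self._situations = {}  # norm -> situations in which it applies
        self._salience = {}    # norm -> number of recorded uses and invocations

    def entrench(self, norm, situations):
        """Place a known norm on the board."""
        self._situations[norm] = set(situations)
        self._salience.setdefault(norm, 0)

    def record(self, norm):
        """Register that the norm was used in a decision or invoked."""
        self._salience[norm] += 1

    def ordered(self):
        """Norms ordered by salience, most salient first."""
        return sorted(self._salience, key=self._salience.get, reverse=True)

    def resolve(self, situation):
        """Return the most salient norm applying to the situation, or None."""
        applicable = [n for n in self.ordered() if situation in self._situations[n]]
        return applicable[0] if applicable else None


board = NormativeBoard()
board.entrench("wait_your_turn", {"queue"})
board.entrench("let_elderly_first", {"queue"})
for _ in range(3):
    board.record("wait_your_turn")
board.record("let_elderly_first")
```

Both norms apply to the queue situation, so `board.resolve("queue")` falls back on the salience ordering to pick one of them.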

- 5.21

-

When the external normative input is new, i.e. not known to the agent,

the agent first needs to internalize it. To do this, first the normative frame is activated.

The normative frame is equipped with a dynamic schema (a frame of reference)

with which to recognise and categorise an

external input as being normative, based on its properties. Properties that the

normative frame takes into account include deontic specifications,

information about the locus from which the norm emanates, information about legitimate

reactions or sanctions for transgression of the norm, etc.¹⁴ The recognition of a norm by an agent does not

imply that the agent will necessarily agree with the norm or that it understands

it fully. It only means that the agent has classified the new information as a

norm. After this initial recognition of the external input as a norm, the normative

frame is used to find an interpretation of the new norm, by

checking the agent's knowledge for information about properties of the normative frame.

Once enough information has been gathered about the new norm

and the agent has been able to determine its meaning and implications, the newly

recognised norm is turned into a normative belief. Again, normative beliefs

do not require that the agent will follow the norm.

- 5.22

-

In EMIL-A, agents follow a “why not” approach. This means

that an agent has a slight preference to adopt a new norm if it cannot find

evidence that this new norm conflicts with its existing mental objects. Adopted

normative beliefs are stored as normative goals.

These normative goals are considered in the agent's decision making. An agent

does not need to follow all its normative goals when making a decision.

When deciding whether to follow a norm, EMIL-A agents diverge from

the general classical utility-maximising modality of reasoning. An

agent will try to conform with its normative

goals unless it has a reason not to (e.g. when the benefits of

following a norm do not outweigh its costs).
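
The default-to-adopt and default-to-conform logic can be sketched in Python as two small predicates. The sketch is deterministic, whereas the text only speaks of a "slight preference", and the representation of conflicts and of reasons against conforming is a hypothetical simplification.

```python
def adopt(norm, mental_objects, conflicts):
    """'Why not' adoption: adopt unless a conflict with a mental object is found.

    conflicts: set of (norm, mental_object) pairs known to be incompatible.
    """
    return not any((norm, obj) in conflicts for obj in mental_objects)


def conforms(normative_goal, reasons_against):
    """Conform by default; deviate only if some reason against is recorded."""
    return len(reasons_against.get(normative_goal, [])) == 0


mental_objects = {"goal:arrive_on_time", "belief:queues_are_fair"}
conflicts = {("always_yield", "goal:arrive_on_time")}

adopted = [n for n in ("wait_your_turn", "always_yield")
           if adopt(n, mental_objects, conflicts)]

follows_by_default = conforms("wait_your_turn", {})
follows_when_costly = conforms(
    "wait_your_turn",
    {"wait_your_turn": ["benefits do not outweigh costs"]},
)
```

Note that adoption and conformity are separate checks: a norm can be adopted as a normative goal and still not be followed in a particular decision.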

- 5.23

-

As well as the reasoning cycle taking into account the normative goals

and intentions of an agent, the EMIL-A architecture also recognises

that not all human actions result from an extensive deliberation process.

For example, Andrighetto et al. (2007a) note that

car drivers do not normally think about stopping their car at a red

traffic light, but instead react instinctively to it. To implement this in the

EMIL-A architecture, Andrighetto et al. (2007a) introduce shortcuts, whereby internalised

norms can trigger behaviour directly in reaction to external stimuli.

- 5.24

-

Evaluating EMIL-A according to our dimensions, on the cognitive level a deliberation

architecture is used, extended by shortcuts similar to the ones

in the BRIDGE architecture, in addition to a norm deliberation and internalization

component.

Emotions or other affective elements are not included in EMIL-A. Instead,

(social) norms play a central role. Similar to

deliberative normative agents, EMIL-A considers the social aspects of

norms, and uses a blackboard to communicate norm candidates.

- 5.25

-

There are several implementations of the EMIL-A architecture, including EMIL-S

(EMIL project consortium 2008) and EMIL-I-A (Villatoro 2011; Andrighetto et al. 2010).

EMIL-S was developed within the EMIL project,

whereas EMIL-I-A, which focuses primarily on norm internalization, was developed

afterwards by one of the consortium partners in collaboration

with other researchers.

- 5.26

-

The EMIL-A architecture has been applied to several

scenarios¹⁵, including self-regulated distributed web service provisioning, and

discussions between a group of borrowers and a bank in micro-finance scenarios.

It has also been used for simpler models such as the behaviour of people

waiting in queues (in particular, whether people of

different cultural backgrounds would line up and wait their turn).

NoA

- 5.27

- The Normative Agent (NoA) architecture of Kollingbaum & Norman (2003) is one of the few that

specifically focuses on the incorporation of norms into agent decision

making while also using a broader definition of norms. Over the

last 12 years of research into normative multi-agent systems, the definition of

norm has changed from mere social obligations to

a much more sophisticated idea strongly inspired by disciplines such as sociology, psychology,

economics and law. Kollingbaum (2004) himself speaks of norms as

concepts that describe what is “allowed, forbidden or

permitted to do in a specific social context”. He thus keeps the social aspect

of norms, but extends it to include organisational concepts and ideas from

formal legal systems. That is why to him norms are not only linked to obligations,

such as in BOID, but also “hold explicit mental concepts representing

obligations, privileges, prohibitions, [legal] powers, immunities etc.”

(Kollingbaum 2004, p. 10). In the NoA architecture, norms governing the

behaviour of an agent refer to either actions or states of affairs that are

obligatory, permitted or forbidden.

- 5.28

-

The NoA architecture has an explicit representation

of a “normative state”. A normative state is

a collection of norms (obligations, permissions and prohibitions) that

an agent holds at a point in time. This normative state is consulted when

the agent wants to determine which plans

to select and execute. NoA agents are equipped with an

ability to construct plans to achieve their goals. These plans should fulfil

the requirement that they do not violate any of the

internalized/instantiated norms of the agent, i.e. norms that the agent has decided

to follow¹⁶.

- 5.29

-

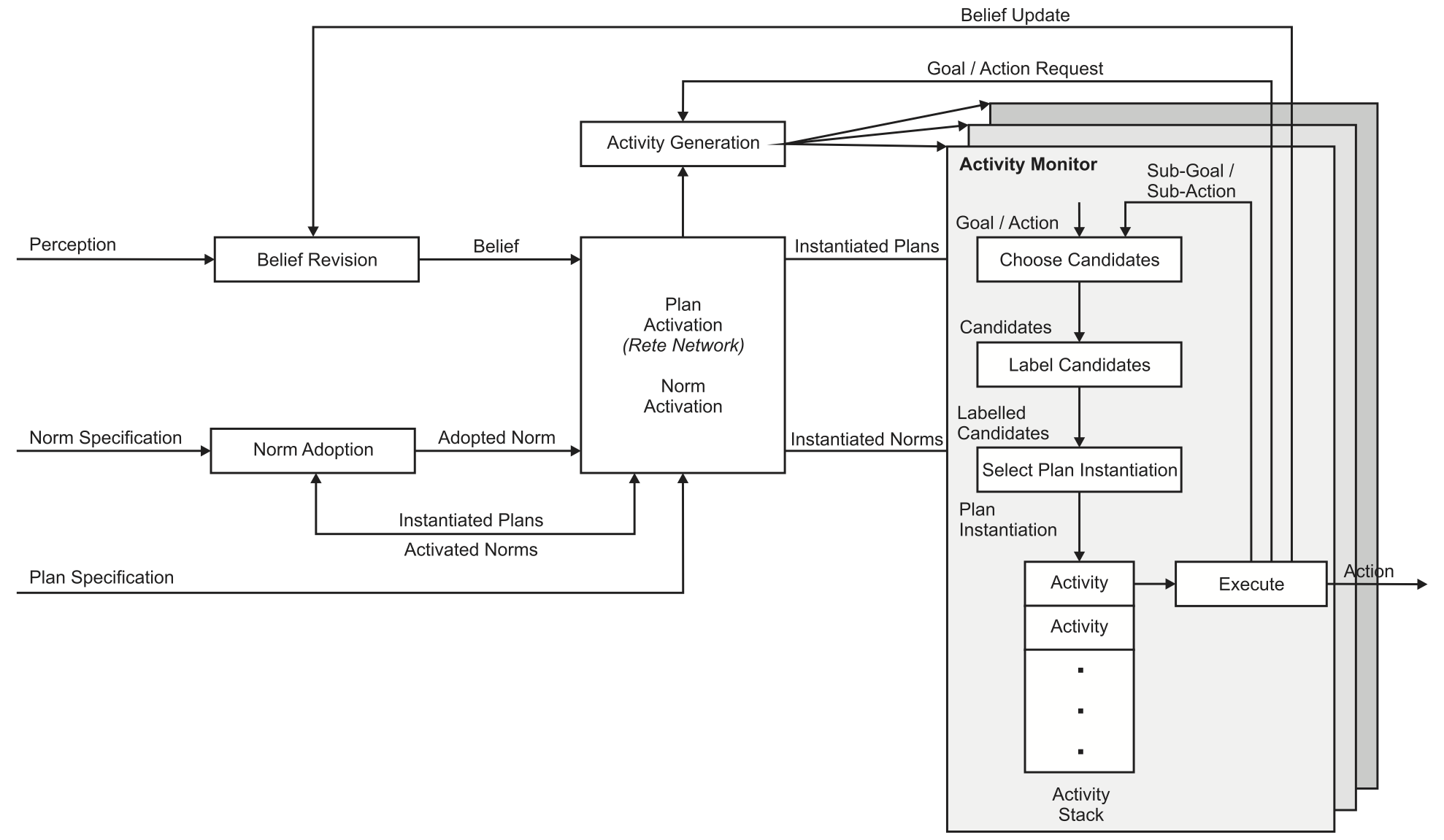

Figure 7 shows the NoA architecture.

The main elements that influence the behaviour of a NoA agent are

(i) a set of beliefs, (ii) a set of pre-specified plans and (iii) a set of norms.

- 5.30

-

In contrast to if-then-rules or BDI plans, a NoA plan

not only specifies when the plan is appropriate for execution (preconditions)

and the action to be taken, but also defines what states of affairs it

will achieve (effects). Norm specifications carry

activation and termination conditions that determine when a norm

becomes active and therefore relevant to an agent and when a

norm ceases to be active (Kollingbaum & Norman 2003).
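
To make the structure of plans and norms concrete, the following Python sketch encodes a plan with preconditions and effects, a prohibition with activation and termination conditions, and a filter that removes plans whose effects would violate an active prohibition. It is not written in the NoA language; the class names, the restriction to prohibitions and the stateless activation test (active while the activation condition holds and the termination condition does not) are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Set

Beliefs = Set[str]


@dataclass(frozen=True)
class Plan:
    name: str
    preconditions: FrozenSet[str]  # when the plan is appropriate for execution
    effects: FrozenSet[str]        # states of affairs the plan will achieve


@dataclass(frozen=True)
class Prohibition:
    forbidden_state: str
    activation: Callable[[Beliefs], bool]   # when the norm becomes relevant
    termination: Callable[[Beliefs], bool]  # when the norm ceases to be active


def active_norms(norms, beliefs):
    """Norms whose activation condition holds and whose termination does not."""
    return [n for n in norms if n.activation(beliefs) and not n.termination(beliefs)]


def permitted_plans(plans, norms, beliefs):
    """Applicable plans whose effects violate no currently active prohibition."""
    forbidden = {n.forbidden_state for n in active_norms(norms, beliefs)}
    return [
        p.name
        for p in plans
        if p.preconditions <= beliefs and not (p.effects & forbidden)
    ]


plans = [
    Plan("stack_on_shelf", frozenset({"holding_box"}), frozenset({"box_on_shelf"})),
    Plan("put_on_floor", frozenset({"holding_box"}), frozenset({"box_on_floor"})),
]
norms = [
    Prohibition(
        "box_on_floor",
        activation=lambda b: "inspection_running" in b,
        termination=lambda b: "inspection_over" in b,
    )
]

during = permitted_plans(plans, norms, {"holding_box", "inspection_running"})
after = permitted_plans(
    plans, norms, {"holding_box", "inspection_running", "inspection_over"}
)
```

Because plans declare their effects, the agent can check a plan against its normative state before executing it, which would not be possible with plain condition-action rules.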

- 5.31

-

A typical reasoning cycle of a NoA agent starts with a

perception that might alter some of its beliefs (which are symbolically

represented). As in conventional production systems, there are two sources

of change to its set of beliefs:

(i) external “percepts” of the environment, and (ii) internal manipulations resulting from the execution of activities (plan steps) by the agent.

- 5.32

-

As with the EMIL-A architecture, NoA not only looks at knowledge gained from

percepts but also considers external norms.

That is why in addition to the normal percepts, the agent can obtain normative

specifications from the environment. The agent's

reasoning cycle then follows two distinct operations:

- the activation of plan and norm declarations and the generation of a set of instantiations of both plans and norms,

- the actual deliberation process including the plan selection and execution. This process is dependent on the activation of plans and norms (Kollingbaum 2004).

- 5.33

-

The NoA agent architecture is based on the NoA

language, which has close similarities with AgentSpeak(L) (Rao 1996).

It was implemented by Kollingbaum for his PhD

dissertation and he has used it for simple examples such as moving boxes.

We could not find any other application of the NoA architecture. Nevertheless,

NoA is far more sophisticated than other normative architectures such as BOID,

especially in terms of representing

normative concepts. This sophistication comes at the cost of increased complexity.

One conceptually interesting facet of the NoA

architecture is that it implements a distinction between an agent being

responsible for achieving a state of affairs and being responsible for

performing an action. This allows for both state- and event-based reasoning

by the agent, which is a novelty for normative systems, where typically only

one of these approaches can be found.

- 5.34

-

In terms of the comparison dimensions, NoA has the same features as EMIL-A.

Its main difference and the reason for its inclusion here is its

extended notion of norms.

Cognitive Models

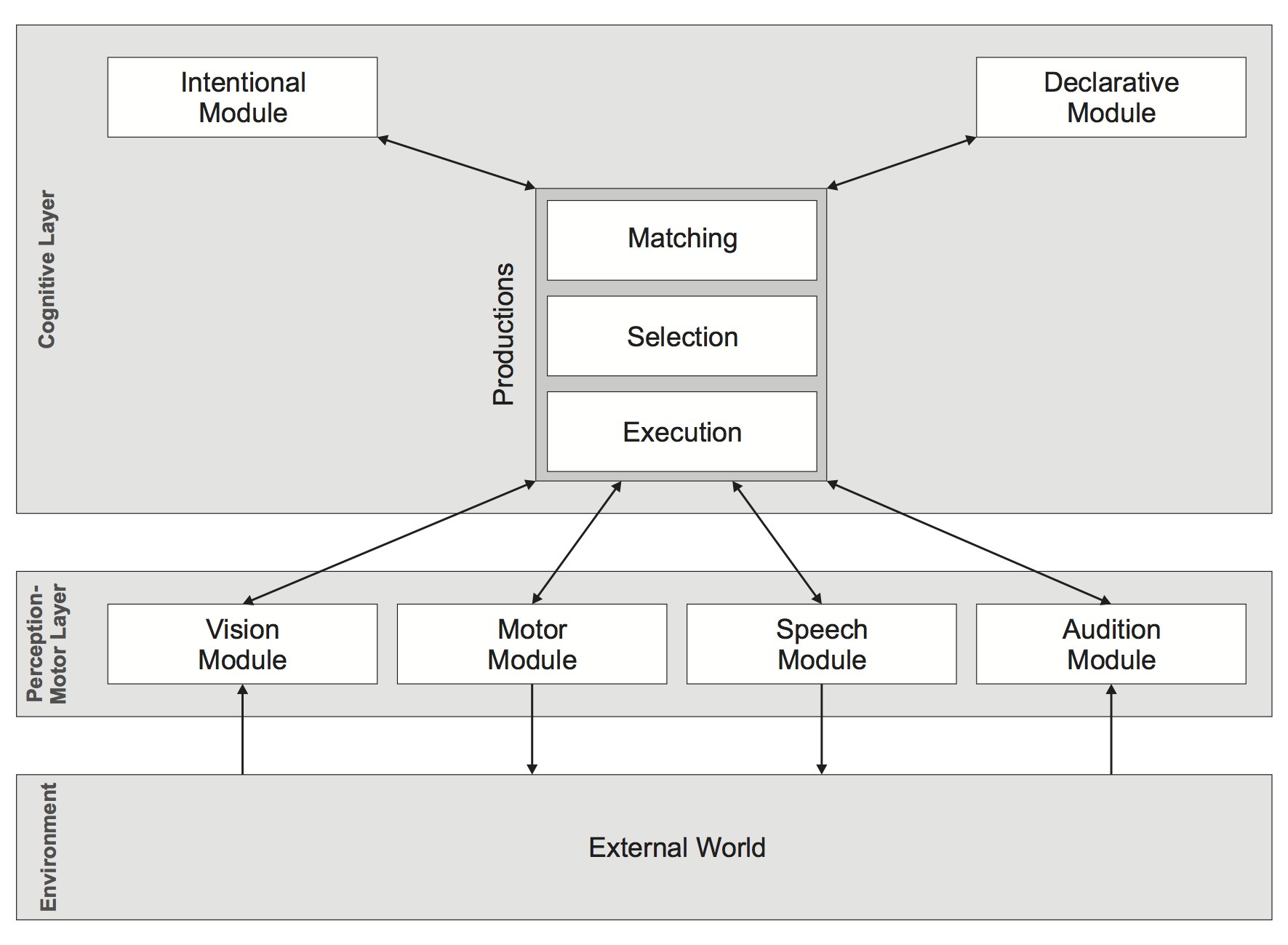

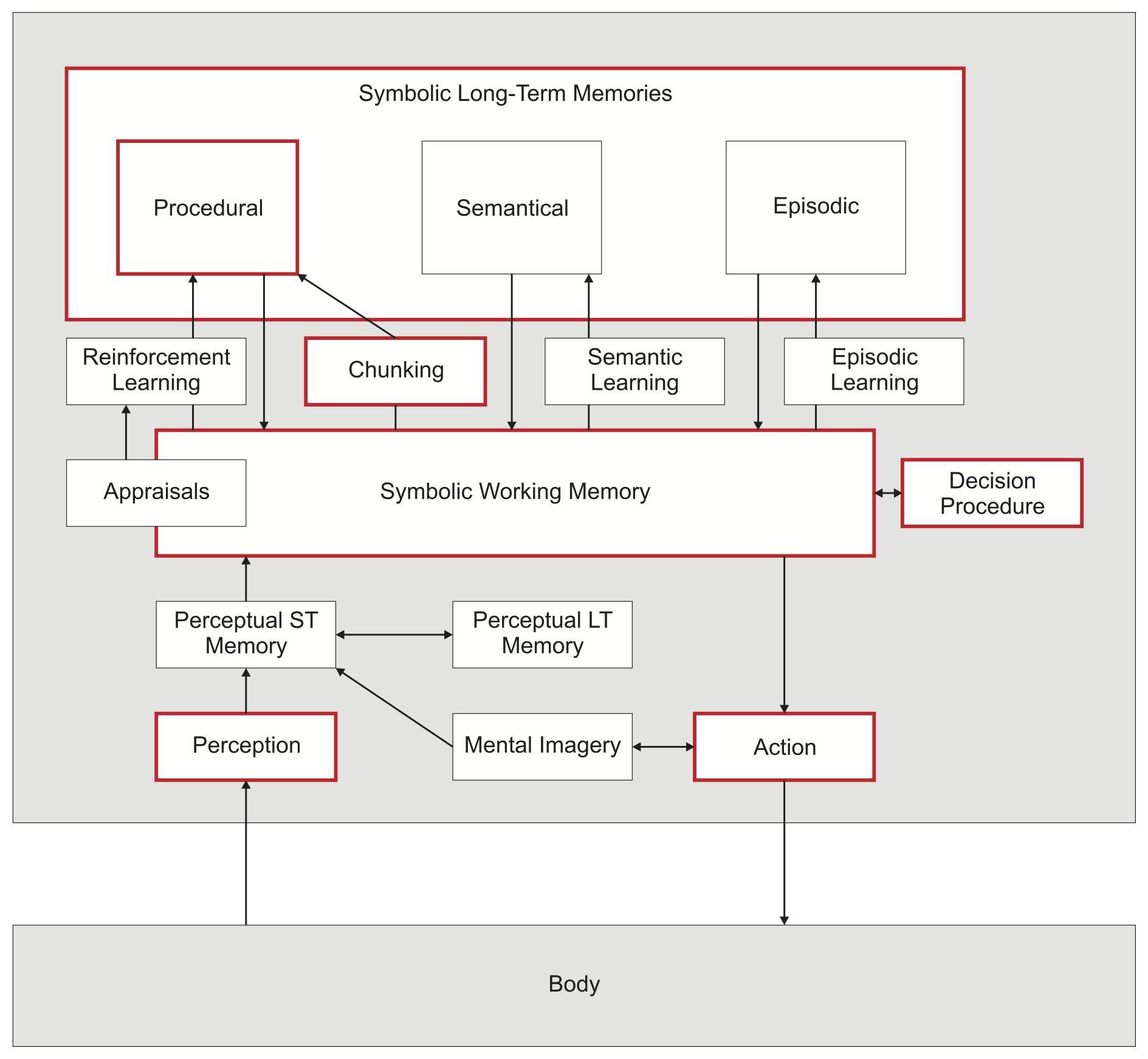

- 6.1

-

Having reviewed production rule systems, BDI-inspired and

normative architectures, in the next two sections we consider

cognitively inspired models. As Sun (2009) remarks, cognitive models and

social simulation models—despite often having the same aim

(i.e. representing the behaviour of decision-making actors)—tend to have

a different view of what is a good model for representing human decision making.

Sun (2009), a cognitive scientist, remarks that

except for a few such as Thagard (1992), social simulation

researchers frequently only focus on agent models custom-tailored to the task at

hand. He calls this situation

unsatisfying and emphasises that it limits realism and

the applicability of social simulation. He argues that to overcome these

short-comings, it is necessary to include cognition as an integral part of an agent

architecture. This and the next section present models that follow this suggestion

and take their inspiration from cognitive research. This section focuses on

“simple” cognitive models that are inspired by cognitive ideas but that still have

strong resemblance to the models presented earlier. Section

7 focuses on the models

that are more strongly influenced by psychology and neurology.

PECS

- 6.2

-

The first architecture with this focus on cognitive ideas and processes that we shall

discuss is PECS (Urban 1997).

- 6.3

-

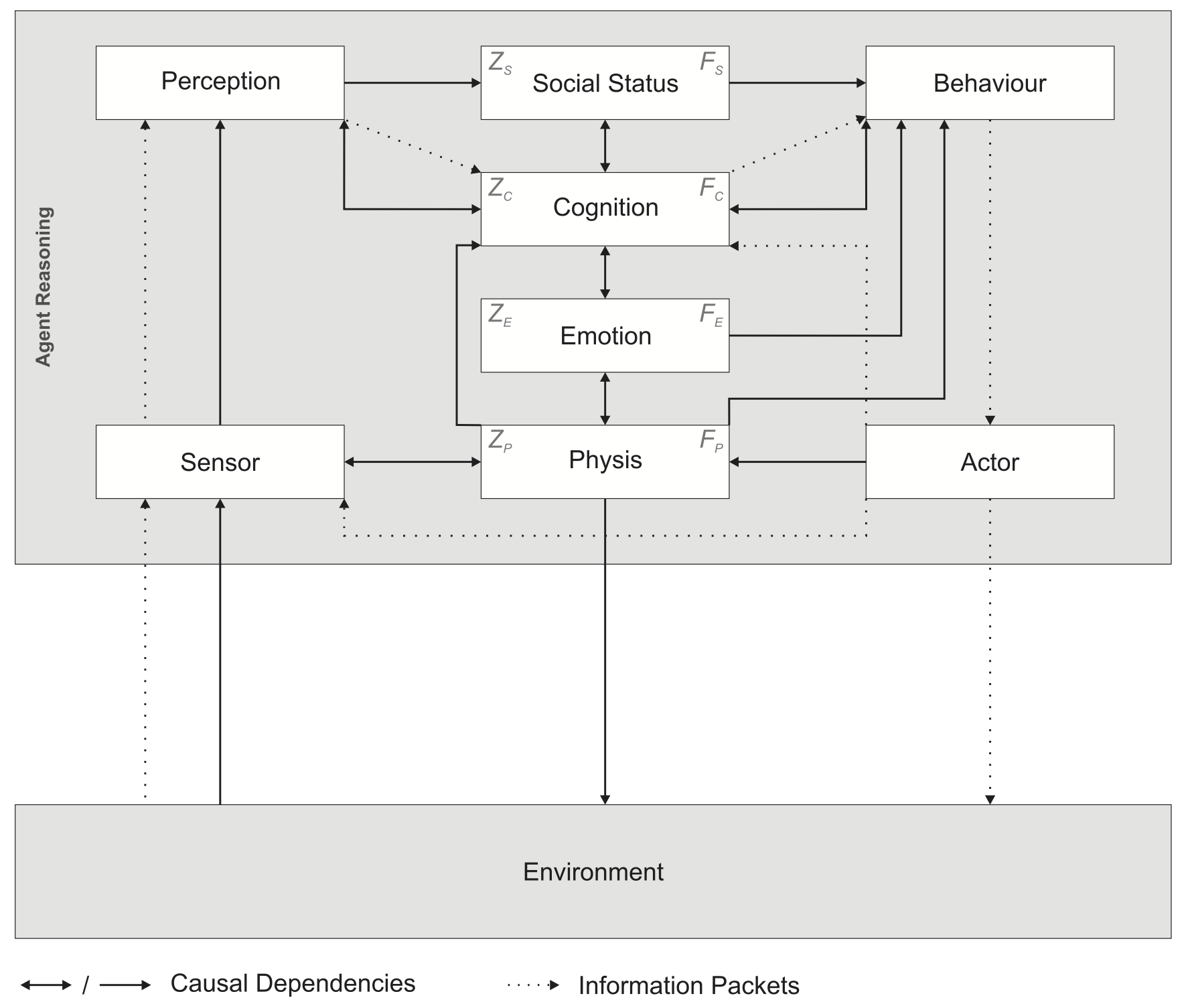

PECS

stands for Physical conditions, Emotional state, Cognitive

capabilities and Social status, which refers to the authors' aim to “enable an integrative modelling of physical,

emotional, cognitive and social influences within a component-oriented

agent architecture” (Urban & Schmidt 2001). They wanted

to design a reference model for modelling human behaviour that could replace the

BDI architecture (Schmidt 2002b). According to them, BDI is only to a “very

limited degree sensible and useful” for modelling humans because of its focus

on rational decision-makers. Instead, they advocate using the Adam model

(Schmidt 2000), on which PECS is based.

- 6.4

-

The architecture of PECS is depicted in Figure 8. It is divided

into three layers:

(i) an input layer (consisting of a sensor and a perception component)

responsible for the processing of input data,

(ii) an internal layer which is structured in several sub-components, each of which

is responsible for modelling a specific required functionality and might

be connected to other components, and

(iii) a behavioural layer (consisting of a behaviour and an actor component)

in which the actions of the agent are determined.

- 6.5

-

In the input and the behavioural layers, the sensor and the actor components act

as interfaces with the environment, whereas the perception and the

behaviour components are responsible for handling data to and from the interface

components and for supporting the decision making processes.

- 6.6

-

The authors model each of the properties that the name

PECS is derived from as a separate component.

Each component is characterized by an internal state (Z),

defined by the current values for the given set of model quantities at

each calculated point in time (Schmidt 2001). The transitions of Z over time

can be specified with the help of

time-continuous as well as time-discrete transition functions (F). Each

component can generate output based on the pre-defined dynamic behaviour of the

component.
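
A time-discrete version of such a component can be sketched in Python as a state dictionary (Z) together with a transition function (F) and an output function. The `Component` class, the energy dynamics and the hunger threshold are hypothetical and only illustrate the pattern; time-continuous transitions are not covered.

```python
class Component:
    """A PECS-style component: an internal state Z plus a transition function F."""

    def __init__(self, state, transition, output):
        self.state = dict(state)      # Z: current values of the model quantities
        self.transition = transition  # F: maps (Z, inputs) to the next Z
        self.output = output          # maps Z to the component's output

    def step(self, inputs):
        """Advance one discrete time step and return the component's output."""
        self.state = self.transition(self.state, inputs)
        return self.output(self.state)


def energy_transition(z, inputs):
    # Hypothetical dynamics: lose one unit per step, gain whatever food is eaten,
    # and keep the value within the range 0..10.
    energy = z["energy"] - 1 + inputs.get("food", 0)
    return {"energy": max(0, min(10, energy))}


physical = Component(
    state={"energy": 5},
    transition=energy_transition,
    output=lambda z: {"hungry": z["energy"] < 3},
)

hungry_flags = [physical.step({})["hungry"] for _ in range(3)]
after_meal = physical.step({"food": 4})
```

The emotional, cognitive and social components would follow the same pattern with their own state variables and transition functions, and could take each other's outputs as inputs.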

- 6.7

-

As with the other architectures described so far, the agent decision-making cycle

starts with the perception of the environment, which is translated to agent

information and processed by the components mentioned above. Information flows and

dependencies between the different components are depicted in

Figure 8 with dotted and solid arrows.

- 6.8

-

Urban (1997) notes that both

simple reactive behaviour that can be described by

condition-state-action rules and

more complex deliberative behaviour, including planning

based on the goals the agent has in mind,

are possibilities for the agent's decision-making. An example of

the latter is presented by Schmidt (2002b), who extends the internal

reasoning in the four components of the internal layer to include reflective and

deliberative information flows.

- 6.9

-

As a reference model, the PECS architecture mainly provides concepts and a partial

methodology for the construction of agents representing humans

and their decision making (as well as concepts for the supporting communication

infrastructure and environment). According to its developers, PECS

should be useful for constructing a wide range of models for agents

whose dynamic behaviour is determined by physical, emotional, cognitive and

social factors and which display behaviour containing reactive and deliberative

elements. Despite this general focus, we were only able to find one paper

presenting an application of the PECS reference model, Ohler & Reger (1999),

in which PECS is used to analyse role-play and group formation among children.

- 6.10

-

PECS covers many of the comparison dimensions, although

because it is a reference model, only conceptually rather than in terms of an

actual implementation. On the cognitive level, reaction-based architectures as

well as deliberative ones and hybrid architectures combining the two are envisioned

by Urban (1997). Following Schmidt (2002b), PECS covers issues on the

affective and the social level, although again few specifics can be found

about actual implementations, which is why it is hard to judge to what level

issues in these dimensions are covered. Norms and learning are the two

dimensions which are not represented in the architectures, or only to a limited degree.

The transition functions in PECS are theoretically

usable for learning, but only within the bounds of pre-defined update functions.

Consumat

- 6.11

-

The Consumat model of Jager & Janssen was initially developed to model the behaviour of

consumers and market dynamics (Janssen & Jager 2001; Jager 2000), but has since been

applied to a number of other research topics (Jager et al. 1999).

- 6.12

-

The Consumat model builds on three main considerations:

(i) that human needs are multi-dimensional,

(ii) that cognitive as well as time resources are required to make

decisions, and

(iii) that decision making is often done under uncertainty.

- 6.13

-

Jager and Janssen argue that humans have various (possibly conflicting)

needs that can diminish with consumption or over time,

which they try to satisfy when making a decision. They point out

that models of agent decision making should take this into account, rather than

trying to condense decision making to a single utility value.

They base their work on the pyramid of needs from Maslow (1954) as well as

the work by Max-Neef (1992), who distinguishes nine different human needs:

subsistence, protection, affection, understanding, participation, leisure,

creation, identity and freedom. Due to the complexity of modelling nine

different needs as well as their interactions in an agent architecture,

Jager & Janssen (2003) condense them to three: personal needs, social needs and a

status need (note 19). These three

needs may conflict (one can, for example, imagine an agent's personal needs

do not conform with its social ones) and as a consequence an agent

has to balance their fulfilment.

- 6.14

-

Jager and Janssen note that the resources available for decision making

are limited and thus constrain the number of alternatives an

agent can reason about at any one point in time. Their argument

is that humans not only try to optimize the outcomes of decision

making, but also the process itself (note 20).

That is why they favour the idea of “heuristics” that simplify complex

decision problems and reduce the cognitive effort involved in a

decision. Jager and Janssen suggest

that the heuristics people employ can be classified along two dimensions:

(i) the amount of cognitive effort involved for the individual agent, and

(ii) the individual or social focus of information gathering

(Jager & Janssen 2003, Figure 1).

- 6.15

-

The higher the uncertainty of an agent, the more likely

it is to use social processing for its decision making. In the Consumat model,

uncertainty is modelled as the difference between expected outcomes and

actual outcomes.
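
As a minimal sketch of this idea, uncertainty can be computed as the gap between what an agent expected and what it actually observed. The function name `uncertainty`, the use of a mean absolute difference and the treatment of an empty history are assumptions made for illustration; the text only states that uncertainty is the difference between expected and actual outcomes.

```python
from typing import Sequence


def uncertainty(expected: Sequence[float], actual: Sequence[float]) -> float:
    """Mean absolute gap between expected and actual outcomes.

    The averaging rule is an assumption of this sketch, not part of the
    Consumat specification.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same length")
    if not expected:
        # No observations yet: treated as no uncertainty (assumption).
        return 0.0
    gaps = [abs(e - a) for e, a in zip(expected, actual)]
    return sum(gaps) / len(gaps)


# An agent expected outcomes of 1.0 and 2.0 but observed 1.0 and 4.0.
print(uncertainty([1.0, 2.0], [1.0, 4.0]))  # 1.0
```

A larger value would make social processing more likely for that agent; how the value is scaled and compared is left open here.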

- 6.16

-

An agent's current level of need satisfaction and its uncertainty level

determine which heuristic it will apply when trying to make a decision.

Jager & Janssen (2003) define two agent attributes:

the aspiration level and the uncertainty tolerance. The aspiration level

indicates at what level of need the agent is satisfied and the

uncertainty tolerance specifies how well an agent can deal with its own

uncertainty before looking at other agents' behaviour. A low aspiration

level implies that an agent is easily satisfied and therefore will not

invest in intense, effortful cognitive processing,

whereas agents with a higher aspiration level are more often dissatisfied, and

hence are also more likely to discover new behavioural opportunities.

Similarly, agents with a low uncertainty tolerance are more likely to look at

other agents to make a decision, whereas agents with a high tolerance rely more

on their own perceptions (Jager & Janssen 2003).

- 6.17

-

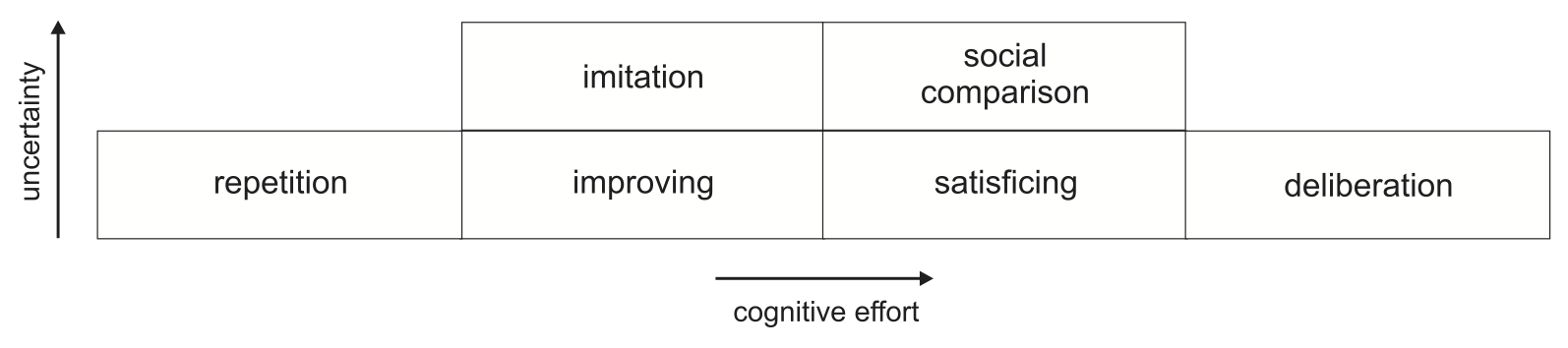

Based on the two dimensions of uncertainty and cognitive effort, the Consumat

approach distinguishes six different heuristics an agent can use to make a

decision (Figure 9).

- 6.18

-

Starting from the right-hand side of Figure 9, agents with a very low current

level of need satisfaction (i.e. agents that are likely to be dissatisfied) are

assumed to put more cognitive effort into their decision making and to

deliberate, i.e. to determine the consequences of all possible decisions

for a fixed time horizon and to act in what they perceive as the

“best” possible way. Moving from the right-hand side of the figure to the

left, the level of need satisfaction increases and the dissatisfaction

decreases, resulting in less need for intense cognitive effort spent on decision

making. Thus, in the case of a medium low (rather than very low) need satisfaction

and a low level of uncertainty, the agents engage in a strategy where they

determine the consequences of decisions one by one and stop

as soon as they find one that satisfies their needs. Jager and

Janssen call this strategy satisficing after a concept first described

by Simon (1957).

With the same level of need satisfaction but a higher uncertainty level, agents

engage in social comparison, i.e. they compare their own performance

with that of agents that have similar abilities. With a higher level of

need satisfaction and low uncertainty, the agent compares options until it

finds one that improves its current situation. In contrast, in the

case of high uncertainty, it will try to imitate the behaviour of agents

with similar abilities. Finally, when there is high need satisfaction and low

uncertainty, the agent will simply repeat what it has been doing so far,

because this seems to be a successful strategy.
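
The selection logic described in this paragraph can be sketched roughly as follows. This is an illustration under stated assumptions rather than the authors' implementation: the numeric cut-offs in `Thresholds`, the 0 to 1 scales, the label `IMPROVING` for the unnamed "compare options until one improves the situation" strategy, and the choice of imitation for agents that are highly satisfied but uncertain are all hypothetical, since the text gives no values and does not cover that last case.

```python
from dataclasses import dataclass
from enum import Enum


class Heuristic(Enum):
    DELIBERATION = "deliberation"
    SATISFICING = "satisficing"
    SOCIAL_COMPARISON = "social comparison"
    IMPROVING = "improving"  # hypothetical label; the strategy is unnamed in the text
    IMITATION = "imitation"
    REPETITION = "repetition"


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical cut-offs on 0..1 scales (not given in the text)."""
    very_low: float = 0.25
    medium_low: float = 0.50
    high: float = 0.75
    uncertainty_tolerance: float = 0.50


def select_heuristic(need_satisfaction: float,
                     uncertainty: float,
                     t: Thresholds = Thresholds()) -> Heuristic:
    """Map need satisfaction and uncertainty to one of six heuristics."""
    uncertain = uncertainty > t.uncertainty_tolerance
    if need_satisfaction < t.very_low:
        # Very dissatisfied agents deliberate over all options.
        return Heuristic.DELIBERATION
    if need_satisfaction < t.medium_low:
        return Heuristic.SOCIAL_COMPARISON if uncertain else Heuristic.SATISFICING
    if need_satisfaction < t.high:
        return Heuristic.IMITATION if uncertain else Heuristic.IMPROVING
    # Highly satisfied agents repeat; the uncertain case is an assumption.
    return Heuristic.IMITATION if uncertain else Heuristic.REPETITION


print(select_heuristic(0.1, 0.9).value)  # deliberation
print(select_heuristic(0.9, 0.1).value)  # repetition
```

Keeping the thresholds in a separate object makes it straightforward to give each agent its own cut-offs, in the spirit of agent-specific aspiration levels and uncertainty tolerances.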

- 6.19

-

The Consumat model also includes a memory component (referred to as a mental map)

that stores information on the abilities, opportunities and characteristics of the

agent. This mental map is updated every time the agent engages

in cognitive effort through social comparison, satisficing or deliberation.

- 6.20

-

Summing up, on the cognitive dimension, the Consumat approach goes beyond the

approaches presented so far by allowing for different heuristics in the agent's

decision making (and by showing actual implementations for them).

Although the model is not capable of simulating elaborate cognitive

processes, logical reasoning or morality in agents, it does represent

a number of key processes that capture human decision making in a

variety of situations (Jager & Janssen 2012). As a result, it has been used to study

the effects of heuristics in comparison to the extensive deliberation

approaches of other architectures. With respect to the other comparison

dimensions, on the affective level values and morality are considered;

however, emotions are not directly mentioned. Jager (2000, p. 97) considers

norms and institutions as input for the behavioural model, in

particular as one of the study foci of Consumat was the impact of different

policies on agent behaviour. Although Jager (2000) mentions norms in his dissertation,

he considers

legal norms (or laws) and policies; social norms are not directly

mentioned. On the social level, Consumat puts a lot of emphasis on

comparison of the agent's own success and that of its peers. As such, Consumat

has some idea of sociality in terms of agents being able to reason

about the success of their own actions in relation to the success resulting

from the actions of others (which they use for learning better behavioural

heuristics).

Nonetheless, Consumat agents are not typically designed to see beyond this success

comparison and, for example, account for the impact of the behaviour of others on

their own actions.

Furthermore, in the original Consumat, it was difficult to compare the effects

of different peer groups.

- 6.21

-

Recently the authors of the Consumat model have presented an updated version which they

refer to as Consumat II (Jager & Janssen 2012). Changes include

(i) accounting for different agent capabilities in estimating the future,

(ii) lessening the distinction between repetition on the one

hand and deliberation on the other,

(iii) accounting for the expertise of agents in the social-oriented heuristics, and

(iv) consideration of several different network structures.

- 6.22

-

At the time of writing, the Consumat II model has not yet been formalized and Jager & Janssen

first want to focus on a few stylized experiments to explore the effects of their

rules. In the long run, their aim is to apply Consumat II to study the parameters

in serious games, which in turn could be used to analyse the effects of policy decisions.

Psychologically and Neurologically Inspired

Models

- 7.1

-

Having described “simple” cognitive decision making models, we now turn

our attention to architectures inspired by psychology and neurology. These are

often referred to as cognitive architectures. However, as

they have a different focus than the “cognitive architectures” we have just

presented, we group them separately. The main difference is that the architectures in this section take into account the presumed structural

properties of the human brain. We chose four to present in this section: MHP, ACT-R/PM, CLARION and

SOAR, because of their

popularity within the agent community. There are many more,

some of which are listed in Appendix A.

MHP

- 7.2

- The Model Human Processor (MHP) (Card et al. 1983) originates from studies of

human-computer interaction (HCI). It is based on a synthesis of

cognitive science

and human-computer interaction

and is described by Byrne (2007) as an influential cornerstone for the

cognitive architectures developed afterwards.

- 7.3

-

MHP was originally developed to support the calculation of how long it

takes to perform certain simple manual tasks. The advantage of the Model Human

Processor is that it includes detailed

specifications of the duration of actions and the cognitive processing of

percepts and breaks down complex human actions into detailed small

steps that can be analysed. This allows system designers to predict the

time it takes a person to complete a task, avoiding the need to perform experiments with human participants

(Card et al. 1986).

- 7.4

-

Card et al. (1983) sketch a framework based on

a system of several interacting memories and processors. The main three processors are

(i) a perceptual processor,

(ii) a cognitive processor and

(iii) a motor processor.
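
To illustrate how such a framework supports time predictions, the sketch below adds up processor cycle times for a task whose steps run one after another. The cycle times used (100 ms perceptual, 70 ms cognitive, 70 ms motor) are the nominal values commonly quoted from Card et al. (1983) and are included here as an assumption; the names `Processor` and `serial_task_time` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Processor:
    name: str
    cycle_ms: float  # nominal duration of one processing cycle


# Nominal cycle times, assumed for this sketch.
PERCEPTUAL = Processor("perceptual", 100.0)
COGNITIVE = Processor("cognitive", 70.0)
MOTOR = Processor("motor", 70.0)


def serial_task_time(steps: List[Tuple[Processor, int]]) -> float:
    """Total time in ms when each step waits for the previous one.

    Each step is a (processor, number_of_cycles) pair.
    """
    return sum(proc.cycle_ms * cycles for proc, cycles in steps)


# Simple reaction: perceive a signal, decide to respond, press a key.
simple_reaction = [(PERCEPTUAL, 1), (COGNITIVE, 1), (MOTOR, 1)]
print(serial_task_time(simple_reaction))  # 240.0
```

More complex tasks would be decomposed into longer step lists, and a parallel variant would need to track overlapping processor activity rather than a plain sum.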

- 7.5

-

Although not explicitly modelled as processors in other architectures, these

three components are common to all psychology/neurology-inspired models.

In most of the examples in Card et al. (1983), the processors are envisioned to

work serially. For example, in order

to respond to a light signal, the light must first be detected by the

perceptual component. This perception can then be processed

in the cognitive module and, once this processing has taken place, the

appropriate motor command can be executed. Although this serial processing is

followed for simple tasks, for more complex tasks Card et al. (1983) suggest the three

processors could work in parallel. For this purpose they lay out some general