Abstract

- Agent-based models are increasingly used to address questions regarding real-world phenomena and mechanisms; therefore, the calibration of model parameters to certain data sets and patterns is often needed. Furthermore, sensitivity analysis is an important part of the development and analysis of any simulation model. By exploring the sensitivity of model output to changes in parameters, we learn about the relative importance of the various mechanisms represented in the model and how robust the model output is to parameter uncertainty. These insights foster the understanding of models and their use for theory development and applications. Both steps of the model development cycle require massive repetitions of simulation runs with varying parameter values. To facilitate parameter estimation and sensitivity analysis for agent-based modellers, we show how to use a suite of important established methods. Because NetLogo and R are widely used in agent-based modelling and for statistical analyses, we use a simple model implemented in NetLogo as an example, packages in R that implement the respective methods, and the RNetLogo package, which links R and NetLogo. We briefly introduce each method and provide references for further reading. We then list the packages in R that may be used for implementing the methods, provide short code examples demonstrating how the methods can be applied in R, and present and discuss the corresponding outputs. The Supplementary Material includes full, adaptable code samples for using the presented methods with R and NetLogo. Our overall aim is to make agent-based modellers aware of existing methods and tools for parameter estimation and sensitivity analysis and to provide accessible tools for using these methods. In this way, we hope to contribute to establishing an advanced culture of relating agent-based models to data and patterns observed in real systems and to foster rigorous and structured analyses of agent-based models.

- Keywords:

- Parameter Fitting, Sensitivity Analysis, Model Calibration, Agent-Based Model, Inverse Modeling, NetLogo

Introduction

- 1.1

- In agent-based models (ABMs), individual agents, which can be humans, institutions, or organisms, and their behaviours are represented explicitly. ABMs are used when one or more of the following individual-level aspects are considered important for explaining system-level behaviour: heterogeneity among individuals, local interactions, and adaptive behaviour based on decision making (Grimm 2008). The use of ABMs is thus required for many, if not most, questions regarding social, ecological, or any other systems comprised of autonomous agents. ABMs have therefore become an established tool in social, ecological and environmental sciences (Gilbert 2007; Thiele et al. 2011; Railsback & Grimm 2012).

- 1.2

- This establishment appears to have occurred in at least two phases. First, most ABMs in a certain field of research are designed and analysed more or less ad hoc, reflecting the fact that experience using this tool must accumulate over time. The focus in this phase is usually more on how to build representations than on in-depth analyses of how the model systems actually work. Typically, model evaluations are qualitative, and fitting to data is not a major issue. Most models developed in this phase are designed to demonstrate general mechanisms or provide generic insights. The price for this generality is that the models usually do not deliver testable predictions, and it remains unclear how well they really explain observed phenomena.

- 1.3

- The second phase in agent-based modelling appears to begin once a critical mass of models for certain classes of questions and systems has been developed, so that attention shifts from representation and demonstration to obtaining actual insights into how real systems are working. An indicator of this phase is the increased use of quantitative analyses that focus on both a better mechanistic understanding of the model and on relating the model to real-world phenomena and mechanisms. Important approaches during this stage are sensitivity analysis and calibration (parameter fitting) to certain data sets and patterns.

- 1.4

- The use of these approaches is, however, still rather low in agent-based modelling. A brief survey of papers published in the Journal of Artificial Societies and Social Simulation and in Ecological Modelling in the years 2009–2010 showed that the percentages of simulation studies including parameter fitting were 14 and 37%, respectively, while only 12 and 24% of the published studies included some type of systematic sensitivity analysis (for details of this survey, see Supplement SM1). There are certainly many reasons why quantitative approaches for model analysis and calibration are not used more often and why the usage of these approaches appears to differ between social simulation and ecological modelling, including the availability of data and generally accepted theories of certain processes, a focus on theory or application, and the complexity of the agents' decision making (e.g., whether they are humans or plants).

- 1.5

- There is, however, a further important impediment to using more quantitative methods for analysing models and relating them more closely to observed patterns (Grimm, Revilla, Berger, et al. 2005; Railsback & Grimm 2012) and real systems: most modellers in ecology and social sciences are amateurs with regard to computer science and the concepts and techniques of experimental design (Lorscheid et al. 2012). They often lack training in methods for calibration and sensitivity analysis and for implementing and actually using these methods. Certainly, comprehensive monographs on these methods exist (e.g., Saltelli et al. 2004; Kleijnen 2008), but they tend to be dense and therefore not easily accessible for many modellers in social sciences and ecology. Moreover, even if one learns how a certain method works in principle, it often remains unclear how it should actually be implemented and used.

- 1.6

- In this article, we therefore introduce software and provide scripts that facilitate the use of a wide range of methods for calibration, the design of simulation experiments, and sensitivity analysis. We do not intend to give in-depth introductions to these methods but rather provide an overview of the most important approaches and demonstrate how they can easily be applied using existing packages for the statistical software program R (R Core Team 2013a) in conjunction with RNetLogo (Thiele et al. 2012), an R package that allows a NetLogo (Wilensky 1999) model to be run from R, sends data to that model, and exports the model output to R for visualisation and statistical analyses.

- 1.7

- R is a free, open-source software platform that has become established as a standard tool for statistical analyses in biology and other disciplines. An indicator of this is the rapidly increasing number of textbooks on R or on certain elements of R; currently, there are more than 30 textbooks on R (R Core Team 2013b) available, e.g., Crawley (2005), Dalgaard (2008), and Zuur et al. (2009). R is an open platform, i.e., users contribute packages that perform certain tasks. RNetLogo is one of these packages.

- 1.8

- NetLogo (Wilensky 1999) is a free software platform for agent-based modelling that was originally designed for teaching but is increasingly used for science (Railsback & Grimm 2012; Wilensky & Rand, in press). Learning and using NetLogo requires little effort due to its easy and stable installation, the excellent documentation and tutorials, a simple but powerful programming language, and continuous support by its developers and users via an active user forum on the internet. Moreover, NetLogo's source code was made available to the public in 2011, which might lead to further developments and improvements, in particular regarding computational power, which can sometimes be limiting for large, complex models.

- 1.9

- NetLogo comes with "BehaviorSpace" (Wilensky & Shargel 2002), a convenient tool for running simulation experiments, i.e., automatically varying parameters, running simulations, and writing model outputs to files. However, for more complex calibrations, simulation experiments, or sensitivity analyses, it would be more efficient to have a seamless integration of NetLogo into software where modules or packages for these complex methods exist and can easily be used and adapted. Such a seamless link has been developed for Mathematica (Bakshy & Wilensky 2007) and, more recently, also for R (RNetLogo, Thiele et al. 2012). RNetLogo merges the power of R with the power and ease of use of NetLogo.

- 1.10

- The software tool "BehaviorSearch" calibrates ABMs implemented in NetLogo (Stonedahl & Wilensky 2013); it implements some of the calibration methods that we describe below and appears to be powerful and robust (for an example use, see Radchuk et al. 2013). Still, for many purposes, the professional agent-based modeller might need to take advantage of the wider range of calibration methods available via R packages and to control the operation of these methods in more detail. We recommend using the "cookbook" presented here in combination with BehaviorSearch. For models implemented in languages other than NetLogo, the scripts in our cookbook can be adapted because they are based on R, whereas BehaviorSearch cannot be used.

- 1.11

- In the following, we will first explain how R, NetLogo, and RNetLogo are installed and how these programs can be learned. We introduce a simple example model, taken from population ecology, which will be used for all further demonstrations. We then present a wide range of techniques of model calibration, starting with a short general introduction and closing with an intermediate discussion. Afterwards, we do the same for sensitivity analysis techniques. Our main purpose is to provide an overview, or "cookbook", of methods so that beginners in parameter estimation and sensitivity analysis can see which approaches are available, what they can be used for, and how they are used in principle using R scripts. These scripts can also be adapted if platforms other than NetLogo are used for implementing the ABM, but then the users must replace the "simulation function" in the R scripts, where the data exchange between R and NetLogo occurs, with an appropriate alternative. All source codes, i.e., the NetLogo model implementation and the R/RNetLogo scripts, are available in the Supplementary Material.

- 1.12

- We will not discuss the backgrounds of the methods in detail, as there is already a large body of literature on calibration and sensitivity analysis methods (e.g., Saltelli et al. 2000, 2004, 2008; Helton et al. 2006; Kleijnen 1995, 2008; Cournède et al. 2013; Gan et al. 2014). We will therefore refer to existing introductions to the respective methods. We also did not fine-tune the methods to our example model and did not perform all of the checks required for thorough interpretation of results, e.g., convergence checks. Therefore, the methods presented have the potential to produce more detailed and robust results, but for simplicity, we used default settings whenever possible. The purpose of this article is not to present the optimal application of the methods for the example model but to provide a good starting point to apply the methods to readers' own models. As with a real cookbook, readers should benefit from reading the general sections but might decide to browse through the list of approaches demonstrated and selectively zoom into reading specific "recipes".

- 1.13

- Readers not familiar with R will not understand the R scripts in detail but can still see how easily R packages can be employed to perform even complex tasks. Readers not familiar with statistical methods, e.g., linear regression, will not understand the interpretation of some of the results presented, but they should still grasp the general idea. Again, as with a real cookbook, you will not be able to follow the recipe if you never learned the basics of cooking. However, hopefully this article will convince some readers that learning these basics might pay off handsomely.

Software requirements

- 1.14

- The model used and described in the next section is implemented in NetLogo (Wilensky 1999). NetLogo can be downloaded from http://ccl.northwestern.edu/netlogo/. Parameter fitting and sensitivity analysis are performed in R (R Core Team 2013a). R can be downloaded from http://cran.r-project.org/. Because RNetLogo is available on CRAN, installation from within an R shell/RGUI can be performed by typing install.packages("RNetLogo"). For further details see the RNetLogo manual, available at http://cran.r-project.org/web/packages/RNetLogo/index.html. When RNetLogo is installed, loading the example model works in this way (path and version names might need to be adapted):

# 1. Load the package.

library(RNetLogo)

# 2. Initialize NetLogo.

nl.path <- "C:/Program Files/Netlogo 5.0.4"

NLStart(nl.path, nl.version=5, gui=FALSE, obj.name="my.nl1")

# 3. Load the NetLogo model.

model.path <- "C:/models/woodhoopoe.nlogo"

NLLoadModel(model.path, nl.obj="my.nl1")

- 1.15

- This code was used in all application examples. The source codes of all examples as well as the R workspaces with simulation results are in the Supplementary Material. In many of the examples presented in this article, additional R packages are used. In most cases the installation is equivalent to the installation of RNetLogo. References are provided where these packages are used.

The example model

- 1.16

- The model description following the ODD protocol (Grimm et al. 2010) is adopted from Railsback & Grimm (2012). Because the submodels are simple, their description is included in the section Process overview and scheduling. The source code of the NetLogo model is included in the Supplementary Material.

- 1.17

- The model represents, in a simplified way, the population and social group dynamics of a group-living, territorial bird species with reproductive suppression, i.e., the alpha couple in each group suppresses the reproduction of subordinate mature birds. A key behaviour in this system is the subordinate birds' decision as to when to leave their territory for so-called scouting forays, on which they might discover a free alpha, or breeding, position somewhere else. If they do not find such a position, they return to their home territory. Scouting forays come with an increased mortality risk due to raptors. The model provides a laboratory for developing a theory for the scouting foray decision, i.e., alternative submodels of the decision to foray can be implemented and the corresponding output of the full model compared to patterns observed in reality. Railsback & Grimm (2012) use patterns generated by a specific model version, and the educational task they propose is to identify the submodel they were using. In this article, we use the simplest model version, where the probability of subordinates undertaking a scouting foray is constant.

- 1.18

- Purpose. – The purpose of the model is to explore the consequences of the subordinate birds' decisions to make scouting forays on the structure and dynamics of the social group and the entire population.

- 1.19

- Entities, state variables, and scales. – The entities of the model are birds and territories. A territory represents both a social group of birds and the space occupied by that group. Territories not occupied by a group are empty. Territories are arranged in a one-dimensional row of 25 NetLogo patches with the boundary territories "wrapped" so that the model world is a ring. The state variables of a territory are the coordinate of its location and a list of the birds occupying it. The state variables of birds are their sex, age (in months), and whether they are alpha. The time step of the model is one month. Simulations run for 22 years, and the results from the initial two years are ignored.

- 1.20

- Process overview and scheduling. – The following list of processes is executed in the given order once per time step. The order in which the birds and territories execute a process is always randomised, and state variables are updated immediately after each operation.

- Date and ages of birds are updated.

- Territories try to fill vacant alpha positions. If a territory lacks an alpha but has a subordinate adult (age > 12 months) of the right sex, the oldest subordinate becomes the new alpha.

- Birds undertake scouting forays. Subordinate adults decide whether to scout for a new territory with a vacant alpha position. If no other non-alpha is in the current territory, a subordinate adult definitely stays. If there are older non-alphas on the current home territory, a subordinate adult scouts with probability scout-prob. If the bird scouts, it randomly moves either left or right along the row of territories. Scouting birds can explore up to scouting-distance territories in their chosen direction. Of those territories, the bird occupies the one that is closest to its starting territory and has no alpha of its sex. If no such territory exists, the bird goes back to its starting territory. All birds that scout (including those that find and occupy a new territory) are then subjected to a predation mortality probability of 1.0 - scouting-survival.

- Alpha females reproduce. In the last month of every year, alpha females that have an alpha male in their territory produce two offspring. The offspring have their age set to zero months and their sex chosen randomly with equal probability.

- Birds experience mortality. All birds are subject to stochastic background mortality with a monthly survival probability of survival-prob.

- Output is produced.
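The scouting rule in the schedule above can be sketched in R as follows. This is only an illustration under stated assumptions, not the NetLogo implementation from the Supplementary Material: the helper names `scouted.territories` and `scout.target` are hypothetical, territories are indexed 1 to 25 (NetLogo patches are zero-based), and the subsequent predation mortality step is omitted.

```r
n.territories <- 25

# Hypothetical helper: territories visited when scouting from `start`
# in `direction` (-1 = left, +1 = right) on a ring of n territories,
# ordered from nearest to farthest.
scouted.territories <- function(start, direction, distance, n = n.territories) {
  ((start - 1 + direction * seq_len(distance)) %% n) + 1
}

# Hypothetical helper: index of the nearest scouted territory that has no
# alpha of the bird's sex, or `start` if no such territory exists.
# `alpha.vacant` is a logical vector with one entry per territory.
scout.target <- function(start, direction, distance, alpha.vacant) {
  candidates <- scouted.territories(start, direction, distance)
  free <- candidates[alpha.vacant[candidates]]
  if (length(free) > 0) free[1] else start
}

# Made-up state: alpha positions are vacant in territories 2 and 22 only.
alpha.vacant <- rep(FALSE, n.territories)
alpha.vacant[c(2, 22)] <- TRUE

# A bird in territory 24 scouting to the right wraps around the ring.
target.right <- scout.target(start = 24, direction = +1, distance = 5, alpha.vacant)
target.left  <- scout.target(start = 24, direction = -1, distance = 5, alpha.vacant)
```

The modulo arithmetic implements the "wrapped" boundary described under Entities, state variables, and scales, so a bird leaving territory 25 to the right arrives at territory 1.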

- 1.21

- Design concepts.

- Emergence. The results we are interested in are the three patterns the model is supposed to reproduce (see Observation); they all emerge from the decision making for scouting but also may strongly depend on other model parameters, such as reproduction and mortality rates.

- Adaptation. There is only one adaptive decision: to undertake a scouting foray or not.

- Objectives. The subordinate birds' objective is to become an alpha so they can reproduce. If the individual stays at its home territory, all the older birds of its sex must die before the individual is able to become an alpha. If the individual scouts, to succeed it must find a vacant alpha position and it must survive predation during scouting.

- Sensing. We assume that birds know nothing about other territories and can only sense whether an alpha position is open in another territory by actually going there. Birds know both the status and age of the other birds in their group.

- Collectives. The social groups are collectives. Because the model's "territory" entities represent the social groups as well as their space, the model treats the behaviours of the social groups (promoting alphas) as behaviours of the territories.

- Observation. In addition to visual displays to observe individual behaviour, three characteristic patterns are observed at different hierarchical levels of the model: the long-term mean number of birds (mean or abundance criterion), the standard deviation from year to year in the annual number of birds (variation criterion) and the average percentage of territories that lack one or both alpha animals (vacancy criterion). The observational data are collected in November of each year, i.e., the month before reproduction occurs.

- 1.22

- Initialisation. – Simulations begin in January (month 1). Every territory begins with two male and two female birds, with ages chosen randomly from a uniform distribution of 1 to 24 months. The oldest adult of each sex becomes alpha.

- 1.23

- Input data. – The model does not include any external input.

- 1.24

- Submodels. – Because all submodels are very simple, their full descriptions are already included in the process overview above.

- 1.25

- The model includes five parameters, which are listed in Table 1.

Table 1: Model parameters.

| Parameter | Description | Base value used by Railsback & Grimm (2012) |
|---|---|---|
| fecundity | Number of offspring per reproducing female | 2 |
| scouting-distance | Distance over which birds scout | 5 |
| scouting-survival | Probability of surviving a scouting trip | 0.8 |
| survival-prob | Probability of a bird to survive one month | 0.99 |
| scout-prob | Probability to undertake a scouting trip | 0.5 |

Parameter estimation and calibration

- 2.1

- Typically, ABMs include multiple submodels with several parameters. Parameterisation, i.e., finding appropriate values of at least some of these parameters, is often difficult due to the uncertainty in, or complete lack of, observational data. In such cases, parameter fitting or calibration methods can be used to find reasonable parameter values by combining sampling or optimisation methods with so-called inverse modelling, also referred to as pattern-oriented parameterisation/modelling (POM; Grimm & Railsback 2005), or Monte Carlo Filtering, as the patterns are applied as filters to separate good from bad sets of parameter values (Grimm & Railsback 2005). The basic idea is to find parameter values that make the model reproduce patterns observed in reality sufficiently well.

- 2.2

- Usually, at least a range of possible values for a parameter is known. It can be obtained from biological constraints (e.g., an adult human will usually be between 1.5 and 2.2 metres tall), by checking the variation in repeated measurements or different data in the literature, etc. During model development, parameter values are often chosen via simple trial and error "by hand" because precision is not necessary at this stage. However, once the design of the model is fixed and more quantitative analyses are planned, the model must be run systematically with varying parameter values within this range and the outcome of the model runs compared to observational data.

- 2.3

- If the parameters are all independent, i.e., the calibrations of different parameters do not affect each other, it is possible to perform model calibration separately for all unknown parameters. Usually, though, parameters interact because the different processes that the parameters represent are not independent but interactive. Thus, rather than single parameters, entire sets of parameters must be calibrated simultaneously. The number of possible combinations can become extremely large and may therefore not be processable within adequate time horizons.

- 2.4

- Therefore, more sophisticated ways of finding the right parameter values are needed. This can also be the case for independent parameters or if only one parameter value is unknown, if the model contains stochastic effects and therefore needs to be run multiple times (Monte Carlo simulations) for each parameter combination (Martínez et al. 2011) or if the model runs very slowly. In all of these cases, efficient sampling or optimisation methods must be applied. Here, "sampling" refers to defining parameter sets so that the entire parameter space, i.e., all possible parameter combinations, is explored in a systematic way.

- 2.5

- To assess whether a certain combination of parameter values leads to acceptable model output, it is necessary to define one, or better yet multiple, fitting criteria, i.e., metrics that allow one to quantify how well the model output matches the data. Such criteria should be taken from various hierarchical levels and possibly different spatial or temporal scales, e.g., from single individuals over social groups, if possible, to the whole population.

- 2.6

- Two different strategies for fitting model parameters to observational data exist: best-fit and categorical calibration. The aim of calibration for the first strategy is to find the parameter combination that best fits the observational data. The quality measure is one exact value obtained from the observational data, so it is easy to determine which parameter set leads to the lowest difference. Of course, multiple fitting criteria can be defined, but they must be aggregated to one single measure, for example, by calculating the sum of the mean square deviation between the model and the observational data for all fitting criteria. An example for the application of such a measure can be found in Wiegand et al. (1998). The problem with best-fit calibration is that even the best parameter set may not be able to reproduce all observed patterns sufficiently well. Furthermore, aggregating different fitting criteria to one measure makes it necessary to think about their relation to each other, i.e., are they all equally important or do they need to be weighted?

- 2.7

- These questions are not that important for the second strategy, categorical calibration. Here, a single value is not derived from the observational data, but rather, a range of plausible values is defined for each calibration criterion. This is particularly useful when the observational data are highly variable or uncertain by themselves. In this case, the number of criteria met is counted for a parameter set, i.e., the cases when the model result matches the defined value range. Then, the parameter set that matches all or most criteria is selected. Still, it is possible that either no parameter combination will match the defined value range or that multiple parameter sets will reproduce the same target patterns. In such a case, the fitting criteria (both their values and importance) and/or the model itself need to be adjusted. For further details on practical solutions to such conceptual problems, see, for example, Railsback & Grimm (2012).

Preliminaries: Fitting criteria for the example model

- 2.8

- We assume, following Railsback & Grimm (2012), that the two parameters survival-prob and scout-prob have been identified as important parameters with uncertain values. We assume that reasonable value ranges for these two parameters are as follows:

- scout-prob: 0.0 to 0.5

- survival-prob: 0.95 to 1.0

- 2.9

- Railsback & Grimm (2012) define categorical calibration criteria. The acceptable value ranges derived from observational data they used are as follows:

- mean abundance (abundance criterion): 115 to 135 animals

- annual std. dev. (variation criterion): 10 to 15 animals

- mean territories lacking one or both alpha animals (vacancy criterion): 15 to 30%
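As a minimal sketch of how these categorical criteria might be evaluated in R, the following counts how many of the three acceptable ranges a simulation result falls into. The helper `criteria.met`, the list `criteria.ranges`, and the example result are hypothetical and serve only as an illustration; treating the range bounds as inclusive is also an assumption.

```r
# Acceptable ranges as listed above (Railsback & Grimm 2012).
criteria.ranges <- list(
  abundance = c(115, 135),  # mean number of birds
  variation = c(10, 15),    # std. dev. of annual bird numbers
  vacancy   = c(15, 30)     # % territories lacking one or both alphas
)

# Hypothetical helper: returns a named logical vector with one entry per
# criterion (TRUE if the result lies within the acceptable range).
criteria.met <- function(result, ranges = criteria.ranges) {
  sapply(names(ranges), function(n) {
    result[[n]] >= ranges[[n]][1] && result[[n]] <= ranges[[n]][2]
  })
}

# Made-up simulation output, for illustration only.
example.result <- c(abundance = 120, variation = 17, vacancy = 22)
met <- criteria.met(example.result)
n.met <- sum(met)  # number of criteria met by this parameter set
```

A parameter set would be retained under categorical calibration when `n.met` equals the number of criteria, or when it meets most of them if no set meets all.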

- 2.10

- Some of the calibration methods used below require a single fitting criterion, the best-fit criterion. To keep the patterns as they are, we use a hybrid solution by defining conditional equations to transform the categorical criteria to a best-fit criterion. The following is just a simple example of how such an aggregation can be performed. For more powerful approaches, see, for example, Soetaert & Herman (2009) for a function including a measure of accuracy of observational data. However, such transformations always involve a loss of more or less important information that can be non-trivial. Furthermore, differences in the results of different methods, for example, using categorical criteria versus using best-fit criteria, can have their origin in the transformation between these criteria.

- 2.11

- To calculate the three above-defined categorical criteria as quantitative measures, we use conditional equations based on squared relative deviations to the mean value of the acceptable value range and sum them over the different criteria as follows:

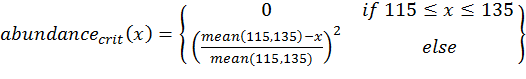

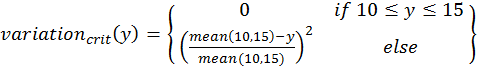

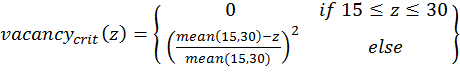

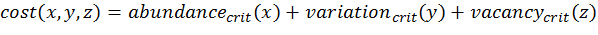

(1)

(2)

(3)

(4) with x,y,z being the corresponding simulation result, e.g., x is the mean abundance of the simulation as mentioned above. If the simulation result is within the acceptable range, the cost value of the criteria becomes 0; otherwise, it is the squared relative deviation. By squaring the deviations, we keep the values positive and weigh large deviations disproportionately higher than low deviations (Eqs. 1–3). This has an important effect on Eq. 4. For example, if we find small deviations in all three criteria, the overall cost value (Eq. 4) still stays low, but when only one criterion's deviation is rather high, its criterion value and therefore also the overall cost value becomes disproportionately high. We use this approach here because we think that small deviations in all criteria are less important than a single large deviation.
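The cost function described in words above could be written in R roughly as follows. This sketch is reconstructed from the verbal description only (squared relative deviation from the mean of the acceptable range, zero inside the range, summed over the three criteria); the equations themselves remain authoritative. The function names `criterion` and `cost` and the inclusive range bounds are assumptions.

```r
# Squared relative deviation from the midpoint of the acceptable range;
# zero if the value lies within the range (bounds assumed inclusive).
criterion <- function(value, lower, upper) {
  if (value >= lower && value <= upper) return(0)
  mid <- mean(c(lower, upper))
  ((mid - value) / mid)^2
}

# Overall cost: sum of the three criterion values, where
# x = mean abundance, y = annual std. dev., z = vacancy in percent.
cost <- function(x, y, z) {
  criterion(x, 115, 135) + criterion(y, 10, 15) + criterion(z, 15, 30)
}

cost.inside  <- cost(120, 12, 20)  # all three results within range
cost.outside <- cost(120, 17, 22)  # only the variation criterion is missed
```

In the second call only y = 17 lies outside its range, so the cost equals ((12.5 - 17) / 12.5)^2 = 0.1296.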

- 2.12

- Alternatives to this are multi-criterion approaches where each criterion is considered separately and a combined assessment is performed by determining Pareto optima or fronts (Miettinen 1999; Weck 2004). See, for example, the package mco (Trautmann et al. 2013) for Pareto optimisation with a genetic algorithm. Multi-criterion approaches, however, have their own challenges and limitations and are much less straightforward to use than the cost function approach that we used here.
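To illustrate what determining a Pareto front means, the following base R sketch filters the non-dominated rows from a small table of criterion values. It is a plain brute-force filter for illustration, not the genetic algorithm provided by the mco package; the function name `is.nondominated` and the cost values are made up.

```r
# Rows = candidate parameter sets, columns = criterion values to minimise.
# A row is dominated if another row is at least as good in every column
# and strictly better in at least one.
is.nondominated <- function(costs) {
  n <- nrow(costs)
  sapply(seq_len(n), function(i) {
    dominated <- sapply(seq_len(n), function(j) {
      j != i && all(costs[j, ] <= costs[i, ]) && any(costs[j, ] < costs[i, ])
    })
    !any(dominated)
  })
}

# Made-up criterion values for four parameter sets.
costs <- rbind(
  a = c(0.0, 0.4),
  b = c(0.1, 0.1),
  c = c(0.3, 0.0),
  d = c(0.3, 0.5)   # worse than b in both criteria
)
front <- rownames(costs)[is.nondominated(costs)]
```

Here `front` contains a, b, and c: each of them is better than the others in at least one criterion, so none can be preferred without weighting the criteria, whereas d is dominated by b.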

- 2.13

- Because the example model includes stochastic effects, we repeat model runs using the same initialisation and average the output. Following Martínez et al. (2011) and Kerr et al. (2002), we ran 10 repetitions for every tested parameter set. However, for "real" simulation studies it is advisable to determine the number of repetitions by running the model with an increasing number of repetitions and calculating the resulting coefficient of variation (CV) of the simulation output. At the number of repetitions where the CV remains (nearly) the same, convergence can often be assumed (Lorscheid et al. 2012). However, in cases of non-linear relationships between the input parameters and simulation output, this assumption may not be fulfilled.
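The procedure of increasing the number of repetitions and tracking the CV might look like this in R. The function `run.once` is a hypothetical stand-in that draws from a normal distribution; in a real study it would be replaced by one run of the simulation model returning a single output value. The repetition counts are arbitrary choices for illustration.

```r
set.seed(42)  # for reproducibility of this illustration

# Stand-in for one stochastic model run (hypothetical, NOT the example model).
run.once <- function() rnorm(1, mean = 120, sd = 10)

# Coefficient of variation of a numeric vector.
cv <- function(v) sd(v) / mean(v)

# Perform the maximum number of runs once, then compute the CV over the
# first n runs for increasing n.
max.reps <- 200
outputs <- replicate(max.reps, run.once())
reps <- c(5, 10, 20, 50, 100, 200)
cv.by.reps <- sapply(reps, function(n) cv(outputs[seq_len(n)]))

cv.table <- data.frame(reps = reps, cv = round(cv.by.reps, 3))
print(cv.table)
```

One would then choose the smallest number of repetitions beyond which the CV no longer changes appreciably, keeping in mind the caveat about non-linear relationships mentioned above.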

- 2.14

- If we have replicates of observational data and a stochastic model, which is not the case for our example, we should compare distributions of the results rather than using a single value comparison, as recommended in Stonedahl & Wilensky (2010). The calculation of the pomdev measure (Piou et al. 2009), which is already implemented in the R package Pomic (Piou et al. 2009), could be of interest in such a case.

- 2.15

- If we want to compare whole time series instead of single output values, the aggregation procedure can become more difficult. Such data could also be compared by mean square deviations (see, for example, Wiegand et al. 1998), but then small differences in all measurement points can yield the same deviation as a strong difference in one point. Hyndman (2013) provides an overview of R packages helpful for time series analysis.
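The ambiguity of the mean square deviation for time series can be seen in a small worked example. The series below are made up for illustration, and `mse` is a hypothetical helper.

```r
# Mean square deviation between a simulated and an observed series.
mse <- function(sim, obs) mean((sim - obs)^2)

obs   <- c(10, 10, 10, 10)
sim.a <- obs + 1              # off by 1 at every measurement point
sim.b <- obs + c(2, 0, 0, 0)  # off by 2 at a single point, exact elsewhere

mse.a <- mse(sim.a, obs)  # (1 + 1 + 1 + 1) / 4 = 1
mse.b <- mse(sim.b, obs)  # (4 + 0 + 0 + 0) / 4 = 1
```

Both simulated series receive the same deviation of 1, although one is slightly off everywhere and the other matches the observations except for one strong outlier.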

Full factorial design

- 2.16

- A systematic method for exploring parameter space is (full) factorial design, known from Design of Experiments (DoE) methodology (Box et al. 1978). It can be applied if the model runs very quickly or if the numbers of unknown parameters and possible parameter values are rather small. For example, Jakoby et al. (2014) ran a deterministic generic rangeland model that includes nine parameters and a few difference equations for one billion parameter sets.

- 2.17

- In DoE terminology, the independent variables are termed "factors" and the dependent (output) variables "responses". For full factorial design, all possible factor combinations are used. The critical point here is to define the possible factor levels (parameter values) within the parameter ranges. For parameter values that are integer values by nature (e.g., number of children) this is easy, but for continuous values it can be difficult to find a reasonable step size for the factor levels. This can be especially difficult if the relationship between the factor and the response variables is non-linear.

- 2.18

- For our example model, we assume the following factor levels (taken from Railsback & Grimm 2012):

- scout-prob: 0.0 to 0.5 with step size 0.05

- survival-prob: 0.95 to 1.0 with step size 0.005

We include this example because it is a good starting point for proceeding to more sophisticated methods, such as the fractional factorial design. If you want to use a classical full factorial design with NetLogo, we recommend using NetLogo's "BehaviorSpace" (Wilensky & Shargel 2002).

```r
# 1. Define a function that runs the simulation model
#    for a given parameter combination and returns the
#    value(s) of the fitting criteria. See Supplementary
#    Material (simulation_function1.R) for an
#    implementation example using RNetLogo.
sim <- function(params) {
  ...
  return(criteria)
}

# 2. Create the design matrix.
full.factorial.design <- expand.grid(
  scout.prob = seq(0.0, 0.5, 0.05),
  survival.prob = seq(0.95, 1.0, 0.005))

# 3. Iterate through the design matrix from step 2 and call
#    function sim from step 1 with each parameter combination.
sim.results <- apply(full.factorial.design, 1, sim)
```
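To try the full factorial workflow without NetLogo, the following sketch replaces the simulation by a toy cost function. The function body and the location of its minimum are assumptions made purely for illustration; they do not represent the example model.

```r
# Toy stand-in for the simulation model (assumption for illustration only):
# returns a cost that is smallest at scout.prob = 0.25, survival.prob = 0.975.
sim <- function(params) {
  scout.prob <- params[1]
  survival.prob <- params[2]
  unname((scout.prob - 0.25)^2 + 100 * (survival.prob - 0.975)^2)
}

# Same factor levels as in the text: 11 x 11 = 121 combinations.
full.factorial.design <- expand.grid(
  scout.prob = seq(0.0, 0.5, 0.05),
  survival.prob = seq(0.95, 1.0, 0.005))

# apply() passes each row of the design to sim as a named numeric vector.
sim.results <- apply(full.factorial.design, 1, sim)

# Attach the results and extract the parameter set with the lowest cost.
full.factorial.design$cost <- sim.results
best <- full.factorial.design[which.min(full.factorial.design$cost), ]
print(best)
```

Because the number of rows is the product of the numbers of factor levels, halving the step size of both parameters roughly quadruples the number of simulations.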

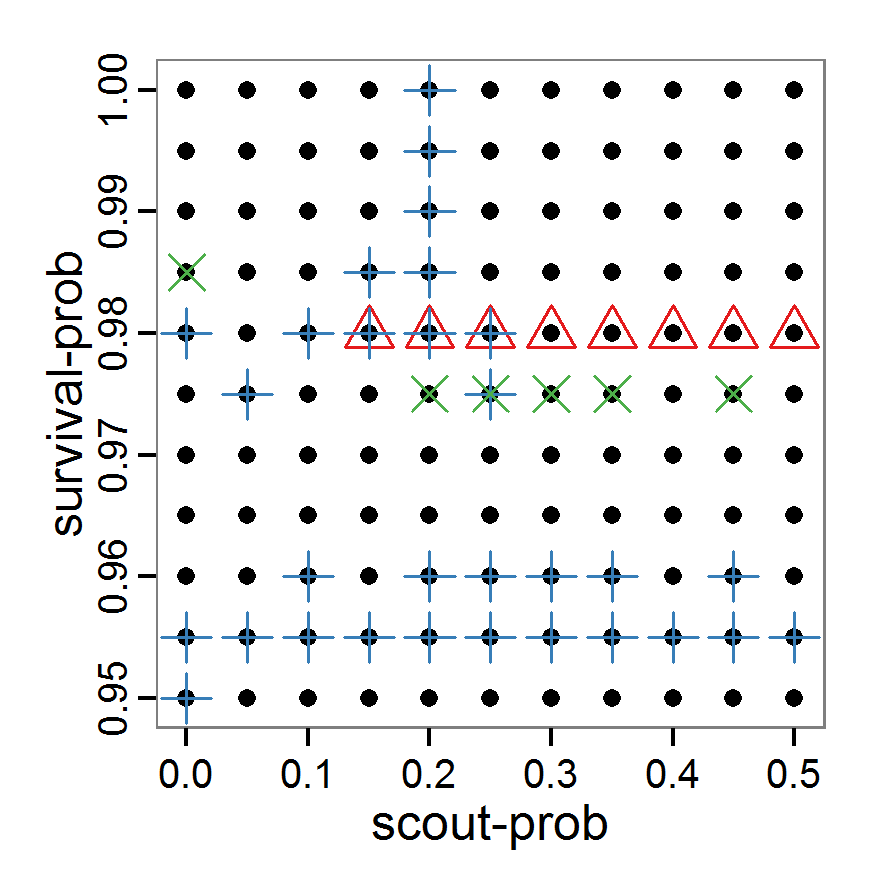

Figure 1. Left: Results of the full factorial design (121 parameter sets) using categorical evaluation criteria. Black points symbolise the tested parameter combinations, and the three different symbols show whether the evaluation criteria were met (red triangle: abundance criterion, blue cross: variation criterion, green x: vacancy criterion). Right: Results of the full factorial design based on conditional best-fit equations (Equation 1 to 3), summed up to the one metric cost (Equation 4). The cost values are truncated at 10.0 (max. cost value was 842). Grey cells indicate high cost values, and white cells represent low values, i.e., better solutions. One cell represents one simulation with parameter values from the cell's midpoint corresponding to the left panel.

The results of the model calibration procedure with categorical calibration criteria can be explored visually. Figure 1 (left panel) shows such a plot for the example model. We see that none of the 121 tested parameter combinations met the three calibration criteria simultaneously. Still, the figure provides some useful hints. The central region of parameter space appears to be promising. The failure to meet all three criteria simultaneously might be due to the step width used.

- 2.19

- Using the same output data that underlie Figure 1 (left panel), we can calculate the conditional best-fit equations (Eq. 4) and plot the results as a raster map (Figure 1, right panel). This plot shows more directly than the left panel where the most promising regions of parameter space are located (the white cells), which can then be investigated in more detail.

- 2.20

- Overall, full factorial design is useful for visually exploring model behaviour regarding its input parameters in a systematic way but only if the number of parameters and/or factor levels is low. To gain a coarse overview, full factorial design can be useful for calibrating a small number of parameters at a time because the number of combinations can be kept small enough. Fractional factorial designs can be used for a larger number of parameters by selecting only a subset of the parameter combinations of a full factorial design (Box et al. 1978); the application in R is very similar, see the above-mentioned collection by Groemping (2013a).

Classical sampling methods

- 2.21

- One common method of avoiding full factorial designs, both in simulations and in real experiments, is the usage of sampling methods. The purpose of these methods is to strongly reduce the number of parameter sets that are considered but still scan parameter space in a systematic way. Various algorithms exist to select values for each single parameter, for example, random sampling with or without replacement, balanced random design, systematic sampling with random beginning, stratified sampling etc.

Simple random sampling

- 2.22

- The conceptually and technically simplest, but not very efficient, sampling method is simple random sampling with replacement. For each sample of the chosen sample size (i.e., number of parameter sets), a random value (from an a priori selected probability density function) is taken for each parameter from its value range. The challenge here, and also for all other (random) sampling methods, is finding an appropriate probability density function (often just a uniform distribution is used) and the selection of a useful sample size. Applying a simple random sampling in R can look like the following:

# 1. Define a simulation function (sim) as done for

# Full factorial design.

# 2. Create the random samples from the desired random

# distribution (here: uniform distribution).

random.design <- list('scout-prob'=runif(50,0.0,0.5),

'survival-prob'=runif(50,0.95,1.0))

# 3. Iterate through the design matrix from step 2 and call

# function sim from step 1 with each parameter combination.

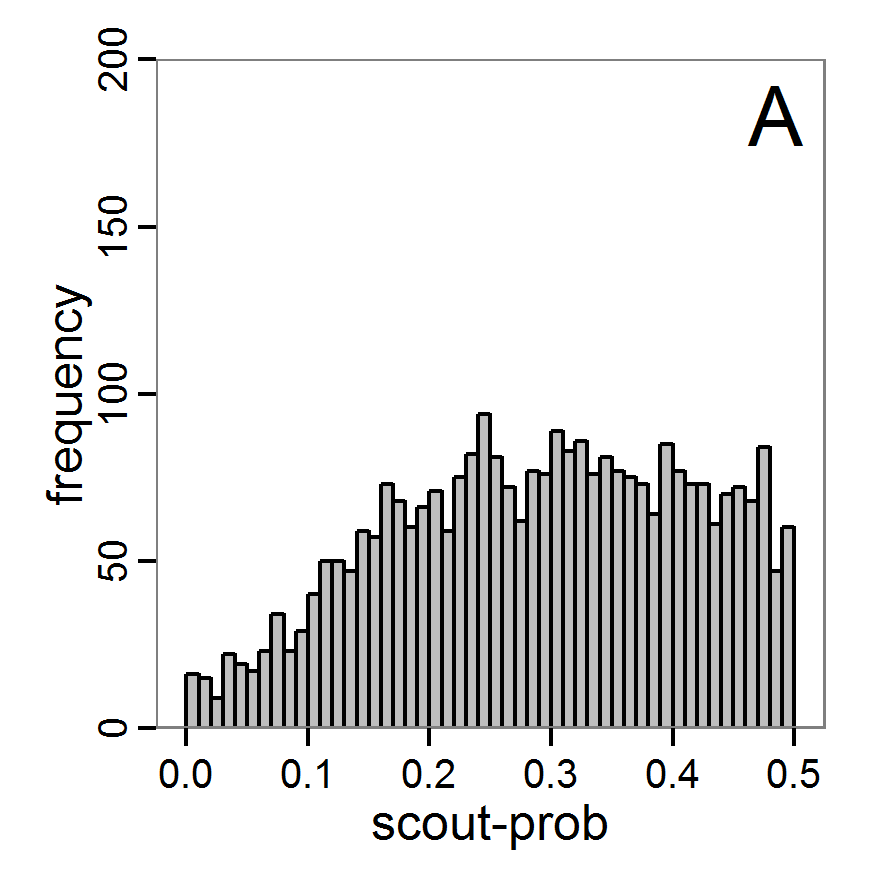

sim.results <- apply(as.data.frame(random.design), 1, sim) - 2.23

- Despite the fact that this simple random sampling is not an efficient method and could rather be considered a trial-and-error approach to exploring parameter space, it is used quite often in practice (e.g., Molina et al. 2001). We nevertheless included the source code for this example in the Supplementary Material because it can be easily adapted to other, related sampling methods.

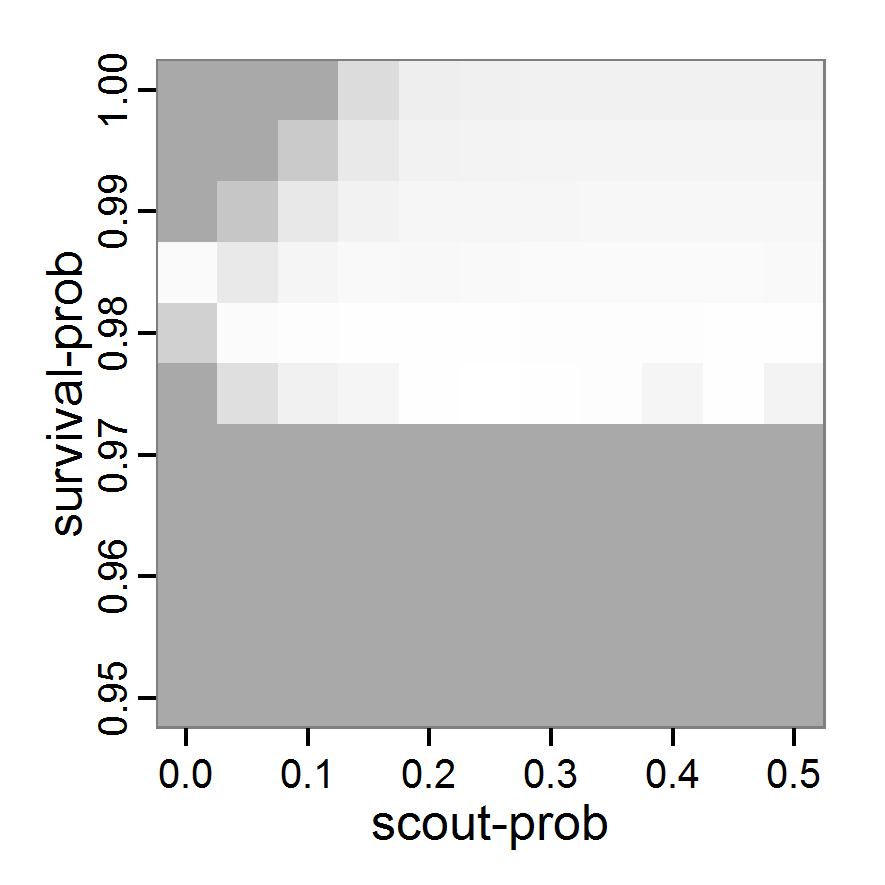

Figure 2. Results of using simple random sampling based on categorical calibration criteria with 50 samples. Black points symbolise the tested parameter combinations, and the three different symbols show whether the evaluation criteria were met (red triangle: abundance criterion, blue cross: variation criterion, green x: vacancy criterion).

- 2.24

- The results of an example application using 50 samples with categorical calibration criteria are shown in Figure 2. The sampling points are distributed over the parameter space but leave large gaps. The overall pattern of the regions where the different criteria were met looks similar to that of the full factorial design (Figure 1). In this example application, we were not able to find a parameter combination that meets all three evaluation criteria. In general, the simple random sampling method is not an efficient method for parameter fitting.

Latin hypercube sampling

- 2.25

- As a more efficient sampling technique to scan parameter spaces, Latin hypercube sampling (LHS) (McKay et al. 1979) is widely used for model calibration and sensitivity analysis as well as for uncertainty analysis (e.g., Marino et al. 2008; Blower & Dowlatabadi 1994; Frost et al. 2009; Meyer et al. 2009). LHS is a stratified sampling method without replacement and belongs to the Monte Carlo class of sampling methods. It requires fewer samples to achieve the same accuracy as simple random sampling. The value range of each parameter is divided into N intervals (= sample size) so that all intervals have the same probability. The size of each interval depends on the used probability density distribution of the parameter. For uniform distributions, they all have the same size. Then, each interval of a parameter is sampled once (Marino et al. 2008). As there are some packages for creating Latin hypercubes available in R, such as tgp (Gramacy & Taddy 2013) and lhs (Carnell 2012), it is easy to use this sampling method. For our small example model, the code for generating a Latin hypercube with the tgp package is as follows:

```r
library(tgp)

# 1. Define a simulation function (sim) as done for
#    Full factorial design.

# 2. Create parameter samples from a uniform distribution
#    using the function lhs from package tgp.
#    Each row of rect holds the lower and upper limit of one parameter.
param.sets <- lhs(n=50, rect=matrix(c(0.0,0.95,0.5,1.0), 2))

# 3. Iterate through the parameter combinations from step 2
#    and call function sim from step 1 for each parameter
#    combination.
sim.results <- apply(as.data.frame(param.sets), 1, sim)
```

- 2.26

- As with simple random sampling, the challenge of choosing appropriate probability density distributions and a meaningful sample size remains. Using, as shown above, a uniform random distribution for the two parameters of our example model, the results using categorical criteria for 50 samples are shown in Figure 3. We have been lucky and found one parameter combination (scout-prob: 0.0955, survival-prob: 0.9774) that met all three criteria. The source code for creating a Latin hypercube in the Supplementary Material also includes an example of applying a best-fit calibration (Eq. 4).
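The stratification behind Latin hypercube sampling, as described above, can be sketched in base R for uniform distributions. This is a minimal illustration and not the implementation used in tgp or lhs; `simple.lhs` is a hypothetical helper name.

```r
# Minimal Latin hypercube sampler for uniform marginals (illustrative sketch).
# n: number of samples; lower/upper: vectors with the parameter ranges.
simple.lhs <- function(n, lower, upper) {
  k <- length(lower)
  samples <- matrix(NA_real_, nrow = n, ncol = k)
  for (j in seq_len(k)) {
    # One random point inside each of the n equal-probability intervals,
    # expressed on the unit interval ...
    u <- (seq_len(n) - 1 + runif(n)) / n
    # ... shuffled so that intervals are paired randomly across parameters,
    # then rescaled to the parameter range.
    samples[, j] <- lower[j] + sample(u) * (upper[j] - lower[j])
  }
  samples
}

set.seed(42)
param.sets <- simple.lhs(50, lower = c(0.0, 0.95), upper = c(0.5, 1.0))
colnames(param.sets) <- c("scout.prob", "survival.prob")

# Defining property: each of the 50 intervals of a parameter is hit exactly once.
strata <- floor(param.sets[, "scout.prob"] / 0.5 * 50)
print(table(table(strata)))
```

For non-uniform distributions, the values on the unit interval would be passed through the corresponding quantile function (e.g., `qnorm`) instead of being rescaled linearly.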

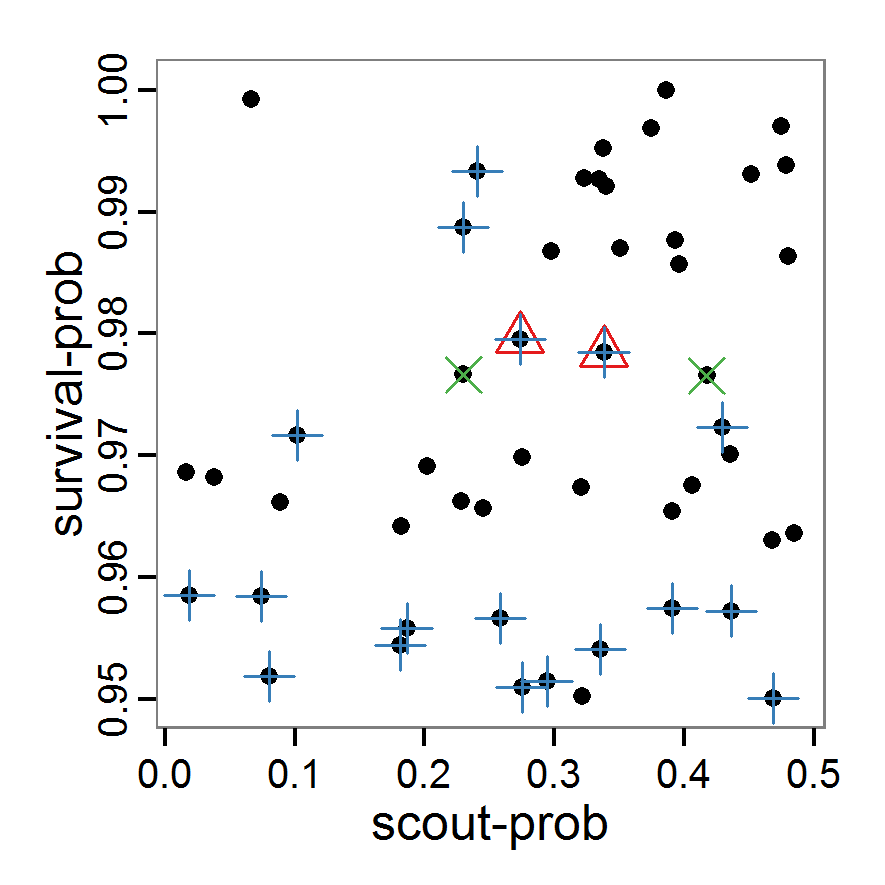

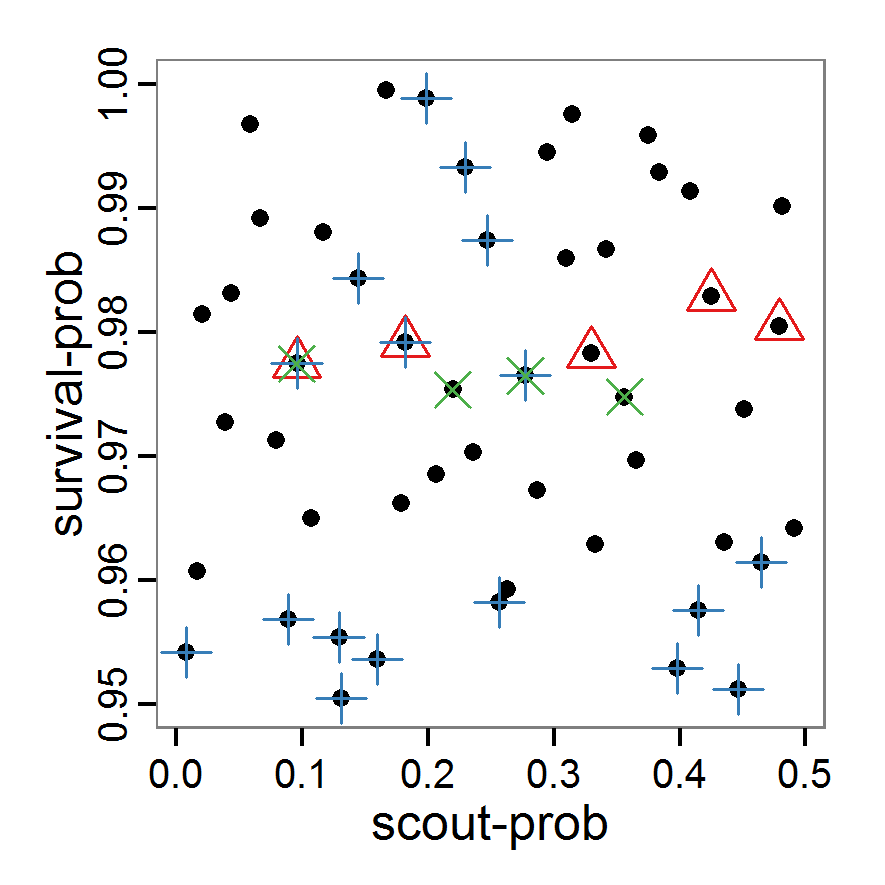

Figure 3. Results from the Latin hypercube sampling using categorical evaluation criteria with 50 samples. Black points symbolise tested parameter combinations, and the three different symbols show whether the evaluation criteria were met (red triangle: abundance criterion, blue cross: variation criterion, green x: vacancy criterion).

Optimisation methods

- 2.27

- In contrast to the sampling methods described above, optimisation methods create parameter sets not before the simulations are started but in a stepwise way based on the results obtained with one or more previously used parameter sets. These methods are used in many different disciplines, including operations research, physics etc. (Aarts & Korst 1989; Bansal 2005). As the relationships between the input parameters and the output variables in ABMs are usually non-linear, non-linear heuristic optimisation methods are the right choice for parameter fitting. We will give examples for gradient and quasi-Newton methods, simulated annealing and genetic algorithms. There are, however, many other optimisation methods available, such as threshold accepting, ant colony optimisation, stochastic tunnelling, tabu search etc.; several packages for solving optimisation problems are available in R. See Theussl (2013) for an overview.

Gradient and quasi-Newton methods

- 2.28

- Gradient and quasi-Newton methods search for a local optimum where the gradient of change in parameters versus change in the fitting criterion is zero. In cases where multiple local optima exist, the ability to find the global optimum depends on the starting conditions (Sun & Yuan 2006). A popular example of gradient methods is the so-called conjugate gradient method (CG). Because the standard CG is designed for unconstrained problems (i.e., the parameter space cannot be restricted to a specific value range), it is not useful to apply it to parameter estimation problems of ABMs. Quasi-Newton methods instead are based on the Newton method but approximate the so-called Hessian matrix and, therefore, do not require the definition of the second derivative (Biethahn et al. 2004). An introduction to these methods can be found in Fletcher (1987). The implementation of both the gradient and quasi-Newton methods requires a gradient function to be supplied, which is often difficult in ABMs. Implementations in R can often approximate the gradient numerically. Here, we selected the L-BFGS-B method by Byrd et al. (1995), which is a variant of the popular Broyden–Fletcher–Goldfarb–Shanno (BFGS) method (Bonnans et al. 2006) because it is the only method included in the function optim of R's stats package (R Core Team 2013a), which can take value ranges (upper and lower limits) for the parameters into account. The strength of the L-BFGS-B method is the ability to handle a large number of variables. To use the L-BFGS-B method with our example ABM, we must define a function that returns a single fitting criterion for a submitted parameter set. For this, we use the single fitting criterion defined in Eq. 4. The usage of this method is as follows:

```r
# 1. Define a function that runs the simulation model
#    for a given parameter combination and returns the
#    value of the (aggregated) fitting criterion. See
#    Supplementary Material (simulation_function2.R) for
#    an implementation example using RNetLogo.
sim <- function(params) {
  ...
  return(criterion)
}

# 2. Run L-BFGS-B. Start, for example, with the maximum of
#    the possible parameter value range.
result <- optim(par=c(0.5, 1.0),
                fn=sim, method="L-BFGS-B",
                control=list(maxit=200),
                lower=c(0.0, 0.95), upper=c(0.5, 1.0))
```

- 2.29

- Other packages useful for working with gradient or quasi-Newton methods are Rcgmin (Nash 2013), optimx (Nash & Varadhan 2011) and BB (Varadhan & Gilbert 2009). The source code, including the L-BFGS-B, is in the Supplementary Material and can easily be adapted for other gradient or quasi-Newton methods.
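As a runnable illustration of the `optim` call, the sketch below uses a smooth toy cost function in place of the ABM. The function and its minimum at (0.25, 0.975) are assumptions for demonstration; a real, stochastic ABM will generally behave far less regularly.

```r
# Toy cost function standing in for the aggregated fitting criterion
# (assumption for illustration; minimum at c(0.25, 0.975)).
sim <- function(params) {
  (params[1] - 0.25)^2 + 100 * (params[2] - 0.975)^2
}

# No gradient function is supplied, so optim approximates the gradient
# by finite differences, which costs additional calls to sim.
result <- optim(par = c(0.5, 1.0), fn = sim, method = "L-BFGS-B",
                lower = c(0.0, 0.95), upper = c(0.5, 1.0),
                control = list(maxit = 200))

print(result$par)          # best parameter set found
print(result$value)        # cost value at that parameter set
print(result$counts)       # number of function and gradient evaluations
print(result$convergence)  # 0 indicates successful convergence
```

The `counts` element reports function and gradient evaluations separately, which helps when estimating how many model runs a calibration will need.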

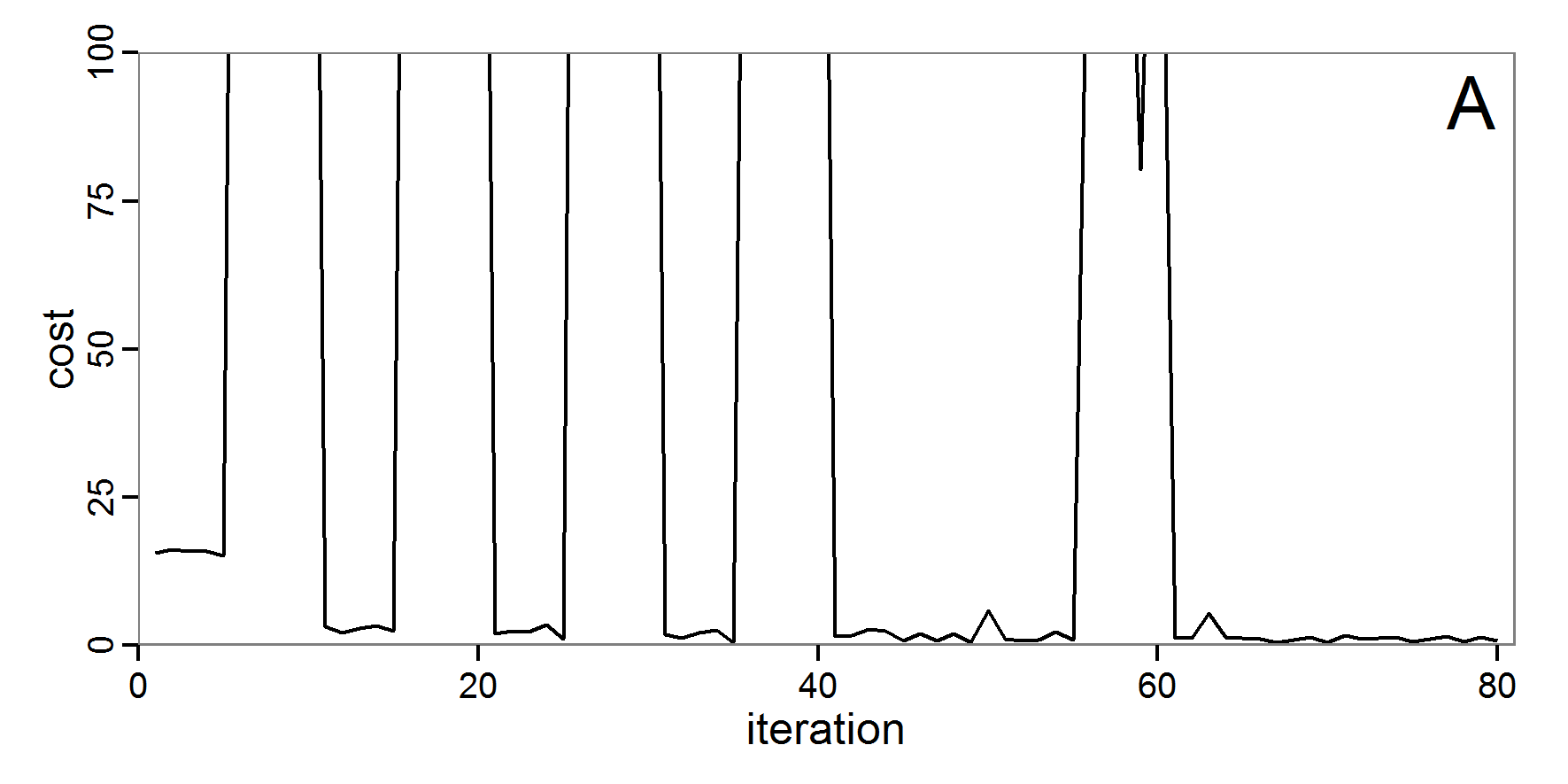

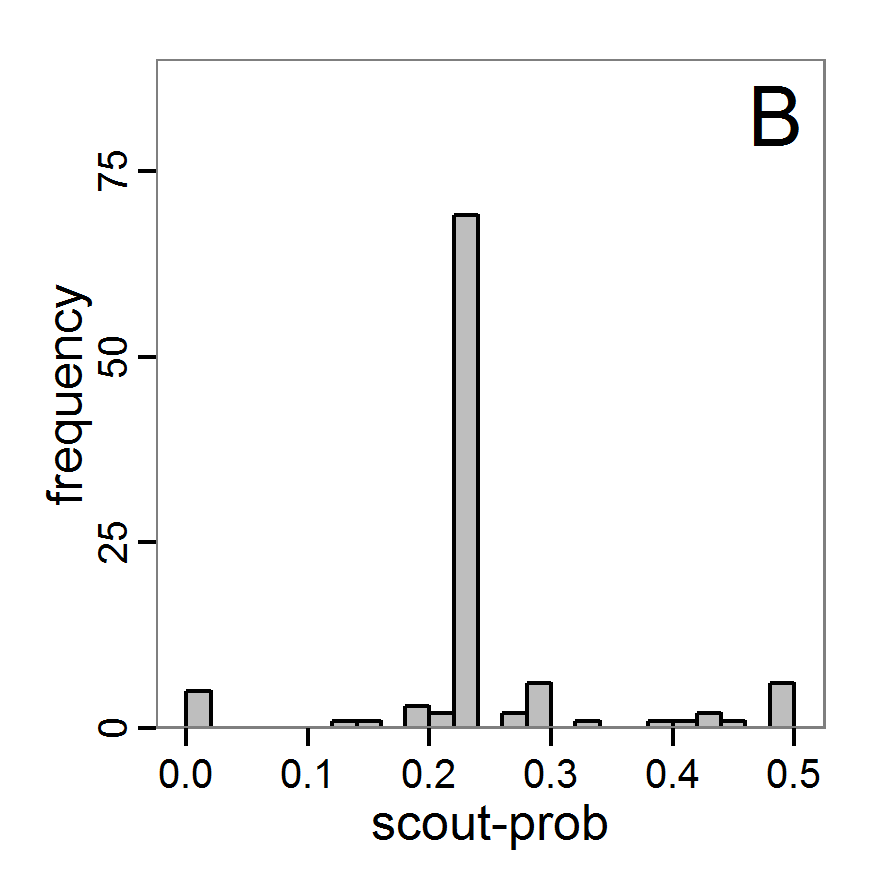

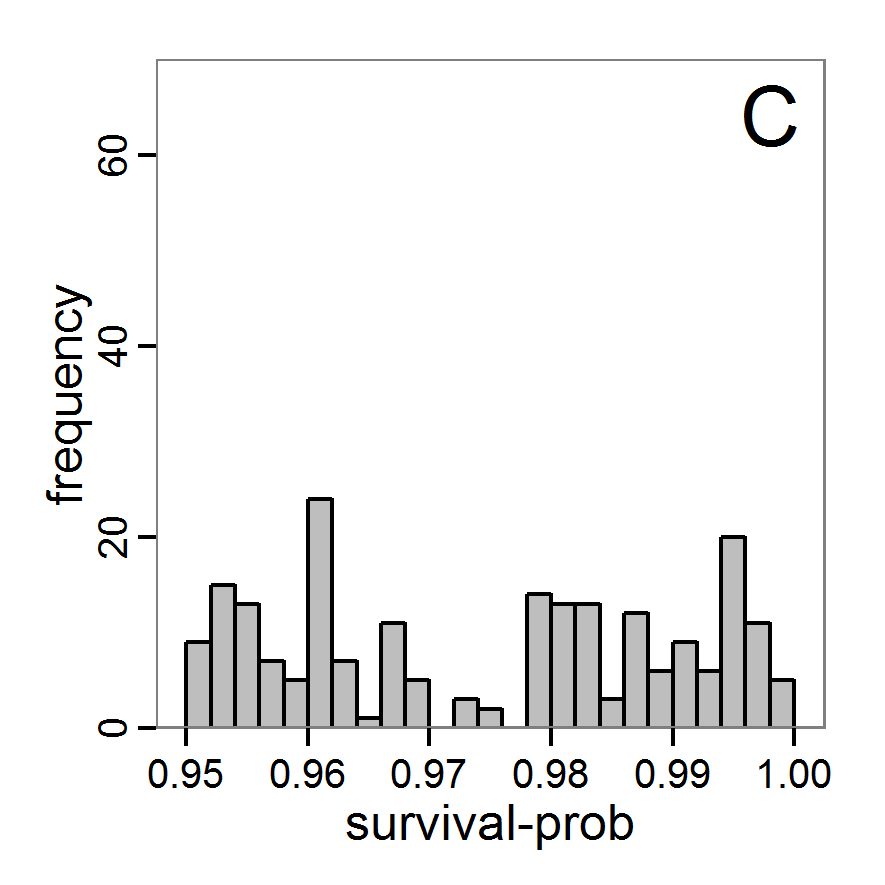

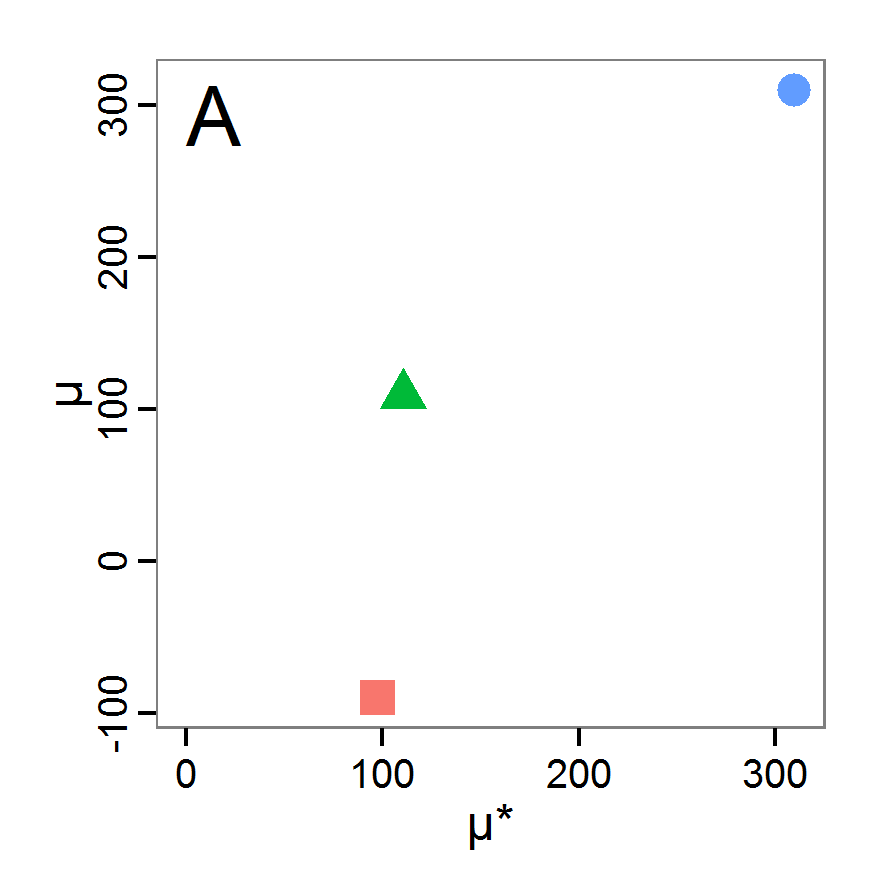

Figure 4. Results of the L-BFGS-B method (includes intermediate simulations, e.g., simulations for gradient approximation). A: Performance of the cost value (Eq. 4) over the calls of the simulation function (x-axis, truncated at cost value 100, max. cost value was 317). B: Histogram of the tested parameter values for parameter scout-prob. C: Histogram of the tested parameter values for parameter survival-prob.

- 2.30

- The variation of the aggregated value of the conditional equations (cost value) over the 80 model evaluations (including evaluations for gradient approximation) of the L-BFGS-B method is shown in Figure 4 (upper panel). The algorithm checks the cost value of the start parameter set and the parameter values at the bounds of the valid parameter values, resulting in strong variations of the cost value over the number of iterations. Another source of the strong variation is the intermediate simulations for the approximation of the gradient function. As we are only interested in the regions with low cost values, we truncated the high cost values in the graph to obtain a more detailed look at the variation of the lower cost values over the iterations. The lower panels of Figure 4 show the histograms of the tested parameter values. We see that the search by the L-BFGS-B method for an optimal value for both model parameters, in the configuration used here, briefly checked the extreme values of the value range but focused on the middle range of the parameter space. We see that the method stopped quickly and left large areas out of consideration, which is typical for methods searching for local optima without the ability to also accept solutions with higher costs during the optimisation. For survival-prob, the minimum possible value is checked more precisely and smaller parts of the parameter space remain untested. The best fit was found with parameter values of 0.3111 for scout-prob and 0.9778 for survival-prob, which resulted in a cost value of 1.1. This cost value indicates that the three categorical criteria were not matched simultaneously; otherwise the cost value would be zero. However, keep in mind that the application of the method was not fine-tuned and a finite-difference approximation was used for calculating the gradient.

Simulated annealing

- 2.31

- In simulated annealing, temperature corresponds to a certain probability that a local optimum can be left. This avoids the problem of optimisation methods becoming stuck in local, but not global, optima. Simulated annealing is thus a stochastic method designed for finding the global optimum (Michalewicz & Fogel 2004).

- 2.32

- There are several R packages that include simulated annealing functions, for example, stats (R Core Team 2013a), subselect (Cerdeira et al. 2012) or ConsPlan (VanDerWal & Januchowski 2010). As for the gradient and quasi-Newton methods, to use simulated annealing with an ABM we must define a function that returns a single fitting criterion for a submitted parameter set. Using the GenSA package (Xiang et al. forthcoming), which allows one to define value ranges for the parameters, running a simulated annealing optimisation looks like this:

```r
library(GenSA)

# 1. Define a simulation function (sim) as done for the
#    L-BFGS-B method.

# 2. Run SA algorithm. Start, for example, with the maximum
#    of the possible parameter value range.
result <- GenSA(par=c(0.5,1.0), fn=sim,
                lower=c(0.0, 0.95), upper=c(0.5, 1.0))
```

- 2.33

- As with the gradient and quasi-Newton methods, the choice of the starting values as well as the number of iterations can be challenging. Furthermore, specific to simulated annealing, the selection of an appropriate starting temperature is another critical point.
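The role of the temperature can be illustrated with a minimal, textbook-style simulated annealing loop in base R. This is a sketch under stated assumptions and not the generalised algorithm implemented in GenSA: the toy cost function, the starting temperature, the cooling factor and the proposal width are all chosen arbitrarily for illustration.

```r
# Toy cost function (assumption for illustration; minimum at c(0.25, 0.975)).
sim <- function(params) {
  (params[1] - 0.25)^2 + 100 * (params[2] - 0.975)^2
}
lower <- c(0.0, 0.95)
upper <- c(0.5, 1.0)

set.seed(1)
current <- c(0.5, 1.0)            # start at the upper bounds
current.cost <- sim(current)
best <- current
best.cost <- current.cost
temperature <- 1.0                # assumed starting temperature
cooling <- 0.98                   # assumed geometric cooling factor

for (i in 1:1000) {
  # Propose a neighbouring parameter set and clamp it to the permitted ranges.
  candidate <- current + rnorm(2, sd = 0.1 * (upper - lower))
  candidate <- pmin(pmax(candidate, lower), upper)
  candidate.cost <- sim(candidate)
  # Always accept improvements; accept a worse solution with a probability
  # that shrinks as the temperature decreases.
  delta <- candidate.cost - current.cost
  if (delta <= 0 || runif(1) < exp(-delta / temperature)) {
    current <- candidate
    current.cost <- candidate.cost
  }
  if (current.cost < best.cost) {
    best <- current
    best.cost <- current.cost
  }
  temperature <- temperature * cooling
}
print(best)
print(best.cost)
```

At high temperature nearly every proposal is accepted, so the search can leave local optima; as the temperature falls, the loop behaves increasingly like a greedy local search.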

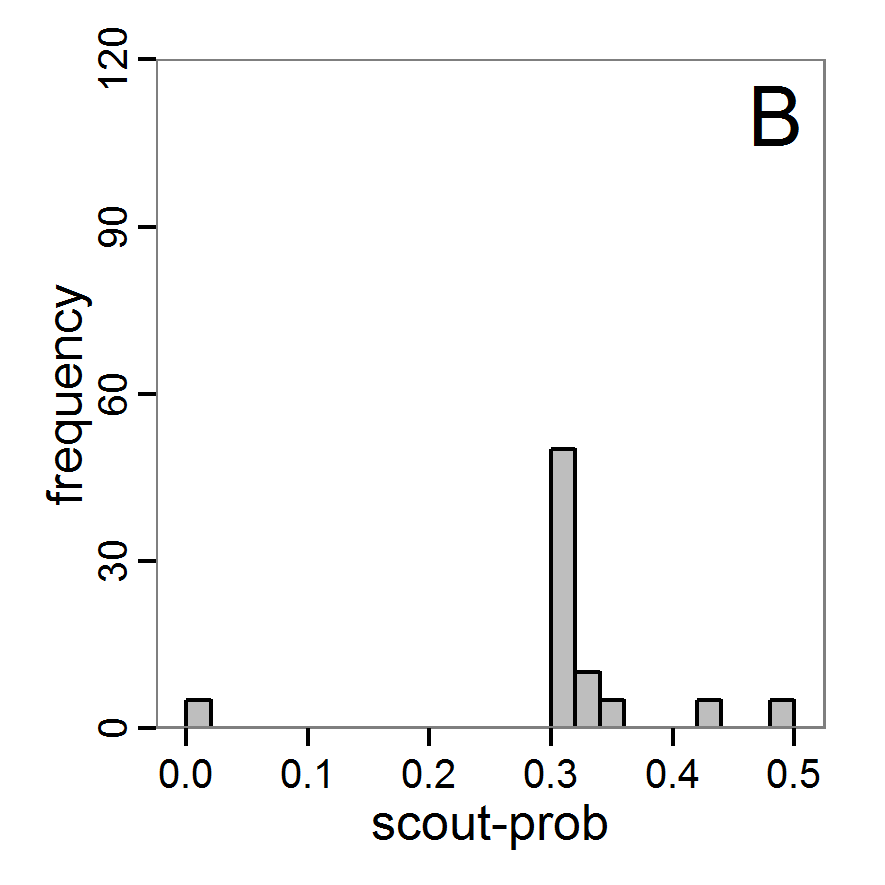

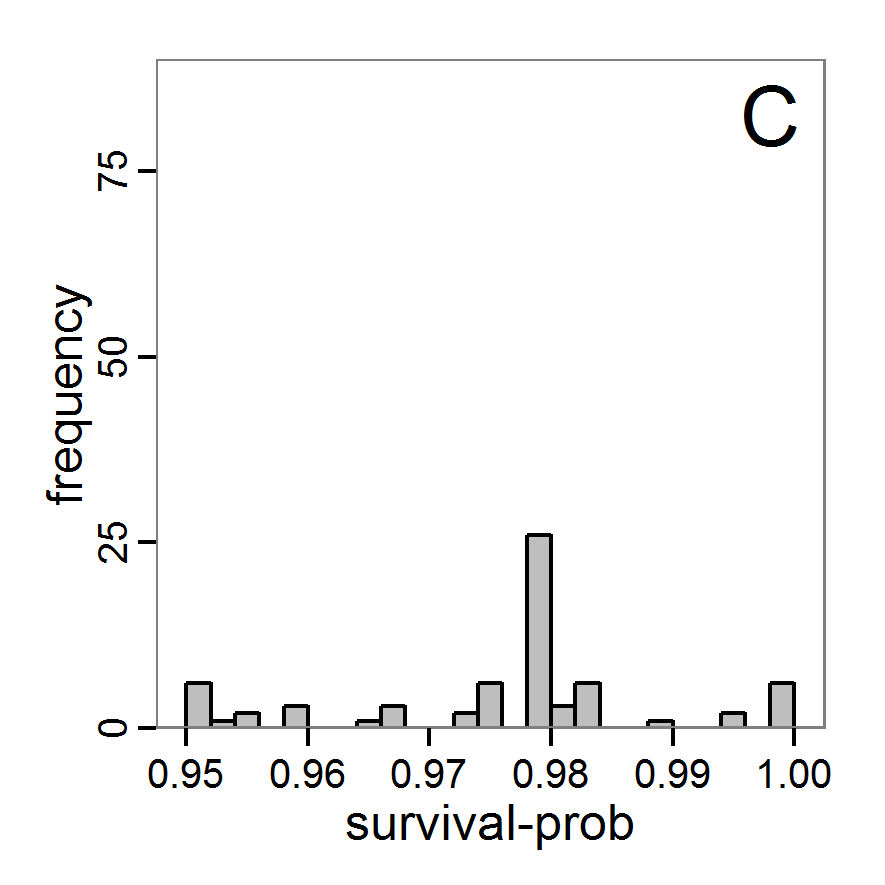

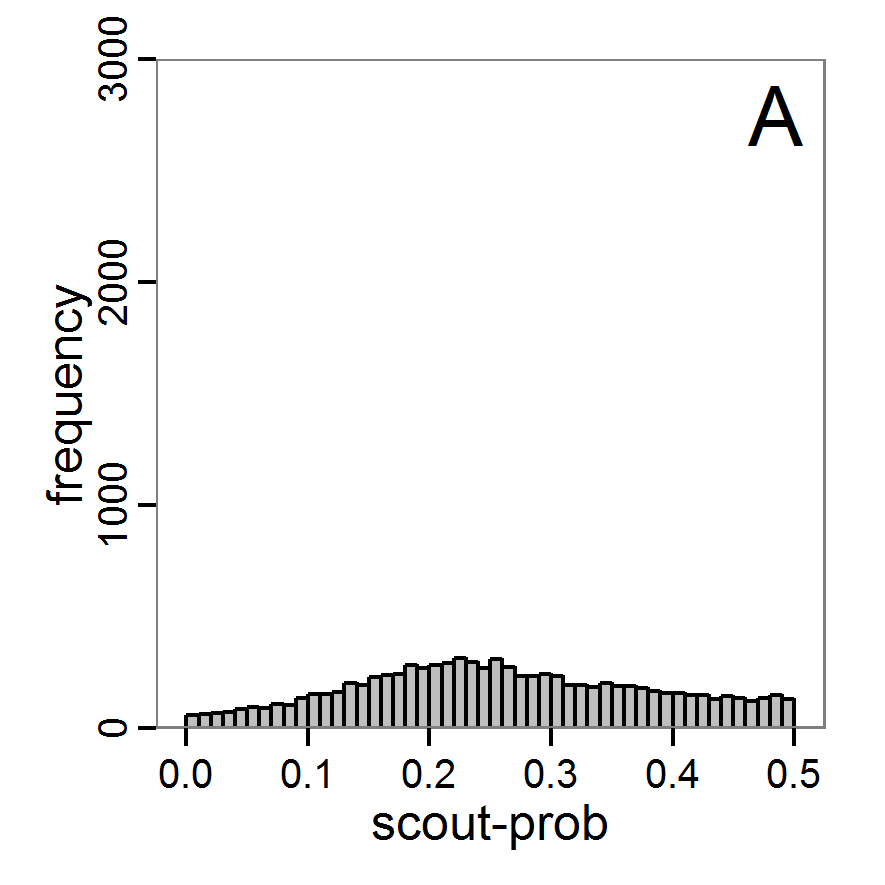

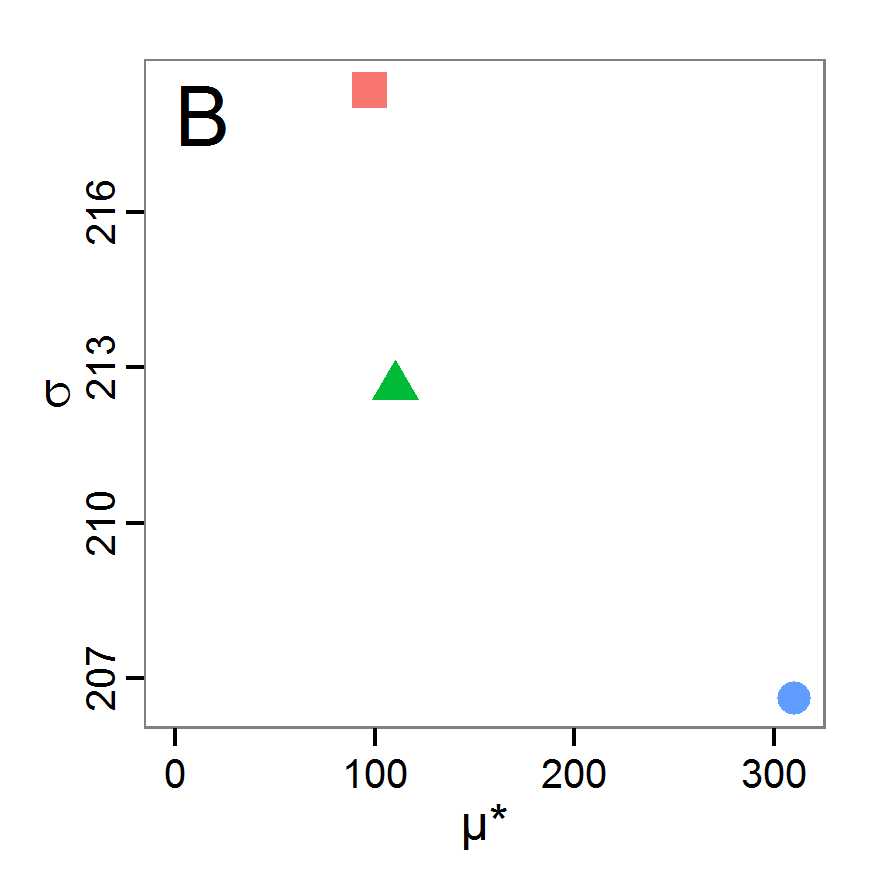

Figure 5. Results of the simulated annealing method. A: Performance of the cost value (Eq. 4) over the calls of the simulation function (x-axis, truncated at cost value 100, max. cost value was 317). B: Histogram of the tested parameter values for parameter scout-prob. C: Histogram of the tested parameter values for parameter survival-prob.

- 2.34

- The result of an application example with 256 model evaluations is shown in Figure 5. In the upper panel, we see the variation of the cost value over the iterations, i.e., the simulation function calls. The algorithm found a good solution very quickly but then left it and also accepted worse intermediate solutions. Because we are primarily interested in the regions with low cost values, i.e., good adaptations of the model to the data, we truncated the graph to obtain a better view of the variation in the region of low cost values. In the lower panels, we see that more of the parameter space is tested than with the previous L-BFGS-B method (Figure 4). The focus is also in the middle range of the parameter space, which is the region where the best solution was found and which is the most promising part of the parameter space, as we already know from the full factorial design. The minimum cost value found in this example was 0.65 with corresponding parameter values of 0.225 for scout-prob and 0.9778 for survival-prob. As with the quasi-Newton method, the cost value indicates that the three criteria have not been met simultaneously.

Evolutionary or genetic algorithms

- 2.35

- Evolutionary algorithms (EA) are inspired by the natural process of evolution and feature inheritance, selection, mutation and crossover. Genetic algorithms (GA) are, like evolutionary strategies, genetic programming and some other variants, a subset of EAs (Pan & Kang 1997). GAs are often used for optimisation problems by using genetic processes such as selection or mutation on the parameter set. The parameter set, i.e., a vector of parameter values (genes), corresponds to the genotype. A population is formed by a collection of parameter sets (genotypes). Many books and articles about this methodology are available, e.g., Mitchell (1998), Holland (2001), or Back (1996). Application examples of EA/GA for parameter estimation in the context of ABMs can be found in Duboz et al. (2010), Guichard et al. (2010), Calvez & Hutzler (2006), or Stonedahl & Wilensky (2010).

- 2.36

- There are several packages for evolutionary and genetic algorithms available in R; see the listing in Hothorn (2013). Using the package genalg (Willighagen 2005) enables us to take ranges of permissible values for the parameters into account. The rbga function of this package requires a function that returns a single fitting criterion, as we have also used for the quasi-Newton and simulated annealing methods. The procedure in R is as follows:

```r
library(genalg)

# 1. Define a simulation function (sim) as done for the
#    L-BFGS-B method.

# 2. Run the genetic algorithm.
result <- rbga(stringMin=c(0.0, 0.95),
               stringMax=c(0.5, 1.0),
               evalFunc=sim, iters=200)
```

- 2.37

- Challenges with EAs/GAs include selecting an appropriate population size and number of iterations/generations, as well as meaningful probabilities for various genetic processes, such as mutation.
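How population size, number of generations and mutation probability enter a genetic algorithm can be seen in a deliberately simple base R sketch. It is not the algorithm implemented in genalg; the toy cost function, the selection scheme (keeping the better half) and all settings are assumptions for illustration.

```r
# Toy cost function (assumption for illustration; minimum at c(0.25, 0.975)).
sim <- function(params) {
  (params[1] - 0.25)^2 + 100 * (params[2] - 0.975)^2
}
lower <- c(0.0, 0.95)
upper <- c(0.5, 1.0)

set.seed(1)
pop.size <- 20         # assumed population size
generations <- 30      # assumed number of generations
mutation.prob <- 0.1   # assumed per-gene mutation probability

# Initial population: one row per genotype (parameter set).
population <- cbind(runif(pop.size, lower[1], upper[1]),
                    runif(pop.size, lower[2], upper[2]))
best.cost.per.generation <- numeric(generations)

for (g in seq_len(generations)) {
  cost <- apply(population, 1, sim)
  best.cost.per.generation[g] <- min(cost)
  # Selection: the better half survives unchanged and serves as parents.
  parents <- population[order(cost)[1:(pop.size / 2)], , drop = FALSE]
  children <- parents
  for (i in seq_len(nrow(children))) {
    # Crossover: each gene is taken from one of two randomly chosen parents.
    mates <- parents[sample(nrow(parents), 2), , drop = FALSE]
    children[i, ] <- ifelse(runif(2) < 0.5, mates[1, ], mates[2, ])
    # Mutation: occasionally replace a gene by a new random value.
    mutate <- runif(2) < mutation.prob
    if (any(mutate)) {
      children[i, mutate] <- runif(sum(mutate), lower[mutate], upper[mutate])
    }
  }
  population <- rbind(parents, children)
}
final.cost <- apply(population, 1, sim)
best <- population[which.min(final.cost), ]
print(best)
```

Because the parents are carried over unchanged, the best cost per generation cannot increase in this sketch; each generation costs `pop.size` model evaluations, which is the main budget consideration for an ABM.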

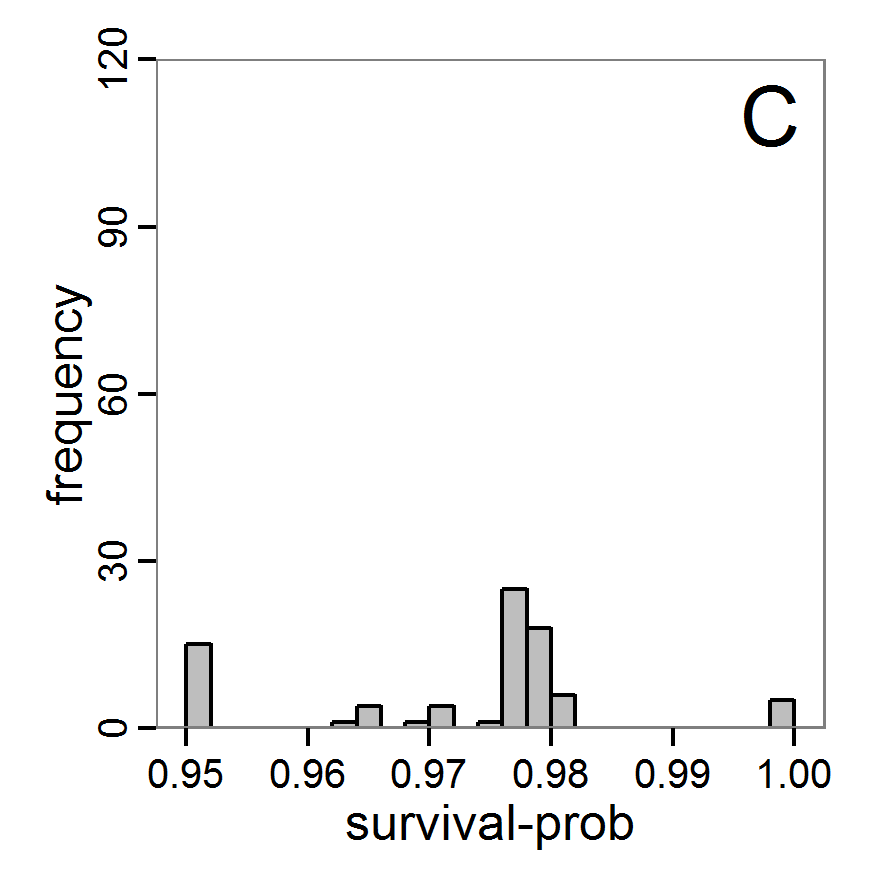

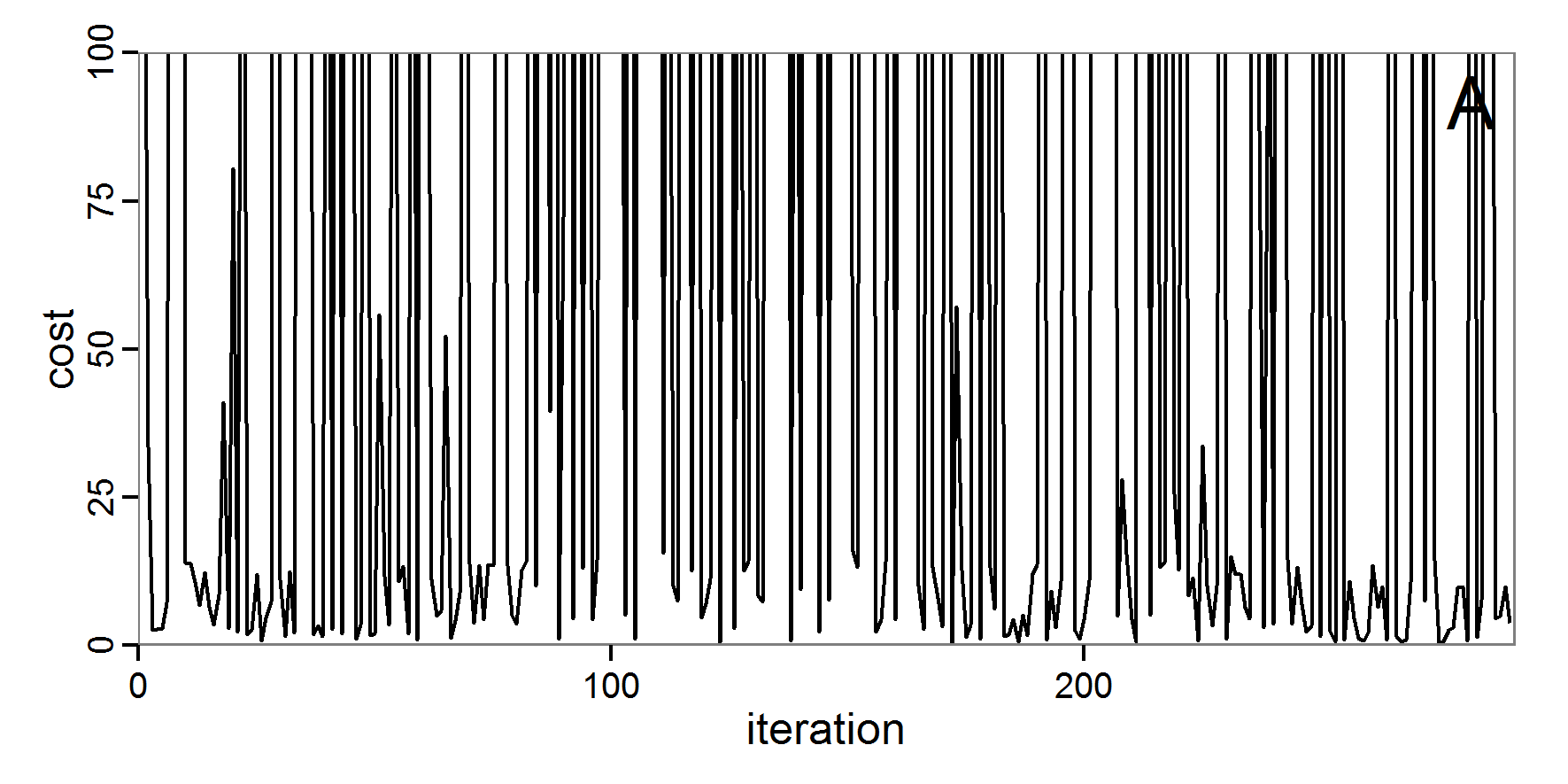

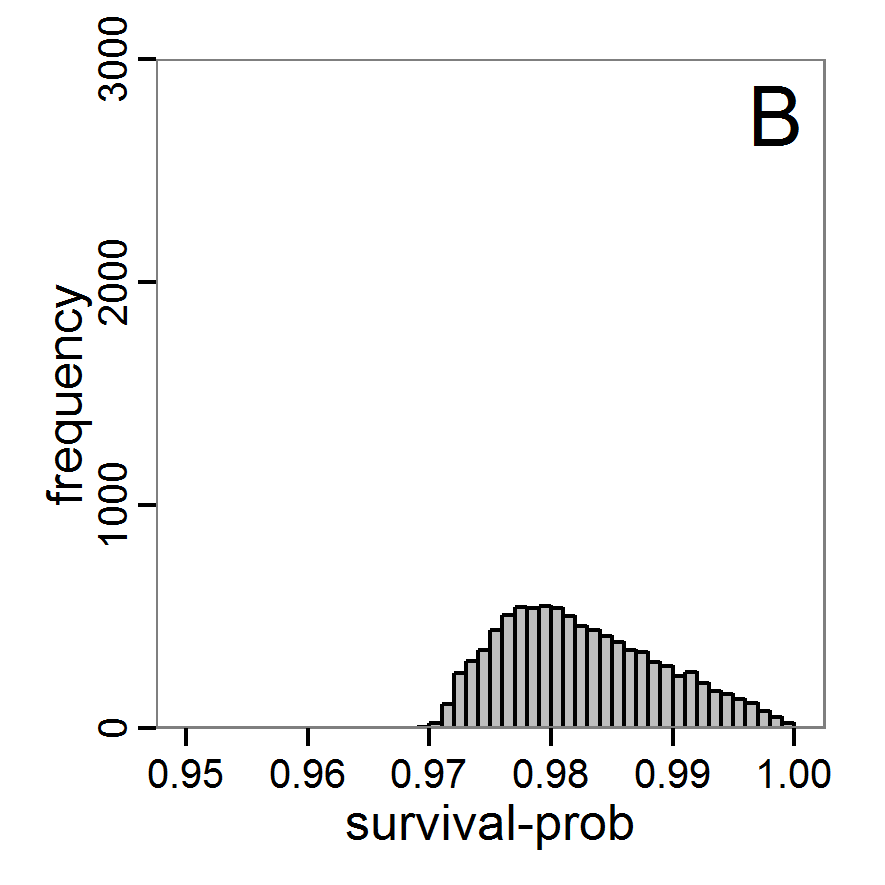

Figure 6. Results of the genetic algorithm method for 10 populations and 50 generations. A: Performance of the cost value (Eq. 4) over the calls of the simulation function (x-axis, truncated at cost value 100, max. cost value was 2923). B: Histogram of the tested parameter values for parameter scout-prob. C: Histogram of the tested parameter values for parameter survival-prob.

- 2.38

- The fitting process using the genalg package with 290 function evaluations resulted in a best cost value of 0.35 with scout-prob of 0.3410 and survival-prob of 0.9763. The performance of the cost value over the model evaluations is shown in the upper panel of Figure 6. We find a very strong oscillation because successive runs are more independent than in the other methods above, e.g., by creating a new population. Therefore, this graph looks much more chaotic, and truncating the vertical axis to zoom into the region of low cost values is less informative in this specific case. As we can see in the lower panels of the figure, a wide range of parameter values has been tested, with slightly higher frequency around the best parameter value for scout-prob and a more diffuse pattern for survival-prob. However, the best parameter combination found is very similar to the one found by the other optimisation methods. In general, it appears that the promising value range of survival-prob is much smaller than that for scout-prob. The values of (sub-) optimal solutions for survival-prob are always close to 0.977, whereas the corresponding value of scout-prob varies on a much wider value range with only a small influence on the cost value. This pattern was already shown in Figure 1 (right panel). For investigating such a pattern in more detail, Bayesian methods can be very helpful and powerful, as presented below.

Bayesian methods

- 2.39

- Classical statistical maximum likelihood estimation for model parameters cannot be applied to complex stochastic simulation models because the likelihood functions are either intractable or computationally too expensive to evaluate (Jabot et al. 2013). By using the Bayesian strategy, the true/posterior probability density functions of parameters are calculated by point-wise likelihood approximations across the parameter space. The basic idea, as described in Jabot et al. (2013), is to run the model a very large number of times with different parameter values drawn from distributions we guess are underlying (prior distributions). Then, the simulation results and the observational data are compared using so-called summary statistics, which are aggregated information calculated from the simulation and the observational data to reduce the dimensionality of the data. Only those parameter values where the difference between the simulated and the observed summary statistics is less than a defined threshold (given by a tolerance rate) are kept. At the end, an approximation of the posterior distribution is formed by the retained parameter values. Such methods, called Approximate Bayesian Computing (ABC), have been increasingly used for simulation models in recent years (e.g., May et al. 2013; Martínez et al. 2011; Sottoriva & Tavaré 2010; and review by Hartig et al. 2011). They not only deliver a best estimate for the parameter values but also provide measures of uncertainty with consideration of correlations among the parameters (Martínez et al. 2011). Introductions to Bayesian statistical inference using ABC can be found in Beaumont (2010), Van Oijen (2008), or Hartig et al. (2011).

- 2.40

- A list of useful R packages around Bayesian inference can be found in Park (2012). The most relevant packages regarding ABC are abc (Csillery et al. 2012), EasyABC (Jabot et al. 2013), pomp (King et al. 2013), FME (Soetaert & Petzoldt 2010) and MCMChybridGP (Fielding 2011). If a specific method is not available in an out-of-box package, there are several R packages that can assist in developing custom implementations, such as the MCMC package (Geyer & Johnson 2013) with its Markov chain Monte Carlo Metropolis-Hastings algorithm or the coda package (Plummer et al. 2006) for the analysis of Markov chain Monte Carlo results. An R interface to the openBUGS software (Lunn et al. 2009) comes with the BRugs package (Thomas et al. 2006) and enables the advanced user to define models and run Bayesian approximations in openBUGS, which is beyond the scope of this paper. MCMC in an ABC framework can also be used to compute some measures of model complexity (Piou et al. 2009). The Pomic package (Piou et al. 2009) is based on an adaptation of the DIC measure (Spiegelhalter et al. 2002) to compare the goodness of fit and complexity of ABMs developed in a POM context.

- 2.41

- Note that Bayesian methods require deeper knowledge and understanding than the other methods presented above to be adapted properly to a specific model. The methods presented above could be understood in principle without previous knowledge, but this is not the case for Bayesian methods. We recommend first reading Wikipedia or other introductions to these methods before trying to use the methods described in this section. Nevertheless, even for beginners, the following provides an overview of the inputs, packages, and typical outputs of Bayesian calibration techniques.

Rejection and regression sampling

- 2.42

- The easiest variant of ABC regarding the sampling scheme is rejection sampling. Here, the user first defines a set of summary statistics, which are used to compare observations with simulations. Furthermore, a tolerance value, which is the proportion of simulation points whose parameter set is accepted, must be selected. Then, parameter sets are drawn from a user-defined prior distribution and tested for acceptance. At the end, the posterior distribution is approximated from the accepted runs (Beaumont et al. 2002).
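The rejection scheme described above can be sketched in a few lines of base R. The one-parameter toy model, the prior range and the observed value are assumptions for illustration only; they are unrelated to the example ABM and to the abc package.

```r
set.seed(123)
# Toy stochastic model (assumption for illustration): the summary statistic
# is the mean of 20 noisy observations around the parameter value.
toy.model <- function(theta) mean(rnorm(20, mean = theta, sd = 1))

n.sim <- 5000
tol <- 0.05           # proportion of simulations to accept
obs.sum.stat <- 4     # hypothetical observed summary statistic

# 1. Draw parameter values from a uniform prior on [0, 10].
prior.draws <- runif(n.sim, min = 0, max = 10)

# 2. Run the model once per draw and record the summary statistic.
sim.sum.stats <- vapply(prior.draws, toy.model, numeric(1))

# 3. Keep the proportion tol of draws whose summary statistic is
#    closest to the observation.
distance <- abs(sim.sum.stats - obs.sum.stat)
accepted <- prior.draws[distance <= quantile(distance, probs = tol)]

# 4. The accepted draws approximate the posterior distribution.
posterior.mean <- mean(accepted)
print(length(accepted))
print(posterior.mean)
```

With several summary statistics, the absolute difference would be replaced by a distance over all of them, typically after scaling each statistic so that none dominates.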

- 2.43

- Such sampling can be performed in R using the package abc (Csillery, Blum et al. 2012) or the package EasyABC (Jabot et al. 2013). The abc package offers two further improvements to the simple rejection method based on Euclidean distances: a local linear regression method and a non-linear regression method based on neural networks. Both add a further step to the approximation of the posterior distribution to correct for imperfect matches between the accepted and observed summary statistics (Csillery, Francois et al. 2012).

- 2.44

- Because the abc package expects random draws from the prior distributions of the parameter space, we must create such an input in a pre-process. For this, we can, for simplicity, reuse the code of the Latin hypercube sampling with separate best-fit measures for all three fitting criteria used as summary statistics (see Eqs. 1–3). Also for simplicity, we apply a non-informative uniform (flat) prior distribution (for further reading see Hartig et al. 2011). As summary statistics, we use the three criteria defined in the model description. We assume that each observed summary statistic is calculated as the mean of the minimum and the maximum of the accepted output value range, i.e., we assume that the value range was gained by two field measurements and we use the mean of these two samples to compare it with the mean of two simulated outputs. The procedure of using the abc function in R (for simple rejection sampling) is as follows:

```r
library(abc)

# 1. Run a Latin hypercube sampling as performed above.
#    The result should be two variables, the first
#    containing the parameter sets (param.sets) and
#    the second containing the corresponding summary
#    statistics (sim.sum.stats).

# 2. Calculate summary statistics from observational data
#    (here: using the mean of value ranges).
obs.sum.stats <- c(abundance=mean(c(115,135)),
                   variation=mean(c(10,15)),
                   vacancy=mean(c(0.15,0.3)))

# 3. Run ABC using observations summary statistics and the
#    input and output of simulations from LHS in step 1.
results.abc <- abc(target=obs.sum.stats, param=param.sets,
                   sumstat=sim.sum.stats,
                   tol=0.3, method="rejection")
```

- 2.45

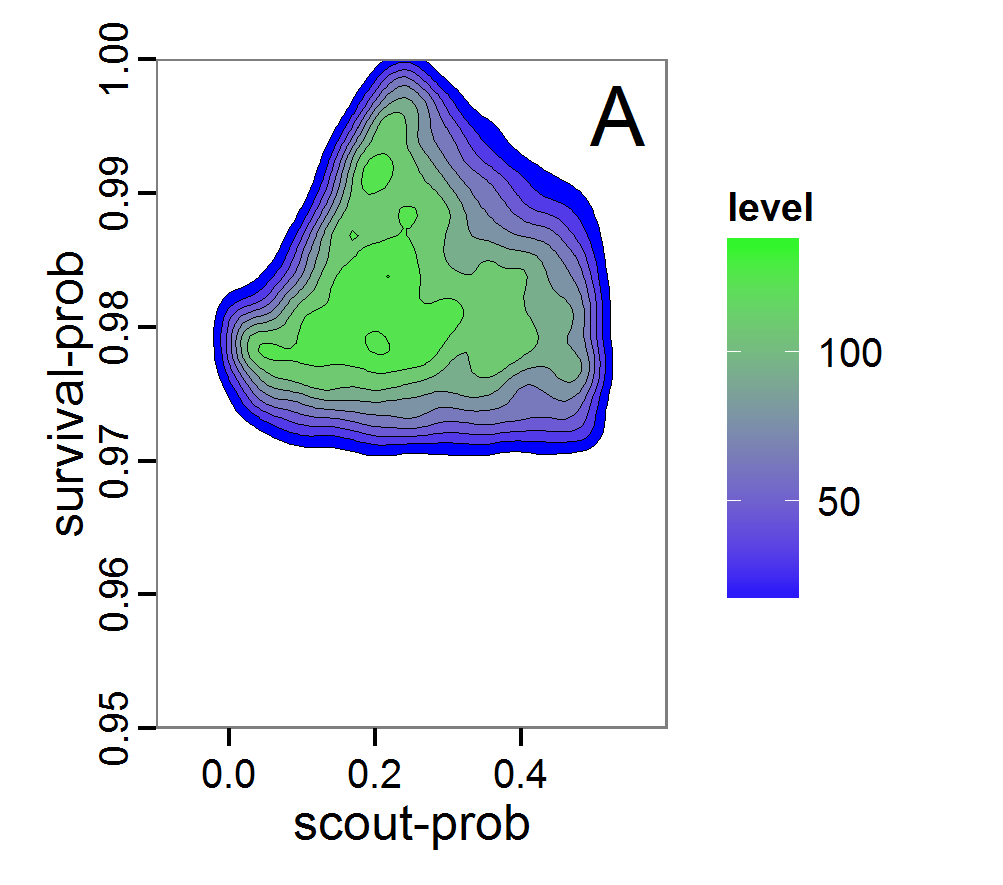

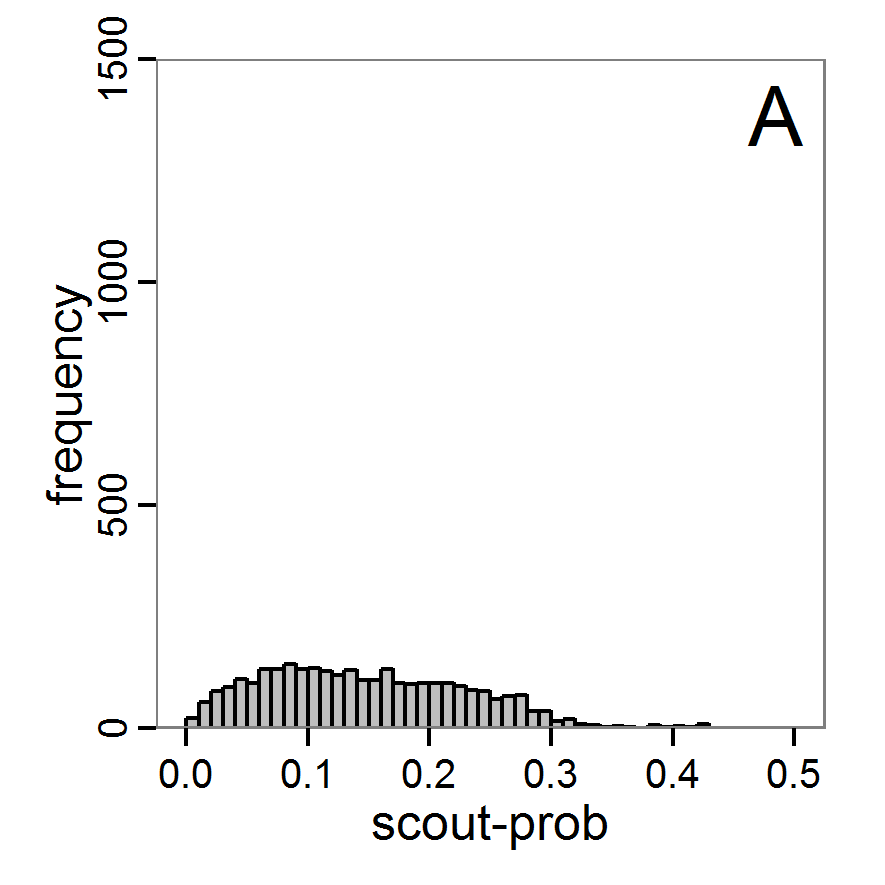

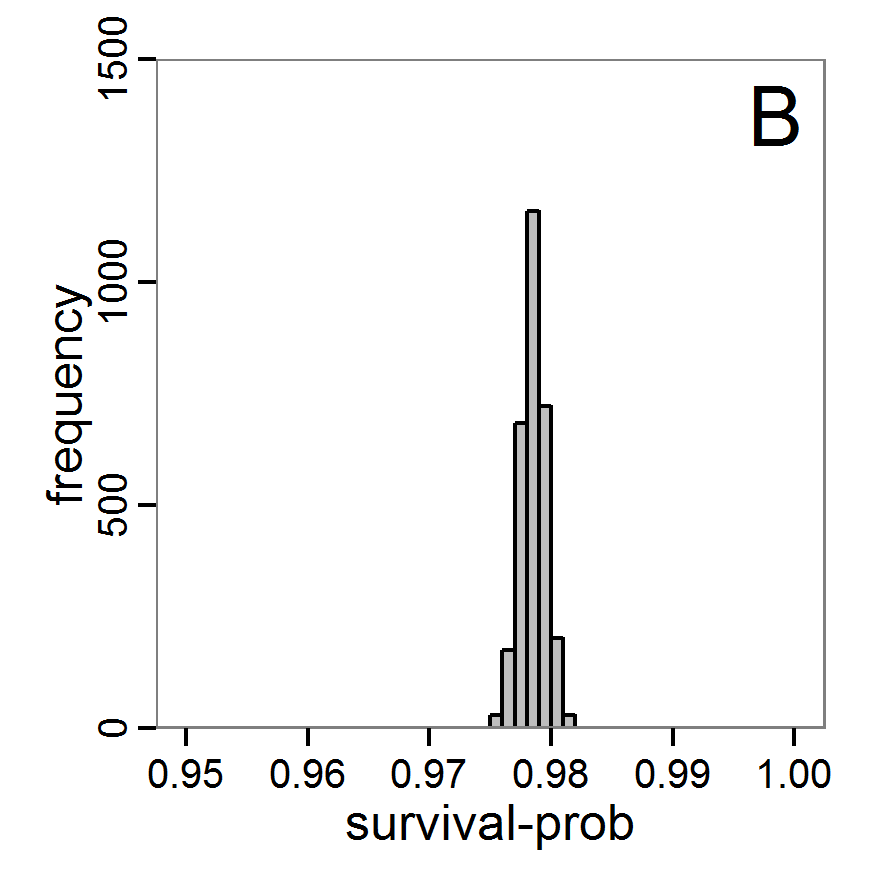

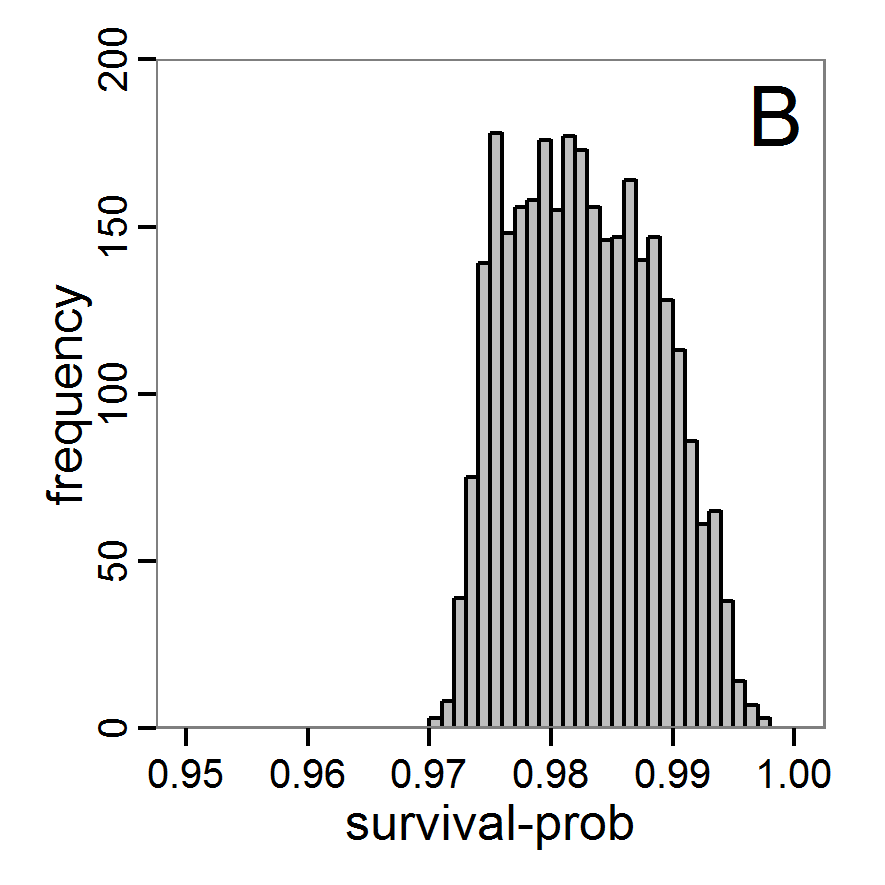

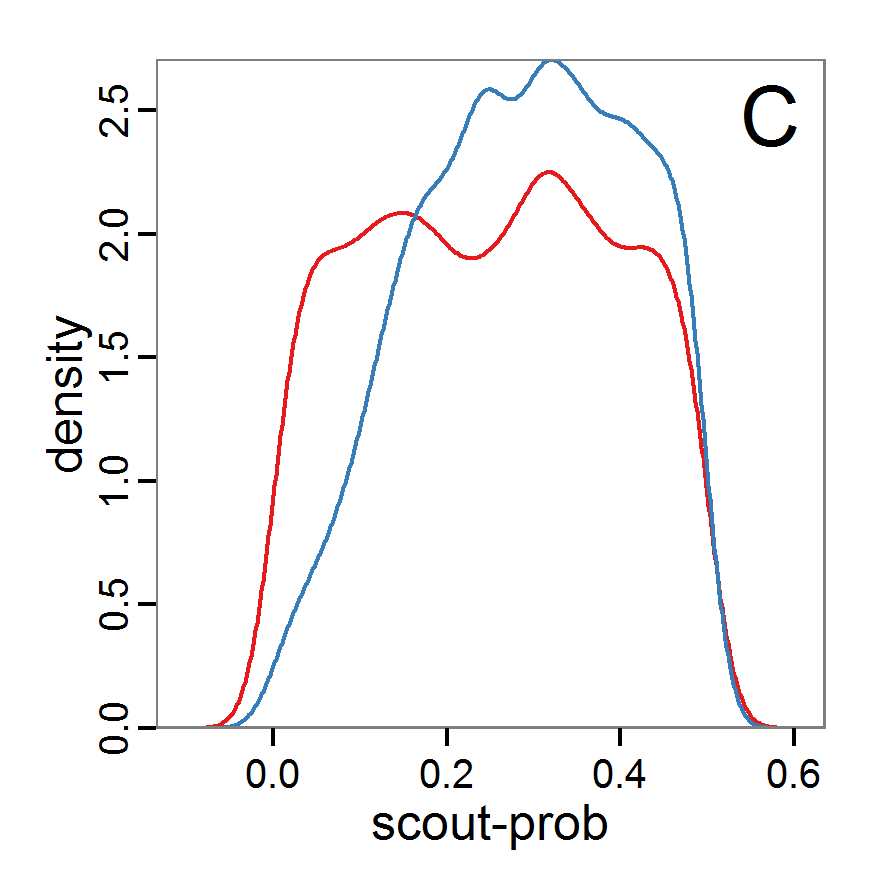

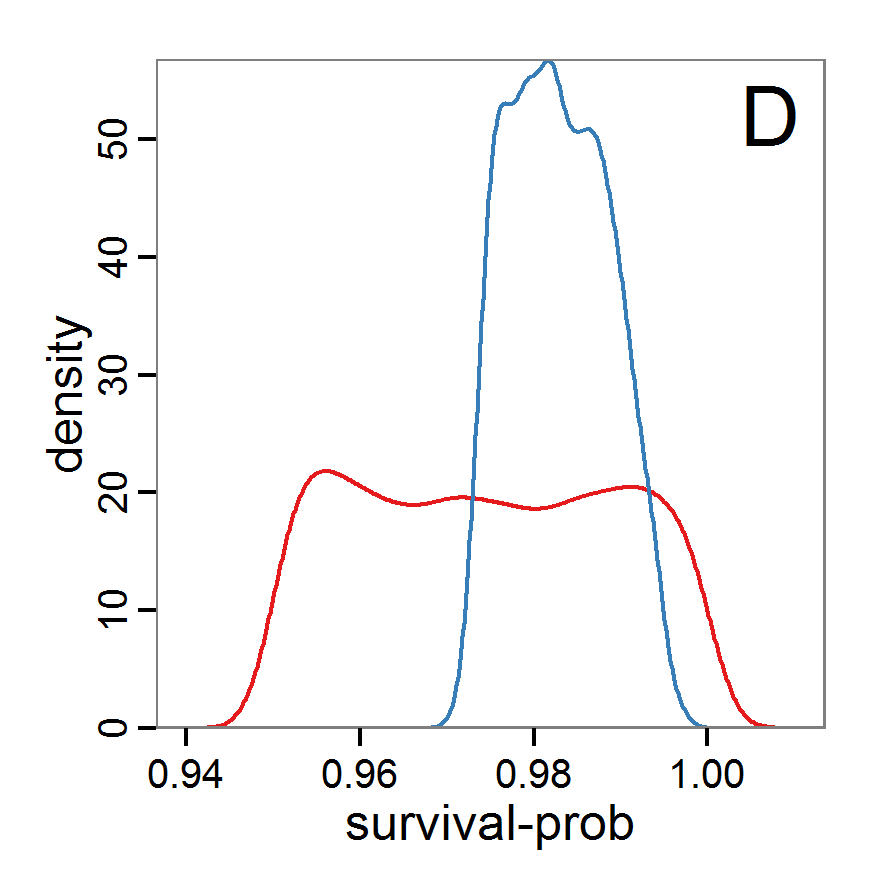

- The results, i.e., the accepted runs that form the posterior distribution of simple rejection sampling and of the local linear regression method, can be displayed using histograms, as presented in Figure 7 (upper and middle panels). These results are based on Latin hypercube sampling with 30,000 samples and a tolerance rate of 30 per cent, which defines the percentage of accepted simulations. The histograms can be used to estimate the form of the probability density function (kernel density), which is shown in the lower panels of Figure 7. These density estimations can be taken subsequently to gain distribution characteristics for the two input parameters, as shown in Table 2. Afterwards, the density estimations can be used to run the model not only with the mean or median of the parameter estimation but also for an upper and lower confidence value, which would result not only in one model output but in confidence bands for the model output. See Martínez et al. (2011) for an example.
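Distribution characteristics of the kind just described can be derived from a vector of accepted parameter values with base R functions. In the sketch below the accepted values are synthetic (drawn from a rescaled beta distribution purely for illustration) and stand in for the output of an ABC run.

```r
set.seed(7)
# Hypothetical accepted parameter values within [0, 0.5]
# (stand-in for the accepted runs of an ABC analysis).
accepted <- rbeta(2000, shape1 = 4, shape2 = 6) * 0.5

# Kernel density estimate; the location of its peak estimates the mode.
dens <- density(accepted)
posterior.mode <- dens$x[which.max(dens$y)]

# Distribution characteristics in the order minimum, 5% percentile,
# median, mean, mode, 95% percentile, maximum.
posterior.summary <- c(
  minimum = min(accepted),
  quantile(accepted, probs = 0.05),
  median = median(accepted),
  mean = mean(accepted),
  mode = posterior.mode,
  quantile(accepted, probs = 0.95),
  maximum = max(accepted))
print(round(posterior.summary, 4))
```

The 5% and 95% percentiles are the values one would feed back into the model, alongside the median or mean, to obtain a band of model outputs rather than a single prediction.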

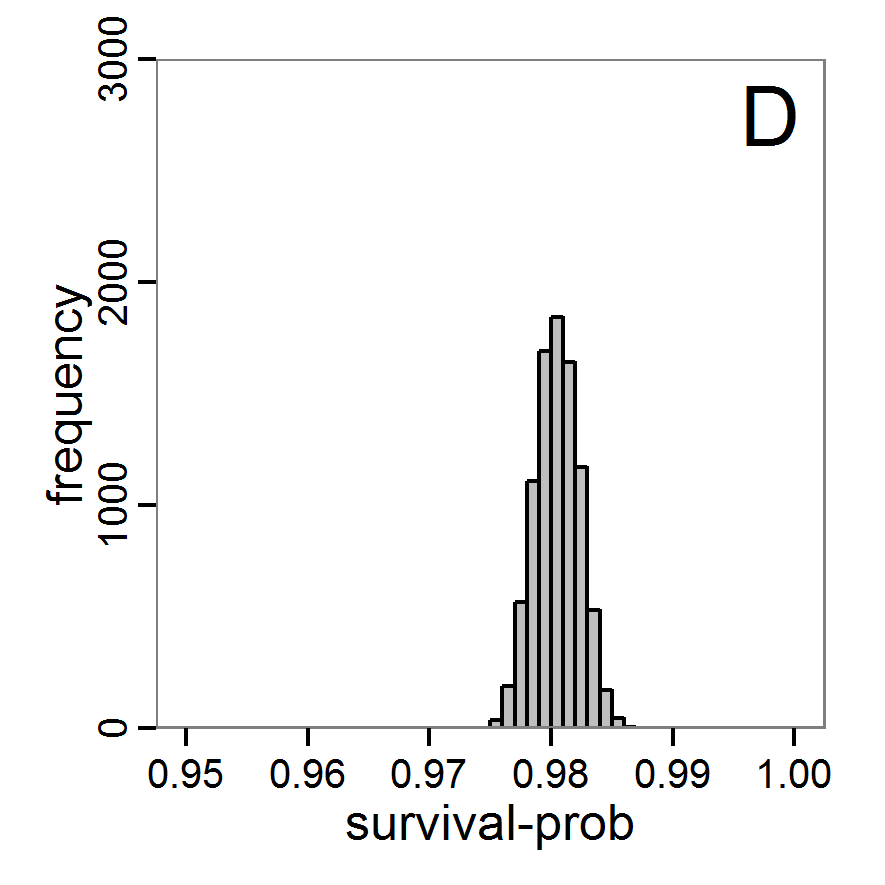

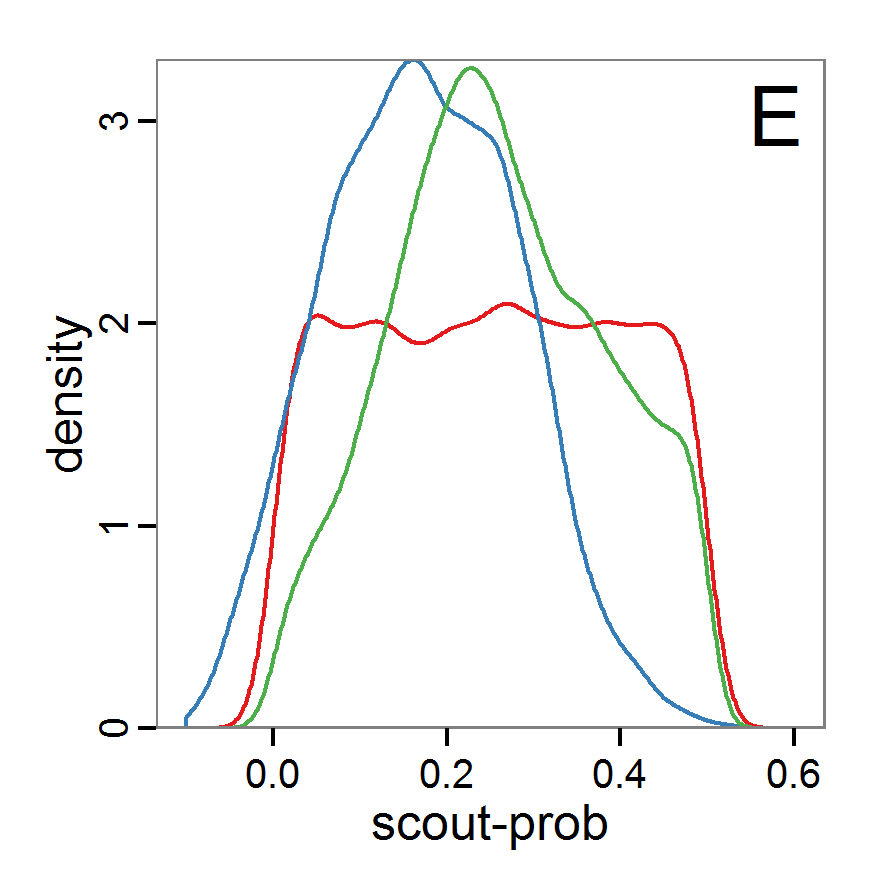

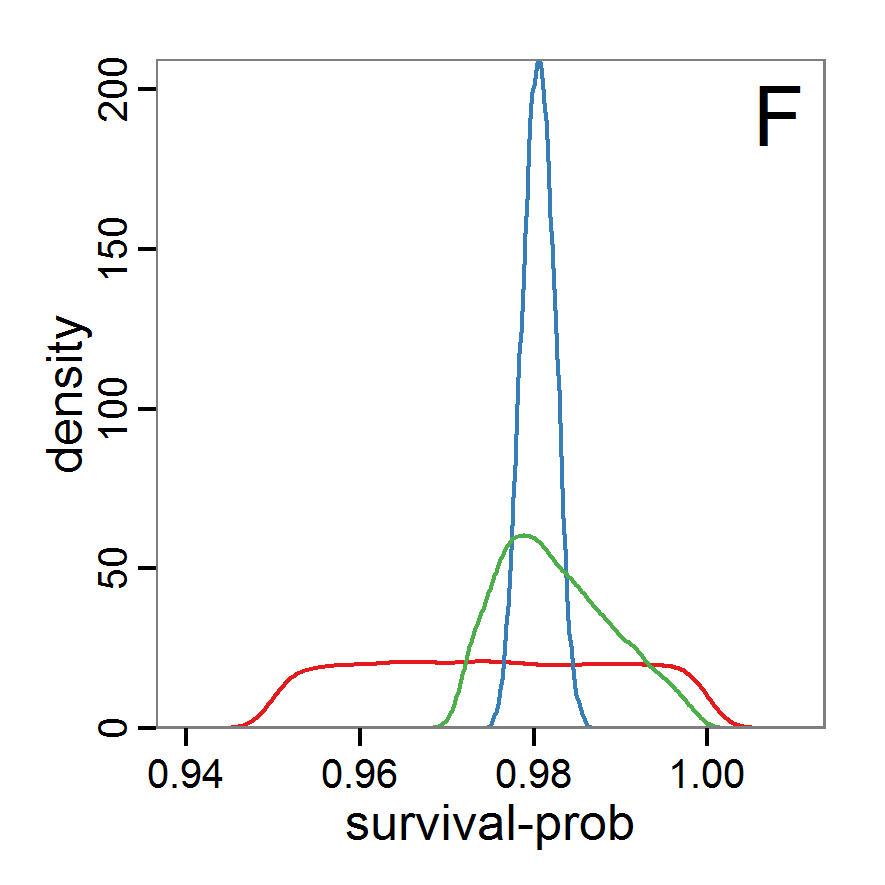

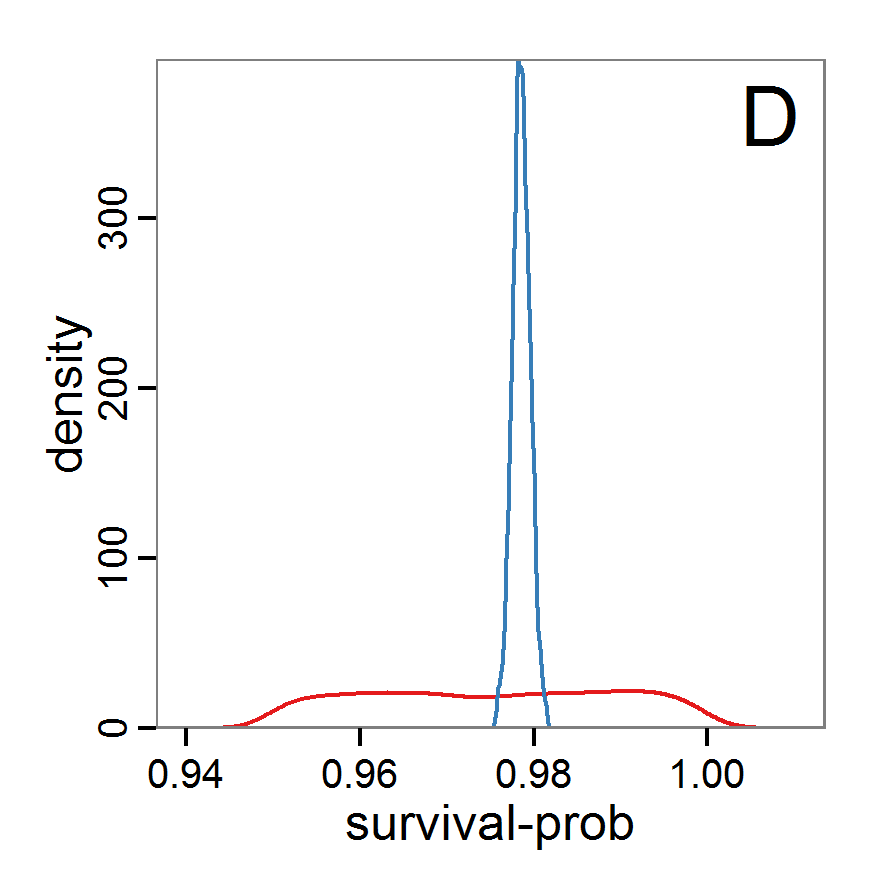

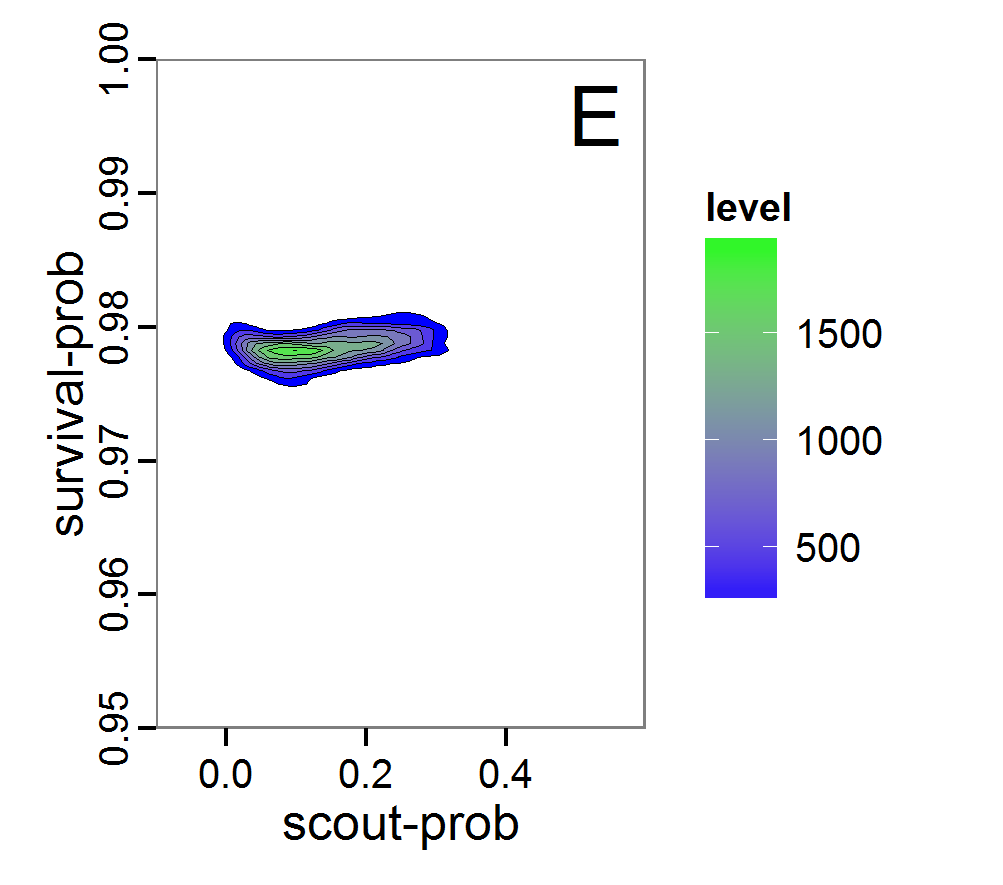

Figure 7. Posterior distribution generated with the ABC rejection sampling method as well as the rejection sampling method followed by additional local linear regression. A: Histogram of accepted runs for scout-prob using rejection sampling. B: Histogram of accepted runs for survival-prob using rejection sampling. C: Histogram of accepted runs for scout-prob using the local linear regression method. D: Histogram of accepted runs for survival-prob using the local linear regression method. E & F: Density estimation for scout-prob (E) and survival-prob (F) by rejection sampling (green line), by the local linear regression method (blue line) and from a prior distribution (red line).

- 2.46

- We see that there are considerable differences in results between the simple rejection sampling and the rejection sampling with local linear regression correction. For example, the mean value of scout-prob is much lower with the regression method than with the simple rejection method. Furthermore, the posterior distribution of survival-prob is narrower with the regression method than with the simple rejection method.

Table 2: Posterior distribution characteristics for the two parameters gained from the ABC rejection sampling (first and third columns) and the local linear regression method (second and fourth columns; weighted).

| | scout-prob (rej. sampl.) | scout-prob (loc. lin. reg.) | survival-prob (rej. sampl.) | survival-prob (loc. lin. reg.) |
|---|---|---|---|---|
| Minimum | 0.0003 | -0.1151 | 0.9695 | 0.9746 |
| 5% percentile | 0.0622 | -0.0185 | 0.9733 | 0.9774 |
| Median | 0.2519 | 0.1423 | 0.9817 | 0.9803 |
| Mean | 0.2596 | 0.1485 | 0.9826 | 0.9803 |
| Mode | 0.2270 | 0.0793 | 0.9788 | 0.9805 |
| 95% percentile | 0.4666 | 0.3296 | 0.9946 | 0.9832 |
| Maximum | 0.5000 | 0.5261 | 0.9999 | 0.9863 |

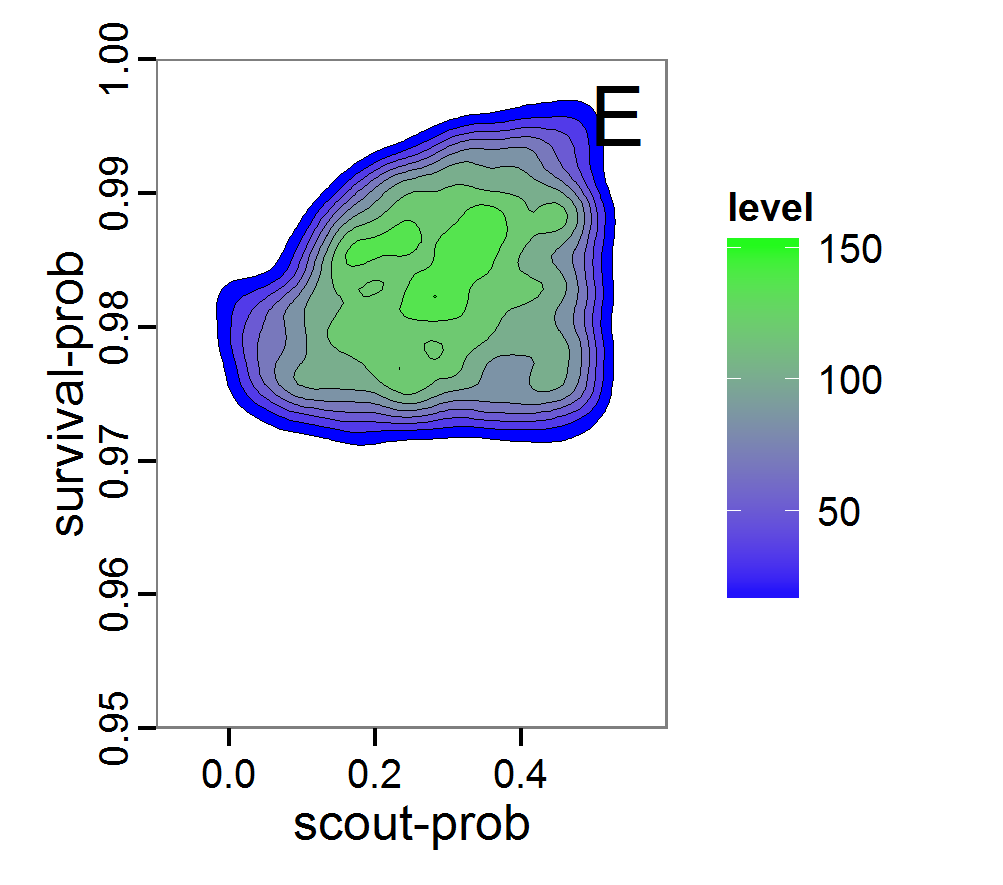

Figure 8. Joint posterior density estimation based on A: ABC rejection sampling, B: rejection sampling with additional local linear regression. Both with 10–90% highest density contours. The last contour is invisible without zooming in, meaning high density is concentrated in a small area.

- 2.47

- Looking at the joint posterior density in Figure 8, we see how the densities of the two parameters are related to each other. Not surprisingly, we see strong differences for the two methods, as we already know from Figure 7 that the distributions differ. However, we also see that the additional local linear regression strongly condenses the distribution and uncovers a linear correlation between the two parameters. A Spearman correlation test between the two parameter samples delivers a ρ of 0.66 (p-value < 2.2e-16) for the method with the additional local linear regression, whereas ρ is only 0.02 (p-value = 0.11) for the simple rejection sampling. This result for the method with the additional local linear regression is in good accordance with the results of the following method and has many similarities to the pattern we know from the results of the full factorial design (Figure 1, right panel).
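A Spearman correlation test of this kind is available in base R through `cor.test`. The sketch below applies it to synthetic samples, which are assumptions for illustration and not the posterior samples of the example model: one pair is constructed to be correlated, the other to be independent.

```r
set.seed(11)
# Hypothetical posterior samples (illustrative only):
# b depends linearly on a plus noise, c.indep is independent of a.
a <- runif(500, 0.0, 0.5)
b <- 0.97 + 0.02 * a + rnorm(500, sd = 0.002)
c.indep <- runif(500, 0.95, 1.0)

test.dependent <- cor.test(a, b, method = "spearman")
test.independent <- cor.test(a, c.indep, method = "spearman")

# Spearman's rho and the p-value for each pair of samples.
print(c(rho = unname(test.dependent$estimate), p = test.dependent$p.value))
print(c(rho = unname(test.independent$estimate), p = test.independent$p.value))
```

Being rank-based, the test also detects monotonic but non-linear relationships between parameters, which is convenient when the shape of the joint posterior is not known in advance.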

- 2.48

- Because the application of the additional local linear regression method is based on the same simulation results as the simple rejection sampling, it comes with no additional computational costs. Therefore, it is a good idea to run both methods and check whether their results converge.
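
To illustrate why the regression correction needs no extra simulations, here is a minimal base-R sketch on a hypothetical one-parameter toy model (`toy.sim`, `s.obs` and the 20% acceptance rate are assumptions made for this example, not the settings used above). The correction follows the general idea of a weighted local linear regression on the accepted runs; it is a sketch, not the implementation of any particular package.

```r
set.seed(1)

# Hypothetical toy model: one parameter, one noisy summary statistic.
toy.sim <- function(theta) theta + rnorm(length(theta), sd = 0.1)

n.sim <- 10000
theta <- runif(n.sim, 0, 1)   # draws from a uniform prior
s.sim <- toy.sim(theta)       # simulated summary statistics
s.obs <- 0.3                  # made-up "observed" summary statistic

# 1. Simple rejection: keep the 20% of runs closest to the observation.
d        <- abs(s.sim - s.obs)
delta    <- unname(quantile(d, 0.20))
keep     <- d <= delta
post.rej <- theta[keep]

# 2. Local linear regression correction, reusing the accepted runs.
#    Runs closer to the observation get larger (Epanechnikov) weights.
acc <- data.frame(th = post.rej,
                  s  = s.sim[keep],
                  w  = 1 - (d[keep] / delta)^2)
fit      <- lm(th ~ s, data = acc, weights = w)
slope    <- coef(fit)[["s"]]
post.reg <- acc$th - slope * (acc$s - s.obs)  # shift each run towards s.obs

print(c(sd.rejection = sd(post.rej), sd.regression = sd(post.reg)))
```

Note that the adjusted values are not constrained to the prior range, which is one plausible reason why a regression-corrected sample can contain values outside the prior bounds.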

Markov chain Monte Carlo

- 2.49

- Markov chain Monte Carlo (MCMC) is an efficient sampling method where the selection of the next parameter combination depends on the last parameter set and the resulting deviation between the simulation and the observation (Hartig et al. 2011). Therefore, sampling is concentrated in the regions with high likelihood. This makes the method more efficient in comparison with rejection sampling. Only the initial parameter set is drawn from the prior distribution. In the long run, the chain of parameter sets will converge to the posterior distribution. The advantage of MCMC methods over rejection sampling methods is that, apart from the initial parameter set, they do not sample from the prior distribution. See, for example, Beaumont (2010) for further reading.
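
The basic mechanism can be sketched in a few lines of base R. The example below is a simplified likelihood-free MCMC sampler for a hypothetical one-parameter toy model with a uniform prior and a symmetric random-walk proposal; `toy.sim`, `s.obs`, `eps` and `step.sd` are assumptions chosen for illustration. Under these assumptions, a proposal is accepted whenever it lies inside the prior range and its simulated summary statistic is within the tolerance.

```r
set.seed(1)

# Hypothetical toy model: one parameter, one noisy summary statistic.
toy.sim <- function(theta) theta + rnorm(1, sd = 0.1)

s.obs    <- 0.3    # made-up "observed" summary statistic
prior.lo <- 0      # bounds of the uniform prior
prior.hi <- 1
eps      <- 0.05   # tolerance for |simulated - observed|
step.sd  <- 0.1    # standard deviation of the random-walk proposal
n.iter   <- 5000

# Only the starting point is drawn from the prior (redrawn until it
# produces a simulation within the tolerance).
repeat {
  current <- runif(1, prior.lo, prior.hi)
  if (abs(toy.sim(current) - s.obs) <= eps) break
}

chain <- numeric(n.iter)
for (i in seq_len(n.iter)) {
  # Propose the next parameter value near the current one.
  proposal <- current + rnorm(1, sd = step.sd)
  inside   <- proposal >= prior.lo && proposal <= prior.hi
  # With a uniform prior and a symmetric proposal, the acceptance rule
  # reduces to: inside the prior range AND simulation close enough.
  if (inside && abs(toy.sim(proposal) - s.obs) <= eps) {
    current <- proposal
  }
  chain[i] <- current   # rejected proposals repeat the current value
}

print(summary(chain))
```

In practice one would discard an initial burn-in phase and thin the chain before interpreting it as a posterior sample.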

- 2.50

- The R package EasyABC (Jabot et al. 2013) delivers several different algorithms for performing coupled ABC-MCMC schemes. The usage in R looks like this:

```r
library(EasyABC)

# 1. Define a function that runs the simulation model for a
#    given parameter combination and returns all summary
#    statistics.
#    See Supplementary Material (simulation_function4.R)
#    for an implementation example using RNetLogo.
sim <- function(params) {
  ...
  return(sim.sum.stats)
}

# 2. Calculate summary statistics from observational data
#    (here using the mean of ranges).
obs.sum.stats <- c(abundance=mean(c(115,135)),
                   variation=mean(c(10,15)),
                   vacancy=mean(c(0.15,0.3)))

# 3. Generate prior information.
prior <- list('scout-prob'=c("unif",0.0,0.5),
              'survival-prob'=c("unif",0.95,1.0))

# 4. Run ABC-MCMC.
results.MCMC <- ABC_mcmc(method="Marjoram", model=sim,
                         prior=prior, summary_stat_target=obs.sum.stats)
```

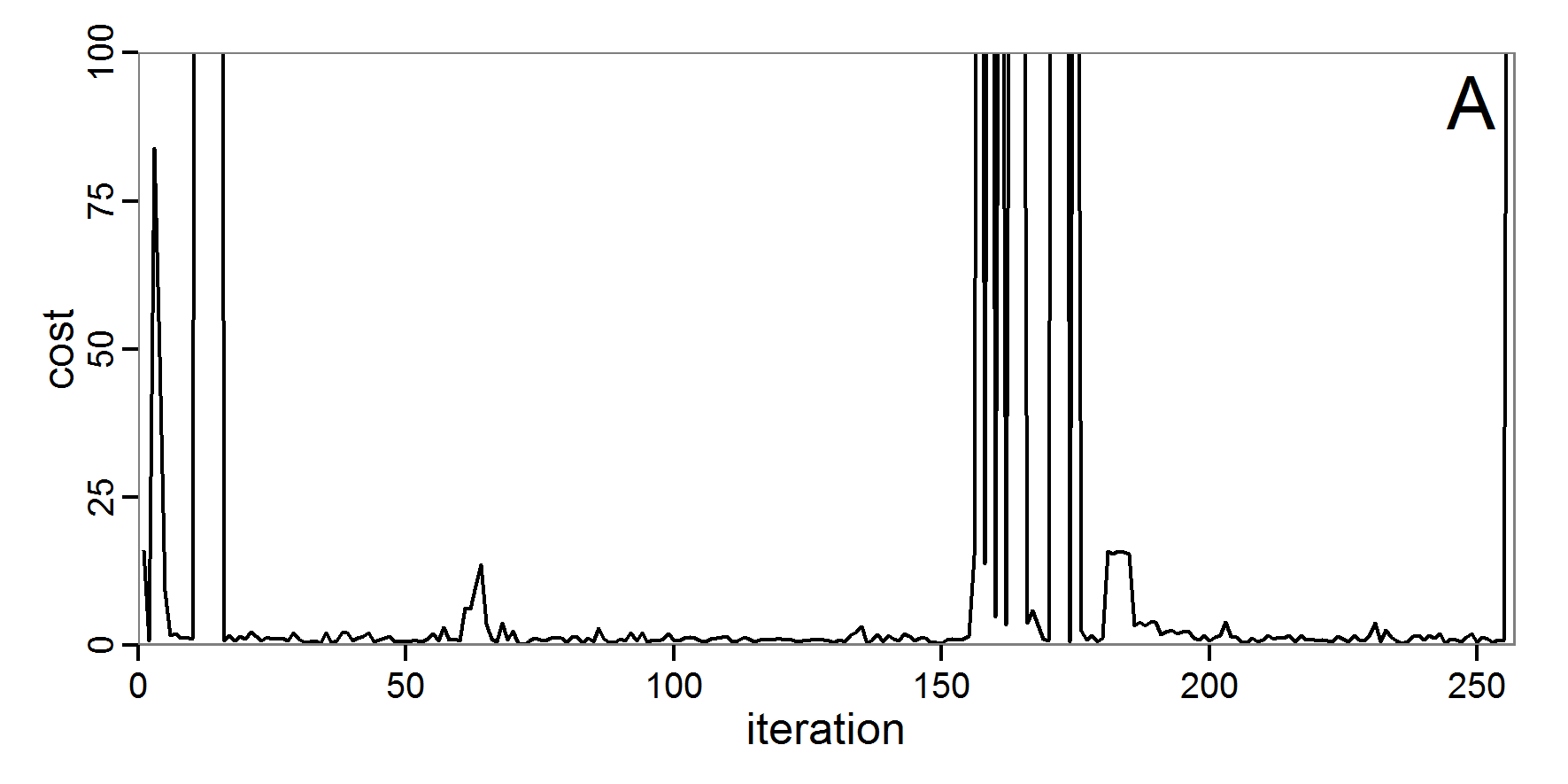

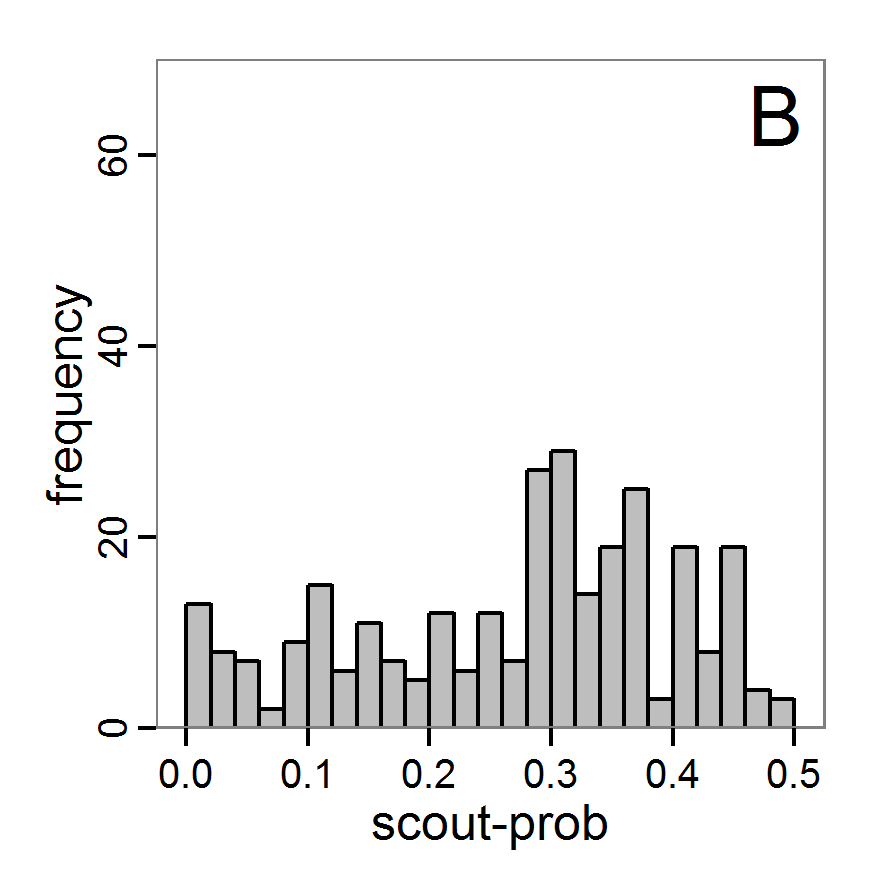

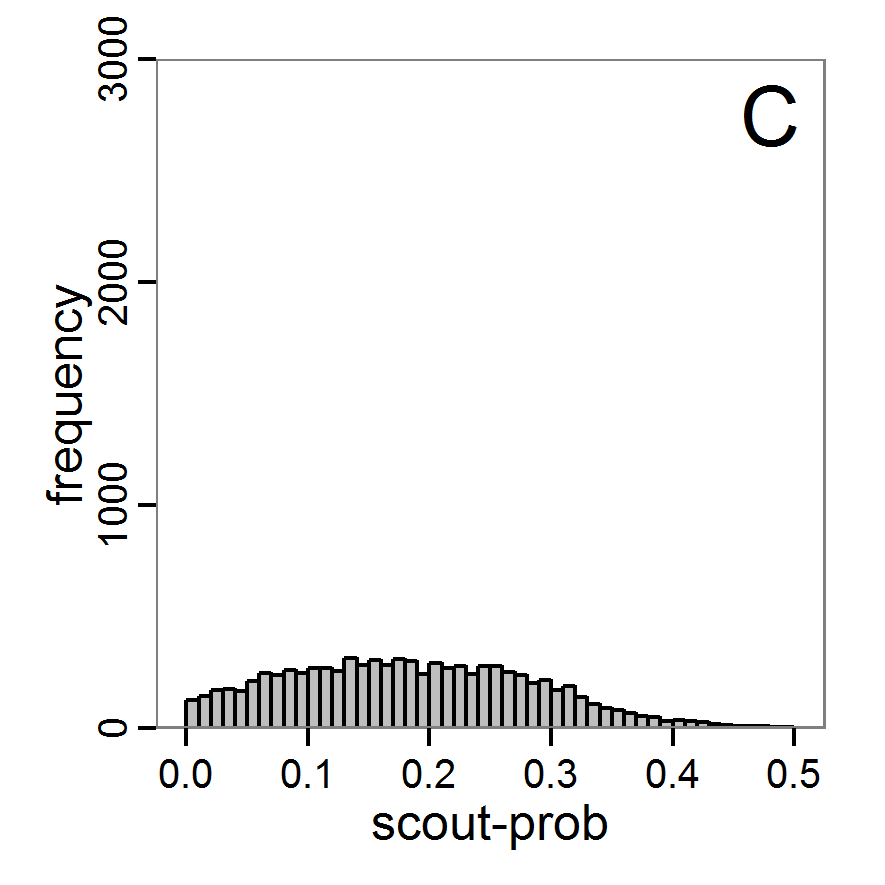

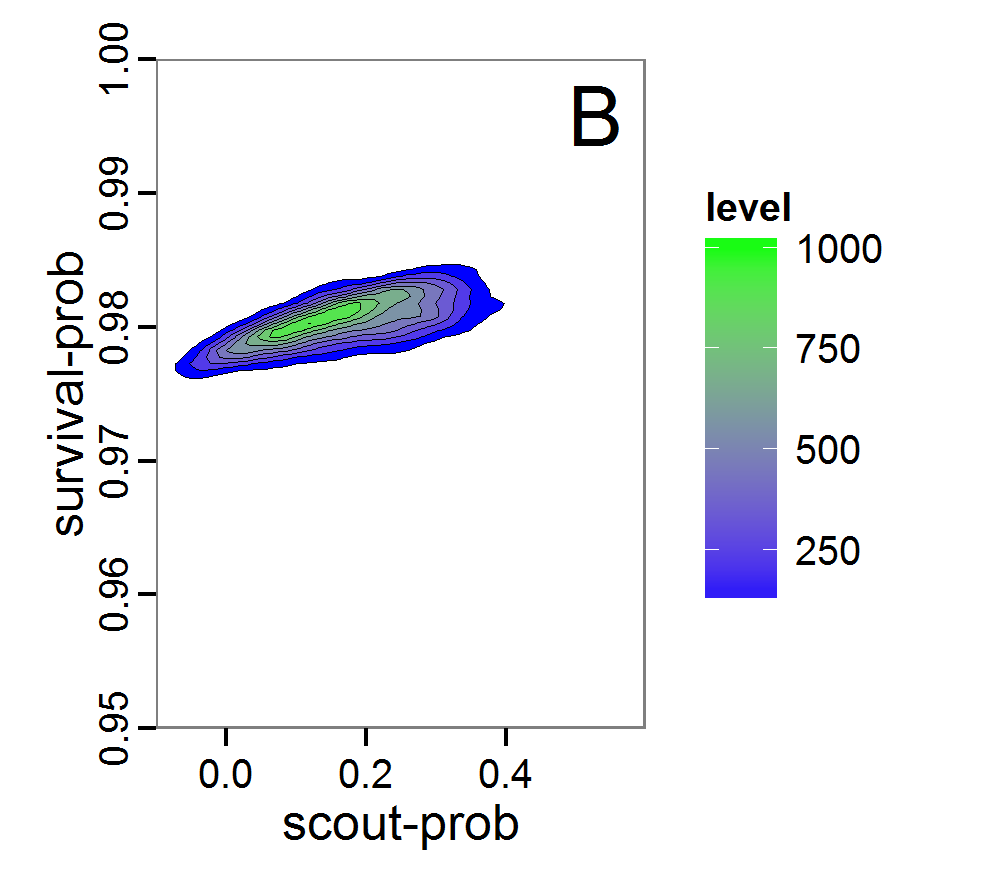

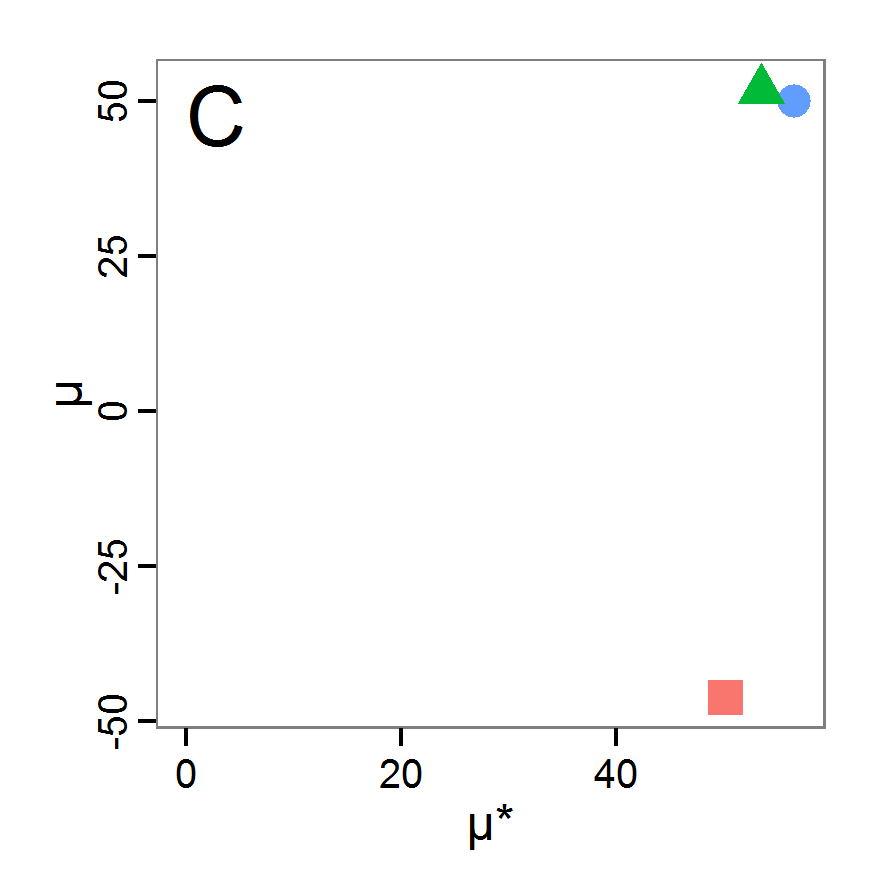

Figure 9. Posterior distribution generated with ABC-MCMC. A: Histogram of accepted runs for scout-prob. B: Histogram of accepted runs for survival-prob. C & D: Density estimation for scout-prob (C) and survival-prob (D) by MCMC sampling (blue line) and from the prior distribution (red line). E: Joint posterior density estimation of scout-prob and survival-prob by MCMC sampling with 10–90% highest density contours.

- 2.51

- The results of applying the ABC-MCMC scheme to the example model with a total of 39,991 function calls and 3,000 samples in the posterior distribution are prepared in the same manner as the results of the rejection method and are shown in Figure 9. The results are very similar to those of the rejection sampling method with local linear regression correction but with even narrower posterior distributions. The numerical characteristics of the posterior distributions are processed using the package coda (Plummer et al. 2006) and are given in Table 3.
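
The characteristics reported in the posterior tables (minimum, percentiles, median, mean, mode, maximum) can also be computed with base R alone. The helper below, `posterior.summary`, is a hypothetical name introduced for this sketch; taking the mode as the peak of a kernel density estimate is an assumption and not necessarily how the tabulated modes were obtained. The input sample is synthetic.

```r
# Summarise a vector of posterior samples for one parameter.
posterior.summary <- function(x) {
  dens <- density(x)  # kernel density estimate, used only for the mode
  c("Minimum"        = min(x),
    "5% percentile"  = unname(quantile(x, 0.05)),
    "Median"         = median(x),
    "Mean"           = mean(x),
    "Mode"           = dens$x[which.max(dens$y)],
    "95% percentile" = unname(quantile(x, 0.95)),
    "Maximum"        = max(x))
}

# Synthetic stand-in for a posterior sample (NOT the paper's results).
set.seed(7)
samples <- rbeta(3000, 2, 8)

post.stats <- posterior.summary(samples)
print(round(post.stats, 4))
```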

- 2.52

- The ABC-MCMC scheme is more efficient than rejection sampling, but there are many more fine-tuning possibilities, which can also make its use more complicated.

Table 3: Posterior distribution characteristics for the two parameters gained from the ABC-MCMC algorithm.

| | scout-prob | survival-prob |
| --- | --- | --- |
| Minimum | 0.0034 | 0.9758 |
| 5% percentile | 0.0280 | 0.9769 |
| Median | 0.1392 | 0.9785 |
| Mean | 0.1474 | 0.9785 |
| Mode | 0.0863 | 0.9758 |
| 95% percentile | 0.2840 | 0.9802 |
| Maximum | 0.4296 | 0.9817 |

Sequential Monte Carlo

- 2.53

- Like ABC-MCMC, a sequential Monte Carlo (SMC) method is used to concentrate the simulations in the zones of the parameter space with high likelihood (Jabot et al. 2013), i.e., to make the sampling more efficient compared to the rejection method. In contrast to MCMC, each step contains not only one parameter set but a sequence of sets (also called a particle or population). A sequence depends on its predecessor, but the simulations within a sequence are independent. The first sequence contains points from the prior distribution and performs a classical rejection algorithm. The successive sequences are then concentrated on those points of the former sequence with the highest likelihood, i.e., points that are nearest to the observed data (Jabot et al. 2013). Therefore, the sequences converge to the posterior distribution based on independent samples, in contrast to ABC-MCMC. The risk of getting stuck in areas of parameter space that share little support with the posterior distribution is lower than in ABC-MCMC (Hartig et al. 2011). For further reading see Hartig et al. (2011), Jabot et al. (2013) and references therein.
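
The sequence-by-sequence logic can be sketched in base R as follows. This is a simplified population Monte Carlo style sampler for a hypothetical one-parameter toy model; `toy.sim`, `s.obs`, the tolerance sequence and the population size are assumptions for illustration, and the sketch is not meant to reproduce any specific package implementation.

```r
set.seed(1)

# Hypothetical toy model: one parameter, one noisy summary statistic.
toy.sim <- function(theta) theta + rnorm(1, sd = 0.1)

s.obs     <- 0.3                 # made-up "observed" summary statistic
tolerance <- c(0.5, 0.2, 0.05)   # decreasing tolerance, one per sequence
n.part    <- 500                 # parameter sets per sequence

# Sequence 1: classical rejection sampling from the uniform prior U(0, 1).
particles <- numeric(n.part)
for (i in seq_len(n.part)) {
  repeat {
    cand <- runif(1, 0, 1)
    if (abs(toy.sim(cand) - s.obs) <= tolerance[1]) break
  }
  particles[i] <- cand
}
weights <- rep(1 / n.part, n.part)

# Later sequences: resample from the previous sequence, perturb, and
# accept under the next (smaller) tolerance.
for (eps in tolerance[-1]) {
  mu  <- sum(weights * particles)
  tau <- sqrt(2 * sum(weights * (particles - mu)^2))  # perturbation sd
  new.particles <- numeric(n.part)
  new.weights   <- numeric(n.part)
  for (i in seq_len(n.part)) {
    repeat {
      cand <- sample(particles, 1, prob = weights) + rnorm(1, sd = tau)
      if (cand < 0 || cand > 1) next          # outside the prior range
      if (abs(toy.sim(cand) - s.obs) <= eps) break
    }
    new.particles[i] <- cand
    # Importance weight: prior density over the mixture proposal density.
    new.weights[i] <- dunif(cand, 0, 1) /
      sum(weights * dnorm(cand, mean = particles, sd = tau))
  }
  particles <- new.particles
  weights   <- new.weights / sum(new.weights)
}

post.mean <- sum(weights * particles)
print(post.mean)
```

Because the simulations within one sequence do not depend on each other, the inner loop is the natural place for parallelisation.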

- 2.54

- The EasyABC package (Jabot et al. 2013) for R delivers four variants of SMC. The usage is very similar to the application of ABC-MCMC:

```r
library(EasyABC)

# 1. Define a simulation function (sim) as done for the
#    ABC-MCMC method.

# 2. Generate observational summary statistics
#    (using the mean of ranges).
obs.sum.stats <- c(abundance=mean(c(115,135)),
                   variation=mean(c(10,15)),
                   vacancy=mean(c(0.15,0.3)))

# 3. Generate prior information.
prior <- list('scout-prob'=c("unif",0.0,0.5),
              'survival-prob'=c("unif",0.95,1.0))

# 4. Define a sequence of decreasing tolerance thresholds for
#    the accepted (normalised) difference between simulated and
#    observed summary statistics (in case of multiple summary
#    statistics, like here, the deviations are summed and
#    compared to the threshold); one value for each step, first
#    value for the classical rejection algorithm, last value for
#    the max. final difference.
tolerance <- c(1.5,0.5)

# 5. Run SMC.
results.SMC <- ABC_sequential(method="Beaumont", model=sim,
                              prior=prior, summary_stat_target=obs.sum.stats,
                              tolerance_tab=tolerance, nb_simul=20)
```

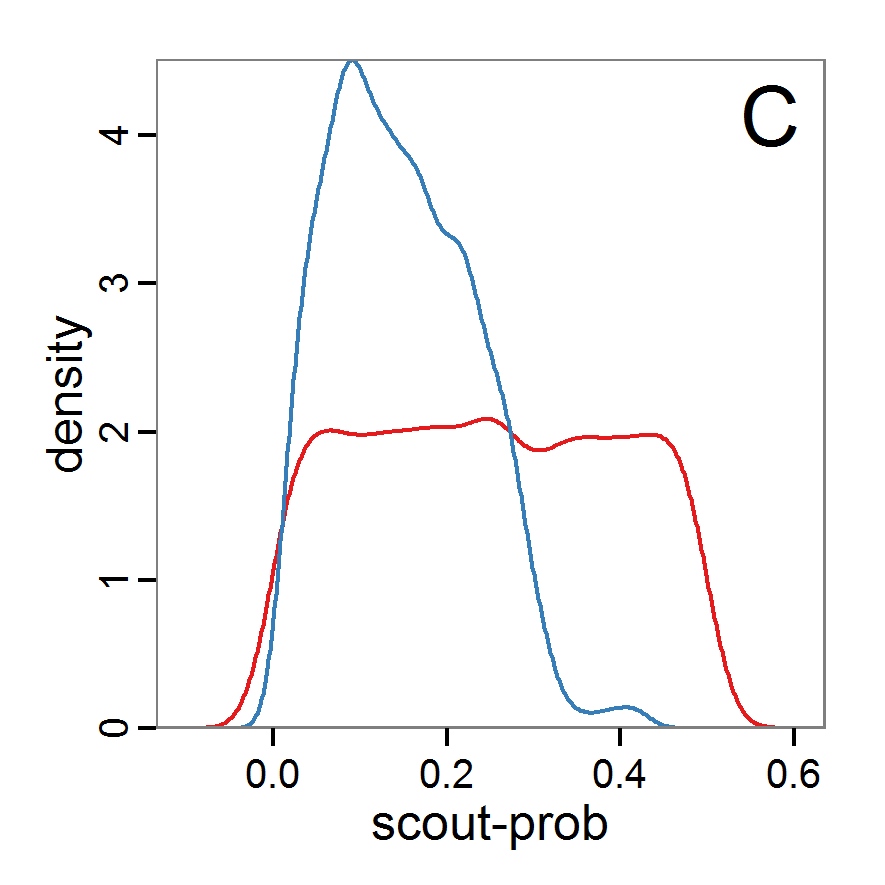

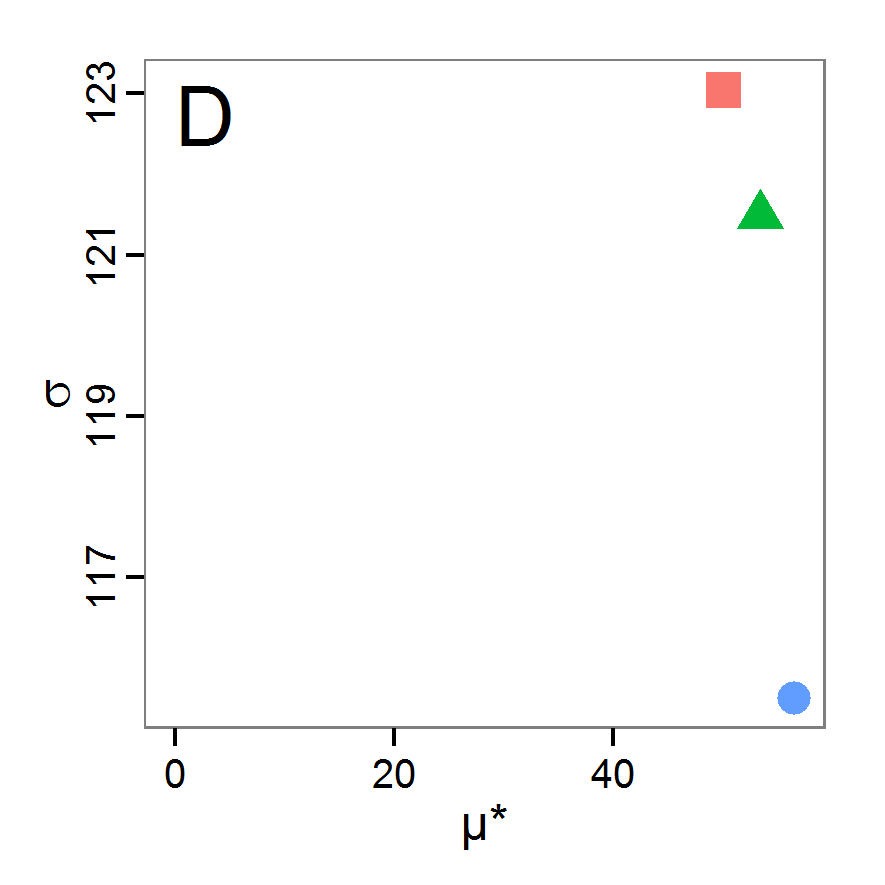

Figure 10. Posterior distribution generated with ABC-SMC. A: Histogram of accepted runs for scout-prob. B: Histogram of accepted runs for survival-prob. C & D: Density estimation for scout-prob (C) and survival-prob (D) by SMC sampling (blue line) and from the prior distribution (red line). E: Joint posterior density estimations of scout-prob and survival-prob by SMC sampling with 10–90% highest density contours.

- 2.55

- The results of an application of the ABC-SMC scheme to the example model, prepared in the same manner as for the other ABC schemes, are given in Figure 10 and Table 4. They are based on 11,359 function calls and 3,000 retained samples for the posterior distribution. The distributions share some similarities with the resulting posterior distributions of the other ABC schemes regarding the value range but have different shapes. The posterior distribution of scout-prob does not have its peak at the very small values and is not that different from the prior distribution. The posterior distribution of survival-prob is also broader than with the other schemes. These differences from the other schemes could be the result of the lower sample size, differences in the methodologies, and missing fine-tuning. The multiple fine-tuning options in particular make this method complex for satisfactory application.

Table 4: Posterior distribution characteristics for the two parameters gained from the ABC-SMC algorithm.

| | scout-prob | survival-prob |
| --- | --- | --- |
| Minimum | 0.0003 | 0.9700 |
| 5% percentile | 0.0785 | 0.9742 |
| Median | 0.2968 | 0.9825 |
| Mean | 0.2852 | 0.9826 |
| Mode | 0.0003 | 0.9700 |
| 95% percentile | 0.4741 | 0.9925 |
| Maximum | 0.4999 | 0.9975 |

Costs and benefits of approaches to parameter estimation and calibration

- 2.56

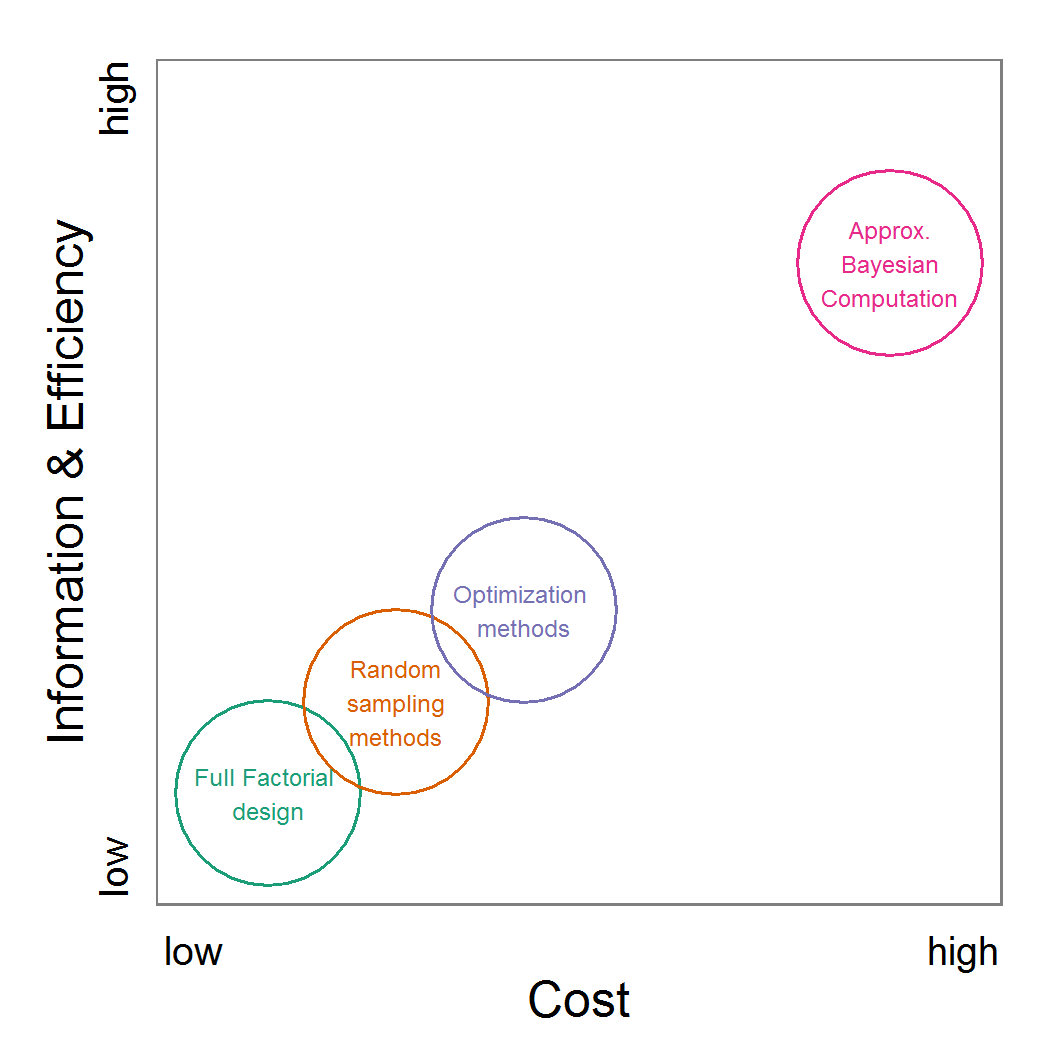

- We presented a range of methods for parameter calibration: from sampling techniques through optimisation methods to Approximate Bayesian Computation. Both the knowledge required to apply these methods properly and the efficiency of parameter estimation increase in exactly this order. Modellers who are not willing to become familiar with the details of the more complex methods and are satisfied with less accurate/single parameter values should use the well-established Latin hypercube sampling. Those who are interested in very good fits but do not need to worry too much about distributions and confidence bands for the parameter values should take a closer look at the various optimisation methods. The details can become tricky, but methods such as genetic algorithms and simulated annealing are widely used and well documented. The ABC methods represent the most recent approach to parameter calibration but require a much deeper statistical understanding than the other methods as well as sufficient computational power to run a very large number of simulations; on the other hand, they deliver much more information than the other methods by constructing a distribution of parameter values rather than one single value. This field is evolving quickly, and we see ongoing development of new approaches especially designed for the parameterisation of complex dynamic models (e.g., Hartig et al. 2013).

- 2.57

- It is always a good idea to start with a very simple approach, such as Latin hypercube sampling, to acquire a feel for the mechanisms of the model and the response to varying parameter values. From there, one can decide whether more sophisticated methods should be applied to the fitting problem. This sequence avoids the situation in which a sophisticated method is fine-tuned first, and it is later realised that the model was not able to produce the observed patterns, requiring a return to model development. Furthermore, it can be interesting to first identify those unknown/uncertain parameters that have a considerable influence on the model results. Then, intensive fine-tuning of model parameters can be restricted to the most influential ones. For such an importance ranking, the screening techniques presented in the next section about sensitivity analysis can be of interest.
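
As a reminder of how little is needed for such a first exploration, the following base-R sketch builds a simple Latin hypercube design without any additional package. The helper name `lhs.sample` and the sample size of 50 are assumptions for this example; the parameter ranges are the prior ranges used in the ABC examples above.

```r
set.seed(1)

# Draw n points such that each of the n equal-width strata of every
# parameter range contains exactly one point.
lhs.sample <- function(n, ranges) {
  sapply(ranges, function(r) {
    # sample(n) shuffles the strata; runif(n) places a point inside each.
    u <- (sample(n) - runif(n)) / n
    r[1] + u * (r[2] - r[1])
  })
}

ranges <- list("scout-prob"    = c(0.0, 0.5),
               "survival-prob" = c(0.95, 1.0))

design <- lhs.sample(50, ranges)   # 50 x 2 matrix, one row per model run
print(head(design))
```

Each row of `design` would then be passed to the simulation function, and the rows can be evaluated independently of each other.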

- 2.58

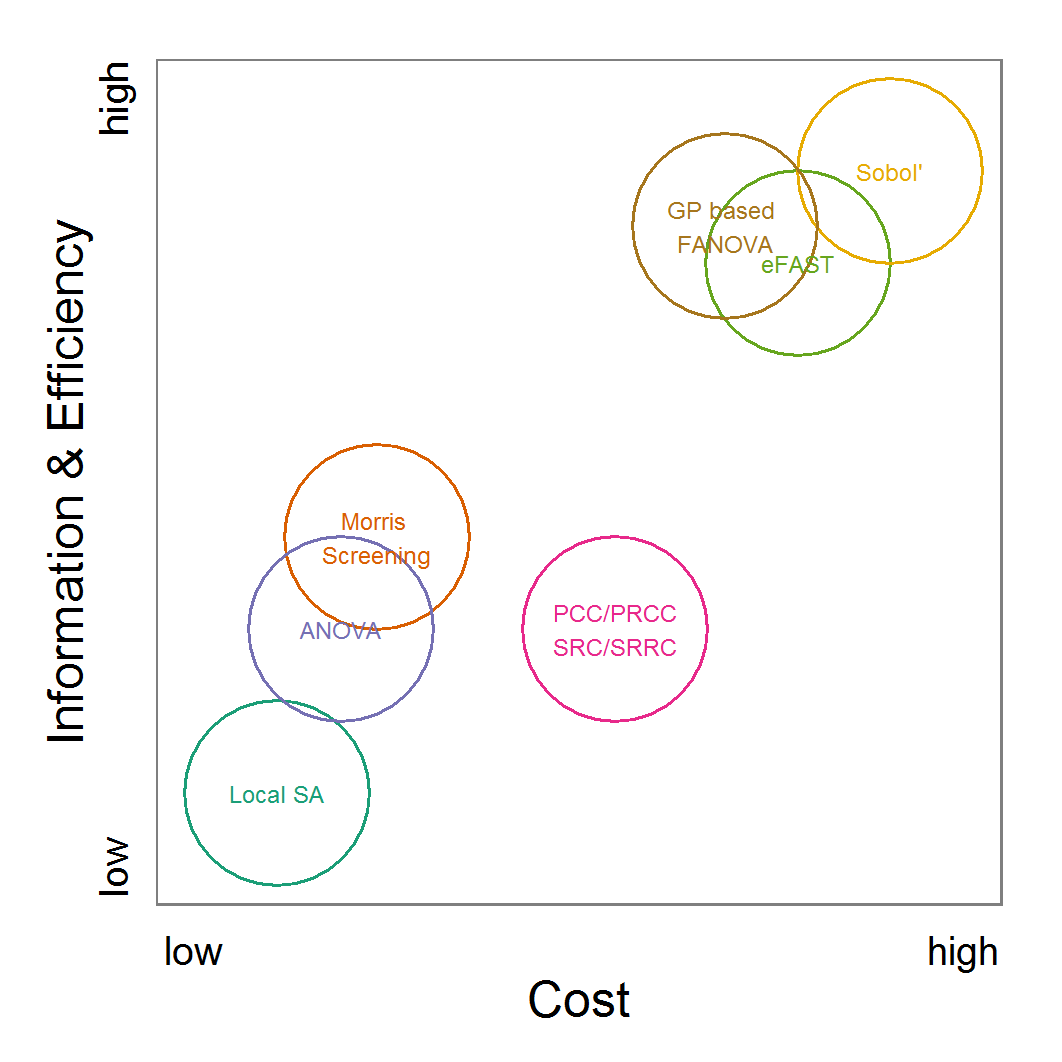

- As an attempt to rank the methods, we plotted their costs versus the combination of the information generated by the method and the efficiency with which the method generates it (Figure 11). Under costs, we summarised the amount of time one would need to understand the method and to fine-tune its application as well as the computational effort. The most desirable method is the ABC technique, but its costs are much higher than those of the other methods. In the case of large and computationally expensive models, however, the application of ABC techniques may be impossible. Then, the other techniques should be evaluated. For pre-studies, we recommend the application of LHS because it is very simple, can be set up very quickly based on the scripts delivered in the Supplementary Material and can be easily parallelised.

Figure 11. A rough categorisation of the parameter fitting/calibration methods used regarding their cost vs. information and efficiency. Cost includes the computational costs as well as the time required for understanding and fine-tuning the methods. Information and efficiency includes aspects of the type of output and the way to reach it.

- 2.59

- For more complex ABMs, runtime might limit the ability to take full advantage of the methods presented here because the models cannot just be run several thousand times. Here, submodels could at least be parameterised independently. For example, in an animal population model, a submodel describing the animals' energy budget could be parameterised independently of the other processes and entities in the full model (e.g., Martin et al. 2013). A limitation of this "divide and conquer" method of parameterisation is that interactions between submodels might in fact exist, which might lead to different parameter values than if the full ABM were parameterised.

Sensitivity analysis


- 3.1

- Sensitivity analysis (SA) is used to explore the influence of varying inputs on the outputs of a simulation model (Ginot et al. 2006). The most commonly analysed inputs are model parameters. SA helps identify those parameters that have a strong influence on model output, which indicates which processes in the model are most important. Moreover, if inputs of the model are uncertain, which is usually the case, sensitivity analysis helps assess the importance of these uncertainties. If the model is robust against variations in the uncertain parameters, i.e., model output does not vary strongly when the parameter values are varied, the uncertainties are of low importance. Otherwise, the parameter values should be well-founded on empirical values (Bar Massada & Carmel 2008; Schmolke et al. 2010). SA is therefore closely related to uncertainty analysis (Ginot et al. 2006). In addition to model parameters, entire groups of parameters, initial values of state variables, or even different model structures can also be considered as inputs to be analysed in SA (Schmolke et al. 2010).

- 3.2

- With sensitivity analysis, three approaches are differentiated: screening, local and global sensitivity analysis (Saltelli 2000; sometimes screening methods are added to global SA methods, see, for example, Cariboni et al. 2007). Screening methods are used to rank input factors by their importance to differentiate between more and less important inputs. These methods are often useful for computationally expensive models because they are very fast in identifying the important parameters, which should be analysed in more detail, but they cannot deliver a quantification of the importance (Saltelli 2000).

- 3.3

- Originating from the analysis of models based on ordinary differential equations, local sensitivity analysis quantifies the effect of small variations in the input factors (Soetaert & Herman 2009; Marino et al. 2008). Classical local sensitivity analysis is often performed as ceteris paribus analysis, i.e., only one factor is changed at a time (the so-called one-factor-at-a-time approach, OAT) (Bar Massada & Carmel 2008). In contrast, in global sensitivity analysis, input factors are varied over broader ranges. This is of special importance if the inputs are uncertain (Marino et al. 2008), which is mostly the case for ABMs. Furthermore, in global sensitivity analysis several input factors are often varied simultaneously to evaluate not only the effect of one factor at a time but also the interaction effect between inputs; the sensitivity of an input usually depends on the values of the other inputs.
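
The one-factor-at-a-time idea can be sketched in base R as follows. The function `toy.model` is a hypothetical deterministic stand-in for a simulation model, and the base values are placeholders chosen for illustration only. Each parameter is changed by ±1% while the others stay at their base values, and the relative change of the output per relative change of the input is reported.

```r
# Hypothetical stand-in for a simulation model: three inputs, one output.
toy.model <- function(p) {
  100 * p[["survival-prob"]]^20 *
    (1 + p[["scout-prob"]] * p[["scouting-survival"]])
}

# Placeholder base values (assumptions for this sketch).
base <- c("scout-prob"        = 0.1,
          "survival-prob"     = 0.98,
          "scouting-survival" = 0.8)

rel.change <- 0.01          # vary each parameter by +/- 1%
y0 <- toy.model(base)       # output at the base values

sens <- sapply(names(base), function(nm) {
  up   <- base; up[nm]   <- base[nm] * (1 + rel.change)
  down <- base; down[nm] <- base[nm] * (1 - rel.change)
  # Central difference, normalised by the base output and the relative
  # input change, so values are comparable across parameters.
  ((toy.model(up) - toy.model(down)) / y0) / (2 * rel.change)
})

print(round(sens, 4))
```

For a stochastic model, each call to the model would be replaced by an average over repeated runs, and the perturbation size would need to be large enough to stand out against the remaining noise.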

Preliminaries: Experimental setup for the example model

- 3.4

- For simplicity, we will restrict the following examples of sensitivity analyses to three parameters: scout-prob, survival-prob, and scouting-survival. The value ranges and base values used (e.g., for local sensitivity analysis) for the three parameters covered by the sensitivity analysis are listed in Table 5.

- 3.5

- To control stochasticity in the simulation model, we apply the same approach with 10 repeated model runs as described for parameter fitting.
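
A minimal base-R sketch of such a replication wrapper is given below. It assumes that the repeated runs are aggregated by their mean; `toy.sim` is a hypothetical stochastic stand-in for the NetLogo model, and `run.replicated` is a helper name introduced for this example.

```r
set.seed(1)

# Hypothetical stochastic model returning two summary statistics.
toy.sim <- function(params) {
  c(abundance = rnorm(1, mean = 1000 * params[["scout-prob"]], sd = 10),
    vacancy   = rnorm(1, mean = 1 - params[["survival-prob"]], sd = 0.01))
}

# Run the model n.rep times with the same parameters and average the
# summary statistics over the replicates.
run.replicated <- function(params, n.rep = 10) {
  runs <- replicate(n.rep, toy.sim(params))  # matrix: statistics x runs
  rowMeans(runs)
}

params <- c("scout-prob" = 0.1, "survival-prob" = 0.98)
avg <- run.replicated(params)
print(avg)
```

The averaged statistics, rather than the output of a single run, are then used as the model response in the sensitivity analysis.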

Table 5: Model parameters used in sensitivity analysis. Parameter Description Base value used

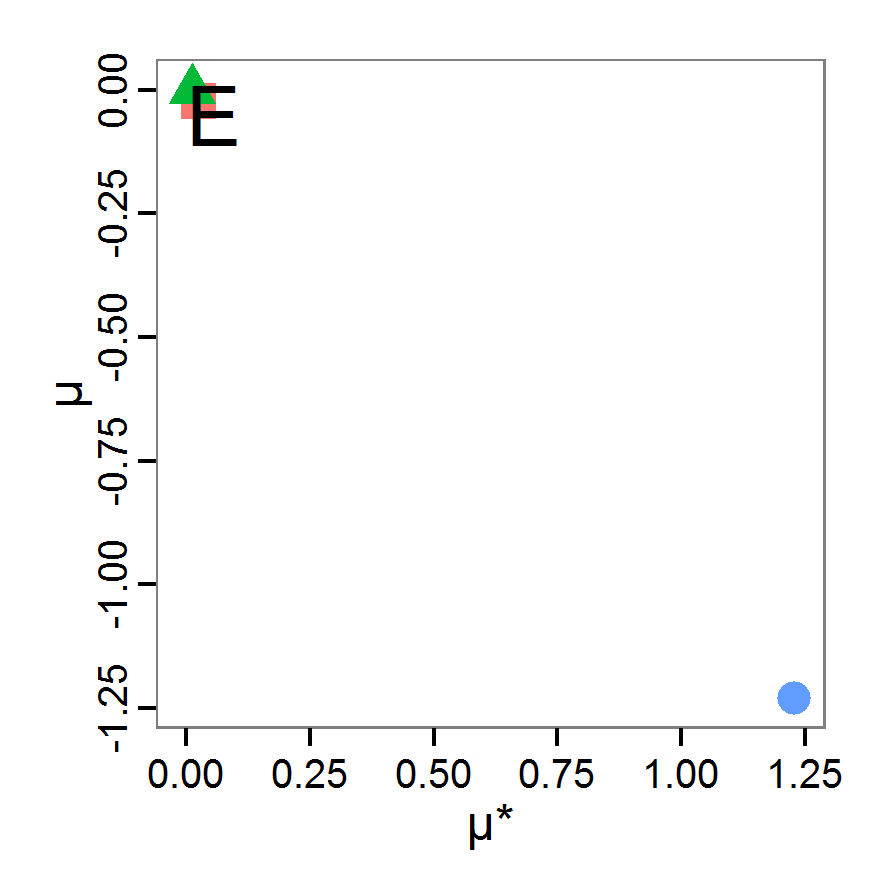

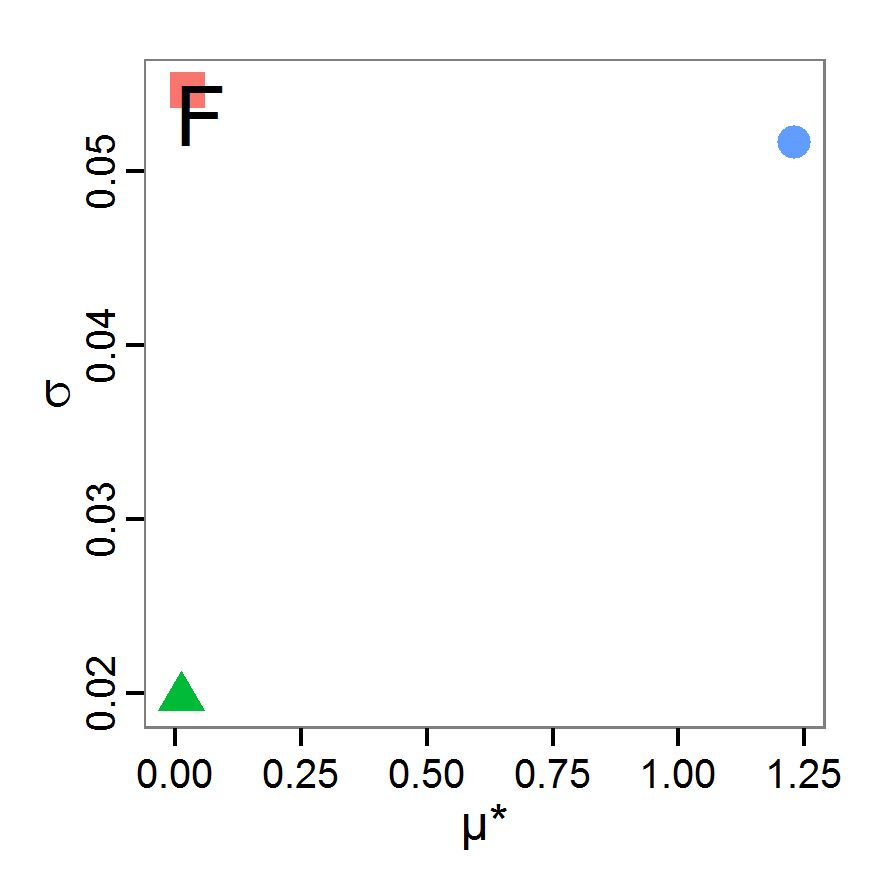

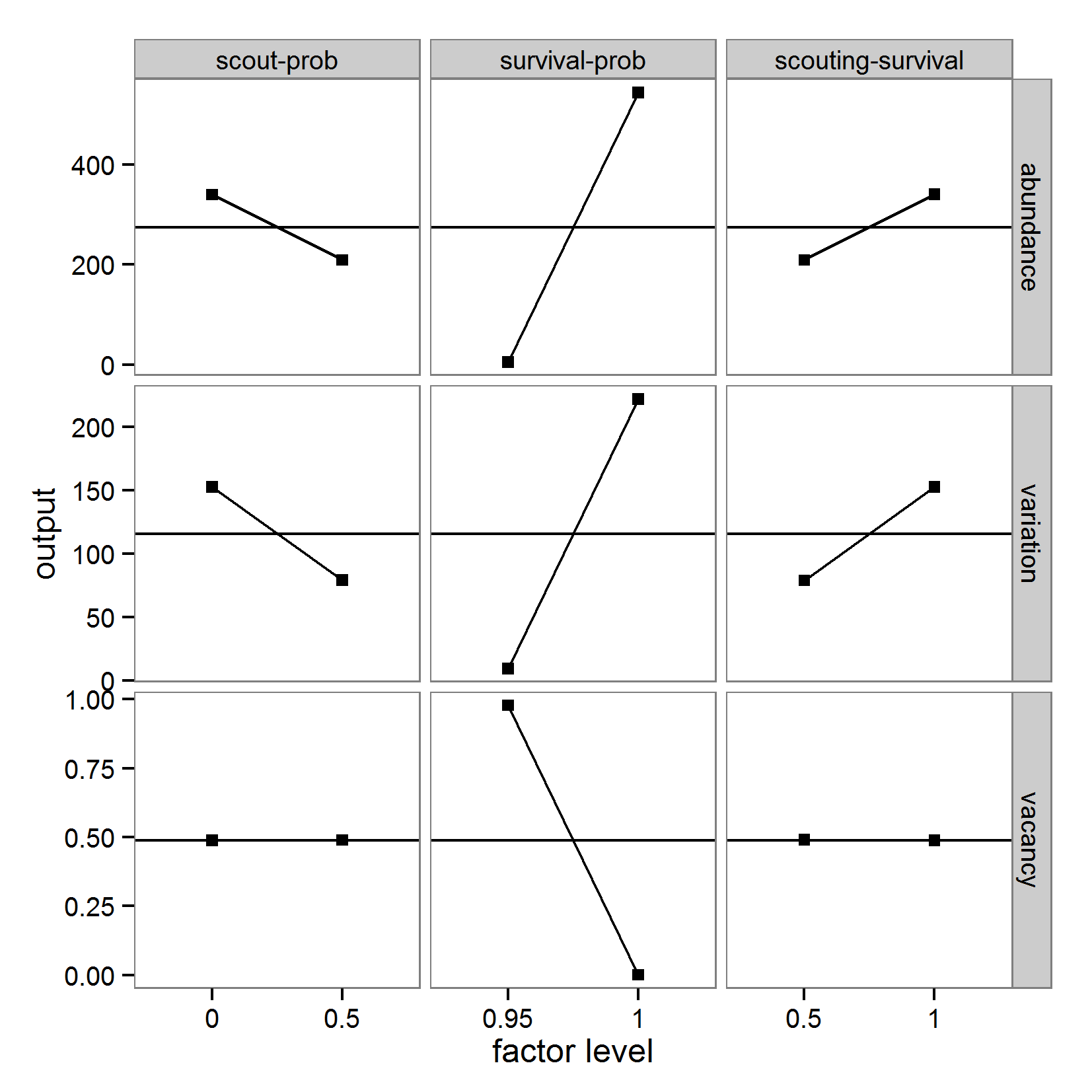

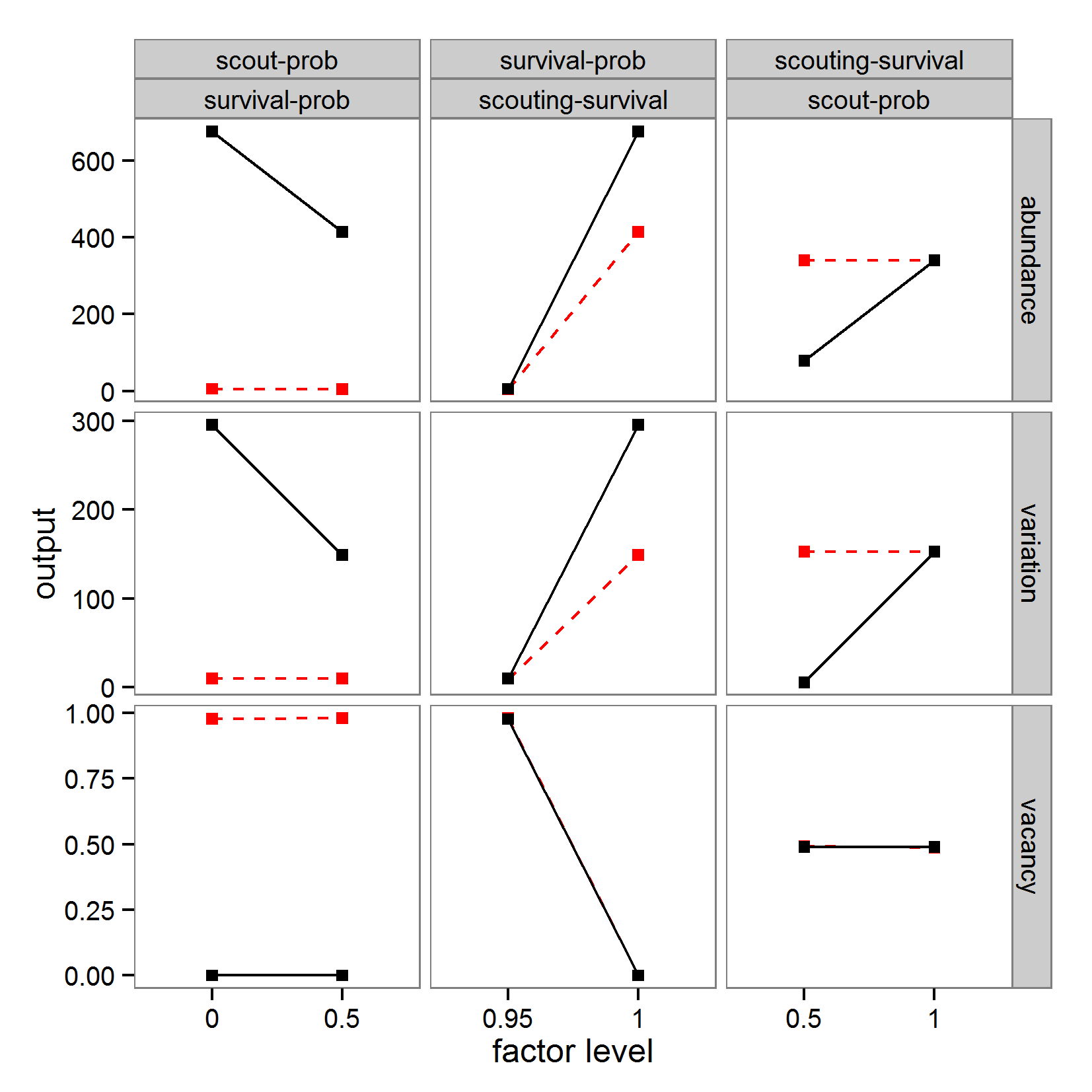

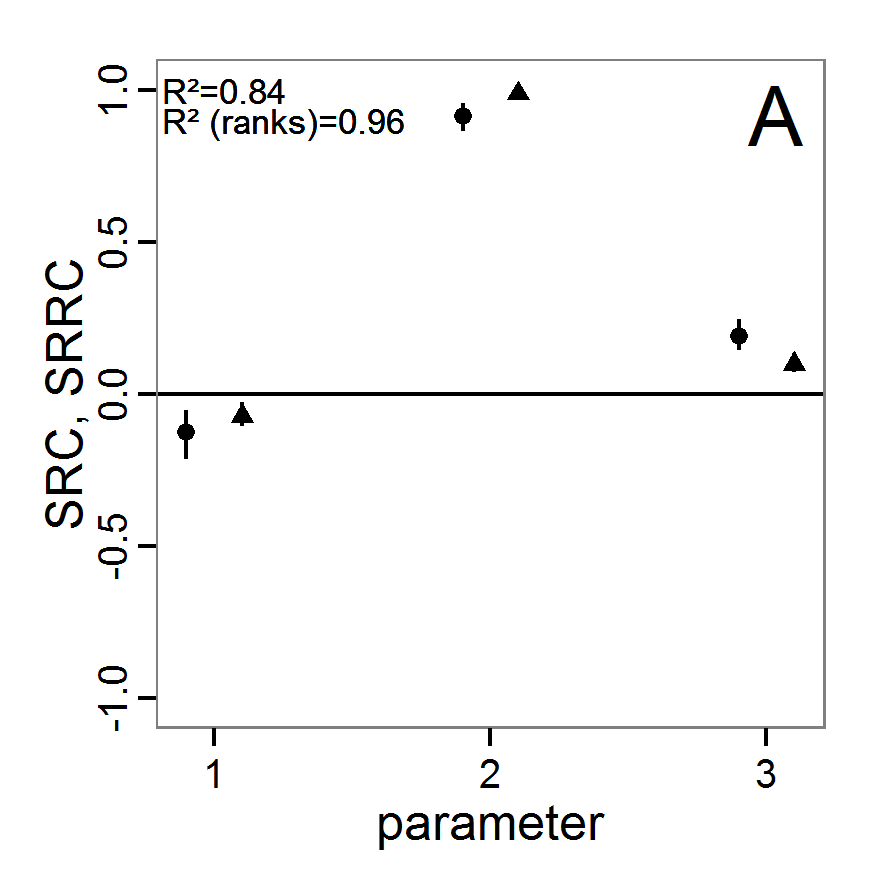

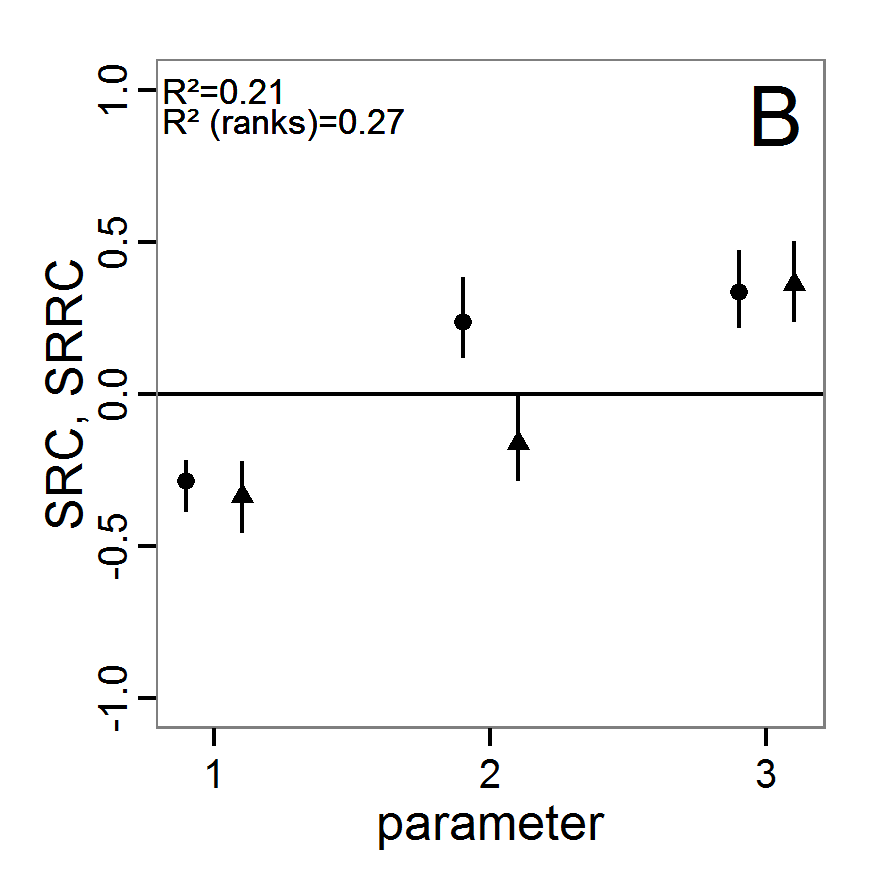

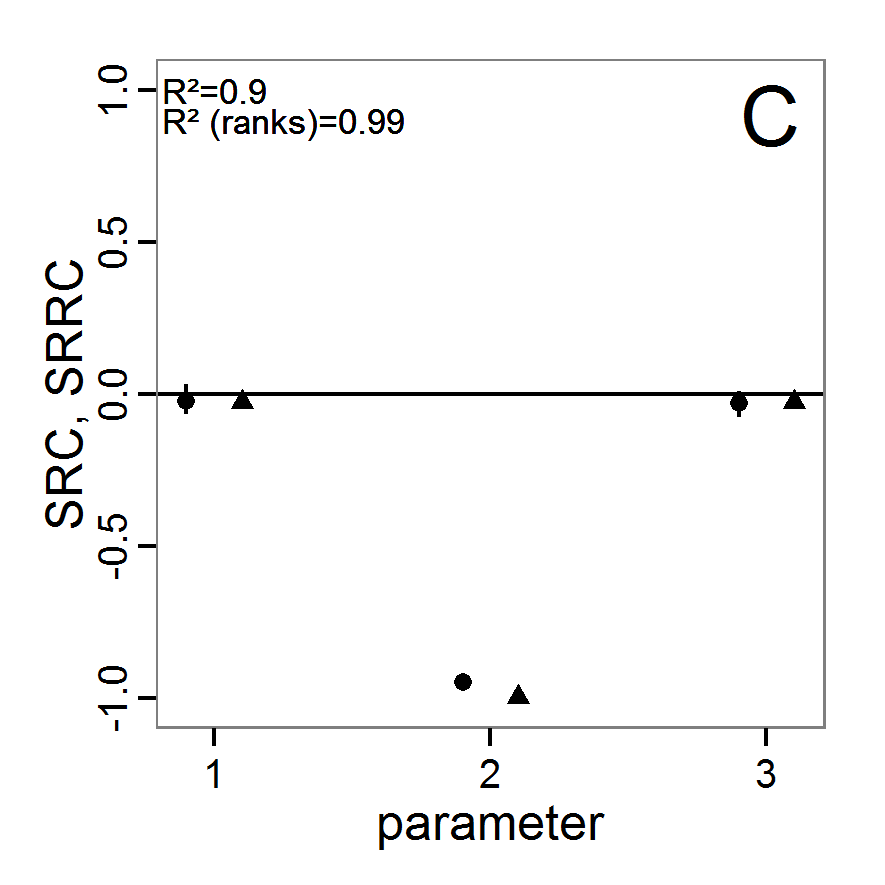

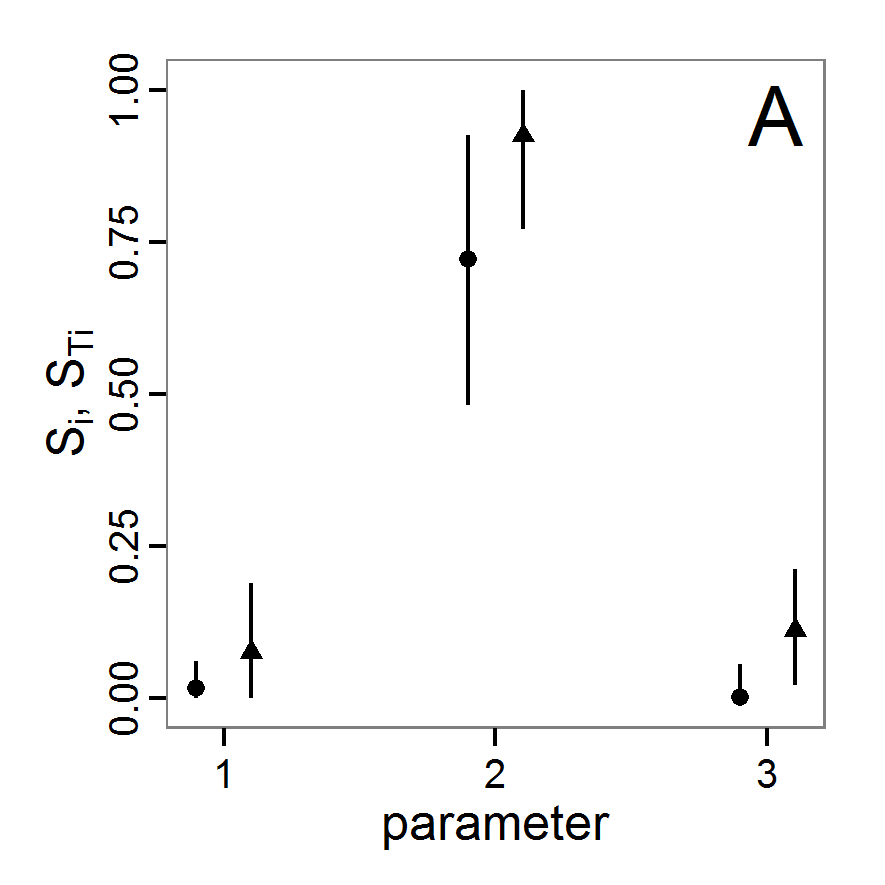

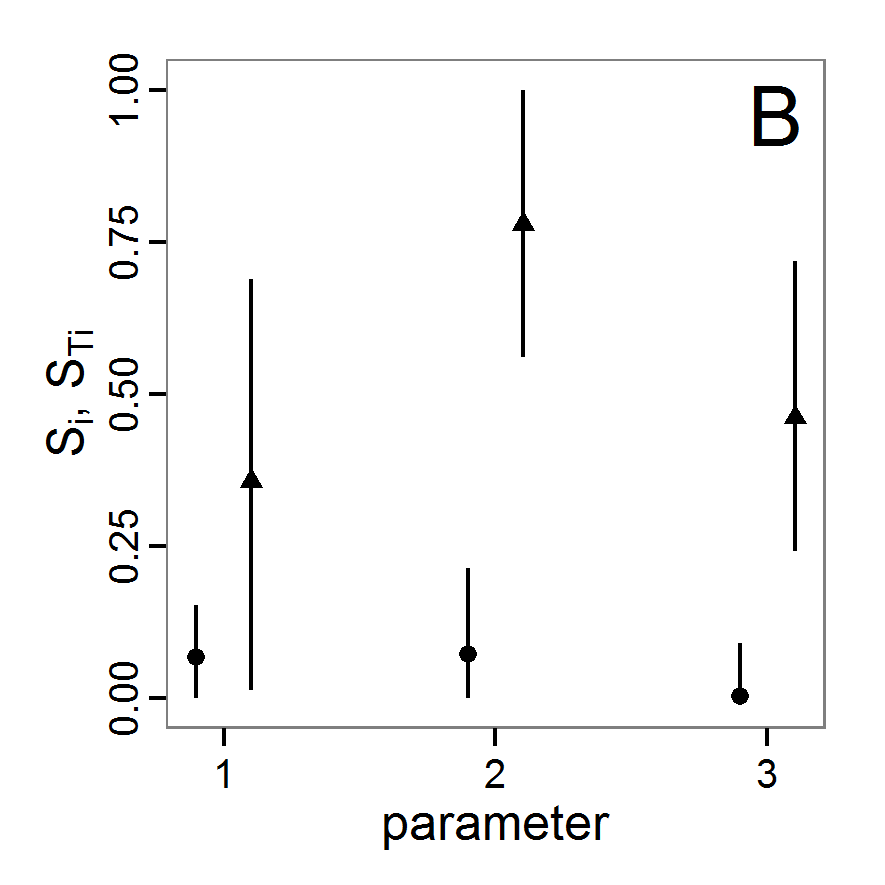

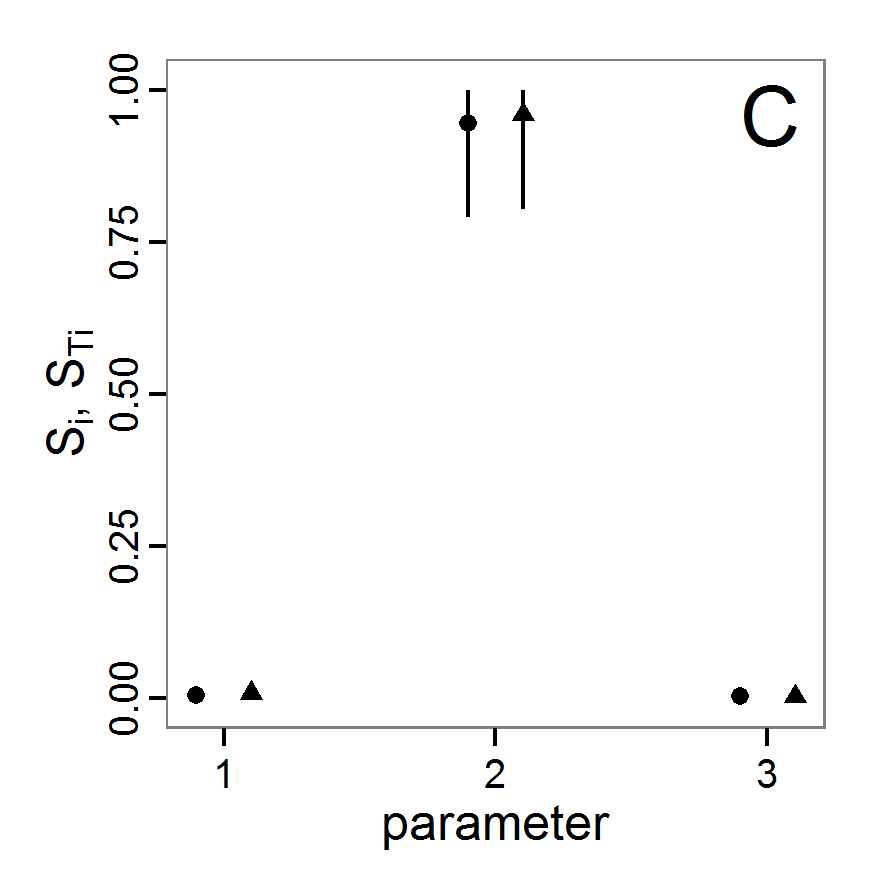

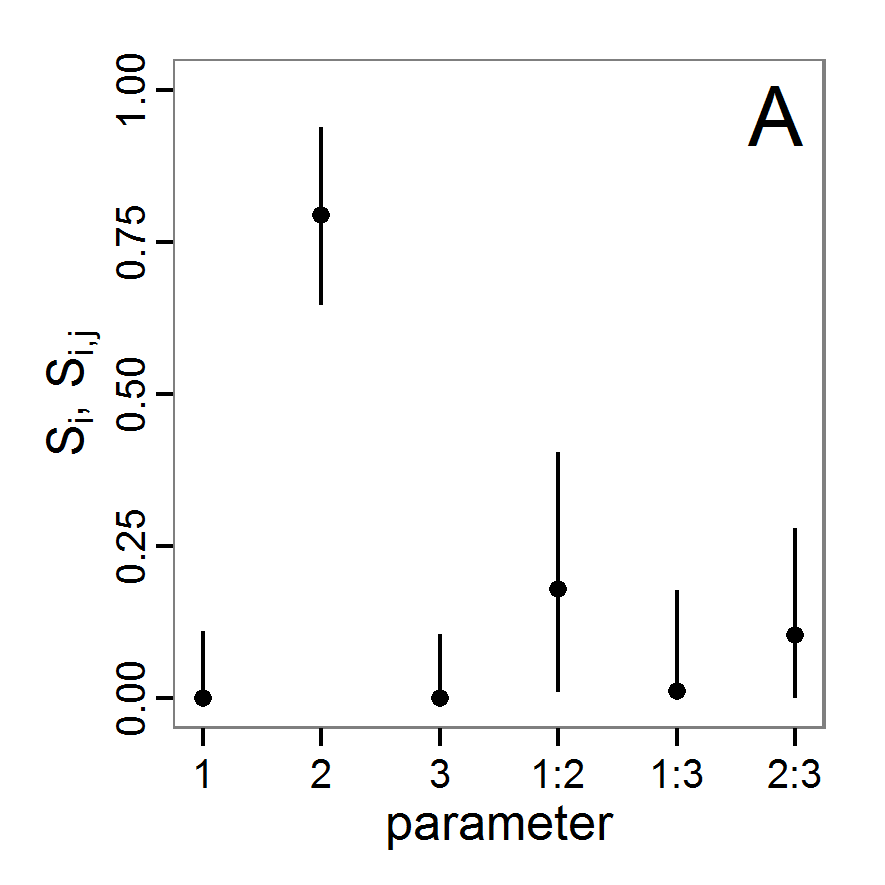

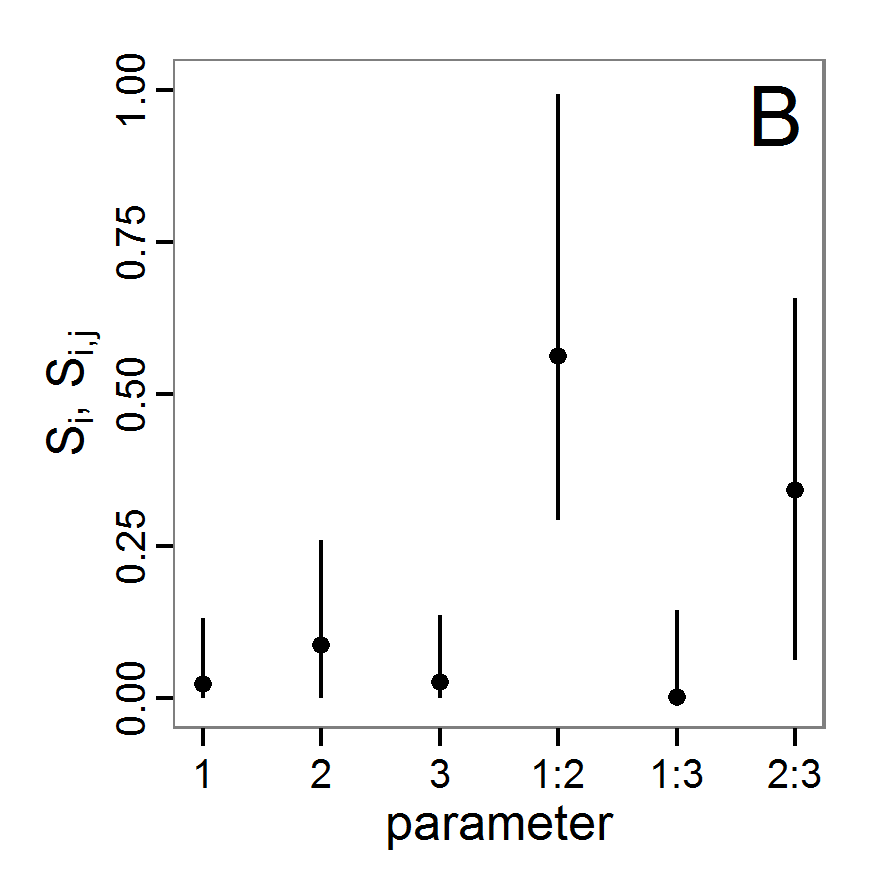

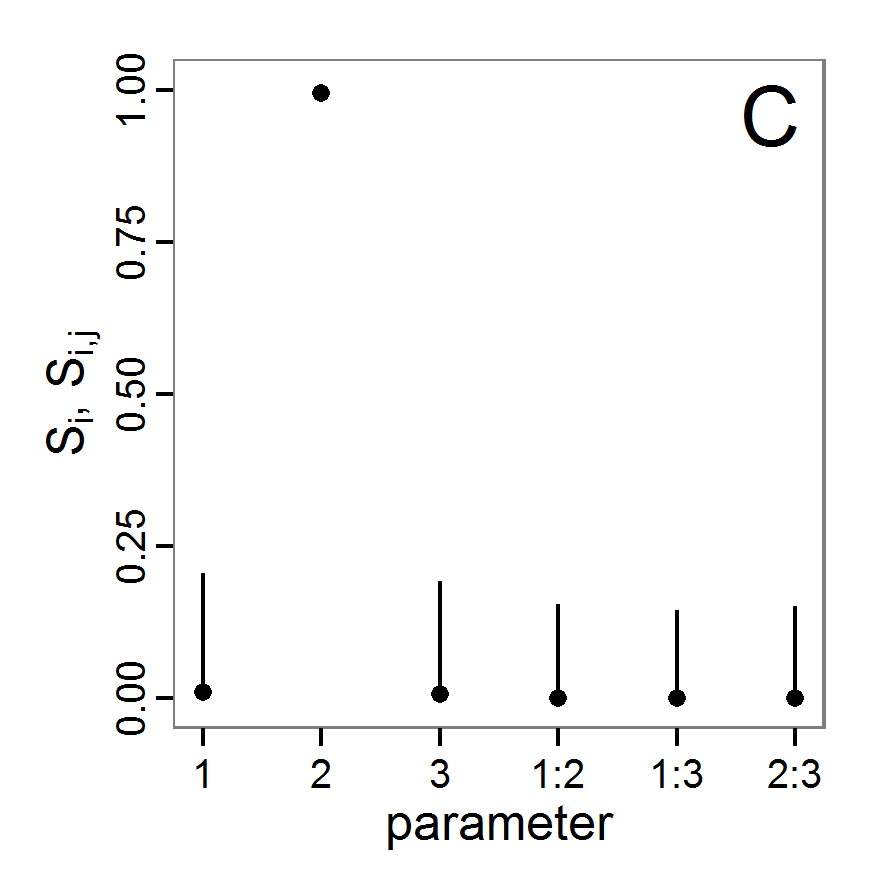

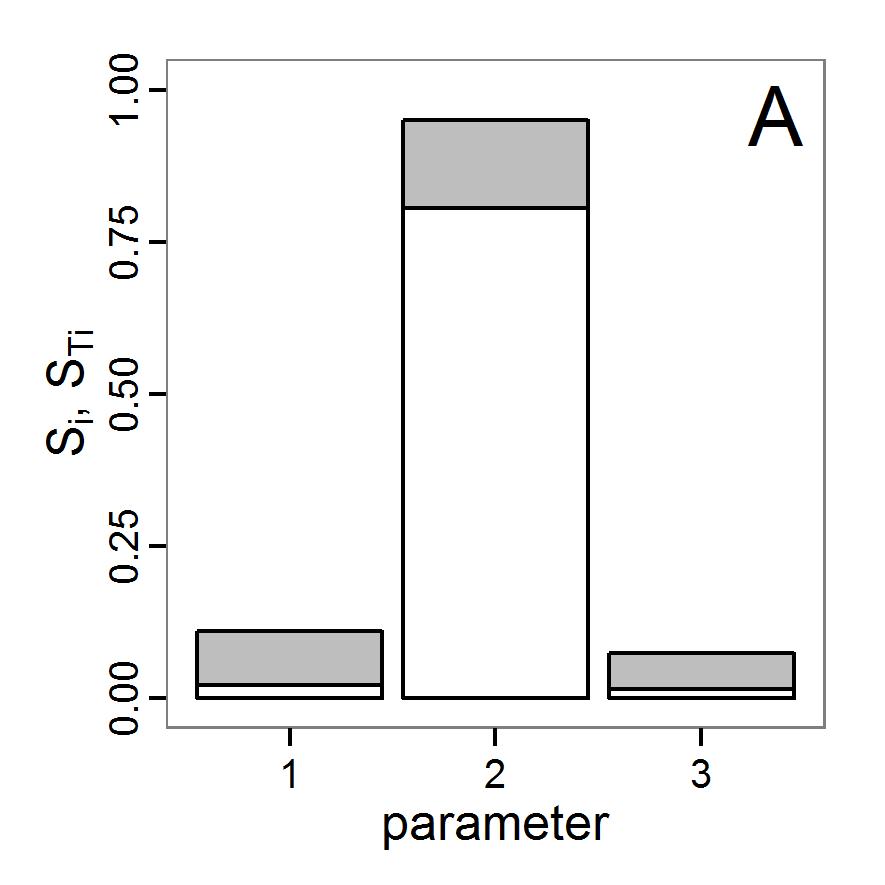

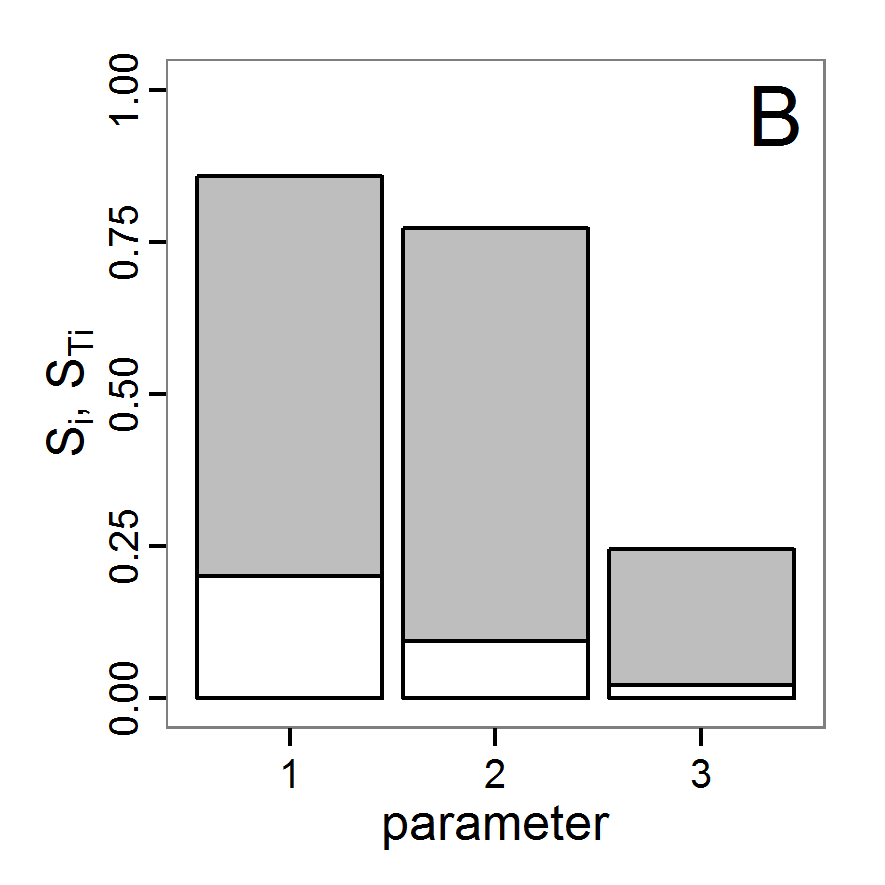

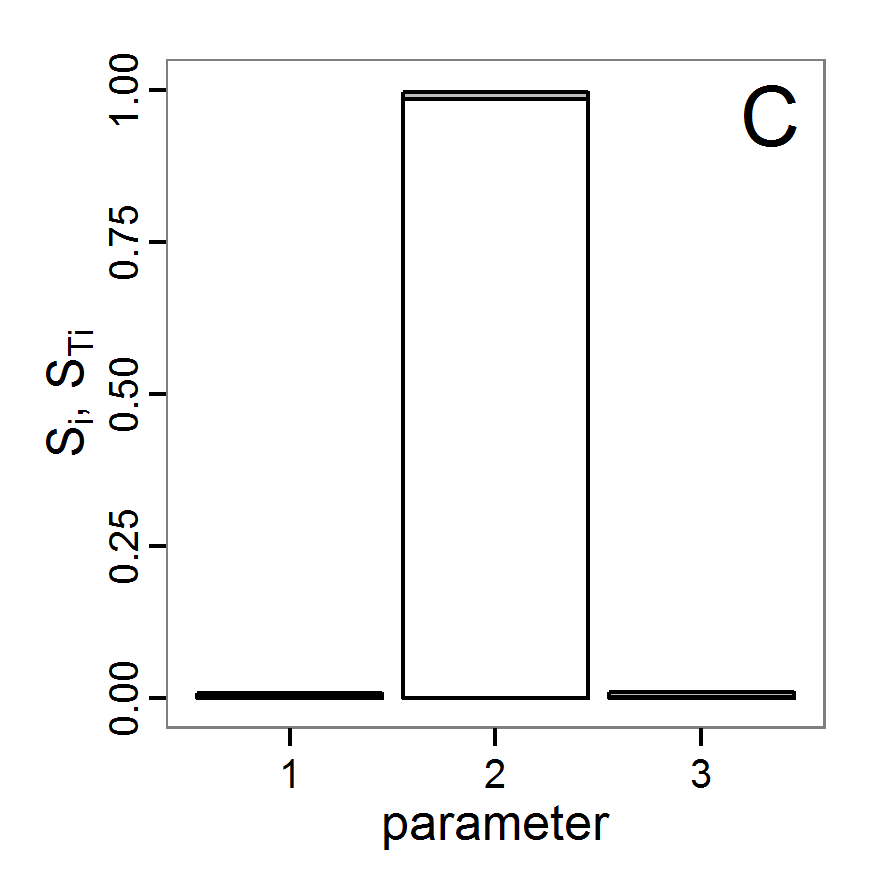

by Railsback & Grimm (2012)Base value used here Min. value Max. value scout-prob Probability of undertaking