Abstract

- When talking to fellow modellers about the feedback we get on our simulation models, the conversation quickly shifts to anecdotes of rejective scepticism. Many of us find that we receive few remarks on our models, and especially little helpful, constructive feedback. In this forum paper, we give an overview of, and reflections on, the most common criticisms experienced by ABM modellers. Our goal is to start a discussion on how to respond to criticism, and particularly to rejective scepticism, in a way that helps us improve our models and consequently increases the acceptance and impact of our work. We proceed by identifying common criticisms of agent-based modelling and social simulation methods and showing where they shift to rejection. In the second part, we reflect on the reasons for rejecting the agent-based approach, which we mainly locate in a lack of understanding on the one hand and academic territorialism on the other. Finally, we offer our personal advice to socsim modellers on how to deal with both forms of rejective criticism.

- Keywords:

- Social Simulation, Agent-Based Modelling, Rejective Criticism, Constructive Feedback, Communication, Peer Support

Introduction

- 2.1

- When talking to fellow socsim[1] modellers about the feedback we get from non-modelling peers, the conversation quickly shifts to anecdotes of scepticism. Typically, the criticism we receive seems to be absent, ill-fitting or incorrect, or it fails to address precisely those weak parts of the model where one would actually expect it. The resulting feeling of having to fight for the very acknowledgement of the method is reflected in Squazzoni's (2010, p. 219) observation: "After 15 years of active exploration, even the most enthusiastic supporter could not argue that ABM has yet dramatically changed the current landscape of social sciences." Why is ABM still not accepted as part of the common toolbox of social science researchers?

- 2.2

- We want simulation studies to have more impact in the social sciences. However, colleagues from the social sciences remain sceptical of, and even disapproving of, our results. What can we do? Our goal is to support socsim modellers by focusing on what we can do to understand criticism in its different manifestations and to respond adequately. We regard criticism, like any feedback, as useful, since it has the capacity to improve the quality of our work. However, the receiver needs to filter all input in order to make it useful.

- 2.3

- In our understanding, feedback, criticism, and scepticism[2] are neutral notions of interaction that are essential to scientific dialogue. In the following, we distinguish between value-laden manifestations of feedback/criticism/scepticism: constructive criticism in the form of helpful feedback on our work, and rejective criticism in the form of dismissive or even hostile responses, which may be more frustrating than helpful. The art of receiving feedback is to be able to separate one from the other, and perhaps even to transform rejective responses into constructive feedback. With this paper, we want to start a discussion on how such filtering and transformation might be achieved.

- 2.4

- How do we proceed? First, we identify common criticisms of agent-based modelling and social simulation methods in general and show where they shift to rejection. In the second part, we reflect on the reasons for rejection, which we mainly locate in a lack of understanding on the one hand and academic territorialism on the other; we also formulate advice to socsim modellers on how to deal with both forms of rejection.

Common criticism

- 3.1

- In the following paragraphs, we present common criticisms that we and our socsim peers are confronted with in our daily work, particularly when presenting our simulation models at workshops and conferences where the simulation approach is still considered an "alien" method. To collect the major criticisms, we started from our personal experience and from critical reviews we found in the basic literature on agent-based modelling (e.g., Axelrod 2006; Axtell 2000; Epstein 2008; Macy & Willer 2002; Miller & Page 2007). Additionally, we surveyed our socsim peers with a short questionnaire, distributed via the SIMSOC mailing list at the end of February 2012. The questionnaire contained the following two open questions:

- What field are you in? - Describe the field that your model is applied to OR is developed for.

- What criticism do you get? - Describe the criticism you receive. For instance, recall the questions or objections you got during a talk you gave. Feel free to address several points.

- 3.2

- Thirty socsim peers from different disciplines replied to the survey. One third of the respondents (10), and thus the largest group, have a background in management or economics. The others are associated with various fields such as ecology/geography (4), political science (4), psychology (2), sociology (2), and computer science (2). Six respondents did not indicate which field of social science they belong to.

- 3.3

- We reviewed the answers to the survey and grouped them into categories. These categories were partly predefined by our literature review and personal experiences, and partly evolved during the process, fed by the answers and rearranged to better capture what our respondents reported. From this review, we extracted ten categories of common criticism of social simulation, which we present below. As we developed the categories inductively from the material, their order of presentation does not reflect their frequency but rather follows our conceptual reasoning. Unless indicated otherwise, direct quotes are taken from the survey responses.

Your model is too complex.

- 3.4

- One major criticism that agent-based modellers are confronted with concerns the complexity of their models. Macro-level modellers who prefer equation-based approaches, in particular, are often doubtful about the number of variables and parameters in agent-based simulations. Even simulation models that the modeller considers as simple as possible cannot compete with simple mathematical equations in this respect. Typical reactions of this type are: "Why are your models so complicated? Can't you do with a simpler model?" or "It's too complex; I can solve it more simply."

Your model is too simple.

- 3.5

- Ironically, the modeller will frequently also hear the opposite criticism of the very same models: that they are too simple. Beyond accusing the researcher of oversimplifying certain social processes, the 'too simple' criticism often culminates in the sweeping rejective scepticism that "Real life is just too complex and unpredictable to model". For socsim modellers, this objection is especially hard to deal with, as it reveals a deep misunderstanding of the role of modelling in general. As Holland (1998, p. 24) puts it: "Shearing away detail is the very essence of model building. Whatever else we require, a model must be simpler than the thing modelled." In our experience, scholars with a critical or normative self-concept as social scientists are particularly sceptical of the simulation approach, which in their view is "reductionist" and "value-free". A typical reaction of this kind is: "People are not numbers or machines, but behave in individualistic ways."

You chose the wrong focus.

- 3.6

- If the modeller is not criticised generally for his or her models being too complex or too simple, he or she often encounters a more specific variation of the same type of criticism, which can be summarised as: "But you have left out factor X!" or, more concretely, "You didn't include my favourite variable." This type of reaction is frequently reported when modellers talk to scientists who are experts on their modelling target. The sarcastic summary of one of the survey respondents says it all: "It implies that a model is useless, unless it includes all of the factors they are expert about: X, Y, Z, etc."

Your model is not theory-based.

- 3.7

- A lot of scepticism is also grounded in the question of the theoretical foundations of a simulation model. Of course, social simulation modellers need to address questions such as: "Where do you get your assumptions from? Are they backed by theory?" (cf. Conte 2009). As simulation models explicitly implement assumptions on social processes in the source code, they can be considered formalised expressions of theory. However, this theory might not be the one commonly accepted in that community or the one favoured by the critics. Disapproval of the chosen theoretical approach might lead to total rejection of the model, with the modeller accused of choosing the "wrong" theory. One could discuss at length which theory is the "right" one, since there are usually competing theoretical approaches one might refer to when modelling a certain social process. Conflicts of this kind arise especially if established theories in the target field do not match the modeller's objectives. If the modeller, for instance, draws on empirical evidence contradicting mainstream theory, this might also lead sceptics to dismiss the model. To give an example, one of the survey respondents reports the following reaction to his model: "Your networks are not scale free, so they must be wrong". He adds: "This criticism is made even when the networks are empirically based."

Your model is not realistic.

- 3.8

- Theory is one critical aspect, empirical validation the other. One of the most frequently reported criticisms in the survey is that agent-based models are too abstract and too far from reality. However, not all models, and not every part of a model, can be compared with and validated against empirical data. Instead of recognising that simulation models can produce insights on non-observable processes, explore possible future scenarios, or serve as thought experiments, critics too often take the absence of empirical data as a reason to reject the modelling approach altogether. As one of the survey respondents puts it: "So the argument is: why to create a model for which we can never find data?"

Your assumptions and parameters are arbitrary.

- 3.9

- The criticisms concerning theory and empirical data both boil down to the question of where the model's assumptions and parameter settings come from, or, as one of our survey respondents explains: "You can model a certain process using theory, but you have to make assumptions concerning some of the underlying processes - which usually are not addressed by the theory. So you have to make such assumptions, knowing that under certain circumstances they may make a large difference in the outcomes." This quote shows that the criticism of arbitrary assumptions really strikes a nerve in the socsim community. Modellers feel trapped between this criticism and the necessity to make such assumptions: no matter how well they base their models on theory or data, there will always be parts of the processes about which they lack enough specific information to ground their modelling decisions.

Your results are built into the model.

- 3.10

- The fact that the researcher can directly influence the results of the simulation by adjusting only a few parameters often leads scientists unfamiliar with the method to doubt the credibility of the whole approach: if the model design depends so much on the researcher's hand, then you can get any result you want by modelling and simulating. In this context, socsim peers in the survey report being accused of producing predetermined outcomes, "fishing for interesting effects by modifying parameters and mechanisms", or even "cherry picking" simulation data "so that your approach always works". In this light, the whole approach of social simulation lacks credibility as a "result-driven perspective" only "developing ad-hoc explanations". A weaker form of this scepticism is the claim that simulation results are not surprising but rather trivial, and thus do not contribute substantially to research in the field. Admittedly, in a strict sense simulation results cannot be surprising, as all processes are explicitly defined in the source code. The modeller is challenged here to show how the behaviour of the modelled system may be counterintuitive and reveal complex interactions that remain hidden to most of us when we merely think about the phenomena in question.

Your model is a black box.

- 3.11

- Another common criticism is that models are not transparent, or, in the words of one of our respondents: "Computational models are too obscure and difficult to understand, not to mention to replicate." This may be due to a lack of understanding on the side of the audience, to the complexity of the model, or, in the worst case, to both. Models with a large number of variables, especially, may be hard to analyse and even harder to communicate. This criticism is widely acknowledged and increasingly addressed in the socsim community (e.g., Grimm et al. 2006, 2010; Lorscheid, Heine & Meyer 2012; Sanchez 2007). However, the sheer number of variables often makes it impossible to explore all combinations and interactions, a problem that modellers also face in the review process, as Axelrod (2006, pp. 1581-1582) noted:

"Even if the reviewer is satisfied with the range of parameter values that have been tested, he or she might think up some new variations of the model to inquire about. Demands to check new variants of the model as well as new parametric values in the original model can make the review process seem almost endless."

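The scale of this problem can be made concrete with a little arithmetic: a full-factorial design that tests every combination of l levels for each of k parameters, with r replications per combination, needs l^k · r runs. The minimal Python sketch below computes this count; the helper `full_factorial_runs` and the chosen numbers of levels and replications are illustrative assumptions, not values taken from any model discussed here.

```python
from itertools import product


def full_factorial_runs(n_params: int, levels: int, replications: int = 1) -> int:
    """Hypothetical helper: runs needed to test every combination of
    `levels` values for each of `n_params` parameters, with each
    combination repeated `replications` times."""
    return levels ** n_params * replications


# Sanity check on a tiny case by enumerating the combinations explicitly:
# 3 parameters with 2 levels each give 2**3 = 8 combinations.
small_design = list(product([0, 1], repeat=3))
assert len(small_design) == full_factorial_runs(3, 2)

for k in (3, 10, 20, 100):
    runs = full_factorial_runs(k, levels=2, replications=10)
    print(f"{k:>3} parameters, 2 levels, 10 replications: {runs:.3e} runs")
```

Even with only two levels per parameter and ten replications, 20 parameters already require more than ten million runs, and 100 parameters require on the order of 10^31. This is why systematic experimental designs such as those discussed by Sanchez (2007) and Lorscheid, Heine & Meyer (2012) matter in practice.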
This is not science!

- 3.12

- All of the above-mentioned doubts concerning the credibility of the method culminate in the complete rejection of the simulation approach as a legitimate way of producing scientific knowledge. This type of criticism is highly ideological, seeking to protect the mainstream paradigms of how science should work in the respective discipline. One manifestation is the paradigmatic adherence to analytical mathematical models in mainstream economics: "In order to build economic theories, models have to be mathematical, even at the sacrifice of realism (economists have said that to me)." In other, more empirically based disciplines, such as sociology or psychology, computational models are rejected because they are not an empirical method; in more critical communities (such as cultural studies), where the goal is not only to understand but also to criticise society, socsim modellers are called naïve positivists who are unable to contribute meaningfully to social criticism. The essence of this scepticism is that, because socsim modellers do not follow the orthodox approaches, their models lie outside the realm of what can be called economics, sociology, etc., and can consequently be considered nothing more than "daydreams".

Your model is not useful.

- 3.13

- Another variation of the 'this-is-not-science' argument is the statement that the model is simply not useful. Modelling and simulating may be a nice tool to play with, but what can it do that the mainstream methods cannot? Can't we get the same insights by more common means? Socsim modellers constantly need to explain and justify the extra benefit of their approach: "The orthodox approach works, why change?" Beyond this scientific discourse, practitioners such as policy-makers are often mainly interested in the concrete use of a model for decision-making and prediction. It seems that modellers, more than other researchers, have to think about and argue the 'so what' question: "So what? What's in it for me? Of what practical value is it?"

Dealing with the underlying problem

- 4.1

- Feedback, ideally, safeguards the quality of our work. It can be really helpful when it addresses substantial weaknesses of our work, e.g., its theoretical foundation or missing validation. Often, however, criticism is not directly helpful for our particular model. Instead, it is rejective of the whole methodology and puts the presenter in the position of defending the entire agent-based approach.

- 4.2

- For each of the common criticisms, we dug deeper into the context of the survey statements. Typically, the common criticisms appeared to be rejective, whereas other types of feedback were reported as desired, yet often absent or only minimally present. Based on the context of the survey statements, we tried to identify the underlying reasons for rejection and could distinguish two sources: (1) lack of understanding and (2) academic territorialism. Seen from this perspective, the two sources pose different problems, each of which calls for its own way of dealing with it.

Source 1: Lack of understanding

- 4.3

- Lack of understanding explains why other researchers find it hard to give us constructive feedback: they misunderstand, or do not understand, the work that is presented. Lack of understanding can itself originate from several sources. Different knowledge bases or methodological backgrounds, for instance, might lead to a lack of understanding. Modellers not familiar with the agent-based approach might not understand why the model needs so many parameters, and come up with statements such as: "If you really understood this problem you would be using a closed form linear model." Or: "You didn't show the complete response surface for all 100+ variables in the model."

- 4.4

- A clash of research goals might also produce misunderstanding, e.g., a scholar expecting prediction from a model that was designed to contribute to understanding, or demanding empirical validation from a model meant to explore future scenarios. The following statement, reported by one of the survey respondents, also shows that the audience has not understood the main goal of the simulation analyses: "This model is not robust as the starting conditions change the results, therefore it is not valid."

- 4.5

- On an even deeper level, clashes of world views might also be at work, as one of the respondents reflects: "What seems to be at stake here is not so much the criticism of ABM itself, but a resistance to the reduction of human behaviour to that of lesser beings/things."

- 4.6

- If you can attribute a rejection to this category, there is, in our view, a lot of potential to increase your impact. The way to deal effectively with this problem is to improve communication, both in preparation and in direct interaction:

1a) Understand

- 4.7

- Ask in order to understand![3] This helps you to clarify.

1b) Clarify

- 4.8

- Rejective criticism of this kind indicates that you were not clear enough. Take the feedback seriously and be clearer, for instance by being more specific about your modelling aims, your modelling approach, your efforts to verify and validate, the theoretical foundation of your model and the set-up of your experimental design.

1c) Relate

- 4.9

- Relate to the audience's world. For instance, use examples typical of their field of work; these are recognisable and help the audience to understand and embed the 'new' things that you are going to share. Moreover, doing so acknowledges the existence of their theories, approaches, and results. In that sense, make yourself part of their discipline (in-group) and use their jargon. These are anchor points, especially when your work is very new or far from theirs. Whenever possible, use their favourite means of visualisation and their common approaches to data analysis. For example, it might help to present core processes of the model as flowcharts if this is a common means of visualisation in that discipline. Likewise, reporting standard statistical measures of your simulation results, such as means and standard deviations, in the manner customary in that field might ease understanding and thereby help the audience focus on your actual outcomes. Conversely, avoid fancy visualisations if you cannot be sure that your audience will easily understand them.
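As a minimal illustration of reporting simulation results in this way, the Python sketch below runs a toy stochastic model under 30 different random seeds and summarises the outcome by its mean and standard deviation. The model itself (`run_toy_model`, in which each of 100 agents adopts a behaviour independently with probability 0.3) is a hypothetical stand-in chosen only for brevity; a real ABM would take its place.

```python
import random
import statistics


def run_toy_model(seed: int, n_agents: int = 100, p_adopt: float = 0.3) -> float:
    """Hypothetical stand-in for an ABM: each agent adopts independently
    with probability p_adopt; returns the share of adopters."""
    rng = random.Random(seed)  # one reproducible generator per replication
    adopters = sum(1 for _ in range(n_agents) if rng.random() < p_adopt)
    return adopters / n_agents


def summarise(seeds):
    """Run one replication per seed; return mean, sample SD and raw outcomes."""
    outcomes = [run_toy_model(seed) for seed in seeds]
    return statistics.mean(outcomes), statistics.stdev(outcomes), outcomes


mean_share, sd_share, outcomes = summarise(range(30))
print(f"Share of adopters over {len(outcomes)} runs: "
      f"M = {mean_share:.3f}, SD = {sd_share:.3f}")
```

Fixing the seed of each replication makes the summary reproducible, and presenting the variation across replications as a standard deviation frames it in terms that audiences from statistically oriented fields are used to reading.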

1d) Be transparent

- 4.10

- Be transparent about your choices. Since socsim models are still not part of common methodological knowledge, we always need to stress the extra benefit of our method. Make the audience aware that each method has its own weaknesses and explanatory angle. By emphasising the strengths of our method, we indicate its benefit in a non-attacking way.

- 4.11

- By (a) understanding what is not understood, (b) being clearer, (c) relating to your audience and (d) being more transparent, you actively try to move rejective feedback that originates from a lack of understanding into the realm of constructive feedback.

Source 2: Academic territorialism

- 4.12

- Academic territorialism is a notion we adopted from one of the respondents because it fits so well: academics defending their territory. It comprises the whole range of basic debates in the 'battle of methods'[4] that are based on a strong belief in, preference for, or conviction of one's own methodology, ontology, research focus, or type of knowledge. The thoughts of this respondent reflect this well:

"Almost all the debate I get from my papers is really about academic territory, and the rationalisations that each sub-field (including social simulation!) have built up to justify their way of doing things. […] This seems to indicate that the use of social simulation is seen as a marker of group/tribe belonging, rather than evaluated dispassionately—often people assuming one is seeking to reduce 'their' complex phenomena to simplistic models, or trying to take-over a field. […] Sometimes this is just a defensive reaction to questions of 'expertise ownership' in different areas. It implies that a model is useless unless it includes all of the factors they are expert about: X, Y, Z, etc."

- 4.13

- In our view, territorial criticism is trickier to deal with, simply because your efforts are only one part of the interaction. In terms of model impact, much depends on others' willingness to open their minds to something different. We therefore think that this kind of feedback calls for a completely different response. For a lack of understanding we suggested being more specific, whereas here we suggest pushing the discussion to a more abstract level through meta-communication and not entering the cycle of mutual rejection.

2a) Meta-communication

- 4.14

- Make explicit what is going on in the conversation. Specify your point of view in the 'battle of methods': the question is not whether one method is better than the other, but which one serves the research problem best. Elaborate on the different perspectives and the different research questions that lead to different choices of method, without delegitimising or offending the mainstream perspective of your audience. For example, you might point out:

- Differences in perspectives: Typically, field X has a particular perspective on your modelling target; you have another.

- Differences in research questions: Explain that you seek a type of explanation that socsim can provide, whereas the other perspective does not allow for it.

- Build bridges: Besides talking about differences, also show in which respects your approach is not so different from, complementary to, or even similar to the mainstream approach.

- 4.15

- For example, if someone argues that the world is too complex to model, make clear that every scientist has models of the world, either explicit or implicit. Computer models are only one way of formalising models. Rejective criticism concerning arbitrary assumptions might be countered by showing how the common methods also build on assumptions, which are often no longer questioned simply because they are common. For instance, many mainstream statistical measures assume that relationships between variables are linear, or that traits are normally distributed. In the best case, you can take your audience for a walk out of the box by turning their rejective attitude towards your new approach into an occasion to take a more critical look at their own approach as well.
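A small, constructed example can make this point tangible. Pearson's correlation coefficient measures only linear association, so it can report no relationship at all even when one variable completely determines the other. The Python sketch below computes it from its definition for y = x² on the integers from -5 to 5; the data are illustrative assumptions, not results from any study.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation, computed from its definition."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


xs = list(range(-5, 6))    # illustrative data, symmetric around zero
ys = [x ** 2 for x in xs]  # y is completely determined by x, but not linearly

print(f"Pearson r = {pearson_r(xs, ys):.3f}")  # r is 0 despite the perfect dependence
```

Here r comes out as exactly 0, although y is a deterministic function of x: it is the linearity assumption built into the measure, not the data, that produces the apparent absence of a relationship.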

2b) Don't enter the cycle of rejection[5]

- 4.16

- Getting into the battle of 'my method is better than yours' is just a waste of time. In our view, there is no such thing as the best method. So don't be defensive, even though that can be very hard.

- 4.17

- Instead, we recommend the following: agree to disagree, and do not waste your energy. We assume that it is not your aim to spread the word of socsim in a missionary style. The aim is to share your work with others because we think it has value: you and your audience are interested in understanding the same social phenomenon.

- 4.18

- Do realise that:

- Amongst research communities, disapproval of each other's methods, focus, etc. is not rare. By working interdisciplinarily, we explicitly choose to cross fields, so the 'live and let live' strategy that more or less closed research communities can apply does not hold for us. We rather need to engage actively with each other's fields.

- New things take time to get used to. Although your words might not convince others immediately, they may trigger some thoughts or reflections, which is also impact, just not easily measurable.

- You affect a whole audience while talking and responding to criticism. Don't forget your silent audience, as your words reach them too, and be aware that the only thing you can conclude from their silence is that you don't know what they think.

Conclusion

- 5.1

- Many of us find that we receive few remarks on our simulation models, and especially little helpful, constructive feedback. In this forum paper, we have given an overview of, and reflections on, the most common criticisms experienced by ABM modellers. Our goal is to start a discussion on how to respond to criticism, and particularly to rejective criticism, in a way that helps us improve our models and consequently increases the acceptance and impact of our work.

- 5.2

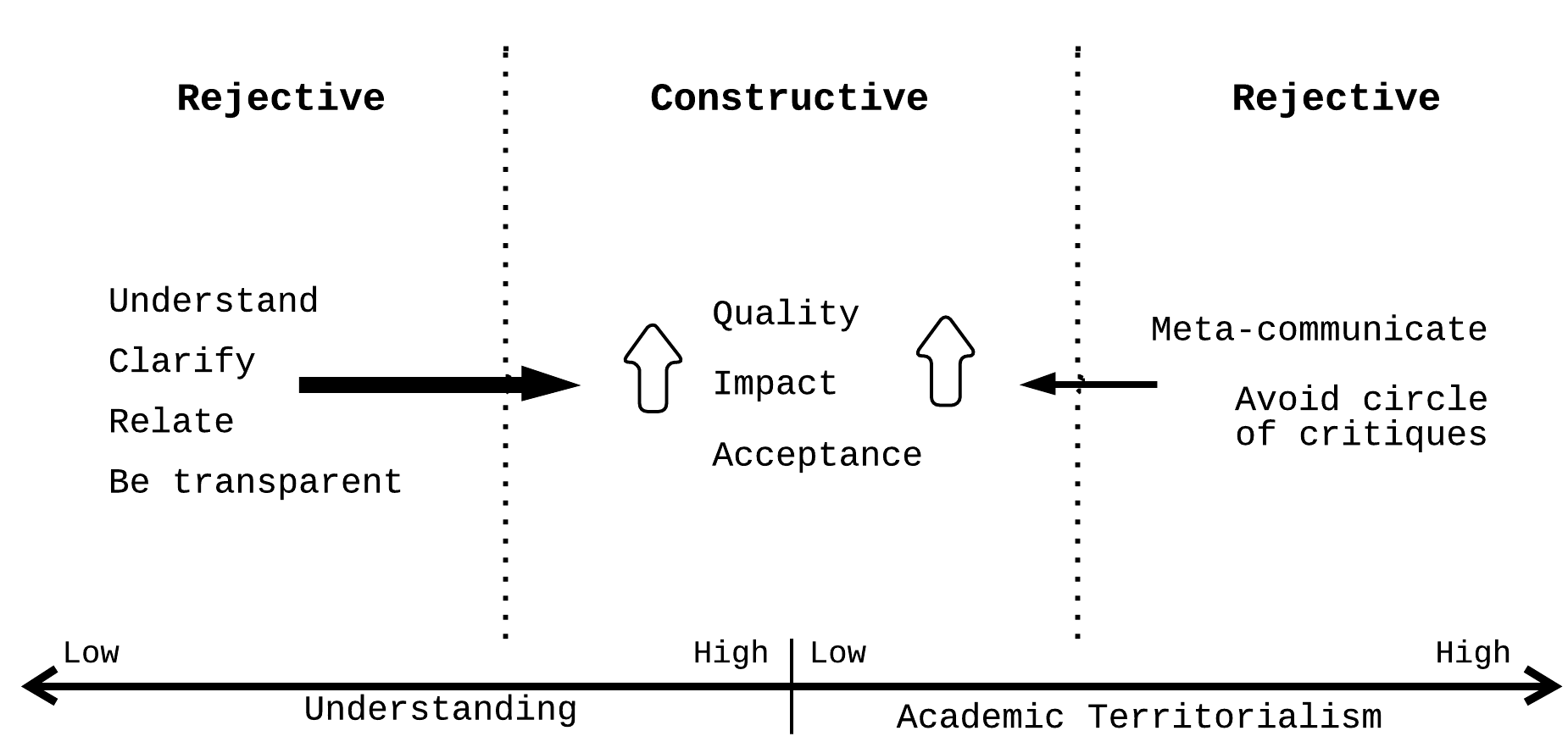

- The focus of our reflections is on the sources of rejection and on possible ways to transform rejective criticism into constructive feedback. As summarised in Figure 1, we identified two sources of rejection: (1) lack of understanding and (2) academic territorialism. Helpful, constructive feedback is given when understanding is high and territorialism is low. This means that criticism rests upon information and knowledge instead of prejudice, and that it is meant to improve the specific work instead of generally delegitimising its scientific approach.

- 5.3

- In our view, there is a large potential for shifting criticism out of the realm of rejection into helpful feedback, especially for rejective feedback rooted in a lack of understanding. By finding out what is not understood, clarifying, relating to our audience and being transparent about our choices, we might be able to close the gap of misunderstanding. The trickier part, in our perspective, is dealing with academic territorialism. Here, we recommend shifting the debate to a meta-level by making clear the different perspectives from which scholars argue and criticise. We also think that it makes little sense to waste too much energy trying to convert passionate ambassadors of orthodox approaches to an ABM perspective.

Figure 1. Dealing with criticism on Social ABM: The two sources of rejective criticism and proposed ways of dealing with them.

- 5.4

- We consider it very important to engage actively in debates about the usefulness of the agent-based approach in general and of our simulation models in particular. We have to be aware that what we do is new and unorthodox to others, and address this. We do not want to act as missionaries, but we want to invite others to take a walk out of the box, so that they understand and value our contribution.

- 5.5

- As young socsim scholars, we realise that finding our 'story' to communicate with our discipline peers can be very frustrating. Over the years, all of us have developed, or will develop, our own 'defence shields' to deal with the typical criticism. For newcomers to the social simulation community, this certainly is a tedious road.

- 5.6

- The main reason for writing this contribution is that we hope to initiate a broader discussion and learn from each other. Besides sharing our own experiences and thoughts on (rejective) criticism, we are eager to hear the stories of other socsim peers, newcomers and established researchers alike. This way, we can support each other in finding the right arguments and ways of communication. Do you agree or disagree with our interpretations? Do you want to add arguments or share your experiences? All responses and discussions are welcome.

Acknowledgements

- We would like to thank the survey respondents from the SIMSOC mailing list for sharing their experiences and reflections. Furthermore, special thanks go out to the discussion peer group simsoc@work (now called local ESSA@work DE/NL). The experiences and discussions in this interdisciplinary setting enriched our thoughts about interdisciplinary communication and social ABM.

Notes

1 socsim = Social simulation

2 Throughout the paper, we use the words feedback, criticism and scepticism interchangeably in their neutral meaning. Which of them we use in a given place depends on which we felt best fitted the context of the interaction. Feedback reflects the general notion of interaction, whereas criticism and scepticism reflect interactions that involve questioning the research under discussion.

3 This does not mean asking the other person to 'clarify what you didn't understand'. That is not useful, as the other person might not even be aware that he or she did not understand something. It is you, as the presenter, who can ask the questions that allow you to grasp what the other did not understand.

4 See Abbott (2004) for a detailed overview of the basic debates, critique and typical responses.

5 This notion is adapted from Abbott (2004, p. 60), who reflects on the different cycles of critique and how they complicate the methodological landscape.

References

ABBOTT, A. D. (2004). Methods of Discovery: Heuristics for the Social Sciences. New York, NY: Norton.

AXELROD, R. (2006). Agent-based modeling as a bridge between disciplines. In L. Tesfatsion & K. L. Judd (Eds.), Handbook of computational economics, volume 2 (pp. 1565-1584). Amsterdam: Elsevier.

AXTELL, R. (2000). Why agents? On the varied motivations for agent computing in the social sciences. Working paper no. 17. Center on Social and Economic Dynamics, Washington, DC.

CONTE, R. (2009). From simulation to theory (and backward). In F. Squazzoni (Ed.), Epistemological aspects of computer simulation in the social sciences (pp. 29-47). Berlin: Springer. [doi:10.1007/978-3-642-01109-2_3]

EPSTEIN, J. M. (2008). Why model? Journal of Artificial Societies and Social Simulation, 11(4), 12. https://www.jasss.org/11/4/12.html

GRIMM, V., Berger, U., DeAngelis, D. L., Polhill, J. G., Giske, J., & Railsback, S. F. (2010). The ODD protocol: A review and first update. Ecological Modelling, 221, 2760-2768. [doi:10.1016/j.ecolmodel.2010.08.019]

GRIMM, V., Berger, U., Bastiansen, F., Eliassen, S., Ginot, V., Giske, J., Goss-Custard, J., Grand, T., Heinz, S. K., Huse, G., Huth, A., Jepsen, J. U., et al. (2006). A standard protocol for describing individual-based and agent-based models. Ecological Modelling, 198, 115-126. [doi:10.1016/j.ecolmodel.2006.04.023]

HOLLAND, J. H. (1998). Emergence: From chaos to order. Oxford, UK: Oxford University Press.

LORSCHEID, I., Heine, B.-O., & Meyer, M. (2012). Opening the 'black box' of simulations: Increased transparency and effective communication through the systematic design of experiments. Computational and Mathematical Organization Theory, 18(1), 22-62. [doi:10.1007/s10588-011-9097-3]

MACY, M. W. & Willer, R. (2002). From factors to actors: Computational sociology and agent-based modeling. Annual Review of Sociology, 28, 143-166. [doi:10.1146/annurev.soc.28.110601.141117]

MILLER, J. H. & Page, S. E. (2007). Complex adaptive systems: An introduction to computational models of social life. Princeton, NJ: Princeton University Press.

SANCHEZ, S. M. (2007). Work smarter, not harder: Guidelines for designing simulation experiments. In S. G. Henderson, B. Biller, M.-H. Hsieh, J. Shortle, J. Tew, & R. Barton (Eds.), WSC '07: Proceedings of the 39th Winter Simulation Conference (pp. 84-94). Piscataway, NJ: IEEE Press. [doi:10.1109/wsc.2007.4419591]

SQUAZZONI, F. (2010). The impact of agent-based models in the social sciences after 15 years of incursions. History of Economic Ideas, 18(2), 197–233.