Tibor Bosse and Jan Treur (2006)

Formal Interpretation of a Multi-Agent Society As a Single Agent

Journal of Artificial Societies and Social Simulation

vol. 9, no. 2

<https://www.jasss.org/9/2/6.html>


Received: 12-Sep-2005 Accepted: 01-Mar-2006 Published: 31-Mar-2006

Abstract

In formalised TTL form it looks as follows: 'in any trace γ, if at any point in time t1 the agent A observes that it is raining, then there exists a time point t2 after t1 such that at t2 in the trace the agent A believes that it is raining'.
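The quoted property can be read as a check over a single trace. The sketch below is a minimal illustration, not part of any TTL tooling. It assumes a finite trace encoded as a Python list of states, one per integer time point, with each state a set of atom strings; this encoding and the atom strings are hypothetical. On a finite trace an observation at the last time point can never be followed by a belief, so this check is stricter than the reading over unbounded traces.

```python
from typing import List, Set

Trace = List[Set[str]]  # hypothetical encoding: state(γ, t) for t = 0, 1, 2, ...

OBS = "observes(A, raining)"
BEL = "belief(A, raining)"


def holds(trace: Trace, t: int, atom: str) -> bool:
    """state(γ, t) |= atom, for a state encoded as a set of atoms."""
    return atom in trace[t]


def belief_follows_observation(trace: Trace) -> bool:
    """For every t1 at which A observes rain, some t2 > t1 has the belief."""
    return all(
        any(holds(trace, t2, BEL) for t2 in range(t1 + 1, len(trace)))
        for t1 in range(len(trace))
        if holds(trace, t1, OBS)
    )


print(belief_follows_observation([{OBS}, set(), {BEL}]))  # True
print(belief_follows_observation([set(), {BEL}, {OBS}]))  # False
```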

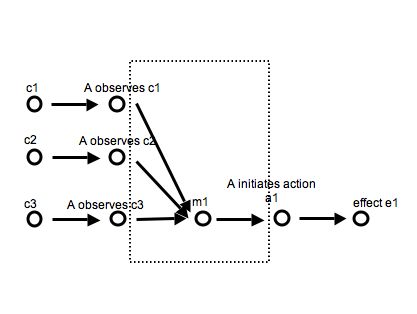

For a precise definition of the leads to format in terms of the language TTL, see Jonker et al. (2003). Specifying dynamic properties in leads to format has two advantages: such a specification is executable, and it can often easily be depicted graphically.

A dynamic property α →→e, f, g, h β, where α and β are state properties, expresses the following:

- if state property α holds for a certain time interval with duration g,
- then, after some delay (between e and f), state property β will hold for a certain time interval of length h.
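The leads to semantics can be illustrated on a discrete timeline. The sketch below is an illustration under simplifying assumptions: integer time steps, α and β represented as lists of booleans, and a single fixed delay d with e ≤ d ≤ f. The function name `leads_to` is hypothetical and is not the API of the LEADSTO environment (Bosse et al. 2005).

```python
from typing import List, Optional


def leads_to(alpha: List[bool], e: int, f: int, g: int, h: int,
             delay: Optional[int] = None) -> List[bool]:
    """Derive a timeline for beta from a timeline for alpha.

    Whenever alpha has held on the whole interval [t1 - g, t1), beta is made
    true on [t1 + d, t1 + d + h), using one fixed delay d with e <= d <= f.
    """
    d = e if delay is None else delay
    if not e <= d <= f:
        raise ValueError("delay must lie between e and f")
    if g < 1 or h < 1:
        raise ValueError("durations g and h must be positive")
    beta = [False] * len(alpha)
    for t1 in range(g, len(alpha) + 1):
        if all(alpha[t1 - g:t1]):               # alpha held for duration g
            for t in range(t1 + d, min(t1 + d + h, len(alpha))):
                beta[t] = True                  # beta holds for duration h
    return beta


alpha = [False, True, True, False, False, False, False, False]
print(leads_to(alpha, e=1, f=2, g=2, h=2))
# [False, False, False, False, True, True, False, False]
```

The general definition allows any delay within [e, f]. Choosing the delay non-deterministically would only require drawing d per application instead of fixing it.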

|

| Figure 1. Single Agent behaviour based on an internal mental state |

|

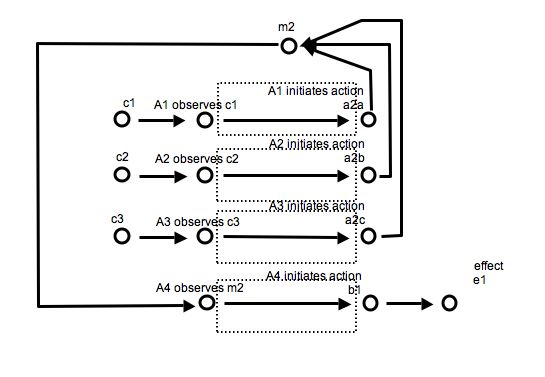

| Figure 2. Multi-Agent behaviour based on a shared external mental state |

To create this shared mental state property, actions a2a, a2b, a2c of the agents A1, A2, A3 are needed, and to produce the behaviour, an observation of m2 by agent A4 is needed first. Note that here the internal processing of each agent is kept as simple as possible: stimulus-response. Hence, the agents are assumed not to have any internal states. This is in line with the ideas of Clark and Chalmers, who claim that the explanation of cognitive processes should be as simple as possible (Clark and Chalmers 1998). However, the interaction between the agents and the external world is somewhat more complex: compared to the single agent perspective with internal mental state m1, extra actions of some of the agents are needed to create the external mental state property m2, and additional observations are needed to observe it.

External world state properties

φ: c1 → c1Observation state properties

φ: c2 → c2

φ: c3 → c3

φ: effect e1 → effect e1

φ: A observes c1 → A1 observes c1Action initiation state properties

φ: A observes c2 → A2 observes c2

φ: A observes c3 → A3 observes c3

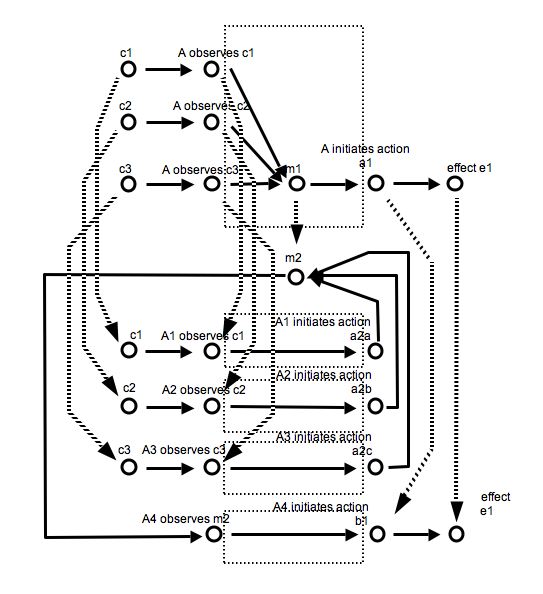

φ: A initiates action a1 → A4 initiates action b1Mental state property to external world state property

φ: m1 → m2

|

| Figure 3. Mapping from individual mental state to shared extended mind |

Note that in this case, for simplicity it is assumed that each observation of A is an observation of exactly one of the Ai, and the same for actions.

If, as we confront some task, a part of the world functions as a process which, were it done in the head, we would have no hesitation in recognizing as part of the cognitive process, then that part of the world is (so we claim) part of the cognitive process. Cognitive processes ain't (all) in the head! (…) (Clark and Chalmers 1998, Section 2).

One can explain my choice of words in Scrabble, for example, as the outcome of an extended cognitive process involving the rearrangement of tiles on my tray. Of course, one could always try to explain my action in terms of internal processes and a long series of "inputs" and "actions", but this explanation would be needlessly complex. If an isomorphic process were going on in the head, we would feel no urge to characterize it in this cumbersome way. (…) In a very real sense, the re-arrangement of tiles on the tray is not part of action; it is part of thought. (Clark and Chalmers 1998, Section 3).

Clark and Chalmers (1998) use the isomorphic relation to a process 'in the head' as one of the criteria for considering external and interaction processes as cognitive, or mind, processes. As the shared mental state property m2 is modelled as an external state property, this principle is formalised in Figure 3. Note that the process from m1 to action a1, modelled as one step in the single agent, internal case, is mapped onto a process from m2 via A4 observes m2 to A4 initiates action b1, modelled as a two-step process in the multi-agent, external case. So the mapping is an isomorphic embedding in one direction, not a bidirectional isomorphism, simply because on the multi-agent side the observation state for A4 observing m2 has no counterpart in the single agent, internal case (and the same holds for the agents A1, A2, A3 initiating actions a2a, a2b, a2c).
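The embedding claim can be checked mechanically. The sketch below encodes the causal chains of Figures 1 and 2 as directed graphs, following their description in the text. The exact arrows drawn in the figures are an assumption. The mapping φ of Figure 3 is encoded as a dictionary. The sketch checks three things: that φ is injective, that every one-step link in the single agent case becomes a possibly longer path in the multi-agent case, and which multi-agent states have no counterpart.

```python
from collections import deque
from typing import Dict, List, Set

Graph = Dict[str, List[str]]

# Single agent (Figure 1), as described in the text: observations -> m1 -> a1 -> e1.
single: Graph = {
    "c1": ["A observes c1"], "c2": ["A observes c2"], "c3": ["A observes c3"],
    "A observes c1": ["m1"], "A observes c2": ["m1"], "A observes c3": ["m1"],
    "m1": ["A initiates action a1"],
    "A initiates action a1": ["effect e1"],
}

# Multi-agent (Figure 2): A1..A3 act to create m2; A4 observes m2 and acts.
multi: Graph = {
    "c1": ["A1 observes c1"], "c2": ["A2 observes c2"], "c3": ["A3 observes c3"],
    "A1 observes c1": ["A1 initiates action a2a"],
    "A2 observes c2": ["A2 initiates action a2b"],
    "A3 observes c3": ["A3 initiates action a2c"],
    "A1 initiates action a2a": ["m2"], "A2 initiates action a2b": ["m2"],
    "A3 initiates action a2c": ["m2"],
    "m2": ["A4 observes m2"],
    "A4 observes m2": ["A4 initiates action b1"],
    "A4 initiates action b1": ["effect e1"],
}

# The mapping phi of Figure 3.
phi: Dict[str, str] = {
    "c1": "c1", "c2": "c2", "c3": "c3", "effect e1": "effect e1",
    "A observes c1": "A1 observes c1", "A observes c2": "A2 observes c2",
    "A observes c3": "A3 observes c3",
    "A initiates action a1": "A4 initiates action b1",
    "m1": "m2",
}


def reachable(g: Graph, src: str, dst: str) -> bool:
    """Breadth-first search: is there a directed path from src to dst?"""
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for nxt in g.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def nodes(g: Graph) -> Set[str]:
    return set(g) | {n for succ in g.values() for n in succ}


injective = len(set(phi.values())) == len(phi)
# Each one-step link x -> y in the single agent case must become a path phi(x) ->* phi(y).
preserves_links = all(reachable(multi, phi[x], phi[y])
                      for x, succ in single.items() for y in succ)
unmatched = sorted(nodes(multi) - set(phi.values()))
print(injective, preserves_links)  # True True
print(unmatched)
```

The states listed in `unmatched` are the action initiations of A1, A2, A3 and the observation of m2 by A4. These are the states for which the text notes the absence of a single agent counterpart.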

|

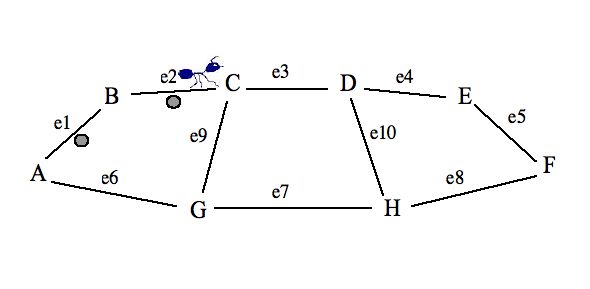

| Figure 4. An ant world |

Table 1: Multi-Agent conceptualisation: state properties

| Multi-Agent Conceptualisation | Formalisation |
| --- | --- |
| body positions in world: | |
| pheromone level at edge e is i | pheromones_at(e, i) |
| ant a is at location l coming from e | is_at_location_from(a, l, e) |
| ant a is at edge e to l2 coming from location l1 | is_at_edge_from_to(a, e, l1, l2) |
| ant a is carrying food | is_carrying_food(a) |
| world state properties: | |
| edge e connects location l1 and l2 | connected_to_via(l1, l2, e) |
| location l has i neighbours | neighbours(l, i) |
| edge e is most attractive for ant a coming from location l | attractive_direction_at(a, l, e) |
| input state properties: | |
| ant a observes that it is at location l coming from edge e | observes(a, is_at_location_from(l, e)) |
| ant a observes that it is at edge e to l2 coming from location l1 | observes(a, is_at_edge_from_to(e, l1, l2)) |
| ant a observes that edge e has pheromone level i | observes(a, pheromones_at(e, i)) |
| output state properties: | |
| ant a initiates action to go to edge e to l2 coming from location l1 | to_be_performed(a, go_to_edge_from_to(e, l1, l2)) |
| ant a initiates action to go to location l coming from edge e | to_be_performed(a, go_to_location_from(l, e)) |
| ant a initiates action to drop pheromones at edge e coming from location l | to_be_performed(a, drop_pheromones_at_edge_from(e, l)) |
| ant a initiates action to pick up food | to_be_performed(a, pick_up_food) |
| ant a initiates action to drop food | to_be_performed(a, drop_food) |

Table 2: Single Agent conceptualisation: state properties

| Single Agent Conceptualisation | Description |
| --- | --- |
| mental state properties: | |
| belief(S, relevance_level(e, i)) | belief on the relevance level i of an edge e |
| body position in world: | |
| has_paw_at_location_from(S, p, l, e) | position of paw p at location l coming from edge e |
| has_paw_at_edge_from_to(S, p, e, l1, l2) | position of paw p at edge e to l2 coming from location l1 |
| is_carrying_food_with_paw(S, p) | paw p is carrying food |
| world state properties: | |
| connected_to_via(l1, l2, e) | edge e connects location l1 and l2 |
| neighbours(l, i) | location l has i neighbours |
| attractive_direction_at(p, l, e) | edge e is most attractive for paw p coming from location l |
| input state properties: | |
| observes(S, has_paw_at_location_from(p, l, e)) | S observes that paw p is at location l coming from edge e |
| observes(S, has_paw_at_edge_from_to(p, e, l1, l2)) | S observes that paw p is at edge e to l2 coming from location l1 |
| output state properties: | |
| to_be_performed(S, move_paw_to_edge_from_to(p, e, l1, l2)) | S initiates action to move paw p from location l1 to edge e to l2 |
| to_be_performed(S, move_paw_to_location_from(p, l, e)) | S initiates action to move paw p from edge e to location l |
| to_be_performed(S, pick_up_food_with_paw(p)) | S initiates action to pick up food with paw p |
| to_be_performed(S, drop_food_with_paw(p)) | S initiates action to drop food with paw p |

Figure 5. Two conceptualisations and their mapping.

Given these one-to-one correspondences, a mapping from the single agent conceptualisation to the multi-agent conceptualisation can be made as follows:

Table 3: Mapping between state properties

| Single Agent Conceptualisation | Multi-Agent Conceptualisation |
| --- | --- |
| belief(S, relevance_level(e, i)) | pheromones_at(e, i) |
| has_paw_at_location_from(S, p, l, e) | is_at_location_from(a, l, e) |
| has_paw_at_edge_from_to(S, p, e, l1, l2) | is_at_edge_from_to(a, e, l1, l2) |
| is_carrying_food_with_paw(S, p) | is_carrying_food(a) |
| connected_to_via(l1, l2, e) | connected_to_via(l1, l2, e) |
| neighbours(l, i) | neighbours(l, i) |
| attractive_direction_at(p, l, e) | attractive_direction_at(a, l, e) |
| observes(S, has_paw_at_location_from(p, l, e)) | observes(a, is_at_location_from(l, e)) |
| observes(S, has_paw_at_edge_from_to(p, e, l1, l2)) | observes(a, is_at_edge_from_to(e, l1, l2)) |
| --- | observes(a, pheromones_at(e, i)) |
| to_be_performed(S, move_paw_to_edge_from_to(p, e, l1, l2)) | to_be_performed(a, go_to_edge_from_to(e, l1, l2)) |
| to_be_performed(S, move_paw_to_location_from(p, l, e)) | to_be_performed(a, go_to_location_from(l, e)) |
| --- | to_be_performed(a, drop_pheromones_at_edge_from(e, l)) |
| to_be_performed(S, pick_up_food_with_paw(p)) | to_be_performed(a, pick_up_food) |
| to_be_performed(S, drop_food_with_paw(p)) | to_be_performed(a, drop_food) |
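Table 3 can be read as a function on terms. The sketch below is a minimal version under two assumptions. First, state properties are encoded as nested Python tuples ("functor", arg1, …). Second, paws and ants correspond by a hypothetical naming, paw1 ↔ ant1, paw2 ↔ ant2, paw3 ↔ ant3; the appendices use these names but do not state this pairing. The two multi-agent rows marked "---" have no single agent source, so this function never produces them.

```python
from typing import Dict, Tuple, Union

Term = Union[str, int, float, Tuple]  # a name, a number, or ("functor", arg1, ..., argn)

# Hypothetical correspondence between the paws of S and the individual ants.
PAW_TO_ANT: Dict[str, str] = {"paw1": "ant1", "paw2": "ant2", "paw3": "ant3"}

# Functor renamings read off Table 3 (the paw argument is dropped or moved out).
RENAME: Dict[str, str] = {
    "has_paw_at_location_from": "is_at_location_from",
    "has_paw_at_edge_from_to": "is_at_edge_from_to",
    "move_paw_to_edge_from_to": "go_to_edge_from_to",
    "move_paw_to_location_from": "go_to_location_from",
    "pick_up_food_with_paw": "pick_up_food",
    "drop_food_with_paw": "drop_food",
}


def ant(p: str) -> str:
    """Map a paw name to an ant name; other names are left unchanged."""
    return PAW_TO_ANT.get(p, p)


def phi(term: Term) -> Term:
    """Map a single agent state property to its multi-agent counterpart."""
    if not isinstance(term, tuple):
        return ant(term) if isinstance(term, str) else term
    functor, *args = term
    if functor == "belief":  # belief(S, relevance_level(e, i)) -> pheromones_at(e, i)
        _, e, i = args[1]
        return ("pheromones_at", e, i)
    if functor in ("has_paw_at_location_from", "has_paw_at_edge_from_to"):
        _, p, *rest = args  # drop S and turn paw p into ant a
        return (RENAME[functor], ant(p), *rest)
    if functor == "is_carrying_food_with_paw":
        return ("is_carrying_food", ant(args[1]))
    if functor in ("observes", "to_be_performed"):  # (S, inner(p, ...))
        inner_functor, p, *rest = args[1]
        inner = (RENAME[inner_functor], *rest) if rest else RENAME[inner_functor]
        return (functor, ant(p), inner)
    # connected_to_via, neighbours and attractive_direction_at keep their functor.
    return (functor, *(phi(a) for a in args))


print(phi(("belief", "S", ("relevance_level", "E3", 0.5))))
# ('pheromones_at', 'E3', 0.5)
print(phi(("to_be_performed", "S", ("pick_up_food_with_paw", "paw2"))))
# ('to_be_performed', 'ant2', 'pick_up_food')
```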

Formalisation: observes(a, is_at_location_from(l, e0)) and neighbours(l, 3) and connected_to_via(l, l1, e1) and observes(a, pheromones_at(e1, i1)) and connected_to_via(l, l2, e2) and observes(a, pheromones_at(e2, i2)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 and i1 > i2 → to_be_performed(a, go_to_edge_from_to(e1, l, l1))

to_be_performed(a, go_to_edge_from_to(e, l, l1)) → is_at_edge_from_to(a, e, l, l1)

observes(a, is_at_edge_from_to(e, l, l1)) → to_be_performed(a, drop_pheromones_at_edge_from(e, l))

is_at_location_from(a, l, e0) and connected_to_via(l, l1, e1) and pheromones_at(e1, i) → observes(a, pheromones_at(e1, i))

to_be_performed(a1, drop_pheromones_at_edge_from(e, l1)) and ∀l2 not to_be_performed(a2, drop_pheromones_at_edge_from(e, l2)) and ∀l3 not to_be_performed(a3, drop_pheromones_at_edge_from(e, l3)) and a1 ≠ a2 and a1 ≠ a3 and a2 ≠ a3 and pheromones_at(e, i) → pheromones_at(e, i*decay+incr)

observes(a, is_at_location_from(F, e)) → to_be_performed(a, pick_up_food)

observes(S, has_paw_at_location_from(p, l, e0)) and neighbours(l, 3) and connected_to_via(l, l1, e1) and belief(S, relevance_level(e1, i1)) and connected_to_via(l, l2, e2) and belief(S, relevance_level(e2, i2)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 and i1 > i2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, l, l1))

to_be_performed(S, move_paw_to_edge_from_to(p, e, l, l1)) → has_paw_at_edge_from_to(S, p, e, l, l1)

observes(S, has_paw_at_edge_from_to(p1, e, l, l1)) and ∀l2 not observes(S, has_paw_at_edge_from_to(p2, e, l, l2)) and ∀l3 not observes(S, has_paw_at_edge_from_to(p3, e, l, l3)) and p1 ≠ p2 and p1 ≠ p3 and p2 ≠ p3 and belief(S, relevance_level(e, i)) → belief(S, relevance_level(e, i*decay+incr))

observes(S, has_paw_at_location_from(p, F, e)) → to_be_performed(S, pick_up_food_with_paw(p))
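The paired rules above can be sketched side by side: pheromone reinforcement on the multi-agent side, belief reinforcement on the single agent side, and the edge choice at a location with three neighbours. This is an illustration under stated assumptions. The values of decay and incr are invented, as is the scenario. The update generalises the one-, two- and three-ant rules of the appendices to any number k of droppers, with k = 0 giving plain decay. Once ants are renamed to paws, both sides produce the same levels, which is the correspondence between pheromones_at(e, i) and belief(S, relevance_level(e, i)) in Table 3.

```python
from typing import Dict, Optional, Set, Tuple

DECAY, INCR = 0.5, 1.0  # illustrative values; the text leaves decay and incr unspecified


def pheromone_step(levels: Dict[str, float],
                   drops: Set[Tuple[str, str]]) -> Dict[str, float]:
    """Multi-agent rules. drops holds (ant, edge) pairs for
    to_be_performed(a, drop_pheromones_at_edge_from(e, l)). With k droppers on
    edge e the new level is i*decay + k*incr; k = 0 gives plain decay."""
    return {e: i * DECAY + INCR * sum(1 for _, d in drops if d == e)
            for e, i in levels.items()}


def belief_step(beliefs: Dict[str, float],
                seen: Set[Tuple[str, str]]) -> Dict[str, float]:
    """Single agent rules. seen holds (paw, edge) pairs for
    observes(S, has_paw_at_edge_from_to(p, e, l, l1)); same arithmetic."""
    return {e: i * DECAY + INCR * sum(1 for _, d in seen if d == e)
            for e, i in beliefs.items()}


def choose_edge(levels: Dict[str, float], e1: str, e2: str) -> Optional[str]:
    """At a three-neighbour location, go to the edge with the higher level.
    Ties are covered by the attractive_direction_at rules (not modelled here)."""
    if levels[e1] > levels[e2]:
        return e1
    if levels[e2] > levels[e1]:
        return e2
    return None


# Hypothetical scenario: for each step, which individual is on which edge.
scenario = [{("ant1", "E1"), ("ant2", "E1"), ("ant3", "E2")}, {("ant1", "E1")}, set()]
paw_of = {"ant1": "paw1", "ant2": "paw2", "ant3": "paw3"}

pheromones = {"E1": 0.0, "E2": 0.0}
beliefs = {"E1": 0.0, "E2": 0.0}
for drops in scenario:
    pheromones = pheromone_step(pheromones, drops)
    beliefs = belief_step(beliefs, {(paw_of[a], e) for a, e in drops})

print(pheromones)                            # {'E1': 1.0, 'E2': 0.25}
print(beliefs == pheromones)                 # True
print(choose_edge(pheromones, "E1", "E2"))   # E1
```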

The complete sets of local properties used to model the example are shown in Appendix A (multi-agent case) and Appendix B (single agent case).

|

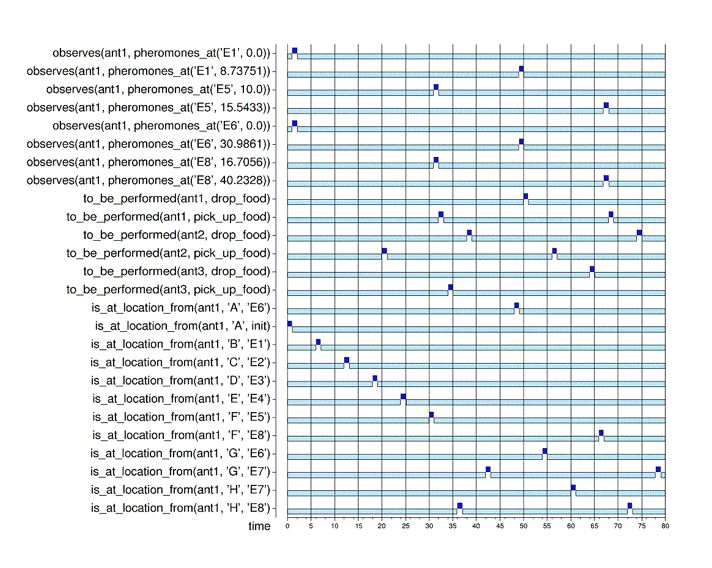

| Figure 6. Multi-Agent Simulation Trace |

|

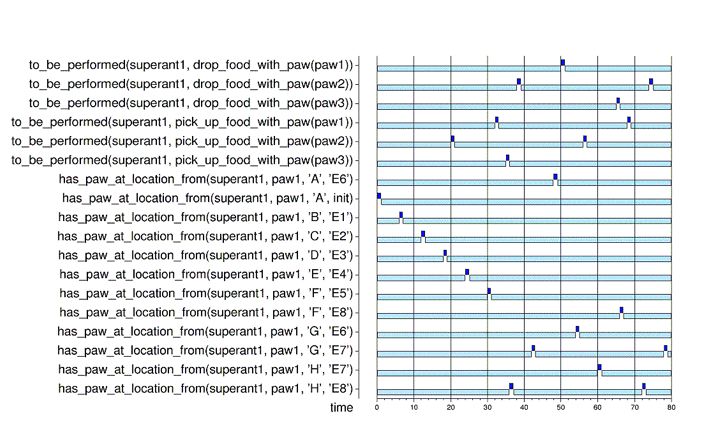

| Figure 7. Single Agent Simulation Trace |

A mapping between all local dynamic properties (in leads to format) of the case study is given in Table 4. Notice that in some cases a dynamic property is mapped to a dynamic property that is not literally present in the multi-agent model, but is a combination of two other local properties that are present in it. This shows where the single agent conceptualisation is simpler than the multi-agent conceptualisation.

For example, the mapping of LP6' yields LP6:

φ(LP6') =

φ(to_be_performed(S, move_paw_to_edge_from_to(p, e, l, l1)) → has_paw_at_edge_from_to(S, p, e, l, l1)) =

φ(to_be_performed(S, move_paw_to_edge_from_to(p, e, l, l1))) → φ(has_paw_at_edge_from_to(S, p, e, l, l1)) =

to_be_performed(a, go_to_edge_from_to(e, l, l1)) → is_at_edge_from_to(a, e, l, l1) =

LP6

Table 4: Mapping between local dynamic properties

| Single Agent Conceptualisation | Multi-Agent Conceptualisation |
| --- | --- |
| LP1' | LP1 |
| LP2' | LP2 |
| LP3' | LP3 |
| LP4' | LP4 |
| LP5' | LP5 & LP12 |
| LP6' | LP6 |
| LP7' | LP7 |
| LP8' | LP8 |
| LP9' | LP10 |
| LP10' | LP11 |
| LP11' | LP9 & LP13 |
| LP12' | LP14 |
| LP13' | LP15 |
| LP14' | LP16 |
| LP15' | LP17 |
| LP16' | LP9 & LP18 |

φ(A ⇒ B) = φ(A) ⇒ φ(B)

φ(not A) = not φ(A)

φ(∀v A(v)) = ∀v' φ(A(v'))

φ(∃v A(v)) = ∃v' φ(A(v'))

∃t,p,l,e [ state(γ, t) |= has_paw_at_location_from(S, p, l, e) & state(γ, t) |= food_location(l) ]

This expression is mapped as follows:

∃t,a,l,e [ state(γ, t) |= is_at_location_from(a, l, e) & state(γ, t) |= food_location(l) ]
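The rules for φ on formulas state that φ commutes with the connectives and quantifiers, so it can be extended compositionally from state properties to whole formulas. The sketch below is a minimal version under several assumptions. Formulas are encoded as nested tuples. The state-level mapping covers only the two state properties of the food example. The bound-variable renaming p ↦ a (the v ↦ v' of the quantifier rules) is a hypothetical choice that mirrors the mapped expression above.

```python
from typing import Callable, Tuple

# ("and"|"implies", A, B) | ("not", A) | ("forall"|"exists", vars, A) | ("holds", t, atom)
Formula = Tuple
AtomMap = Callable[[Tuple], Tuple]

VAR_MAP = {"p": "a"}  # hypothetical: the paw variable p becomes the ant variable a


def state_map(atom: Tuple) -> Tuple:
    """φ on state properties; only the atoms of the food example are covered."""
    if atom[0] == "has_paw_at_location_from":       # (S, p, l, e)
        _, _, p, l, e = atom
        return ("is_at_location_from", VAR_MAP.get(p, p), l, e)
    return atom                                     # e.g. food_location(l) is unchanged


def phi(f: Formula, m: AtomMap = state_map) -> Formula:
    """Extend φ compositionally over connectives and quantifiers."""
    op = f[0]
    if op == "holds":                               # state(γ, t) |= atom
        return ("holds", f[1], m(f[2]))
    if op == "not":
        return ("not", phi(f[1], m))
    if op in ("and", "implies"):
        return (op, phi(f[1], m), phi(f[2], m))
    if op in ("forall", "exists"):                  # rename bound variables v -> v'
        return (op, tuple(VAR_MAP.get(v, v) for v in f[1]), phi(f[2], m))
    raise ValueError(f"unknown connective {op!r}")


single = ("exists", ("t", "p", "l", "e"),
          ("and", ("holds", "t", ("has_paw_at_location_from", "S", "p", "l", "e")),
                  ("holds", "t", ("food_location", "l"))))
print(phi(single))
```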

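$$\max\{\, \#\{\langle P', t\rangle \mid \exists E\ \langle P', E, t\rangle \in \mathrm{CASES}(C)\} \mid P' \text{ product} \,\} = \#\{\langle P, t\rangle \mid \exists E\ \langle P, E, t\rangle \in \mathrm{CASES}(C)\}$$

$$\max\{\, \#\{E \mid \exists t\ \langle P', E, t\rangle \in \mathrm{CASES}(C)\} \mid P' \text{ product} \,\} = \#\{E \mid \exists t\ \langle P, E, t\rangle \in \mathrm{CASES}(C)\}$$

$$\max\{\, \sum_{t'} r^{t-t'}\, \#\{\langle P', t'\rangle \mid \exists E\ \langle P', E, t'\rangle \in \mathrm{CASES}(C)\} \mid P' \text{ product} \,\} = \sum_{t'} r^{t-t'}\, \#\{\langle P, t'\rangle \mid \exists E\ \langle P, E, t'\rangle \in \mathrm{CASES}(C)\}$$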
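Read literally, each of the three equations says that P attains the maximum, over all products P', of a score computed from CASES(C). The three scores are: the number of distinct time points at which the product occurs, the number of distinct E it occurs with, and a sum over those time points weighted by r^{t−t'}. The sketch below assumes CASES(C) is a finite set of triples ⟨P, E, t⟩ with integer t, and assumes 0 < r ≤ 1 (the text does not fix r). The case data and the function names are invented for illustration.

```python
from typing import Callable, Hashable, Set, Tuple

Case = Tuple[Hashable, Hashable, int]  # <P, E, t>


def times(cases: Set[Case], p: Hashable) -> Set[int]:
    """{ t | exists E: <p, E, t> in CASES(C) }"""
    return {t for (q, _, t) in cases if q == p}


def time_count(cases: Set[Case], p: Hashable) -> int:
    return len(times(cases, p))


def e_count(cases: Set[Case], p: Hashable) -> int:
    return len({e for (q, e, _) in cases if q == p})


def discounted(cases: Set[Case], p: Hashable, t_now: int, r: float) -> float:
    # For a fixed t' the set count is 0 or 1, so each time point of p adds r**(t_now - t').
    return sum(r ** (t_now - t) for t in times(cases, p))


def satisfies_max(cases: Set[Case], p: Hashable,
                  score: Callable[[Hashable], float]) -> bool:
    """Does p attain the maximum score? Products that never occur score 0,
    so it suffices to range over the products present in the cases."""
    products = {q for (q, _, _) in cases}
    return score(p) == max(score(q) for q in products)


cases = {("P1", "E1", 1), ("P1", "E2", 1), ("P1", "E1", 3),
         ("P2", "E3", 2), ("P2", "E4", 2), ("P2", "E5", 2)}
print(satisfies_max(cases, "P1", lambda q: time_count(cases, q)))           # True
print(satisfies_max(cases, "P2", lambda q: e_count(cases, q)))              # True
print(satisfies_max(cases, "P1", lambda q: discounted(cases, q, 3, 0.5)))   # True
```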

(…) we allow a more natural explanation of all sorts of actions. (…) in seeing cognition as extended one is not merely making a terminological decision; it makes a significant difference to the methodology of scientific investigation. In effect, explanatory methods that might once have been thought appropriate only for the analysis of "inner" processes are now being adapted for the study of the outer, and there is promise that our understanding of cognition will become richer for it. (Clark and Chalmers 1998, Section 3).

In Jonker et al. (2002) it is explained in some detail why, in various cases in other areas (such as Computer Science), such an antireductionist strategy often pays off. The advantages discussed there, in terms of insight, transparency and genericity, include the following: additional higher-level ontologies can improve understanding, as they may simplify the picture by abstracting from lower-level details; more insight is gained from a conceptually higher-level perspective; analysis of more complex processes becomes possible; and the same concepts have a wider scope of application, thus obtaining unification.

Predicting that someone will duck if you throw a brick at him is easy from the folk-psychological stance; it is and will always be intractable if you have to trace the photons from brick to eyeball, the neurotransmitters from optic nerve to motor nerve, and so forth. (Dennett 1991b, p. 42).

In this context, the perspective taken in the current paper can be viewed as an extension of the intentional stance, in which mental concepts are ascribed not only to single agents but also to processes that can be conceptualised as groups of agents. One difference from Dennett (1991b) is that the only type of mental state addressed in the current paper is belief. Nevertheless, we expect that our approach can be extended to ascribe other mental states (such as desires and intentions) to multi-agent societies as well; further research will have to confirm this.

$$T \vdash \alpha \;\Rightarrow\; T' \vdash \varphi(\alpha)$$

for all formulae α, where T is a logical theory of single agent behaviour and T' a theory of multi-agent behaviour. More specifically, suppose that a global property B is implied by a number of local properties A1, …, An, according to the following relation:

$$A_1 \mathbin{\&} \dots \mathbin{\&} A_n \Rightarrow B$$

Given this implication, the question to explore would be whether there is a similar relation available between the mapped properties, i.e., whether the following implication:

$$\varphi(A_1) \mathbin{\&} \dots \mathbin{\&} \varphi(A_n) \Rightarrow \varphi(B)$$

holds as well.

References

BOSSE, T., Jonker, C.M., Meij, L. van der, and Treur, J. (2005) LEADSTO: a Language and Environment for Analysis of Dynamics by SimulaTiOn. In: Eymann, T. et al. (eds.), Proceedings of the Third German Conference on Multi-Agent System Technologies, MATES'05. Lecture Notes in AI, vol. 3550. Springer Verlag, pp. 165-178.

CLARK, A. (1997) Being There: Putting Brain, Body and World Together Again. MIT Press.

CLARK, A. and Chalmers, D. (1998) The Extended Mind. In: Analysis, vol. 58, pp. 7-19.

DENEUBOURG, J.L., Aron, S., Goss, S., Pasteels, J.M., and Duerinck, G. (1986) Random Behavior, Amplification Processes and Number of Participants: How They Contribute to the Foraging Properties of Ants. In: Evolution, Games and Learning: Models for Adaptation in Machines and Nature, North Holland, Amsterdam, pp. 176-186.

DENNETT, D.C. (1991a) Consciousness Explained, Little, Brown: Boston, Massachusetts.

DENNETT, D.C. (1991b) Real Patterns. The Journal of Philosophy, vol. 88, pp. 27-51.

DENNETT, D.C. (1996) Kinds of Mind: Towards an Understanding of Consciousness, New York: Basic Books.

DROGOUL, A., Corbara, B., and Fresneau, D. (1995) MANTA: New experimental results on the emergence of (artificial) ant societies. In: Gilbert N. and Conte R. (eds.), Artificial Societies: the computer simulation of social life, UCL Press.

FODOR, J.A. (1983) The Modularity of Mind, Bradford Books, MIT Press: Cambridge, Massachusetts.

JONKER, C.M., Treur, J., and Wijngaards, W.C.A. (2002) Reductionist and Antireductionist Perspectives on Dynamics. Philosophical Psychology Journal, vol. 15, pp. 381-409.

JONKER, C.M., Treur, J., and Wijngaards, W.C.A. (2003) A Temporal Modelling Environment for Internally Grounded Beliefs, Desires and Intentions. Cognitive Systems Research Journal, vol. 4(3), pp. 191-210.

KIM, J. (1996) Philosophy of Mind. Westview Press.

KIRSH, D. and Maglio, P. (1994) On distinguishing epistemic from pragmatic action. Cognitive Science, vol. 18, pp. 513-549.

MENARY, R. (ed.). (2006) The Extended Mind, Papers presented at the Conference The Extended Mind - The Very Idea: Philosophical Perspectives on Situated and Embodied Cognition, University of Hertfordshire, 2001. John Benjamins, to appear.

Appendix A (multi-agent case)

start → pheromones_at(E1, 0.0) and pheromones_at(E2, 0.0) and pheromones_at(E3, 0.0) and pheromones_at(E4, 0.0) and pheromones_at(E5, 0.0) and pheromones_at(E6, 0.0) and pheromones_at(E7, 0.0) and pheromones_at(E8, 0.0) and pheromones_at(E9, 0.0) and pheromones_at(E10, 0.0)

start → is_at_location_from(ant1, A, init) and is_at_location_from(ant2, A, init) and is_at_location_from(ant3, A, init)

start → connected_to_via(A, B, l1) and … and connected_to_via(D, H, l10)

start → neighbours(A, 2) and … and neighbours(H, 3)

start → attractive_direction_at(ant1, A, E1) and … and attractive_direction_at(ant3, E, E5)

observes(a, is_at_location_from(A, e0)) and attractive_direction_at(a, A, e1) and connected_to_via(A, l1, e1) and observes(a, pheromones_at(e1, i1)) and connected_to_via(A, l2, e2) and observes(a, pheromones_at(e2, i2)) and e1 ≠ e2 and i1 = i2 → to_be_performed(a, go_to_edge_from_to(e1, A, l1))

observes(a, is_at_location_from(A, e0)) and connected_to_via(A, l1, e1) and observes(a, pheromones_at(e1, i1)) and connected_to_via(A, l2, e2) and observes(a, pheromones_at(e2, i2)) and i1 > i2 → to_be_performed(a, go_to_edge_from_to(e1, A, l1))

observes(a, is_at_location_from(F, e0)) and connected_to_via(F, l1, e1) and observes(a, pheromones_at(e1, i1)) and connected_to_via(F, l2, e2) and observes(a, pheromones_at(e2, i2)) and i1 > i2 → to_be_performed(a, go_to_edge_from_to(e1, F, l1))

observes(a, is_at_location_from(l, e0)) and neighbours(l, 2) and connected_to_via(l, l1, e1) and e0 ≠ e1 and l ≠ A and l ≠ F → to_be_performed(a, go_to_edge_from_to(e1, l, l1))

observes(a, is_at_location_from(l, e0)) and attractive_direction_at(a, l, e1) and neighbours(l, 3) and connected_to_via(l, l1, e1) and observes(a, pheromones_at(e1, 0.0)) and connected_to_via(l, l2, e2) and observes(a, pheromones_at(e2, 0.0)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 → to_be_performed(a, go_to_edge_from_to(e1, l, l1))

observes(a, is_at_location_from(l, e0)) and neighbours(l, 3) and connected_to_via(l, l1, e1) and observes(a, pheromones_at(e1, i1)) and connected_to_via(l, l2, e2) and observes(a, pheromones_at(e2, i2)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 and i1 > i2 → to_be_performed(a, go_to_edge_from_to(e1, l, l1))

to_be_performed(a, go_to_edge_from_to(e, l, l1)) → is_at_edge_from_to(a, e, l, l1)

is_at_edge_from_to(a, e, l, l1) → observes(a, is_at_edge_from_to(e, l, l1))

observes(a, is_at_edge_from_to(e, l, l1)) → to_be_performed(a, go_to_location_from(l1, e))

observes(a, is_at_edge_from_to(e, l, l1)) → to_be_performed(a, drop_pheromones_at_edge_from(e, l))

to_be_performed(a, go_to_location_from(l, e)) → is_at_location_from(a, l, e)

is_at_location_from(a, l, e) → observes(a, is_at_location_from(l, e))

is_at_location_from(a, l, e0) and connected_to_via(l, l1, e1) and pheromones_at(e1, i) → observes(a, pheromones_at(e1, i))

to_be_performed(a1, drop_pheromones_at_edge_from(e, l1)) and ∀l2 not to_be_performed(a2, drop_pheromones_at_edge_from(e, l2)) and ∀l3 not to_be_performed(a3, drop_pheromones_at_edge_from(e, l3)) and a1 ≠ a2 and a1 ≠ a3 and a2 ≠ a3 and pheromones_at(e, i) → pheromones_at(e, i*decay+incr)

to_be_performed(a1, drop_pheromones_at_edge_from(e, l1)) and to_be_performed(a2, drop_pheromones_at_edge_from(e, l2)) and ∀l3 not to_be_performed(a3, drop_pheromones_at_edge_from(e, l3)) and a1 ≠ a2 and a1 ≠ a3 and a2 ≠ a3 and pheromones_at(e, i) → pheromones_at(e, i*decay+incr+incr)

to_be_performed(a1, drop_pheromones_at_edge_from(e, l1)) and to_be_performed(a2, drop_pheromones_at_edge_from(e, l2)) and to_be_performed(a3, drop_pheromones_at_edge_from(e, l3)) and a1 ≠ a2 and a1 ≠ a3 and a2 ≠ a3 and pheromones_at(e, i) → pheromones_at(e, i*decay+incr+incr+incr)

observes(a, is_at_location_from(F, e)) → to_be_performed(a, pick_up_food)

to_be_performed(a, pick_up_food) → is_carrying_food(a)

observes(a, is_at_location_from(A, e)) and is_carrying_food(a) → to_be_performed(a, drop_food)

is_carrying_food(a) and not to_be_performed(a, drop_food) → is_carrying_food(a)

pheromones_at(e, i) and ∀a,l not to_be_performed(a, drop_pheromones_at_edge_from(e, l)) → pheromones_at(e, i*decay)

Appendix B (single agent case)

start → belief(S, relevance_level(E1, 0.0)) and belief(S, relevance_level(E2, 0.0)) and belief(S, relevance_level(E3, 0.0)) and belief(S, relevance_level(E4, 0.0)) and belief(S, relevance_level(E5, 0.0)) and belief(S, relevance_level(E6, 0.0)) and belief(S, relevance_level(E7, 0.0)) and belief(S, relevance_level(E8, 0.0)) and belief(S, relevance_level(E9, 0.0)) and belief(S, relevance_level(E10, 0.0))

start → has_paw_at_location_from(S, paw1, A, init) and has_paw_at_location_from(S, paw2, A, init) and has_paw_at_location_from(S, paw3, A, init)

start → connected_to_via(A, B, l1) and … and connected_to_via(D, H, l10)

start → neighbours(A, 2) and … and neighbours(H, 3)

start → attractive_direction_at(paw1, A, E1) and … and attractive_direction_at(paw3, E, E5)

observes(S, has_paw_at_location_from(p, A, e0)) and attractive_direction_at(p, A, e1) and connected_to_via(A, l1, e1) and belief(S, relevance_level(e1, i1)) and connected_to_via(A, l2, e2) and belief(S, relevance_level(e2, i2)) and e1 ≠ e2 and i1 = i2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, A, l1))

observes(S, has_paw_at_location_from(p, A, e0)) and connected_to_via(A, l1, e1) and belief(S, relevance_level(e1, i1)) and connected_to_via(A, l2, e2) and belief(S, relevance_level(e2, i2)) and i1 > i2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, A, l1))

observes(S, has_paw_at_location_from(p, F, e0)) and connected_to_via(F, l1, e1) and belief(S, relevance_level(e1, i1)) and connected_to_via(F, l2, e2) and belief(S, relevance_level(e2, i2)) and i1 > i2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, F, l1))

observes(S, has_paw_at_location_from(p, l, e0)) and neighbours(l, 2) and connected_to_via(l, l1, e1) and e0 ≠ e1 and l ≠ A and l ≠ F → to_be_performed(S, move_paw_to_edge_from_to(p, e1, l, l1))

observes(S, has_paw_at_location_from(p, l, e0)) and attractive_direction_at(p, l, e1) and neighbours(l, 3) and connected_to_via(l, l1, e1) and belief(S, relevance_level(e1, 0.0)) and connected_to_via(l, l2, e2) and belief(S, relevance_level(e2, 0.0)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, l, l1))

observes(S, has_paw_at_location_from(p, l, e0)) and neighbours(l, 3) and connected_to_via(l, l1, e1) and belief(S, relevance_level(e1, i1)) and connected_to_via(l, l2, e2) and belief(S, relevance_level(e2, i2)) and e0 ≠ e1 and e0 ≠ e2 and e1 ≠ e2 and i1 > i2 → to_be_performed(S, move_paw_to_edge_from_to(p, e1, l, l1))

to_be_performed(S, move_paw_to_edge_from_to(p, e, l, l1)) → has_paw_at_edge_from_to(S, p, e, l, l1)

has_paw_at_edge_from_to(S, p, e, l, l1) → observes(S, has_paw_at_edge_from_to(p, e, l, l1))

observes(S, has_paw_at_edge_from_to(p, e, l, l1)) → to_be_performed(S, move_paw_to_location_from(p, l1, e))

to_be_performed(S, move_paw_to_location_from(p, l, e)) → has_paw_at_location_from(S, p, l, e)

has_paw_at_location_from(S, p, l, e) → observes(S, has_paw_at_location_from(p, l, e))

observes(S, has_paw_at_edge_from_to(p1, e, l, l1)) and ∀l2 not observes(S, has_paw_at_edge_from_to(p2, e, l, l2)) and ∀l3 not observes(S, has_paw_at_edge_from_to(p3, e, l, l3)) and p1 ≠ p2 and p1 ≠ p3 and p2 ≠ p3 and belief(S, relevance_level(e, i)) → belief(S, relevance_level(e, i*decay+incr))

observes(S, has_paw_at_edge_from_to(p1, e, l, l1)) and observes(S, has_paw_at_edge_from_to(p2, e, l, l2)) and ∀l3 not observes(S, has_paw_at_edge_from_to(p3, e, l, l3)) and p1 ≠ p2 and p1 ≠ p3 and p2 ≠ p3 and belief(S, relevance_level(e, i)) → belief(S, relevance_level(e, i*decay+incr+incr))

observes(S, has_paw_at_edge_from_to(p1, e, l, l1)) and observes(S, has_paw_at_edge_from_to(p2, e, l, l2)) and observes(S, has_paw_at_edge_from_to(p3, e, l, l3)) and p1 ≠ p2 and p1 ≠ p3 and p2 ≠ p3 and belief(S, relevance_level(e, i)) → belief(S, relevance_level(e, i*decay+incr+incr+incr))

observes(S, has_paw_at_location_from(p, F, e)) → to_be_performed(S, pick_up_food_with_paw(p))

to_be_performed(S, pick_up_food_with_paw(p)) → is_carrying_food_with_paw(S, p)

observes(S, has_paw_at_location_from(p, A, e)) and is_carrying_food_with_paw(S, p) → to_be_performed(S, drop_food_with_paw(p))

is_carrying_food_with_paw(S, p) and not to_be_performed(S, drop_food_with_paw(p)) → is_carrying_food_with_paw(S, p)

belief(S, relevance_level(e, i)) and ∀p,l,l1 not observes(S, has_paw_at_edge_from_to(p, e, l, l1)) → belief(S, relevance_level(e, i*decay))


© Copyright Journal of Artificial Societies and Social Simulation, [2006]