MAS-SOC: a Social Simulation Platform Based on Agent-Oriented Programming

Journal of Artificial Societies and Social Simulation

vol. 8, no. 3

<https://www.jasss.org/8/3/7.html>


Received: 18-Jun-2005 Accepted: 18-Jun-2005 Published: 30-Jun-2005

Abstract


|

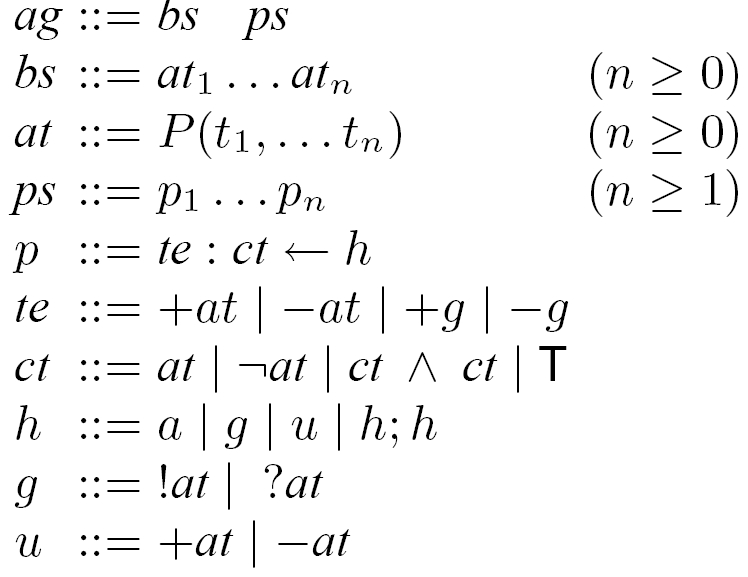

| Figure 1. Syntax of AgentSpeak(L). |

|

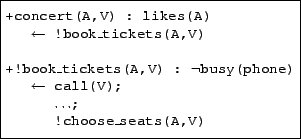

| Figure 2. Examples of AgentSpeak(L) plans. |

Figure 3. An Interpretation Cycle of an AgentSpeak Program (Machado 2002).

|

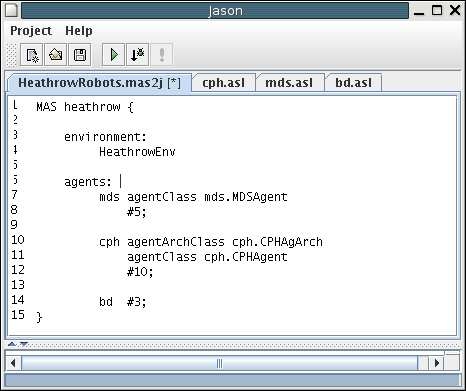

| Figure 4. Jason IDE. |

|

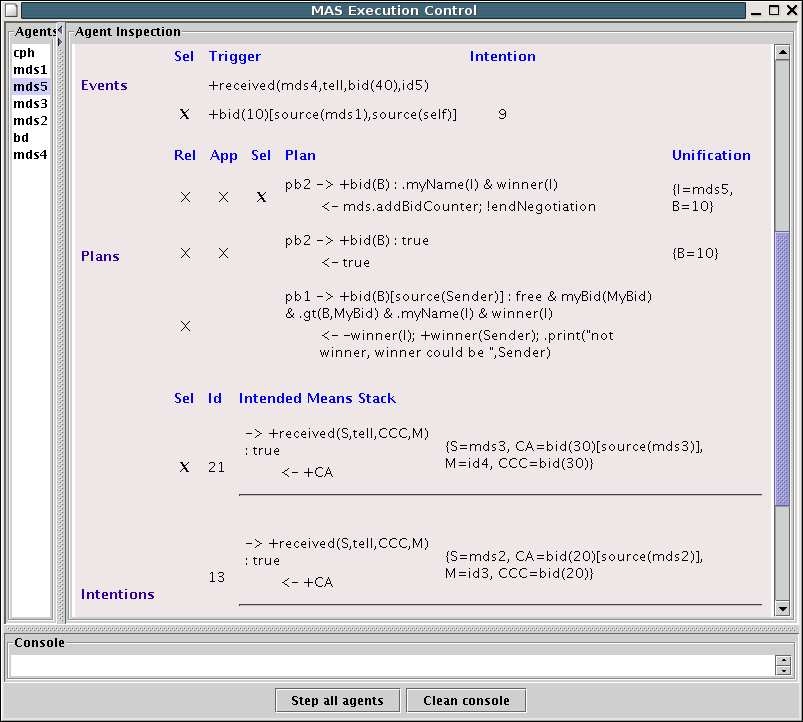

| Figure 5. Jason "Mind Inspector" Tool. |

The code sample below[4] defines an agent-body class named consumer, which has five integer attributes, one of which is initialised with a random value from 60 to 100. Agents of this class can execute three types of perception, which will be explained later, and six types of action. The constructor below places the agent in a random cell that satisfies the preconditions: the cell must not be a shop and must not already have an owner. After placement, the agent's home coordinates are set to that cell and the agent is recorded as the cell's owner.

<AGENT_BODY NAME="consumer">
  <INTEGER NAME = "money"> 1000 </INTEGER>
  <INTEGER NAME = "id"> "SELF" </INTEGER>
  <INTEGER NAME = "home_x">0 </INTEGER>
  <INTEGER NAME = "home_y">0</INTEGER>
  <INTEGER NAME = "stamina">
    <RANDOM MIN = "60" MAX = "100"/>
  </INTEGER>
  <PERCEPTIONS>
    <ITEM NAME = "see_products"/>
    <ITEM NAME = "self_info"/>
    <ITEM NAME = "verify_products"/>
  </PERCEPTIONS>
  <ACTIONS>
    <ITEM NAME = "walk_right"/>
    <ITEM NAME = "walk_left"/>
    <ITEM NAME = "walk_down"/>
    <ITEM NAME = "walk_up"/>
    <ITEM NAME = "buy"/>
    <ITEM NAME = "rest"/>
  </ACTIONS>
  <CONSTRUCTOR>
    <IN_RAND>
      <PRECONDITION>
        <EQUAL>
          <OPERAND>
            <CELL_ATT ATTRIBUTE = "shop">
              <X>"X"</X>
              <Y>"Y"</Y>
            </CELL_ATT>
          </OPERAND>
          <OPERAND> "FALSE" </OPERAND>
        </EQUAL>
        <EQUAL>
          <OPERAND>
            <CELL_ATT ATTRIBUTE = "owner">
              <X>"X"</X>
              <Y>"Y"</Y>
            </CELL_ATT>
          </OPERAND>
          <OPERAND> -1 </OPERAND>
        </EQUAL>
      </PRECONDITION>
      <ELEMENT NAME = "SELFCLASS">
        <INDEX>"SELF"</INDEX>
      </ELEMENT>
    </IN_RAND>
    <ASSIGN>
      <ELEMENT_ATT NAME = "SELFCLASS" ATTRIBUTE = "home_x" >
        <INDEX> "SELF" </INDEX>
      </ELEMENT_ATT>
      <EXPRESSION> "X"</EXPRESSION>
    </ASSIGN>
    <ASSIGN>
      <ELEMENT_ATT NAME = "SELFCLASS" ATTRIBUTE = "home_y" >
        <INDEX> "SELF" </INDEX>
      </ELEMENT_ATT>
      <EXPRESSION> "Y"</EXPRESSION>
    </ASSIGN>
    <ASSIGN>
      <CELL_ATT ATTRIBUTE = "owner" >
        <X>+0</X>
        <Y>+0</Y>
      </CELL_ATT>
      <EXPRESSION> "SELF"</EXPRESSION>
    </ASSIGN>
  </CONSTRUCTOR>
</AGENT_BODY>
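As a reading aid, the consumer constructor above can be mimicked in Python. This is a hypothetical sketch, not the ELMS interpreter. The grid is a plain dictionary keyed by (x, y) holding the two cell attributes declared in the DEFGRID element of this section (shop and owner, on a 10 by 10 grid), and the names `make_grid` and `construct_consumer` are invented for illustration. We also assume that IN_RAND picks uniformly among the cells satisfying the precondition and that the RANDOM bounds are inclusive.

```python
import random

# Grid size assumed from the DEFGRID element in this section (SIZEX=10, SIZEY=10, SIZEZ=1).
SIZE_X, SIZE_Y = 10, 10


def make_grid():
    """Create every cell with the grid's default attributes: shop=FALSE, owner=-1."""
    return {(x, y): {"shop": False, "owner": -1}
            for x in range(SIZE_X) for y in range(SIZE_Y)}


def construct_consumer(agent_id, grid, rng=random):
    """Hypothetical reading of the consumer CONSTRUCTOR (not the ELMS interpreter)."""
    # IN_RAND + PRECONDITION: candidate cells are non-shop cells without an owner.
    # Assumption: the random choice is uniform over all cells satisfying the precondition.
    candidates = [pos for pos, cell in grid.items()
                  if not cell["shop"] and cell["owner"] == -1]
    if not candidates:
        raise RuntimeError("no free, non-shop cell left for a new consumer")
    x, y = rng.choice(candidates)

    agent = {
        "id": agent_id,                    # "SELF"
        "money": 1000,
        "stamina": rng.randint(60, 100),   # RANDOM MIN="60" MAX="100" (bounds assumed inclusive)
        "home_x": x,                       # ASSIGN home_x := "X"
        "home_y": y,                       # ASSIGN home_y := "Y"
        "pos": (x, y),                     # the agent now stands on this cell
    }
    grid[(x, y)]["owner"] = agent_id       # ASSIGN owner of cell (+0, +0) := "SELF"
    return agent
```

Because each call marks its chosen cell as owned, consumers constructed one after another against the same grid end up with distinct home cells. Once no free, non-shop cell remains, the sketch simply raises an error.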

<ACTION NAME="rest">
  <PRECONDITION>
    <EQUAL>
      <OPERAND>
        <CELL_ATT ATTRIBUTE ="owner" >
          <X> +0</X>
          <Y> +0</Y>
          <Z> +0</Z>
        </CELL_ATT>
      </OPERAND>
      <OPERAND>
        <ELEMENT_ATT NAME = "SELFCLASS" ATTRIBUTE = "id" >
          <INDEX> "SELF" </INDEX>
        </ELEMENT_ATT>
      </OPERAND>
    </EQUAL>
  </PRECONDITION>
  <ASSIGN>
    <ELEMENT_ATT NAME = "consumer" ATTRIBUTE = "stamina" >
      <INDEX> "SELF" </INDEX>
    </ELEMENT_ATT>
    <EXPRESSION>
      <RANDOM MIN = "60" MAX = "100"/>
    </EXPRESSION>
  </ASSIGN>
</ACTION>

In the example above, an action named rest is defined. It takes no parameters, but its precondition requires the agent to be on its home cell, that is, the cell whose owner is the agent itself; otherwise, the action fails. If the precondition is satisfied, the agent's stamina attribute receives a random value between 60 and 100.
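The behaviour of rest can be sketched in the same hypothetical Python terms. An agent is a dictionary with id, pos and stamina entries, the grid maps (x, y) to a dictionary of cell attributes, and the relative coordinates +0 in the precondition are read as "the agent's current cell" (the Z coordinate is dropped because the grid has a single layer). The function name `rest` and its boolean success flag are assumptions made for illustration, not part of ELMS.

```python
import random


def rest(agent, grid, rng=random):
    """Hypothetical sketch of the ELMS 'rest' action.

    Returns True if the action succeeded, False if its precondition failed.
    """
    # PRECONDITION: owner of the cell at offset (+0, +0) must equal the agent's own id.
    if grid[agent["pos"]]["owner"] != agent["id"]:
        return False  # action fails; stamina is left unchanged
    # ASSIGN: stamina receives a fresh random value (bounds assumed inclusive).
    agent["stamina"] = rng.randint(60, 100)
    return True
```

An agent standing on a cell owned by someone else (or by nobody, owner -1) therefore gains nothing from resting, which is what pushes consumers back to their home cell.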

<DEFGRID SIZEX="10" SIZEY="10" SIZEZ="1">
  <BOOLEAN NAME = "shop"> "FALSE" </BOOLEAN>
  <INTEGER NAME = "owner"> -1 </INTEGER>
</DEFGRID>

Note that this corresponds to the (default) synchronous simulation mode. An asynchronous mode is also available.

|

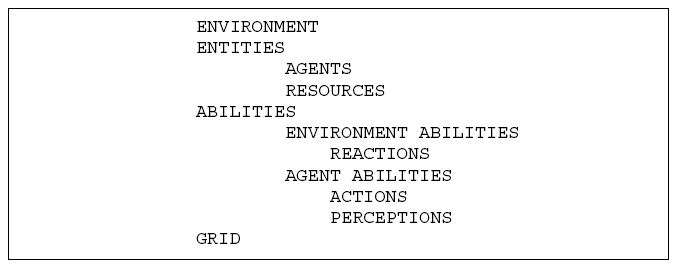

| Figure 6. Top-Ontology. |

|

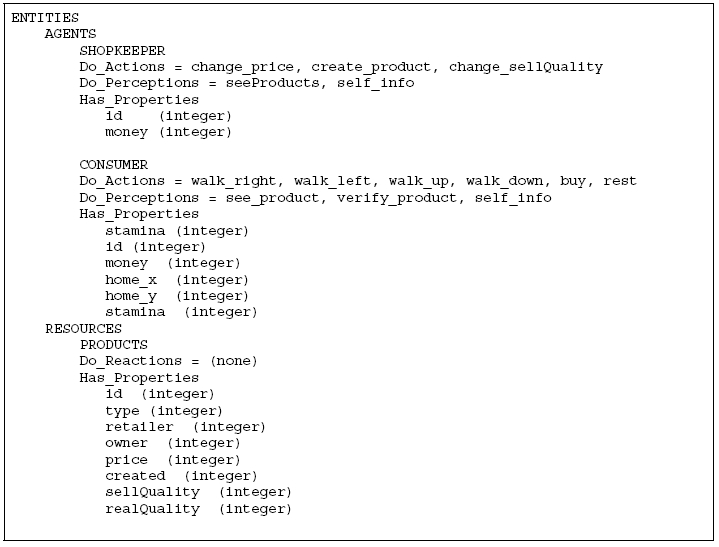

| Figure 7. Sample Ontology. |

|

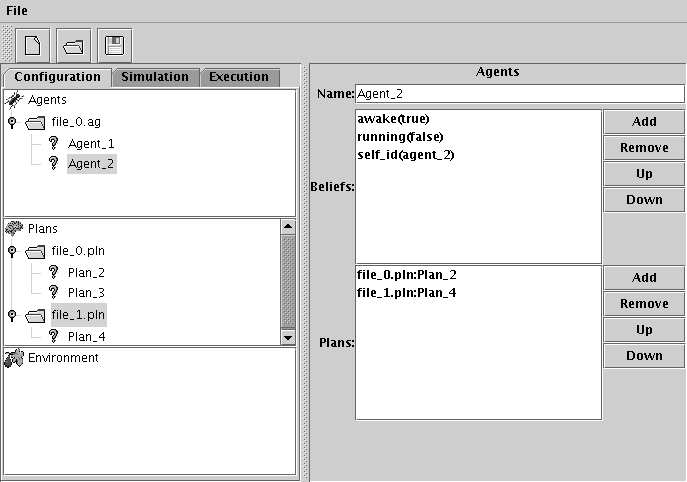

| Figure 8. MAS-SOC Manager GUI. |

<RESOURCE NAME="product">
  <INTEGER NAME = "id"> "SELF" </INTEGER>
  <INTEGER NAME = "type"> 0 </INTEGER>
  <INTEGER NAME = "retailer">-1</INTEGER>
  <INTEGER NAME = "owner">-1</INTEGER>
  <INTEGER NAME = "price"> 0 </INTEGER>
  <INTEGER NAME = "quality"> 0 </INTEGER>
  <INTEGER NAME = "realquality"> 0 </INTEGER>
  <INTEGER NAME = "created"> 0 </INTEGER>
</RESOURCE>

+!refreshBeliefs(NUM)
   : product(instance(INS),id(ID)) &
     product(instance(INS),realquality(RQ)) &
     product(instance(INS),sellquality(SQ)) &
     RQ >= SQ
  <- ?product(instance(INS),retailer(IDR));
     ?sellerEvaluation(IDR,Ev);
     .addB(Ev,X,NewEv);
     -sellerConcept(IDR,Ev);
     +sellerConcept(IDR,NewEv).
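The plan above can be paraphrased as an ordinary function to make its control flow explicit. The context selects a product instance whose real quality is at least its sellquality value, and the body then replaces the belief about that product's retailer. This is a hypothetical Python sketch, not Jason semantics. Beliefs are held in a dictionary of Python collections named after the predicates; the variable X is not bound anywhere in the plan as printed, so it is passed in as `increment`; and `.addB` is assumed, purely for illustration, to add its first two arguments. Only the first matching product is used, mirroring the selection of a single unifier for the context.

```python
def refresh_beliefs(beliefs, increment):
    """Hypothetical Python reading of the +!refreshBeliefs(NUM) plan.

    `increment` stands in for X, which the printed plan never binds;
    `.addB` is assumed here to compute NewEv = Ev + X.
    Returns False when the plan is not applicable or its body fails.
    """
    # Context: first product instance with RQ >= SQ (every product is assumed to carry an id).
    for attrs in beliefs["product"].values():
        if attrs["realquality"] >= attrs["sellquality"]:
            break
    else:
        return False  # no product satisfies the context: plan not applicable

    idr = attrs["retailer"]                      # ?product(instance(INS),retailer(IDR))
    if idr not in beliefs["sellerEvaluation"]:
        return False                             # test goal fails (treated as plan failure)
    ev = beliefs["sellerEvaluation"][idr]        # ?sellerEvaluation(IDR,Ev)
    new_ev = ev + increment                      # .addB(Ev,X,NewEv), assumed to be addition
    beliefs["sellerConcept"].discard((idr, ev))  # -sellerConcept(IDR,Ev)
    beliefs["sellerConcept"].add((idr, new_ev))  # +sellerConcept(IDR,NewEv)
    return True
```

Keeping sellerEvaluation and sellerConcept as separate collections follows the plan exactly as written, where one predicate is queried and the other is updated.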

[2] Available at http://jason.sourceforge.net.

[3] For this reason, a workshop series (held with AAMAS) has recently been created to discuss issues related specifically to multi-agent environments (Weyns et al. 2005).

[4] Part of the environment definition from the example shown in Section 8.

[5] Although the list of reactions is the same for all cells, this does not imply that all cells behave the same way at all times, as reactions can have preconditions on the specific state of individual cells.

[6] Agents send a message with "true" as its content if they choose not to execute an action in that cycle.

[7] http://protege.stanford.edu.

[8] http://www.w3.org/TR/owl-features/.

[9] In fact, this refers to types of agent, as each of these agent definitions may have several instances in a simulation.

ANCONA D, Mascardi V, Hübner J F, and Bordini R H (2004) Coo-AgentSpeak: Cooperation in AgentSpeak through plan exchange. In Jennings, N. R., Sierra, C., Sonenberg, L. and Tambe, M., eds., Proceedings of the Third International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS-2004), New York, NY, 19-23 July, 698-705. New York, NY: ACM Press.

BORDINI R H, and Moreira Á F (2002) Proving the asymmetry thesis principles for a BDI agent-oriented programming language. In Dix, J., Leite, J. A. and Satoh, K., eds., Proceedings of the Third International Workshop on Computational Logic in Multi-Agent Systems (CLIMA-02), 1st August, Copenhagen, Denmark, Electronic Notes in Theoretical Computer Science 70(5). Elsevier. URL: <http://www.elsevier.nl/locate/entcs/volume70.html>. CLIMA-02 was held as part of FLoC-02. This paper was originally published in Datalogiske Skrifter number 93, Roskilde University, Denmark, pages 94-108.

BORDINI R H, and Moreira Á F (2004) Proving BDI properties of agent-oriented programming languages: The asymmetry thesis principles in AgentSpeak(L). Annals of Mathematics and Artificial Intelligence 42(1-3):197-226. Special Issue on Computational Logic in Multi-Agent Systems.

BORDINI R H, Bazzan A L C, Jannone R O, Basso D M, Vicari R M, and Lesser V R (2002) AgentSpeak(XL): Efficient intention selection in BDI agents via decision-theoretic task scheduling. In Castelfranchi, C. and Johnson, W. L., eds., Proceedings of the First International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS-2002), 15-19 July, Bologna, Italy, 1294-1302. New York, NY: ACM Press.

BORDINI R H, Fisher M, Pardavila C, and Wooldridge M (2003a) Model checking AgentSpeak. In Rosenschein, J. S., Sandholm, T., Wooldridge, M. and Yokoo, M., eds., Proceedings of the Second International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS-2003), Melbourne, Australia, 14-18 July, 409-416. New York, NY: ACM Press.

BORDINI R H, Visser W, Fisher M, Pardavila C, and Wooldridge M (2003b) Model checking multi-agent programs with CASP. In Hunt Jr., W. A. and Somenzi, F., eds., Proceedings of the Fifteenth Conference on Computer-Aided Verification (CAV-2003), Boulder, CO, 8-12 July, number 2725 in Lecture Notes in Computer Science, 110-113. Berlin: Springer-Verlag. Tool description.

BORDINI R H, Okuyama F Y, de Oliveira D, Drehmer G, and Krafta R C (2004a) The MAS-SOC approach to multi-agent based simulation. In Lindemann, G., Moldt, D. and Paolucci, M., eds., First International Workshop on Regulated Agent-Based Social Systems (RASTA 2002), held with AAMAS02, Bologna, Italy, 16 July (Revised Selected and Invited Papers), number 2934 in Lecture Notes in Artificial Intelligence. Berlin: Springer-Verlag.

BORDINI R H, Dastani M, Dix J, and El Fallah-Seghrouchni A, eds. (2004b) Proceedings of the Second International Workshop on "Programming Multi-Agent Systems: Languages and Tools" (ProMAS 2004). To appear in Springer's LNAI Series.

BORDINI R H, Okuyama F Y, de Oliveira D, Drehmer G, and Krafta R C (2004c) The MAS-SOC approach to multi-agent based simulation. In Lindemann, G., Moldt, D. and Paolucci, M., eds., Proceedings of the First International Workshop on Regulated Agent-Based Social Systems: Theories and Applications (RASTA'02), 16 July, 2002, Bologna, Italy (held with AAMAS02) -- Revised Selected and Invited Papers, number 2934 in Lecture Notes in Artificial Intelligence, 70-91. Berlin: Springer-Verlag.

BORDINI R H, Hübner J F et al. (2005) Jason: A Java-based AgentSpeak interpreter used with SACI for multi-agent distribution over the net, manual, version 0.6 edition. http://jason.sourceforge.net/.

CALCIN O P, Okuyama F Y, and Dias A M (2004) Simulación del proceso de compra de artículos en un mercado virtual con agentes BDI. In Solar, M., Fernandez-Baca, D. and Cuadros-Vargas, E., eds., 30ma Conferencia Latinoamericana de Informática (CLEI2004), Sociedad Peruana de Computación, September 2004. ISBN 9972-9876-2-0.

CASTELFRANCHI C (1998) Simulating with cognitive agents: The importance of cognitive emergence. In Sichman, J. S., Conte, R. and Gilbert, N., eds., Multi-Agent Systems and Agent-Based Simulation, number 1534 in Lecture Notes in Artificial Intelligence, 26-44. Berlin: Springer-Verlag.

CASTELFRANCHI C (2000) Engineering social order. In Zambonelli, F., Omicini, A. and Tolksdorf, R., eds., Engineering Societies in the Agents World, 1-18. Springer.

CASTELFRANCHI C (2001) The theory of social functions: Challenges for computational social science and multi-agent learning. Cognitive Systems Research 2(1):5-38.

CONTE R, and Castelfranchi C (1995) Cognitive and Social Action. London: UCL Press.

DASTANI M, Dix J, and El Fallah-Seghrouchni A, eds. (2004) Programming Multi-Agent Systems, First International Workshop, PROMAS 2003, Melbourne, Australia, July 15, 2003, Selected Revised and Invited Papers, volume 3067 of Lecture Notes in Computer Science. Springer.

DEMAZEAU Y, and Rocha Costa A C (1996) Populations and organizations in open multi-agent systems. In 1st National Symposium on Parallel and Distributed AI (PDAI'96), Hyderabad, India, 1996.

FOSTER I, and Kesselman C, eds. (2003) The Grid 2: Blueprint for a New Computing Infrastructure. Morgan Kaufmann, second edition.

HORROCKS I, and Patel-Schneider P F (2003) Reducing OWL entailment to description logic satisfiability. In Fensel, D., Sycara, K. and Mylopoulos, J., eds., Proc. of the 2003 International Semantic Web Conference (ISWC 2003), number 2870 in Lecture Notes in Computer Science, 17-29. Springer.

HÜBNER J F, Sichman J S, and Boissier O (2002) MOISE+: Towards a structural, functional, and deontic model for MAS organization. In Proceedings of the First International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS'2002), Bologna, Italy. Extended Abstract.

HÜBNER J F (2003) Um Modelo de Reorganização de Sistemas Multiagentes. Ph.D. Dissertation, Universidade de São Paulo, Escola Politécnica.

KRAFTA R, de Oliveira D, and Bordini R H (2003) The city as object of human agency. In Fourth International Space Syntax Symposium (SSS4), London, 17-19 June, 33.1-33.18.

LEITE J, Omicini A, Torroni P, and Yolum P, eds. (2004a) Proceedings of the Second International Workshop on Declarative Agent Languages and Technologies (DALT 2004). To appear in Springer's LNAI Series.

LEITE J, Omicini A, Sterling L, and Torroni P, eds. (2004b) Declarative Agent Languages and Technologies, First International Workshop, DALT 2003, Melbourne, Australia, July 15, 2003, Revised Selected and Invited Papers, volume 2990 of Lecture Notes in Computer Science. Springer.

MACHADO R, and Bordini R H (2002) Running AgentSpeak(L) agents on SIM_AGENT. In Meyer, J.-J. and Tambe, M., eds., Intelligent Agents VIII - Proceedings of the Eighth International Workshop on Agent Theories, Architectures, and Languages (ATAL-2001), August 1-3, 2001, Seattle, WA, number 2333 in Lecture Notes in Artificial Intelligence, 158-174. Berlin: Springer-Verlag.

MOREIRA Á F, and Bordini R H (2002) An operational semantics for a BDI agent-oriented programming language. In Meyer, J.-J. C. and Wooldridge, M. J., eds., Proceedings of the Workshop on Logics for Agent-Based Systems (LABS-02), held in conjunction with the Eighth International Conference on Principles of Knowledge Representation and Reasoning (KR2002), April 22-25, Toulouse, France, 45-59.

MOREIRA Á F, Vieira R, and Bordini R H (2004) Extending the operational semantics of a BDI agent-oriented programming language for introducing speech-act based communication. In Leite, J., Omicini, A., Sterling, L. and Torroni, P., eds., Declarative Agent Languages and Technologies, Proceedings of the First International Workshop (DALT-03), held with AAMAS-03, 15 July, 2003, Melbourne, Australia (Revised Selected and Invited Papers), number 2990 in Lecture Notes in Artificial Intelligence, 135-154. Berlin: Springer-Verlag.

OKUYAMA F Y, Bordini R H, and Rocha Costa A C (2005) ELMS: An environment description language for multi-agent simulations. In Weyns, D., van Dyke Parunak, H. and Michel, F., eds., Proceedings of the First International Workshop on Environments for Multiagent Systems (E4MAS), held with AAMAS-04, 19th of July, number 3374 in Lecture Notes in Artificial Intelligence, 67-83. Berlin: Springer-Verlag.

OKUYAMA F Y (2003) Descrição e geração de ambientes para simulações com sistemas multiagentes. MSc thesis, PPGC/UFRGS, Porto Alegre, RS. In Portuguese.

PLOTKIN G D (1981) A structural approach to operational semantics. Technical report, Computer Science Department, Aarhus University, Aarhus.

RAO A S, and Georgeff M P (1991) Modeling rational agents within a BDI-architecture. In Allen, J., Fikes, R. and Sandewall, E., eds., Proceedings of the 2nd International Conference on Principles of Knowledge Representation and Reasoning (KR'91), 473-484. Morgan Kaufmann publishers Inc.: San Mateo, CA, USA.

RAO A S, and Georgeff M P (1995) BDI agents: From theory to practice. In Lesser, V. and Gasser, L., eds., Proceedings of the First International Conference on Multi-Agent Systems (ICMAS'95), 12-14 June, San Francisco, CA, 312-319. Menlo Park, CA: AAAI Press / MIT Press.

RAO A S, and Georgeff M P (1998) Decision procedures for BDI logics. Journal of Logic and Computation 8(3):293-343.

RAO A S (1996) AgentSpeak(L): BDI agents speak out in a logical computable language. In Van de Velde, W. and Perram, J., eds., Proceedings of the Seventh Workshop on Modelling Autonomous Agents in a Multi-Agent World (MAAMAW'96), 22-25 January, Eindhoven, The Netherlands, number 1038 in Lecture Notes in Artificial Intelligence, 42-55. London: Springer-Verlag.

ROCHA COSTA A C, Dimuro G P, and Palazzo L A M (2005) Systems of exchange values as tools for multi-agent organizations. Journal of the Brazilian Computer Society, 2005. (To appear, available at http://gmc.ucpel.tche.br/valores.).

RODRIGUES M R, Rocha Costa A C, and Bordini R H (2003) A system of exchange values to support social interactions in artificial societies. In Rosenschein, J. S., Sandholm, T., Wooldridge, M. and Yokoo, M., eds., Proceedings of the Second International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS-2003), Melbourne, Australia, 14-18 July, 81-88. New York, NY: ACM Press.

RUSSELL S, and Norvig P (2003) Artificial Intelligence: A Modern Approach. Englewood Cliffs: Prentice-Hall.

SHOHAM Y (1993) Agent-oriented programming. Artificial Intelligence 60:51-92.

SINGH M P, Rao A S, and Georgeff M P (1999) Formal methods in DAI: Logic-based representation and reasoning. In Weiß, G., ed., Multiagent Systems--A Modern Approach to Distributed Artificial Intelligence. Cambridge, MA: MIT Press. chapter 8, 331-376.

STAAB S, and Studer R, eds. (2004) Handbook on Ontologies. International Handbooks on Information Systems. Springer.

VIEIRA R, Moreira Á F, Wooldridge M, and Bordini R H (2005) On the formal semantics of speech-act based communication in an agent-oriented programming language. Submitted article, to appear.

WEYNS D, van Dyke Parunak H, and Michel F, eds. (2005) Proceedings of the First International Workshop on Environments for Multiagent Systems (E4MAS), held with AAMAS-04, 19th of July, New York City, NY. Number 3374 in LNAI. Springer-Verlag.

WOOLDRIDGE M (2000) Reasoning about Rational Agents. Cambridge, MA: The MIT Press.


© Copyright Journal of Artificial Societies and Social Simulation, [2005]