Deborah Vakas Duong and John Grefenstette (2005)

SISTER: a Symbolic Interactionist Simulation of Trade and Emergent Roles

Journal of Artificial Societies and Social Simulation vol. 8, no. 1

<https://www.jasss.org/8/1/1.html>


Received: 20-May-2004 Accepted: 13-Sep-2004 Published: 31-Jan-2005

Abstract


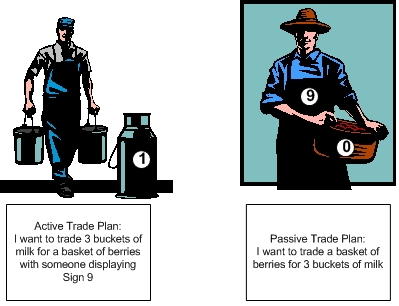

Figure 1. Corresponding Trade Plans. Agents trade with other agents that have corresponding trade plans and display the correct sign.

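$$\text{happiness} = \prod_{i=1}^{n} \text{good}_i^{\,\text{weight}_i} \qquad (1)$$

$$\sum_{i=1}^{n} \text{weight}_i = 1 \qquad (2)$$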

"Good" is the amount of a good that an agent consumes, n is the number of different goods, and "weight" measures how much each individual good is desired; the weights sum to one. Each agent is given a minimum of one unit of each good. This is the Cobb-Douglas utility function, a standard utility function in economics. If all the weights are equal, agents want a spread of all the different types of goods, and as much of each as they can get, in the same sense that people need some of each of the four food groups. For example, if an agent has eight units each of two of the four food groups (and the one-unit minimum of each of the other two), its happiness is $8^{0.25} \times 8^{0.25} \times 1^{0.25} \times 1^{0.25} \approx 2.83$. If the goods are spread more evenly, and the agent has four units of each of the four food groups, its happiness is $4^{0.25} \times 4^{0.25} \times 4^{0.25} \times 4^{0.25} = 4$. The agent would therefore rather have four units of four goods than eight units of two goods. Under this function, both the spread of goods and the amount of goods matter. In this study, the weight for each good is equal, so that differences in outcome cannot be attributed to uneven utility values for individual goods.
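The utility function described above can be checked against the food-group example with a short Python function. This is a minimal sketch, not the authors' code: the name `cobb_douglas_utility` is hypothetical, and enforcing the one-unit minimum as a floor on each consumed amount is an assumption about how that minimum is applied.

```python
import math

def cobb_douglas_utility(amounts, weights, minimum=1.0):
    """Return the product of amount_i ** weight_i over all goods.

    `minimum` stands in for the one unit of each good every agent is given;
    applying it as a floor on each amount is an assumption of this sketch.
    """
    if len(amounts) != len(weights):
        raise ValueError("need exactly one weight per good")
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to one")
    utility = 1.0
    for amount, weight in zip(amounts, weights):
        utility *= max(amount, minimum) ** weight
    return utility

equal_weights = [0.25] * 4  # four food groups, equal weights
print(round(cobb_douglas_utility([8, 8, 0, 0], equal_weights), 2))  # 2.83
print(round(cobb_douglas_utility([4, 4, 4, 4], equal_weights), 2))  # 4.0
```

With the eight goods and equal weights of 0.125 used in the experiments, the same function applies unchanged; only the length of the two lists differs.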

Figure 2. Agents differentiate into roles. Roles are designated by tags, which each agent learns through its individual GA. Agents that display the same tag are said to be members of the same role if they also have the same behaviors. These tags are learned independently by each agent's GA, but come to mean the same set of behaviors.

```
For 1 to i generations                  // reproduction occurs every n days
{
    For 1 to n chromosomes              // each chromosome represents a plan for one day
    {
        - Harvest goods in amounts according to plan.
        - Trade goods with traders displaying signs closest to those in the plan.
        - Consume goods and rate plan.
    }
    - Genetically recombine plans in private GA.
}
```

Figure 3. SISTER simulation loop for individual agents. Every day, an agent carries out the harvesting and trading plan encoded on a single chromosome. After it has used all of its chromosomes, its genetic algorithm reproduces, and it has a new set of plans. If death is implemented, a small percentage of agents have the genes of their genetic algorithms randomized and are given a new identification tag.
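The per-agent loop of Figure 3 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: harvesting, trading and consuming are collapsed into a caller-supplied function `rate_plan` (hypothetical) that returns the day's utility for a plan, parent selection uses an assumed binary tournament, and death is not modeled. The defaults of four crossover points and a mutation rate of 0.01 follow Figure 4.

```python
import random

def crossover(parent_a, parent_b, n_points, rng):
    """Multi-point crossover: alternate between parents at n_points cut sites."""
    cuts = sorted(rng.sample(range(1, len(parent_a)), n_points))
    child, start = [], 0
    for i, cut in enumerate(cuts + [len(parent_a)]):
        source = parent_a if i % 2 == 0 else parent_b
        child.extend(source[start:cut])
        start = cut
    return child

def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in chromosome]

def tournament(population, scores, rng):
    """Assumed selection scheme: return the better of two random plans."""
    i, j = rng.randrange(len(population)), rng.randrange(len(population))
    return population[i] if scores[i] >= scores[j] else population[j]

def run_agent(rate_plan, generations, pop_size, n_bits,
              n_points=4, mutation_rate=0.01, seed=0):
    """Run one agent's private GA; each chromosome is one day's plan."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # One day per chromosome: each plan is carried out and rated once.
        scores = [rate_plan(plan) for plan in population]
        # After every plan has been used, the private GA breeds a new set.
        population = [
            mutate(crossover(tournament(population, scores, rng),
                             tournament(population, scores, rng),
                             n_points, rng),
                   mutation_rate, rng)
            for _ in range(pop_size)
        ]
    return population
```

For example, `run_agent(sum, generations=30, pop_size=20, n_bits=16)` rewards plans with more 1 bits, which is enough to watch the private GA improve its plans; the experiments themselves use 1000 plans of 772 bits per agent.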

Figure 4: Parameters common to the experiments of this study

| Parameter | Value |
|---|---|
| Number of Agents | 16 |
| Population Size of GA in each agent | 1000 |
| Number of Crossover Points | 4 |
| Number of Bits in a Chromosome | 772 |
| Mutation Rate | 0.01 |
| Number of Efforts | 128 |
| Number of Trade Sections | 16 |
| Number of Goods | 8 |
| Constant of Harvesting | 0.001 |
| Constant of Trade | 1 |
| Constant of Cooking | 1 |
| Harvesting Effort Concentration Factor | 3 |
| Trading Effort Concentration Factor | 3 |
| Cooking Effort Concentration Factor | 3 |
| Number of Possible Amounts to Trade | 4 |
| Cobb-Douglas weight | 0.125 |

Figure 5: Knowledge Representation in SISTER, in an 8-good scenario. A single chromosome has bits that represent efforts, trade plans and a tag. The number of effort sections and trade plans in a single chromosome is a parameter of the simulation. A chromosome represents a single day of trade.

| Chromosome section | Bits | Meaning |
|---|---|---|
| Efforts | 1 | Production or trade? |
| | 2-4 | Which good to produce or trade plan to activate |
| Trade Plans | 1-3 | Good to give |
| | 4-6 | Amount to give |
| | 7-9 | Good to receive |
| | 10-12 | Amount to receive |
| | 13-16 | Sign of agent to seek trade with |
| Role Tag | | The meaning of the bits on the tag emerges |
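The layout in Figure 5 accounts for the chromosome length in Figure 4: 128 efforts of 4 bits and 16 trade plans of 16 bits give 512 + 256 = 768 bits, and 772 - 768 leaves 4 bits, consistent with a role tag as wide as the 4-bit sign it is matched against. The sketch below splits a bit string into these sections. The 4-bit tag width, the convention that a leading 1 in an effort means "trade", and the name `split_chromosome` are assumptions made for illustration.

```python
# Section sizes from Figures 4 and 5; TAG_BITS is an assumption.
N_EFFORTS = 128
EFFORT_BITS = 4          # bit 1: produce or trade; bits 2-4: good or trade plan
N_TRADE_PLANS = 16
TRADE_PLAN_BITS = 16     # 3 + 3 + 3 + 3 bits for goods and amounts, 4 for sign
TAG_BITS = 4             # assumed: as wide as the sign field
CHROMOSOME_BITS = (N_EFFORTS * EFFORT_BITS
                   + N_TRADE_PLANS * TRADE_PLAN_BITS + TAG_BITS)

def split_chromosome(bits: str):
    """Split a '0'/'1' string into efforts, trade plans and a role tag."""
    if len(bits) != CHROMOSOME_BITS:
        raise ValueError(f"expected {CHROMOSOME_BITS} bits, got {len(bits)}")
    efforts = []
    for k in range(N_EFFORTS):
        chunk = bits[k * EFFORT_BITS:(k + 1) * EFFORT_BITS]
        # Assumed convention: a leading '1' means trade, '0' means produce.
        efforts.append({"trade": chunk[0] == "1", "target": int(chunk[1:], 2)})
    offset = N_EFFORTS * EFFORT_BITS
    trade_plans = [bits[offset + k * TRADE_PLAN_BITS:offset + (k + 1) * TRADE_PLAN_BITS]
                   for k in range(N_TRADE_PLANS)]
    tag = bits[offset + N_TRADE_PLANS * TRADE_PLAN_BITS:]
    return efforts, trade_plans, tag

print(CHROMOSOME_BITS)  # 772
```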

Figure 6. Illustration of a chromosomal trade plan section. One section of the active agent's chromosome encodes an active trade plan as the string 0110010010001001, while a section of the passive agent's chromosome encodes a passive trade plan as the string 001000011001.
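Decoding the two strings of Figure 6 with the bit layout of Figure 5 shows why they correspond: the active plan gives good 3 (amount code 1) and asks for good 1 (amount code 0), while the passive plan gives good 1 (amount code 0) and asks for good 3 (amount code 1); the 12-bit passive plan carries no sign field. The sketch below is illustrative only. The correspondence rule, the use of Hamming distance as the meaning of "closest" sign, and all function names are assumptions, and the amount fields are left as raw codes because the mapping from codes to quantities is not given here.

```python
from typing import NamedTuple, Optional

class TradePlan(NamedTuple):
    give_good: int
    give_amount: int      # raw 3-bit amount code
    receive_good: int
    receive_amount: int   # raw 3-bit amount code
    sign: Optional[int]   # 4-bit sign sought; None for a passive plan

def decode_plan(bits: str) -> TradePlan:
    """Decode a 16-bit active or 12-bit passive trade plan (Figure 5 layout)."""
    if len(bits) not in (12, 16):
        raise ValueError("a trade plan has 12 or 16 bits")
    fields = [int(bits[i:i + 3], 2) for i in range(0, 12, 3)]
    sign = int(bits[12:16], 2) if len(bits) == 16 else None
    return TradePlan(*fields, sign)

def corresponds(active: TradePlan, passive: TradePlan) -> bool:
    """Assumed rule: each side gives exactly what the other wants to receive."""
    return (active.give_good == passive.receive_good
            and active.give_amount == passive.receive_amount
            and active.receive_good == passive.give_good
            and active.receive_amount == passive.give_amount)

def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")

def choose_partner(active, candidates):
    """candidates: (tag, passive plan) pairs. Among corresponding plans, return
    the tag closest to the sign sought (assumed metric: Hamming distance)."""
    matches = [(hamming(active.sign, tag), tag)
               for tag, plan in candidates if corresponds(active, plan)]
    return min(matches)[1] if matches else None

active = decode_plan("0110010010001001")
passive = decode_plan("001000011001")
print(active)    # TradePlan(give_good=3, give_amount=1, receive_good=1, receive_amount=0, sign=9)
print(passive)   # TradePlan(give_good=1, give_amount=0, receive_good=3, receive_amount=1, sign=None)
print(corresponds(active, passive))  # True
```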

$$I(X;Y) = \sum_{x}\sum_{y} P(x,y)\,\log \frac{P(x,y)}{P(x)\,P(y)} \qquad (3)$$


Figure 7: Mutual Information of Example Trade Scenarios. Each row corresponds to a type of good sold and each column to the sign displayed; each cell gives the number of trades in which that good was sold while that sign was displayed. P(y), the frequency of trade in a good, is the number of times the good is sold (its row total) divided by the total number of trades. P(x), the frequency with which a sign is displayed, is the number of times the sign was displayed in a trade (its column total) divided by the total number of trades. P(x,y) is the frequency of co-occurrence of a particular sign and trade.

High Mutual Information Trade Scenario (columns: Sign Displayed)

| Good Sold | Sign 0 | Sign 1 | Sign 2 | Sign 3 |
|---|---|---|---|---|
| Oats | 10 | | | |
| Peas | | 7 | | |
| Beans | | | 3 | |
| Barley | | | | 9 |

Zero Mutual Information Trade Scenario (columns: Sign Displayed)

| Good Sold | Sign 0 | Sign 1 | Sign 2 | Sign 3 |
|---|---|---|---|---|
| Oats | | | | |
| Peas | | | | |
| Beans | 10 | 11 | 9 | 8 |
| Barley | | | | |

Zero Mutual Information Trade Scenario (columns: Sign Displayed)

| Good Sold | Sign 0 | Sign 1 | Sign 2 | Sign 3 |
|---|---|---|---|---|
| Oats | 7 | | | |
| Peas | 9 | | | |
| Beans | 5 | | | |
| Barley | 13 | | | |
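The mutual information between the sign displayed (X) and the good sold (Y) can be computed directly from a contingency table like those in Figure 7, using the standard definition $I(X;Y)=\sum_x\sum_y P(x,y)\log_2\frac{P(x,y)}{P(x)P(y)}$. The sketch below assumes base-2 logarithms (bits), and the function name is hypothetical. For the high mutual information case it places the counts 10, 7, 3 and 9 on a diagonal, one good per sign; any one-to-one assignment of goods to signs gives the same value, equal to the entropy of the goods sold.

```python
import math

def mutual_information(counts):
    """Mutual information in bits between goods sold (rows) and signs (columns)."""
    total = sum(sum(row) for row in counts)
    p_y = [sum(row) / total for row in counts]          # P(y): row totals
    p_x = [sum(col) / total for col in zip(*counts)]    # P(x): column totals
    mi = 0.0
    for i, row in enumerate(counts):
        for j, count in enumerate(row):
            if count:                                    # 0 * log 0 is taken as 0
                p_xy = count / total
                mi += p_xy * math.log2(p_xy / (p_x[j] * p_y[i]))
    return mi

# Hypothetical one-to-one arrangement of the high mutual information counts.
one_sign_per_good = [[10, 0, 0, 0], [0, 7, 0, 0], [0, 0, 3, 0], [0, 0, 0, 9]]
only_beans = [[0, 0, 0, 0], [0, 0, 0, 0], [10, 11, 9, 8], [0, 0, 0, 0]]
# Every good sold under a single sign (which column is arbitrary).
one_sign_for_all = [[7, 0, 0, 0], [9, 0, 0, 0], [5, 0, 0, 0], [13, 0, 0, 0]]

print(round(mutual_information(one_sign_per_good), 2))  # 1.89
print(mutual_information(only_beans))                   # 0.0
print(mutual_information(one_sign_for_all))             # 0.0
```

Both zero cases illustrate the same point: when either the sign or the good is constant across trades, knowing one tells nothing about the other.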

Figure 8. Simple scenario average fitness. The utility of the agents is averaged over the 20 runs of the role recognition treatment and over the runs of the individual recognition treatment. The yellow vertical lines mark the points, every 10 cycles, at which a t-test shows a significant difference between treatments; because the difference is significant at every such point, the space between the role and individual lines is completely yellow. There are 1000 days of trade per "cycle", and each cycle is one generation of the agents' genetic algorithms.

Figure 9. Composite good scenario average fitness. The utility of the agents is averaged over the 20 runs of the role recognition treatment and over the runs of the individual recognition treatment. The yellow vertical lines mark the points, every 10 cycles, at which a t-test showed a significant difference between treatments; because the difference was significant at every such point, the space between the role and individual lines is completely yellow.



© Copyright Journal of Artificial Societies and Social Simulation, [2005]