Arianna Dal Forno and Ugo Merlone (2004)

From Classroom Experiments to Computer Code

Journal of Artificial Societies and Social Simulation

vol. 7, no. 3

<https://www.jasss.org/7/3/2.html>

To cite articles published in the Journal of Artificial Societies and Social Simulation, reference the above information and include paragraph numbers if necessary

Received: 01-Nov-2003 Accepted: 31-May-2004 Published: 30-Jun-2004

Abstract


| Figure 1. Widespread approach used in experiments |

| Figure 2. Our proposal for a new approach |

| Figure 3. Procedure used to code behaviors |


Theil's inequality coefficient U measures the predictive power of the model and always falls between 0 and 1. When U=0 the fit is perfect, while U=1 means the predictive performance of the model is as bad as it can be (for details see Pindyck and Rubinfeld 1991).


| Figure 4. Instructions for the prisoner's dilemma experiment |


while the coordination equilibrium is


| Figure 5. Question form for the prisoner's dilemma experiment |

1. Subject #17A: (Close to Nash agent)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Agent's Prof. | 6.409 | 6.932 | 6.263 | 6.08 | 5.899 | 5.94 | 6.77 | 5.9 | | |
| Actual Eff. | 1.462 | 0.921 | 0.9 | 0.92 | 0.92 | 0.92 | 0.92 | 0.92 | | |
| Simul. Eff. | 1.462 | 0.921 | 0.921 | 0.92 | 0.921 | 0.92 | 0.92 | 0.92 | 0.0075 | 0.0037 |

Motivation: The optimal effort for a couple of agents is 1.462. I therefore started from this value. With the first attempt my profit was low, so I decided to exert a different effort. The effort 0.92 is the one that, at one and the same time, limits my loss in profit when my partner exerts a low effort and gives me a higher profit when matched with a high effort partner.

Comments: This result is one of the most interesting since, just observing the data, one would guess that this subject plays the Nash equilibrium. Yet, a more careful analysis of the motivation shows that the actual reasoning behind the data is based on a heuristic which, in this case, matches the Nash effort eN.

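    case :
        if (first_iteration) {
            curr_effort = 1.462;            /* start from the optimal effort for a couple */
        }
        else {
            if (my_last_profit < profit_level) {
                curr_effort = Nash_effort;  /* profit too low: fall back to the Nash effort */
            }
        }
        effort = curr_effort;
        break;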

2. Subject #25A: (Adaptive agent with Lower Bound)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Avg. Eff. | 1.354 | 1.395 | 1.168 | 0.81 | 0.931 | 1.01 | 0.94 | 0.85 | | |
| Actual Eff. | 1.46 | 1.47 | 1.35 | 1.12 | 1.29 | 1.25 | 1.27 | | | |
| Simul. Eff. | 1.462 | 1.462 | 1.462 | 1.316 | 1.18 | 1.07 | 0.96 | | 0.1659 | 0.0636 |

Motivation: The optimal effort for a couple of agents is 1.462, so I started with this value but, in the end, I decided to exert a lower effort to avoid free riding by my partner. I probably should have had the courage to exert an even lower effort.

Comments: The data and the motivations are not completely consistent. One possibility would be to model the behavior described in the motivation. Other approaches are, however, possible: for instance, studying the effort exerted by the subject's partners or, more simply, modeling the subject's behavior as if he/she chose an effort at random over a certain range.

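    case :
        if (first_iteration) {
            counter = 0;
            curr_effort = cooper_effort;
        }
        else {
            /* count the turns in which the average effort was below my own */
            if (avg_effort < my_last_effort) {
                counter++;
                if (counter > threshold) {
                    counter = 0;
                    curr_effort *= 0.9;                           /* cut effort by 10% */
                    curr_effort = max(curr_effort, lower_bound);  /* never go below the lower bound */
                }
            }
        }
        effort = curr_effort;
        my_last_effort = effort;
        break;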

3. Subject #26A:

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Avg. Eff. | 1.354 | 1.395 | 1.168 | 0.81 | 0.931 | 1.01 | 0.94 | 0.85 | | |
| Actual Eff. | 1.462 | 1.31 | 1 | 0.98 | 0.89 | 0.89 | 0.88 | 0.65 | | |
| Simul. Eff. | 1.462 | 1.254 | 1.295 | 1.07 | 0.707 | 0.83 | 0.91 | 0.84 | 0.1462 | 0.0692 |

Motivation: I started with the optimal effort: 1.462. Since other students kept on lowering their effort I tried to exert an effort slightly lower than the average in order to free ride.

Comments: This subject led us to introduce different classes of effort-reducing agents in our simulations.

    case :
        if (first_iteration) {
            curr_effort = 1.462;
        }
        else {
            curr_effort = avg_effort - 0.1;   /* stay slightly below the observed average */
        }
        effort = curr_effort;
        break;

4. Subject #49A: (Idealistic Hi Effort)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Actual Eff. | 1.5 | 1.5 | 1.5 | 1.5 | 1.4 | 1.4 | 1.4 | 1.4 | | |
| Simul. Eff. | 1.45 | 1.45 | 1.45 | 1.45 | 1.45 | 1.45 | 1.45 | 1.45 | 0.05 | 0.0172 |

Motivation: If both agents of a couple provide effort equal to 1.4 or 1.5 we obtain optimal profit. Even when I noticed that other players were exerting low effort, I kept on providing a higher one because, in my opinion, high team productivity is always more important than my personal profit.

Comments: This subject gave an ethical rationale for continuing to exert a high effort. This subject adopts an internal standard that gives her a sense of self-worth (Bandura 1999).

case : effort=1.45; break;

5. Subject #44A: (Adaptive agent)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Avg. Eff. | 1.354 | 1.395 | 1.168 | 0.81 | 0.931 | 1.01 | 0.94 | 0.85 | | |
| Winner's Eff. | 1 | 0.756 | 1 | 0.9 | 1.4 | 1.3 | 0.51 | 1 | | |
| Actual Eff. | 1.5 | 1.5 | 1.5 | 1.39 | 1.07 | 0.91 | 0.9 | 0.88 | | |
| Simul. Eff. | 1.5 | 1.5 | 1.5 | 1.08 | 0.854 | 1.17 | 1.15 | 0.73 | 0.1916 | 0.078 |

Motivation: The optimal outcome would have been to have all the players commit to an effort of about 1.4 or 1.5. Nevertheless, this way an individual providing no effort could free-ride. After a few turns I decided to take into account both the winning and average effort.

Comments: Some subjects considered different variables in order to monitor the overall situation of the organization.

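    case :
        if (first_iteration) {
            counter = 0;
            curr_effort = 1.5;
        }
        else {
            if (avg_effort < my_last_effort) {
                counter++;
            }
            if (counter > threshold) {
                /* aim midway between the winner's effort and the average effort */
                curr_effort = (winner_effort + avg_effort) / 2.0;
            }
        }
        /* assigned on every turn, including the first one */
        effort = curr_effort;
        my_last_effort = effort;
        break;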

6. Subject #43A: (Adaptive to Modal Effort agent)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Modal Eff. | 1.462 | 1.5 | 0.9 | 0.9 | 0.9 | 1 | 0.9 | 0.9 | | |
| Actual Eff. | 1.462 | 1.462 | 0.8 | 1.1 | 1 | 0.8 | 0.85 | 0.85 | | |
| Simul. #1 | 1.462 | 1.362 | 1.4 | 0.8 | 0.8 | 0.8 | 0.9 | 0.8 | 0.2512 | 0.1167 |
| Simul. #2 | 1.462 | 1.462 | 1.5 | 0.9 | 0.9 | 0.9 | 1 | 0.9 | 0.2681 | 0.1201 |

Motivation: I started with the optimal effort: 1.462. Then, I engaged the most commonly supplied effort of the other players.

Comments: At least in their motivation, this subject exhibits behavior similar to reciprocity in the Bolton and Ockenfels (2000) model. Yet, a closer analysis shows that empirical data are better explained when the subject exerts an effort that is slightly lower than the most common. We have provided the simulated effort both when there is a small decrement (Simul. #1) and when there is none (Simul. #2). It is clear that considering a decrement provides a better approximation of the observed behavior.

    case :
        decrement = -0.1;
        if (iteration < bootstrp_time) {
            curr_effort = 1.462;                        /* bootstrap turns: play the optimal effort */
        }
        else {
            curr_effort = modal_effort + decrement;     /* slightly below the most common effort */
        }
        effort = curr_effort;
        break;

7. Subject #13B: (Cheap Talk agent)

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Effort | 0.91 | 0.725 | 0.693 | 0.71 | 0.65 | 0.63 | 0.625 |

Motivation: I did not look at the previous outcomes in deciding. Instead, I used comments and reactions from other agents when discussing previous results. Furthermore, when talking to the others, I tried to persuade them to a given effort so as to increase the chances of achieving the highest profit by playing my best response.

Comments: This behavior is similar to the model of the selfish player presented in Fehr and Schmidt (1999). We did not model this behavior; it is nevertheless an important one, since it highlights the need to consider different aspects of the game.


A reward is given depending on Qi. By backward induction it is obvious that, if α is greater than n, it is not rational to fish in any period other than the final one. Conversely, if α is less than n, not fishing is a dominated strategy. Of course, in this case, there is a strong incentive to deviate from any commitment, which means that the probability of the cooperative equilibrium being played is very low.


| Figure 6. Instructions for the harvesting dilemma experiment |


| Figure 7. Report form for the harvesting dilemma experiment |

1. Subjects A1, C1, C5, C6, C7 and C8: (Stubborn Committed player in a group with free-rider); Subjects A9, C2 and C4: (Unconscious Committed player in a group with free-rider); Groups B, D, E and F: (Committed player in a homogeneous group; 10 subjects in each group).

| Year | % catch | Motivation | % simul. |
|---|---|---|---|
| 0 | 0% | I let the fish population increase as much as possible | 0% |
| 1 | 0% | I let the fish population increase as much as possible | 0% |
| 2 | 0% | I let the fish population increase as much as possible | 0% |
| 3 | 0% | I let the fish population increase as much as possible | 0% |
| 4 | 100% | I get all the fish since this is the last period | 100% |
| rms | 0 | | |
| U | 0 | | |

Comments: These types of subjects, even when in different groups, exhibit the same behavior and give the same motivations. They cannot be distinguished unless the composition of the group they belong to is taken into account. One possible approach is to use the same computer code for all of them. Other possibilities are to design further experiments to differentiate them, or to use their behavior as a common starting point for modeling more complex behaviors. Another approach would be to increase the number of subjects in the experiment, which would reveal a larger number of the situations in which different strategies show up.

    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        percentage = 0.0;
        if (last_period) {
            percentage = 100.0;
        }
        break;

2. Subject A2: (Hard Adaptive Committed player in a group with free-rider)

| Year | % catch | Motivation | Total % | % simul. |
|---|---|---|---|---|
| 0 | 0% | I let the fish population increase as much as it can | 34% | 0% |
| 1 | 100% | I get some fish | 48% | 100% |
| 2 | 100% | I get some fish | 55% | 100% |
| 3 | 100% | I get some fish | 63% | 100% |
| 4 | 100% | I get all the fish since this is the last period | 100% | 100% |
| rms | 0 | | | |
| U | 0 | | | |

Comments: The subject noticed that somebody was catching fish and reacted. This subject exhibits a reciprocity behavior in line with the Bolton and Ockenfels (2000) model.

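    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        percentage = 0.0;
        if (total_percentage > 0.0) {
            percentage = 100.0;   /* somebody fished: react by catching everything */
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;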

3. Subject A6: (Soft Adaptive Behavior)

| Year | % catch | Motivation | Total % | % simul. |
|---|---|---|---|---|
| 0 | 0% | I let the fish population increase as much as it can | 34% | 0% |
| 1 | 50% | I get some fish | 48% | 50% |
| 2 | 50% | I get some fish | 55% | 50% |
| 3 | 50% | I get some fish | 63% | 50% |
| 4 | 100% | I get all the fish since this is the last period | 100% | 100% |
| rms | 0 | | | |
| U | 0 | | | |

Comments: The motivation given by the subject is not self-explanatory. Since the subject was in a group with several free riders, it is likely that he/she reacted (softly) to the behavior of the other subjects.

    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        percentage = 0.0;
        if (total_percentage > 0.0) {
            percentage = 50.0;    /* somebody fished: react softly */
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;

4. Subject A7: (Soft Adaptive with Delayed Reply Committed agent in a group with free-rider)

| Year | % catch | Motivation | Exp. Pop. | Act. Pop. | % simul. |
|---|---|---|---|---|---|
| 0 | 0% | I let the fish population increase as much as it can | 500 | 500 | 0% |
| 1 | 0% | I let the fish population increase as much as it can | 600 | 396 | 0% |
| 2 | 50% | The fish population is decreasing so I get some fish | 475.2 | 247.104 | 50% |
| 3 | 50% | The fish population is decreasing so I get some fish | 296.5248 | 133.4362 | 50% |
| 4 | 100% | I get all the fish since this is the last period | 160.1234 | 59.24566 | 100% |
| rms | 0 | | | | |
| U | 0 | | | | |

Comments: Actually some individuals in this subject's group started to fish right from the first period. A possible solution is to implement their behavior by simply introducing a delay in the Soft Adaptive Behavior code and considering expected population.

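    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        counter = 0;   /* presumably set once, before year 0: resetting it every year would cancel the delay */
        percentage = 0.0;
        if (population < expected_population) {
            counter++;
            if (counter > threshold) {
                percentage = 50.0;
            }
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;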
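
The Exp. Pop. and Act. Pop. columns for group A are numerically consistent with a simple growth rule: whatever is left after the group's total catch grows by a factor of 1.2 per year, and the expected population is what the previous year's stock would have become had nobody fished. The sketch below encodes that reading. The growth factor 1.2 and the rule itself are inferred from the tabulated figures, not quoted from the instructions, and all names are illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* Fill actual[] and expected[] for n_years years.
 * total_catch_share[t] is the fraction of the stock (0.0 to 1.0)
 * caught by the whole group in year t.
 * expected[t] assumes that nobody fished in year t-1. */
void simulate_population(double initial, double alpha,
                         const double *total_catch_share, size_t n_years,
                         double *actual, double *expected)
{
    assert(n_years > 0 && alpha > 0.0);
    actual[0] = initial;
    expected[0] = initial;
    for (size_t t = 1; t < n_years; t++) {
        double share = total_catch_share[t - 1];
        assert(share >= 0.0 && share <= 1.0);
        expected[t] = alpha * actual[t - 1];
        actual[t] = alpha * (1.0 - share) * actual[t - 1];
    }
}
```

Feeding in the Total % column reported for group A (34%, 48%, 55%, 63%) with an initial stock of 500 yields 396, 247.104, 133.4362 and 59.24566 for the actual population, and 600, 475.2, 296.5248 and 160.1234 for the expected one, matching the table for Subject A7.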

5. Subjects A4, A5 and A10: (Risk-averse)

| Year | % catch | Motivation | % simul. |
|---|---|---|---|
| 0 | 100% | I do not know other players' strategies so I get as much as I can | 100% |
| 1 | 100% | I do not know other players' strategies so I get as much as I can | 100% |
| 2 | 100% | I do not know other players' strategies so I get as much as I can | 100% |
| 3 | 100% | I do not know other players' strategies so I get as much as I can | 100% |
| 4 | 100% | I get all the fish since this is the last period | 100% |
| rms | 0 | | |
| U | 0 | | |

Comments: These agents did not commit and/or did not believe in the pre-game commitment. This is another example of behavior close to the model of selfish player presented in Fehr and Schmidt (1999).

    case : percentage = 100.0; break;   /* always catch everything; value in percent */

6. Subject C10: (Free-rider/Trigger)

| Year | % catch | Motivation | % simul. |
|---|---|---|---|
| 0 | 0% | To maximize last year fish population | 0% |
| 1 | 75% | Somebody is fishing so I fish as well | 75% |
| 2 | 75% | I keep on fishing | 75% |
| 3 | 75% | I keep on fishing | 75% |
| 4 | 100% | I get all the fish since this is the last period | 100% |
| rms | 0 | | |
| U | 0 | | |

Comments: Actually, nobody in this subject's group caught any fish at time 0. The motivation given by the subject does not reveal whether the miscalculation was deliberate. As a consequence, we have introduced a random error term. Finally, this behavior, even if exhibited by a single agent, is likely to trigger a fishing war in a population with adaptive agents. This is a further example of the relevance of heterogeneity in social phenomena.

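    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        percentage = 0.0;
        if (total_percentage + error > 0.0) {   /* error: random term that can trigger fishing by mistake */
            percentage = 75.0;
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;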

7. Subject A3: ('Fixed Population Goal' heuristic with adaptation)

| Year | % catch | Motivation | Exp. Pop. | Act. Pop. | % simul. |
|---|---|---|---|---|---|
| 0 | 30% | To keep fish population constant | 500 | 500 | 2% |
| 1 | 30% | Even if the population decreases I keep on with my strategy | 500 | 396 | 2% |
| 2 | 30% | Even if the population decreases I keep on with my strategy | 500 | 247.104 | 2% |
| 3 | 90% | I change strategy since the fish population decreases too much | 500 | 133.4362 | 90% |
| 4 | 100% | I get all the fish since this is the last period | 500 | 59.24566 | 100% |
| rms | 0.219469 | | | | |
| U | 0.176028 | | | | |

Comments: This subject uses a personal heuristic; in a group of committed players this behavior can trigger a reaction.

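    case :
        /* percentage: 90.0 and 100.0 are in percent; the constant-population share is a fraction */
        counter = 0;   /* presumably set once, before year 0: resetting it every year would prevent the switch */
        percentage = (alpha - 1.0) / (alpha * num_players);   /* share that keeps the population constant */
        if (population < expected_population) {
            counter++;
            if (counter > threshold) {
                percentage = 90.0;
            }
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;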

In this case, the subject miscalculates the catch percentage to keep the population constant. In the simulation we used the right value. This explains the differences between simulated and actual values. However, the patterns of the behaviors are close. This is confirmed by the relatively low value for Theil's inequality coefficient.
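
The "right value" can be derived in one step, assuming that the stock left after the catch grows by a factor α per year and that each of the n players takes the same share p of the stock. The population stays constant when α(1 - n·p) = 1, that is, when p = (α - 1)/(α·n), which is the expression used in the listing. The sketch below evaluates it. The figures α = 1.2 and n = 10 are assumptions inferred from the tables (expected population 500 to 600 in one year, ten subjects per group), and the function name is illustrative.

```c
#include <assert.h>

/* Individual catch share (as a fraction of the stock) that keeps the
 * population constant when all num_players take the same share and the
 * remaining stock grows by a factor alpha each year. */
double constant_population_share(double alpha, int num_players)
{
    assert(alpha > 0.0 && num_players > 0);
    return (alpha - 1.0) / (alpha * (double)num_players);
}
```

Under these assumptions the share is about 1.67%, which rounds to the 2% shown in the simulated column and is far below the 30% the subject actually caught.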

8. Subject A8: (Non Trivial Adaptive behavior)

| Year | % catch | Motivation | Exp. Pop. | Act. Pop. | Total % | % simul. |
|---|---|---|---|---|---|---|
| 0 | 10% | To get some fish without depleting the population | 500 | 500 | 34% | 1% |
| 1 | 0% | The population decreased too much | 500 | 396 | 48% | 0% |
| 2 | 20% | People are still fishing so I have to get some fish | 500 | 247.104 | 55% | 48% |
| 3 | 40% | I got too little fish, so I need to increase my quantity | - | - | 63% | 55% |
| 4 | 100% | I get all the fish since this is the last period | - | - | 100% | 100% |
| rms | 0.147853 | | | | | |
| U | 0.141399 | | | | | |

Comments: This subject is similar to the previous one, even though in this case he/she shows some concern about not depleting the fish population too soon.

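    case :
        counter = 0;   /* presumably set once, before year 0 */
        if (counter < threshold) {
            /* half of the share that would keep the population constant */
            percentage = (alpha - 1.0) / (alpha * num_players) * 0.5;
            if (population < expected_population) {
                counter++;
                percentage = 0.0;   /* population below expectations: stop fishing */
            }
        }
        else {
            percentage = total_percentage;   /* then imitate the group's total catch */
        }
        if (last_period) {
            percentage = 100.0;   /* percent */
        }
        break;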

The same sort of miscalculation has affected this subject too. As a consequence, there are differences between simulated and actual values. In this case, too, the patterns of the behaviors are similar. This is confirmed by the relatively low value of Theil's inequality coefficient.

9. Subject C3: (Adaptive - with miscalculations - behavior)

| Year | % catch | Motivation | Exp. Pop. | Act. Pop. | % simul. |
|---|---|---|---|---|---|
| 0 | 0% | Group committed to the no fishing strategy | 500 | 500 | 0% |
| 1 | 0% | Group followed the strategy, so I keep on | 600 | 600 | 0% |
| 2 | 0% | Even if there was some deviation I commit to the strategy | 720 | 666 | 0% |
| 3 | 2% | I deviate as much as my estimation of others' deviation | 799.2 | 739.26 | 8% |
| 4 | 100% | I get all the fish since this is the last period | 887.112 | 752.271 | 100% |
| rms | 0.024597 | | | | |
| U | 0.054846 | | | | |

Comments: This subject does not react immediately to somebody deviating from the commitment; subsequently he/she tries to play a Tit for Tat strategy.

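    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        counter = 0;   /* presumably set once, before year 0 */
        percentage = 0.0;
        if (population < expected_population) {
            counter++;
            if (counter > threshold) {
                percentage = others_percentage;   /* match the estimated deviation of the others */
            }
        }
        if (last_period) {
            percentage = 100.0;
        }
        break;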

In this subject we find another example of miscalculation. In this case its effects are smaller[6] than for the previous subjects. This is also reflected in the goodness-of-fit measures we have considered.

10. Subject C9: (Lack of Comprehension of the game)

| Year | % catch | Motivation | % simul. |
|---|---|---|---|
| 0 | 0% | To increase the fish population | 0% |
| 1 | 0% | To increase the fish population | 0% |
| 2 | 0% | To increase the fish population | 0% |
| 3 | 75% | I catch 3/4 of my fish | 75% |
| 4 | 25% | I catch the remaining 1/4 of my fish | 25% |
| rms | 0 | | |
| U | 0 | | |

Comments: This subject clearly did not understand the game. Nevertheless, it is important to realize that this sort of cognitive mistake can potentially trigger unwanted reactions in other subjects.

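    case :
        /* percentages are expressed in percent (0.0 to 100.0) */
        percentage = 0.0;
        if (next_to_last_period) {   /* the period immediately before the last one */
            percentage = some_value;
        }
        if (last_period) {
            percentage = 100.0 - sum_of_my_prev_percent;   /* whatever is left of "my" fish */
        }
        break;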


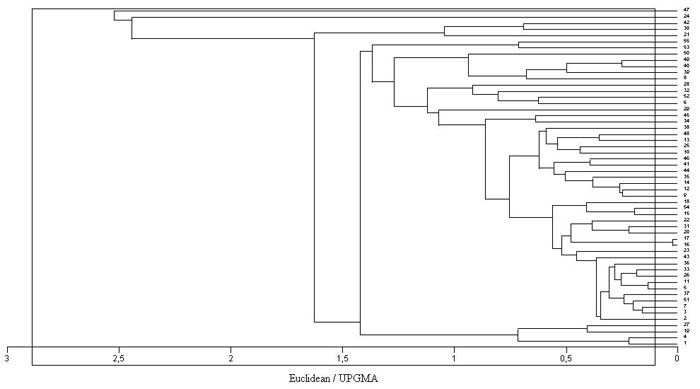

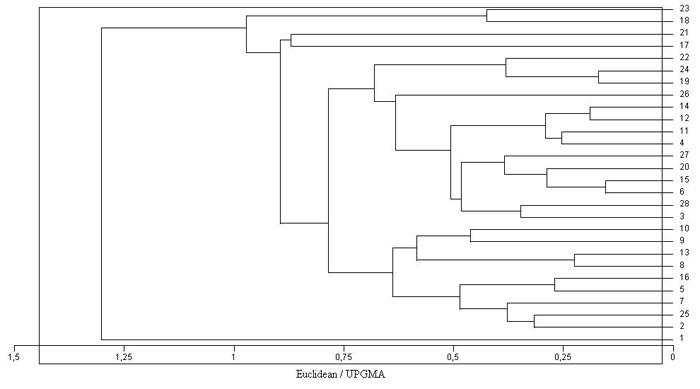

| Figure 8. Clustering of subjects' behavior in the first series of experiments |


| Figure 9. Clustering of subjects' behavior in the second series of experiments |

Table 10: Population composition for first series in prisoner's dilemma experiment

| % | Behavior |
|---|---|
| 19% | Random |
| 19% | Nash effort |
| 20% | Shrinking effort |
| 32% | Hi Random |
| 5% | Low Random |
| 5% | Average effort |

Table 11: Population composition for second series in prisoner's dilemma experiment

| % | Behavior |
|---|---|
| 16% | Nash effort |
| 5% | Average effort |
| 37% | Low Random |
| 10% | Fixed low effort=0.01 |
| 16% | Random |
| 16% | Shrinking effort |

Table 12: Simulation results for first series in prisoner's dilemma experiment

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | rms | U |
|---|---|---|---|---|---|---|---|---|---|---|
| Actual Avg Eff. | 1.354 | 1.395 | 1.200 | 0.807 | 0.931 | 1.010 | 0.940 | 0.853 | | |
| Simul. Avg. Eff. | 1.332 | 1.169 | 1.098 | 1.033 | 0.962 | 0.936 | 0.915 | 0.930 | 0.134 | 0.059 |
| Std. Dev. | 0.07 | 0.06 | 0.1 | 0.08 | 0.13 | 0.07 | 0.08 | 0.08 | | |

Table 13: Simulation results for second series in prisoner's dilemma experiment

| Turn | 1 | 2 | 3 | 4 | 5 | 6 | 7 | rms | U |
|---|---|---|---|---|---|---|---|---|---|
| Actual Avg Eff. | 0.709 | 0.609 | 0.634 | 0.565 | 0.657 | 0.639 | 0.690 | | |
| Simul. Avg. Eff. | 1.193 | 1.010 | 0.923 | 0.863 | 0.779 | 0.725 | 0.785 | 0.29 | 0.19 |
| Std. Dev. | 0.04 | 0.09 | 0.06 | 0.07 | 0.04 | 0.04 | 0.07 | | |

2 The ranking of performance may have consequences on strategies. This may be relevant in the first experiment we examine (see Dal Forno and Merlone 2001), even though subjects did not seem aware of this.

3 Of course, any subject could have made clear their commitments but, given the number of subjects in each experiment, this was unlikely to be relevant. Furthermore, some subjects mentioned they failed to make a credible commitment.

4 We are well aware that asking the subject to motivate their choices after each session could have resulted in different motivations. Both the experimental examples we report here are just examples of the application of our approach.

5 They wrote and circulated some sheets referring to the ‘Tragedy of the Commons’ and strongly suggested a proposal of commitment.

6 Miscalculation occurs only in the last but one year and the error is lower than in previous cases.

AXELROD R. (1980a). "Effective Choice in the Iterated Prisoner's Dilemma". Journal of Conflict Resolution. 24:3-25.

AXELROD R. (1980b). "More Effective Choice in the Prisoner's Dilemma". Journal of Conflict Resolution. 24:379-403.

AXELROD R. (1984). The Evolution of Cooperation. New York: Basic Books.

BANDURA A. (1999). "Social learning". In: The Blackwell Encyclopedia of Social Psychology. Edited by Manstead A.S.R., Hewstone M. Blackwell Publishers. Oxford UK.

BOERO R. (2003). "Inferire comportamenti economici da dati sperimentali: un nuovo approccio alla microfondazione dei modelli basati su agenti". Draft.

BOLTON G.E., Ockenfels A., (2000). "ERC: A Theory of Equity, Reciprocity, and Competition". The American Economic Review. 90(1), pp. 166-193.

BURLANDO R.B., Guala F. (2003). "Overcontribution and Decay in Public Goods Experiments: a Test of the Heterogeneous Agents Hypothesis". Working paper.

DAL FORNO A., Merlone U., (2001), "Incentive Policy and Optimal Effort: Equilibria in Heterogeneous Agents Populations", Quaderni del Dipartimento di Statistica e Matematica Applicata, n.10.

DAL FORNO A., Merlone U. (2002). "A Multi-agent Simulation Platform for Modeling Perfectly Rational and Bounded-rational Agents in Organizations". Journal of Artificial Societies and Social Simulation, Vol. 5, no. 2. https://www.jasss.org/5/2/3.html

DAL FORNO A., Merlone U. (2004). "Personnel Turnover in Organizations: an Agent-Based Simulation Model". Nonlinear Dynamics, Psychology & Life Sciences, 8(2), pp. 205-230

DAWES R.M., McTavish J., Shaklee H. (1977). "Behavior, Communication, and Assumptions about Other People's Behavior in a Commons Dilemma Situation". Journal of Personality and Social Psychology, 35(1), pp. 1-11.

DUFFY J. (2001). "Learning to Speculate: Experiments with Artificial and Real Agents". Journal of Economic Dynamics and Control, 25, 295-319.

DUFFY J., Engle-Warnick J., (2002). "Using Symbolic Regression to Infer Strategies from Experimental Data". In: Evolutionary Computation in Economics and Finance. Edited by S.-H. Chen. Physica-Verlag. New York.

FEHR E., Schmidt K.M., (1999). "A Theory of Fairness, Competition, and Cooperation". The Quarterly Journal of Economics, 114 (3), pp. 817-868.

FUDENBERG D., Levine D.K., (1998). The Theory of Learning in Games. The MIT Press. Cambridge, Mass.

GARVIN S., Kagel J.H., (1994). "Learning in Common Value Auctions: some Initial Observations". Journal of Economic Behavior and Organization, 25, pp. 351-372.

GIGERENZER G., Selten R., (2001). "Rethinking Rationality". Report of the 84th Dahlem Workshop on Bounded Rationality: The Adaptive Toolbox. Berlin, March 1999. Edited by G. Gigerenzer and R. Selten. The MIT Press. Cambridge MA.

LALAND K.N., (2001). "Imitation, Social Learning and Preparedness as Mechanisms of Bounded Rationality". Report of the 84th Dahlem Workshop on Bounded Rationality: The Adaptive Toolbox. Berlin, March 1999. Edited by G. Gigerenzer and R. Selten. The MIT Press. Cambridge MA.

LEDYARD J.O., (1995). "Public Goods: a Survey of Experimental Research". In: The Handbook of Experimental Economics. Kagel J.H., Roth A.E. (Ed.). Princeton University Press. Princeton, NJ.

MACY M.W., (1998). "Social Order in Artificial Worlds". Journal of Artificial Societies and Social Simulation, 1, no. 1, https://www.jasss.org/JASSS/1/1/4.html

MARWELL G., Ames R., (1979). "Experiments on the Provision of Public Goods I: Resources, Interest, Group size, and the Free-rider Problem". American Journal of Sociology, 84 (6), pp.1335-60.

MCCAIN R.A., (2000). Agent-based Computer Simulation of Dichotomous Economic Growth. Kluwer Academic Publisher.

MELLERS B.A., Erev I., Fessler D.M.T., Hemelrijk C.K., Hertwig R., Laland K.N., Scherer K.R., Seeley T.D., Selten R., Tetlock P. E.. (2001). "Group Report: Effects of Emotions and Social Processes on Bounded Rationality". Report of the 84th Dahlem Workshop on Bounded Rationality: The Adaptive Toolbox. Berlin, March 1999. Edited by G. Gigerenzer and R. Selten. The MIT Press. Cambridge MA.

PINDYCK R.S., Rubinfeld D.L., (1991). Econometric Models and Economic Forecasts. Third Edition. McGraw-Hill, Inc.

PRUITT D.G., (1999). "Experimental Games". In: The Blackwell Encyclopedia of Social Psychology. Edited by Manstead A.S.R., Hewstone M. Blackwell Publishers. Oxford UK.

RABIN M., (1993). "Incorporating Fairness into Game Theory and Economics". The American Economic Review, 83(5), pp.1281-1302.

ROTH A.E., (1995a). "Introduction to Experimental Economics". In: The Handbook of Experimental Economics. Kagel J.H., Roth A.E. (Ed.). Princeton University Press. Princeton, NJ.

ROTH A.E., (1995b). "Bargaining Experiments". In: The Handbook of Experimental Economics. Kagel J.H., Roth A.E. (Ed.). Princeton University Press. Princeton, NJ.

SMITH V. L., (1979a). "An Experimental Comparison of Three Public Good Decision Mechanisms". Scandinavian Journal of Economics, 81(1), pp.198-215.

SMITH V. L., (1979b). Research in Experimental Economics, Vol.1. Greenwich, Conn.: JAI Press.

STERMAN J. D. (2000). Business Dynamics. Systems Thinking and Modeling for a Complex World. Boston, MA: Irwin McGraw-Hill.

STIGLER G.J., (1961). "The Economics of Information". Journal of Political Economy, 69, pp. 213-225.


© Copyright Journal of Artificial Societies and Social Simulation, [2004]