Chris Goldspink (2002)

Methodological Implications Of Complex Systems Approaches to Sociality: Simulation as a foundation for knowledge

Journal of Artificial Societies and Social Simulation

vol. 5, no. 1

To cite articles published in the Journal of Artificial Societies and Social Simulation, please reference the above information and include paragraph numbers if necessary

<https://www.jasss.org/5/1/3.html>

Received: 1-Nov-2001

Accepted: 11-Jan-2002

Published: 31-Jan-2002

Abstract

Introduction

The Growing Interest in Simulation Method in the Social Sciences

A computer is an organization of elementary functional components in which, to a high approximation, only the function performed by those components is relevant to the behavior of the whole system (1996: 17-18).

Like deduction, it starts with a set of explicit assumptions. But unlike deduction, it does not prove theorems. Instead simulation generates data that can be analysed inductively. Unlike typical induction, however, the simulated data comes from a specified set of rules rather than direct measurement of the real world (1997: 17).
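The procedure described in this passage can be illustrated with a minimal sketch. It is not drawn from the article: the imitation rule, the function names `run_once` and `experiment`, and all parameter values are assumptions chosen only for illustration. The explicit assumptions are the rules in `run_once`; the list of consensus times is the generated data, which is then summarised inductively rather than derived as a theorem.

```python
import random
import statistics


def run_once(n_agents, max_steps, rng):
    """One run of a hypothetical imitation model.

    Explicit assumptions: each agent holds opinion 0 or 1; at every step
    one randomly chosen agent copies the opinion of another randomly
    chosen agent. Returns the number of steps taken to reach consensus,
    or max_steps if consensus is not reached.
    """
    opinions = [rng.choice([0, 1]) for _ in range(n_agents)]
    for step in range(max_steps):
        total = sum(opinions)
        if total == 0 or total == n_agents:
            return step
        i = rng.randrange(n_agents)
        j = rng.randrange(n_agents)
        opinions[i] = opinions[j]
    return max_steps


def experiment(n_runs=200, n_agents=20, max_steps=10_000, seed=1):
    """Generate simulated data: one consensus time per independent run."""
    rng = random.Random(seed)  # seeded so the data set can be regenerated
    return [run_once(n_agents, max_steps, rng) for _ in range(n_runs)]


if __name__ == "__main__":
    times = experiment()
    # Inductive step: summarise the generated data.
    print("mean steps to consensus:", statistics.mean(times))
    print("median steps to consensus:", statistics.median(times))
```

Because the model is stochastic, a single run says little; it is the distribution over many runs that is analysed.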

The Particular Relevance of Simulation to Understanding Complex Systems

Agent Design

Analysis of Results

Limits to Simulation

If sensitivity analysis has yielded the result that the trajectory of the system depends sensitively on initial conditions and parameters, then quantitative prediction may not be possible at all. And if the model is stochastic, then only a prediction in probability is possible (1997: 49).
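The kind of sensitivity analysis referred to here can be sketched as follows. The logistic map is used purely as a stand-in for a simulation model; it, the function names `logistic_step` and `max_gap`, and the parameter values are assumptions for illustration and are not taken from the article. The sketch runs the same model from two almost identical starting points and records the largest gap that opens between the two trajectories.

```python
def logistic_step(x, r):
    """One step of the logistic map, used here as a stand-in model."""
    return r * x * (1.0 - x)


def max_gap(r, x0, delta, steps):
    """Largest distance between two runs whose starting points differ by delta."""
    a, b = x0, x0 + delta
    largest = abs(a - b)
    for _ in range(steps):
        a, b = logistic_step(a, r), logistic_step(b, r)
        largest = max(largest, abs(a - b))
    return largest


if __name__ == "__main__":
    # r = 2.5: both runs settle on the same fixed point, so the gap stays tiny.
    print("stable regime :", max_gap(2.5, 0.2, 1e-9, 100))
    # r = 4.0: chaotic regime, the initial difference is amplified.
    print("chaotic regime:", max_gap(4.0, 0.2, 1e-9, 100))
```

Where the gap stays of the same order as the initial perturbation, point prediction is feasible; where it grows to the scale of the variable itself, only qualitative or probabilistic statements about the trajectory remain meaningful.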

We can't know what will happen regardless of our acts. We can know what might happen if we act a certain way... In this way of thinking simulation is clearly a tool which helps us not know what will happen, but what can be made to happen (1997: 5).

|

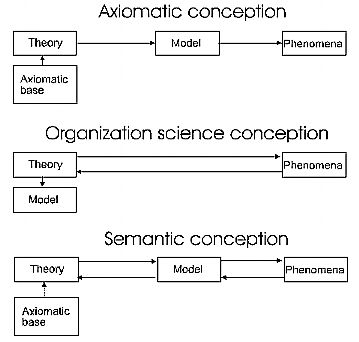

| Figure 1. McKelvey's (1999) conception of the Axiom-theory-model- phenomena relationship. |

ontological adequacy is tested by comparing the isomorphism of the model's idealised structures/processes against that portion of the total "real-world" phenomena defined as "within scope of the theory" (1999: 18).

|

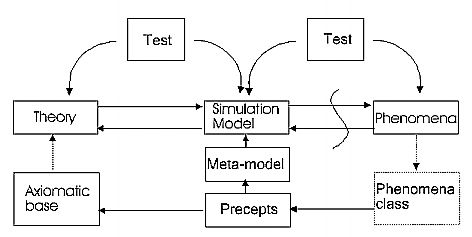

| Figure 2. An extended semantic model. |

Qualitative descriptions seem to be best suited for capturing the circular texture of organisational phenomena. How else could one hope to do justice to the historicity of the phenomena to be explained, if not by narrating how the actions of interacting agents and the occurrence of chance events, unfolding in time, have been intertwined to generate the phenomena at hand? (1998: 303).

Narratives are analytical constructs that unify a number of past or contemporaneous actions and happenings, which might otherwise have been viewed as discrete or disparate, into a coherent relational whole that gives meaning to and explains each of its elements (1993: 1097).

if puny and unknowable details do in fact play an essential role in some particular history, narrative accounts of that history need not have access to that detail. The narrator can still describe and emplot events and the effects of that detail even though the detail itself and its causal power is not recognised. As a causal explanation the resulting narrative would appear, from some ideal vantage, to be incomplete or incorrect. But at least it would remain parallel and in step with events that actually occurred (1991: 18).

AXELROD, R. (1997), 'Advancing the Art of Simulation in the Social Sciences', Complexity, Vol. 3, No. 2, John Wiley, N.Y., pp. 16-22.

BAERT, P. (1998), Social Theory in the Twentieth Century, Polity.

BOOKCHIN, M. (1995), The Ecology of Freedom: The Emergence and Dissolution of Hierarchy, Black Rose, Montreal.

BRASSEL K. H., MÖHRING M., SCHUMACHER E. & TROITZSCH K. G. (1997), 'Can Agents Cover All the World?', in Conte R., Hegselmann R. & Terna P. eds. Simulating Social Phenomena, Springer, Berlin.

BURRELL G. & MORGAN G. (1994), Sociological Paradigms and Organisational Analysis, Virago, London.

BYRNE, D. (1997) 'Simulation - A Way Forward?' Sociological Research Online, Vol. 2, no. 2, http://www.socresonline.org.uk/socresonline/2/2/4.html

CARLEY, K. M., PRIETULA M.J. & ZHIANG L. (1998), 'Design vs Cognition: The Interaction of Agent Cognition and Organizational Design on Organizational Performance', Journal of Artificial Societies and Social Simulation, Vol. 1, No. 3, https://www.jasss.org/1/3/4.html

CASTI, J.L. (1994), Complexification: Explaining a Paradoxical World Through the Science of Surprise, Abacus, U.K.

CHECKLAND, P. & SCHOLES J. (2000), Soft Systems Methodology in Action, Wiley, Chichester.

CONTE, R., HEGSELMANN R. & TERNA P. eds. (1997) Simulating Social Phenomena, Springer, Berlin.

CONTE R (1998), email subject titled, Carts and horses in computer simulation for the social sciences, posted to SIMSOC list server, simsoc@mailbase.ac.uk, 26 January 13:55:02.

EPSTEIN J.M & AXTELL R. (1996), Growing Artificial Societies, MIT Press, Cam. Ma.

EVE, R.A., HORSFALL S & LEE M.E., (1997), Chaos, Complexity and Sociology, Sage, London.

FERBER J. (1999), Multi-agent Systems: An Introduction to Distributed Artificial Intelligence, Addison-Wesley, New York.

FISHWICK P. (1995) 'What is Simulation', http://www.cis.ufl.edu/~fishwick/introsim/node1.html.

GALBRAITH J.K. (1994), The World Economy Since the Wars, Sinclair-Stevenson, London.

GILBERT, N. (1996), 'Computer Simulation of Social Processes', Social Research Update, Issue Six, http://www.soc.surrey.ac.uk/sru/SRU6.html.

GILBERT, N. & CONTE R. eds. (1995), Artificial Societies, UCL Press, London.

GILBERT, N. & TROITZSCH K. G. (1999), Simulation for the Social Scientist, Open University Press, Buckingham.

GOLDSPINK, C. (2000a), 'Contrasting linear and non-linear perspectives in contemporary social research', Emergence, Vol. 2, No. 2, pp. 72-101.

GOLDSPINK, C. (2000b), 'Modelling social systems as complex: Towards a social simulation meta-model', Journal of Artificial Societies and Social Simulation, Vol. 3, No. 2, https://www.jasss.org/3/2/1.html

GRIFFIN L.J. (1993), 'Narrative, Event-Structure Analysis, and Causal Interpretation in Historical Sociology', American Journal of Sociology, Vol. 98, No. 5, pp. 1094-1133.

HANNEMAN R. A. (1995), 'Simulation Modeling and Theoretical Analysis in Sociology', Sociological Perspectives, Vol. 38, No. 4, pp. 457-462.

HANNEMAN R. A. & PATRICK S. (1997), 'On the Uses of Computer-assisted Simulation Modeling in the Social Sciences', Sociological Research Online, Vol. 2, No. 2, http://www.socresonline.org.uk/socresonline/2/2/5.html

HODGSON G. M. (1996), Economics and Institutions, Polity Press, Oxford.

HOLLAND, J.H. (1998), Emergence: From Chaos to Order, Addison-Wesley, MA.

ILGEN, D.R. & HULIN C.L. eds. (2000), Computational Modeling of Behavior in Organizations: The Third Scientific Discipline, American Psychological Association, Washington DC.

KENNEDY J. & EBERHART R.C. (2000), Swarm intelligence, Morgan Kaufmann.

KOLLMAN K, MILLER J.H. & PAGE S. (1997) 'Computational Political Economy', in Arthur W.B., Durlauf S. N. & Lane D.A. (eds.), The Economy as an Evolving Complex System II, Addison-Wesley, Reading Ma.

LEIK, R. K. & MEEKER B. F. (1995), 'Computer Simulation for Exploring Theories: Models of Interpersonal Cooperation and Competition', Sociological Perspectives, Vol. 38, No. 4, pp. 463-482.

LEWIN R., PARKER T. & REGINE B. (1998), 'Complexity Theory and the Organization: Beyond the Metaphor', Complexity, Vol. 3, No. 4, John Wiley, pp. 36-40.

LINCOLN, Y.S. & GUBA E.G. (1985), Naturalistic Inquiry, Sage, N.Y.

MCTAGGART R. (1991), 'Principles for Participatory Action Research', Adult Education Quarterly, Vol. 41, No. 3, pp. 168-187.

MARION, R. (1999), The Edge of Organization: Chaos and Complexity Theories of Formal Social Systems, Sage, CA.

MCKELVEY, B., (1997), 'Quasi-Natural Organisation Science', Organization Science,

MCKELVEY, B. (1999), 'Complexity Theory in Organization Science: Seizing the Promise or Becoming a Fad?', Emergence, Vol. 1, No. 1, pp. 5-32.

ORMEROD, P. (1995), The Death of Economics, Faber and Faber, London.

ORMEROD, P. (1998), Butterfly Economics, Faber & Faber, London.

PARUNAK, V. (1997), 'Towards the Specification and Design of Industrial Synthetic Ecosystems', Paper presented at the Fourth International Workshop on Agent Theories, Architectures and Languages (ATAL'97), Industrial Technology Institute, http://citeseer.nj.nec.com/parunak97toward.html

PRIETULA, M.J. CARLEY K.M. & GASSER L. eds. (1998), Simulating Organizations, M.I.T. Press, Ca.

REASON P. & ROWAN J. eds. (1981) Human Inquiry: A Sourcebook of New Paradigm Research, John Wiley.

REISCH G.A. (1991), 'Chaos, History and Narrative', History and Theory, Vol. 30, pp. 1-20.

ROSENAU P. M. (1992), Post-modernism and the social sciences, Princeton University Press, N.J.

SHRECKENGOST (1985), 'Dynamic Simulation Models: How Valid Are They?', in Rouse B.A, Kozel N. & Richards L.G (eds) Self Reporting Methods of Estimating Drug Use: Meeting Current Validity Challenges, NIDA Research Monograph 57, Washington.

SIMON, H.A. (1996), The Sciences of the Artificial, 3rd edition, The MIT Press, Cam. Ma.

STEWART I. (1990), Does God Play Dice - The New Mathematics of Chaos, Penguin.

TROITZSCH K.G. (1997), 'Social Science Simulation: Origins, Prospects and Purposes', in Conte R. Hegselmann R. & Terna P. eds. (1997) Simulating Social Phenomena, Springer, Berlin.

WHYTE W.F. ed. (1991), Participatory Action Research, Sage.

WINTER R. (1987) Action Research and the Nature of Social Enquiry, Gower, U.K.

© Copyright Journal of Artificial Societies and Social Simulation, 2002