A Pragmatic Reading of Friedman's Methodological Essay and What It Tells Us for the Discussion of ABMs

Journal of Artificial Societies and Social Simulation

12 (4) 6

<https://www.jasss.org/12/4/6.html>

Received: 04-Aug-2009 Accepted: 20-Aug-2009 Published: 31-Oct-2009

Abstract

|

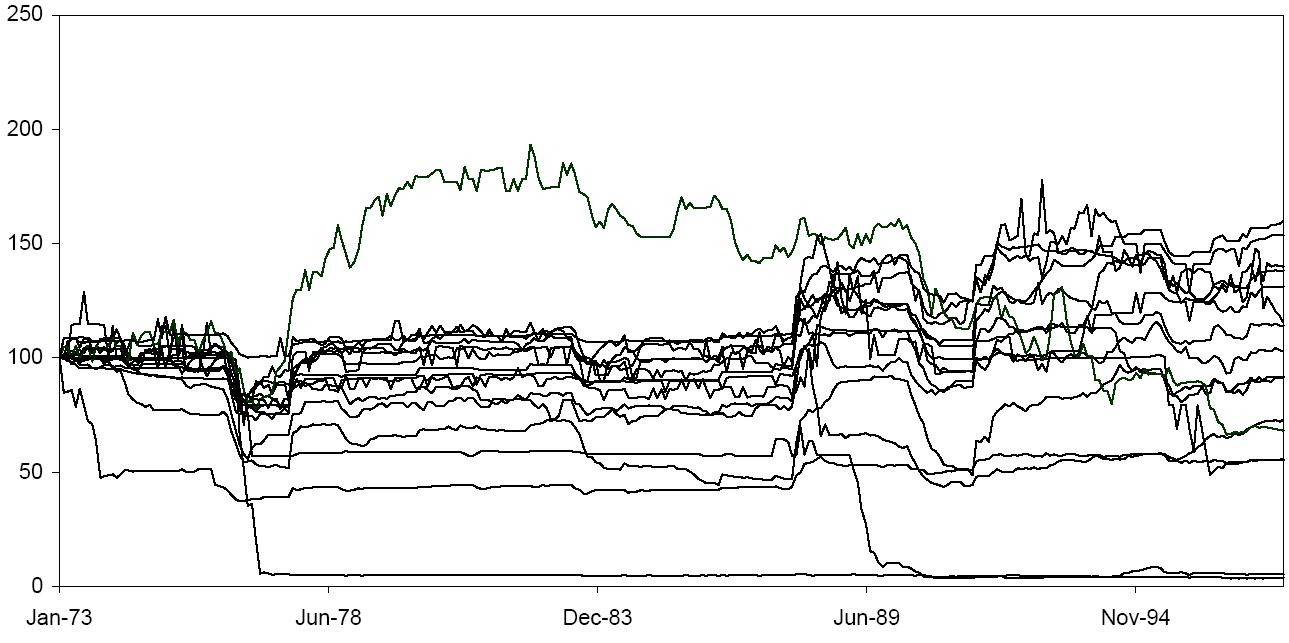

| Figure 1. Aggregate Water demand from model runs specified in Edmonds and Moss (2004), p.10. |

4 We do not provide an introductory summary of Friedman's essay, as it is hard to summarize neutrally given the large number of differing interpretations in the literature. The pragmatic interpretation developed in this text is therefore not the only one consistent with the essay. In our view, however, it is the one that best fits Friedman's general point, even if it has to live with some inconsistencies.

5 See Schliesser (2005), Schröder (2004) and Hoover (2004) for some other recent interpretations of Friedman's classic that agree on this point.

6 See Hausman (1992b), p.70-73.

10 Note that Friedman equates "realistic" assumptions with descriptively accurate ones. Therefore, his thesis is not an ontological, but a methodological one. We stick to this notion of realism throughout the paper.

12 This view sometimes seems to be attributed to Friedman, even though it is obviously absurd (see e.g. Samuelson (1963), p.233). Such critics seem to forget that Friedman accepts only those assumptions that lead to correct predictions. Moreover, it is simply a logical error to infer from Friedman's "the more significant a theory, the more unrealistic the assumptions" that unrealistic assumptions imply significant theories.

14 "Realisticness" is a term introduced by Uskali Mäki in order to distinguish descriptively accurate assumptions from the philosophical position of realism. See e.g. Mäki (1998). This paper equates "realistic assumptions" with "empirically adequate assumptions" and hence avoids the philosophical discussion about realism.

17 Of course, assuming rational behaviour is not always fruitful. For example, situations that involve decisions under uncertainty, or innovation processes that require creativity, are unlikely to be fruitfully reconstructed by rational choice models. However, since some situations can be reconstructed by rational choice models, we cannot judge this methodological principle as such on grounds of its (im)plausibility; we can only judge the aptness of a concrete application of the principle.

18 Marcel Boumans adds that Friedman encourages empirically exploring the domain of a negligibility assumption. See Boumans (2003), p.320.

19 Musgrave takes Newton's reduction of planets to mass points as an example of a heuristic assumption. Rather than refuting Friedman's claim, however, this seems to confirm his view that significant theories are often based on unrealistic heuristic assumptions.

20 This statement does not touch on the philosophical position of scientific realism, which is a theory about the truth status of causal connections in scientific theories. The above argument is directed against a more naïve form of realism, which identifies realism with a one-to-one correspondence to observation.

21 The rational choice assumption often leads to false implications and cannot be fruitfully applied to some fields of economics, such as innovation economics. However, since many problems can be tackled (if only to a certain degree) with the (implausible) rationality assumption (see e.g. the works of Gary Becker), it should be clear that this assumption cannot be rejected in advance merely by calling it unrealistic.

22 See e.g. Mäki (2008), p.14.

23 The current paper largely ignores the philosophical discussion about realism and anti-realism because this can be separated completely from questions of theory evaluation.

26 The essential difference between Hausman's and Friedman's position boils down to this point: Hausman favours general models with broad predictive success whereas Friedman would argue that this is neither feasible nor appropriate.

28 See Hirsch and DeMarchi (1990) for a detailed analysis of the pragmatic elements in Friedman's methodology. In particular, they argue that Friedman was heavily influenced by John Dewey's views. Hence, the term "pragmatic" is to be understood in Dewey's sense in this text. However, it is not to be understood in the sense of a pragmatic theory of truth; it is meant to shift the focus to the truth-independent usefulness of theories.

29 In that sense, Friedman's essay lies completely beyond the traditional distinction between realism (theories can be true) and instrumentalism (theories are neither true nor false).

30 See Edmonds and Moss (2004).

31 The distinction between KISS and KIDS does not deny that there may be cases in which descriptively adequate assumptions are very simple. In these cases, advocates of KISS and KIDS do not disagree. In the vast majority of cases, however, there is a huge difference between building models that are as descriptively accurate as possible and building models that are as simple as possible.

32 See Schelling (1971) for the locus classicus.

33 This result is robust under various changes, see e.g. Flache and Hegselmann (2001).

34 Note that even the "toughest" sciences like physics make heavy use of idealisations or unrealistic assumptions. Just think of planets as "mass points" or laws that apply only in a vacuum. Without radical simplification, many of the basic laws of physics would never have been found.

35 Note, however, that we do not draw the conclusion that simple models are more realistic than complex ones. For advocates of the KISS modelling approach it is crucially important to keep in mind how incomplete such models are and how difficult it is to transfer their results to the real world.

36 The term "explanation" is itself under philosophical discussion. The covering law model of explanation is generally considered outdated due to several difficulties. We do not enter into this philosophical discussion here, but stick to a commonsense notion of explanation.

37 See Edmonds and Moss (2004).

38 The policy agent suggests a lower usage of water if there is less than a critical amount of rain during a month. This influences the agents to a certain degree.
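The rule described in note 38 can be illustrated with a minimal sketch. This is not the implementation of Edmonds and Moss (2004): the function names, the fixed 20% suggested reduction, and the per-household `compliance` weight are all assumptions introduced here purely for illustration.

```python
def policy_suggestion(rainfall, critical_rain, reduction=0.2):
    """Suggested fraction of normal water usage for one month.

    Assumption: the policy agent asks for a fixed relative reduction
    whenever monthly rainfall falls below the critical amount.
    """
    return 1.0 - reduction if rainfall < critical_rain else 1.0


def household_demand(base_demand, suggestion, compliance):
    """Demand of one household after hearing the suggestion.

    compliance lies in [0, 1]: 0 ignores the policy agent entirely,
    1 follows the suggestion fully (a hypothetical influence weight).
    """
    return base_demand * ((1.0 - compliance) + compliance * suggestion)


def aggregate_demand(base_demands, compliances, rainfall, critical_rain):
    """Sum of household demands for one month."""
    suggestion = policy_suggestion(rainfall, critical_rain)
    return sum(
        household_demand(base, suggestion, weight)
        for base, weight in zip(base_demands, compliances)
    )


# Two households: one ignores the policy agent, one follows it fully.
dry_month = aggregate_demand([100.0, 100.0], [0.0, 1.0], rainfall=10, critical_rain=30)
wet_month = aggregate_demand([100.0, 100.0], [0.0, 1.0], rainfall=50, critical_rain=30)
```

In this sketch the suggestion only lowers aggregate demand in dry months, and only to the degree that households comply, which mirrors the "to a certain degree" qualification in the note.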

39 Edmonds and Moss (2004), p. 8.

40 Edmonds and Moss (2004), p.10.

41 See Edmonds and Moss (2004), p.10.

42 Edmonds and Moss (2004), p.8.

43 See Chwif, Barretto and Paul (2000), p.452.

44 See e.g. Brenner and Werker (2007), p.230.

45 A nice example of this can be found in the literature on the evolution of cooperation, which started with a simple tit-for-tat model that was thereafter refined in various respects. See Ball (2004), chapters 17-18 for an overview.

46 See Peirce (1867), 5, p. 145. Again, we suspend judgement on whether accepting an explanation implies accepting its (ontological) truth.

49 See Brenner and Werker (2007), p.233.

BOUMANS, Marcel (2003): How to Design Galilean Fall Experiments in Economics; in: Philosophy of Science 70, p. 308-329.

BRENNER, Thomas and Werker, Claudia (2007): A Taxonomy of Inference in Simulation Models; in: Computational Economics 30, p. 227-244.

CHWIF, Leonard, Barretto, Marcos Ribeiro Pereira and Paul, Ray J. (2000): On simulation model complexity; in: Proceedings of the 32nd conference on Winter simulation p. 449-455.

EDMONDS, Bruce and Moss, Scott J. (2004): From Kiss to KIDS - an 'antisimplistic' modelling approach; in: P. Davidsson et al. (eds.): Multi Agent Based Simulation; Springer, Lecture Notes in Artificial Intelligence, 3415: p.130-144. http://bruce.edmonds.name/kiss2kids/kiss2kids.pdf, Download Date: 23.9.2008.

FLACHE, Andreas and Hegselmann, Rainer (2001): Do Irregular Grids make a Difference? Relaxing the Spatial Regularity Assumption in Cellular Models of Social Dynamics; in: Journal of Artificial Societies and Social Simulation vol. 4 no. 4, https://www.jasss.org/4/4/6.html, Download Date: 10.10.2008.

FRIEDMAN, Milton (1953): "The Methodology of Positive Economics"; in: Friedman, Milton (1953): Essays in Positive Economics, University of Chicago Press, p. 3-43.

HAUSMAN, Daniel M. (1992a): The Inexact and Separate Science of Economics; Cambridge University Press, Edition of 1992.

HAUSMAN, Daniel M. (1992b): Essays on Philosophy and Economic Methodology; Cambridge University Press, Edition of 1992.

HIRSCH, Abraham and DeMarchi, Neil (1990): Milton Friedman - Economics in Theory and Practice; Harvester Wheatsheaf, Edition of 1990.

HOOVER, Kevin D. (2004): Milton Friedman's Stance: The Methodology of Causal Realism; in: Working Paper University of California, Davis 06-6 http://www.econ.ucdavis.edu/working_papers/06-6.pdf, Download Date: 12.02.2008.

KALDOR, Nicholas (1978): Further Essays on Economic Theory; Gerald Duckworth & Co Ltd., Edition of 1981.

MÄKI, Uskali (1998): "Entry 'As If'"; in: Davis, John B. et al. (1998): The Handbook of Economic Methodology, Cheltenham Northampton: p. 25-27.

MÄKI, Uskali (2000): Kinds of Assumptions and Their Truth: Shaking an Untwisted F-Twist; in: Kyklos 53/3, p. 317-335.

MÄKI, Uskali (2008): Realistic realism about unrealistic models (to appear in the Oxford Handbook of the Philosophy of Economics); in: Personal Homepage http://www.helsinki.fi/filosofia/tint/maki/materials/MyPhilosophyAlabama8b.pdf, Download Date: 14.11.2008.

MALERBA, F., Nelson, R.R., Orsenigo, L. and Winter, S.G. (1999): History friendly models of industry evolution: the computer industry; in: Industrial and Corporate Change Vol. 8, p. 3-40.

MUSGRAVE, Alan (1981): 'Unreal Assumptions' in Economic Theory: The F-Twist Untwisted; in: Kyklos Vol.34/3, p. 377-387.

PEIRCE, Charles S. (1867): Collected Papers of Charles Sanders Peirce; Harvard University Press, Edition of 1965.

SAMUELSON, Paul (1963): Problems of Methodology - Discussion; in: American Economic Review 54, p. 232-236.

SCHELLING, Thomas C. (1971): Dynamic Models of Segregation; in: Journal of Mathematical Sociology 1, p. 143-186.

SCHLIESSER, Eric (2005): Galilean Reflections on Milton Friedman's "Methodology of Positive Economics", with Thoughts on Vernon Smith's "Economics in the Laboratory"; in: Philosophy of the Social Sciences Vol. 35, p. 50-74.

SCHRÖDER, Guido (2004): "Zwischen Instrumentalismus und kritischem Rationalismus?—Milton Friedmans Methodologie als Basis einer Ökonomik der Wissenschaftstheorie"; in: Pies, Ingo/Leschke, Martin (2004): Milton Friedmans Liberalismus, Tübingen: Mohr Siebeck, p. 169-201.

© Copyright Journal of Artificial Societies and Social Simulation, [2009]