Pedro Ribeiro de Andrade, Antonio Miguel Vieira Monteiro, Gilberto Câmara and Sandra Sandri (2009)

Games on Cellular Spaces: How Mobility Affects Equilibrium

Journal of Artificial Societies and Social Simulation

vol. 12, no. 1, 5

<https://www.jasss.org/12/1/5.html>


Received: 01-Apr-2008 Accepted: 04-Jan-2009 Published: 31-Jan-2009

Abstract

Table 1: Game payoffs, in pairs (A, B)

| | B escalates | B does not escalate |
|---|---|---|
| A escalates | (-10, -10) | (+1, -1) |
| A does not escalate | (-1, +1) | (0, 0) |
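The payoffs in Table 1 describe a two-player game in which each player either escalates or does not. A minimal Python sketch of the expected payoff under mixed strategies follows. It assumes that a strategy is fully described by one escalation probability and that the two players randomize independently. The names `PAYOFF_A` and `expected_payoff` are illustrative and do not come from the article.

```python
# Payoffs to player A from Table 1, keyed by (A escalates?, B escalates?).
PAYOFF_A = {
    (True, True): -10.0,
    (True, False): 1.0,
    (False, True): -1.0,
    (False, False): 0.0,
}


def expected_payoff(p_a: float, p_b: float) -> float:
    """Expected payoff to A when A escalates with probability p_a and B with p_b.

    Assumption: the two players choose independently of each other.
    """
    total = 0.0
    for a_escalates in (True, False):
        for b_escalates in (True, False):
            prob_a = p_a if a_escalates else 1.0 - p_a
            prob_b = p_b if b_escalates else 1.0 - p_b
            total += prob_a * prob_b * PAYOFF_A[(a_escalates, b_escalates)]
    return total


if __name__ == "__main__":
    # Two players who each escalate half of the time.
    print(round(expected_payoff(0.5, 0.5), 2))
```

Under these assumptions, two players who each escalate with probability 0.5 expect a payoff of -2.5 per game, which agrees with the s0.5 against s0.5 entry of Table 2.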

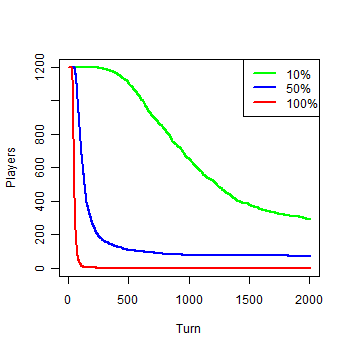

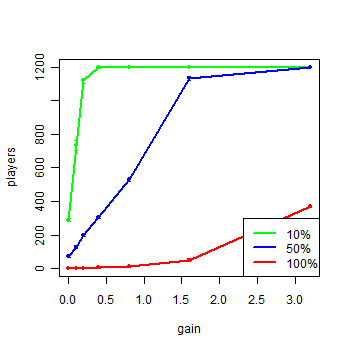

Figure 1a. Results of the first experiment: a) Number of agents

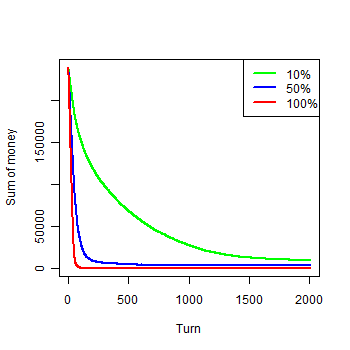

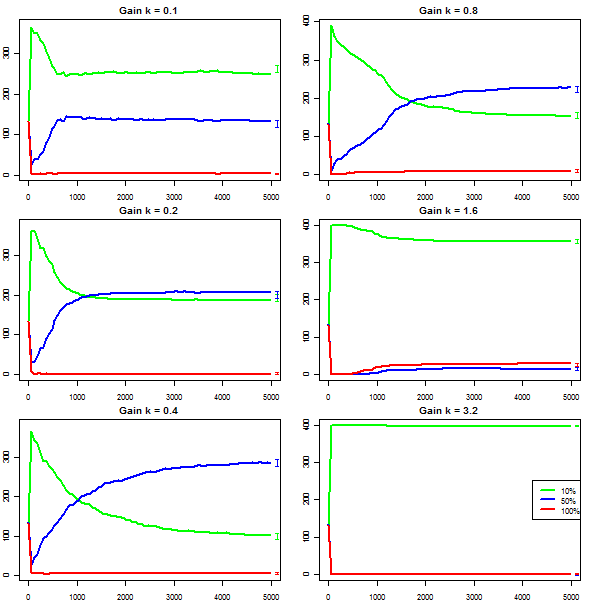

Figure 1b. Results of the first experiment: b) Money by groups

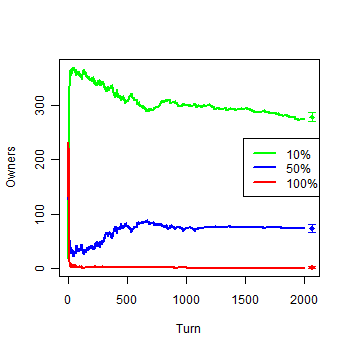

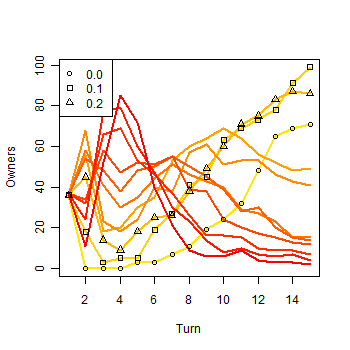

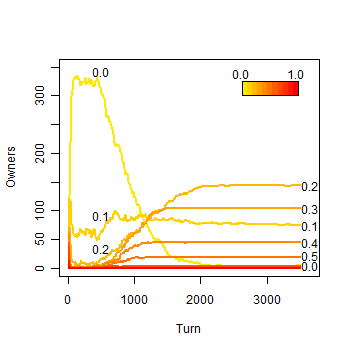

Figure 1c. Results of the first experiment: c) Owners by groups

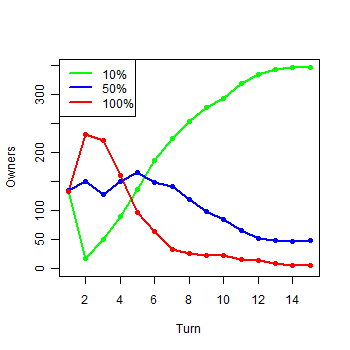

Figure 1d. Results of the first experiment: d) Owners in the first 15 turns

Figure 2. Example of a run of the first experiment

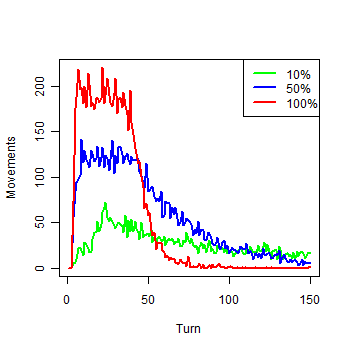

Figure 3. Movements of each group in the first 150 turns

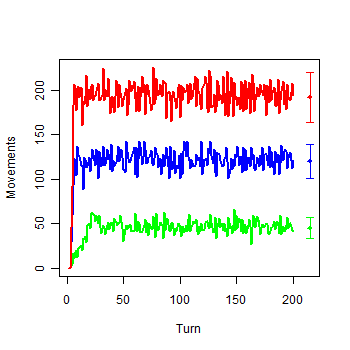

Figure 4a. Model with infinite Money: a) Movements of each group

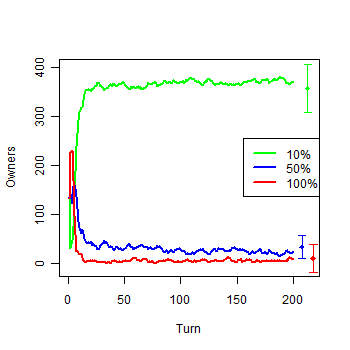

Figure 4b. Model with infinite Money: b) Owners by groups

Table 2: Impact of the escalating probability in the movement

| | s0.1 | s0.5 | s1.0 |
|---|---|---|---|
| Against s0.1 | -0.10 | -0.10 | -0.10 |
| Against s0.5 | -0.90 | -2.50 | -4.50 |
| Against s1.0 | -1.90 | -5.50 | -10.00 |
| Mean | -0.97 | -2.70 | -4.87 |
| Turns before an agent moves | 20.61 | 7.40 | 4.10 |
| Expected movements by turn | 58.22 | 162.16 | 292.68 |
| Movements with infinite money | 47.25 | 123.20 | 196.13 |
| Difference | 10.97 | 38.96 | 96.55 |
| Decrease (%) | 18.84 | 24.02 | 32.98 |
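The payoff rows of Table 2 can be recomputed from the payoffs in Table 1. The sketch below is hedged: it assumes that s0.1, s0.5 and s1.0 denote strategies that escalate with probability 0.1, 0.5 and 1.0, that opponents randomize independently, and that the "Mean" row is an unweighted average over the three opponents. All function names are illustrative. The movement counts are copied from the table, not derived.

```python
PROBS = (0.1, 0.5, 1.0)  # assumed escalation probabilities of s0.1, s0.5, s1.0


def payoff(p_self: float, p_other: float) -> float:
    """Expected payoff per game, expanded from the four cells of Table 1."""
    return (-10.0 * p_self * p_other            # both escalate
            + 1.0 * p_self * (1.0 - p_other)    # only self escalates
            - 1.0 * (1.0 - p_self) * p_other)   # only the opponent escalates


def mean_payoffs(probs=PROBS):
    """Unweighted mean payoff of each strategy against all three strategies."""
    return {p: sum(payoff(p, q) for q in probs) / len(probs) for p in probs}


# Rows copied from Table 2 (movements per turn).
EXPECTED = {0.1: 58.22, 0.5: 162.16, 1.0: 292.68}
INFINITE_MONEY = {0.1: 47.25, 0.5: 123.20, 1.0: 196.13}


def decrease_percent(expected: float, observed: float) -> float:
    """Relative shortfall of observed movements against expected ones, in %."""
    return 100.0 * (expected - observed) / expected


if __name__ == "__main__":
    means = mean_payoffs()
    for p in PROBS:
        diff = EXPECTED[p] - INFINITE_MONEY[p]
        pct = decrease_percent(EXPECTED[p], INFINITE_MONEY[p])
        print(f"s{p}: mean={means[p]:.2f} diff={diff:.2f} decrease={pct:.2f}%")
```

Under these assumptions the recomputed means round to -0.97, -2.70 and -4.87, and the differences match the table. The recomputed percentages agree with the "Decrease (%)" row to within about 0.01, which suggests the published values were derived from unrounded figures.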

Figure 5. Agents of each group in the model with extra gain after turn 3000

Figure 6. Owners by group with six values of extra gain

Figure 7a. Results of a single run with eleven strategies: a) Owners in the first 15 turns

Figure 7b. Results of a single run with eleven strategies: b) Owners along the simulation

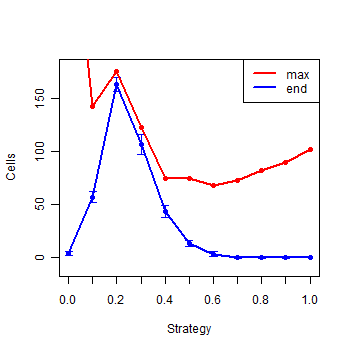

Figure 8. Summary of the eleven strategies at the end of the simulations

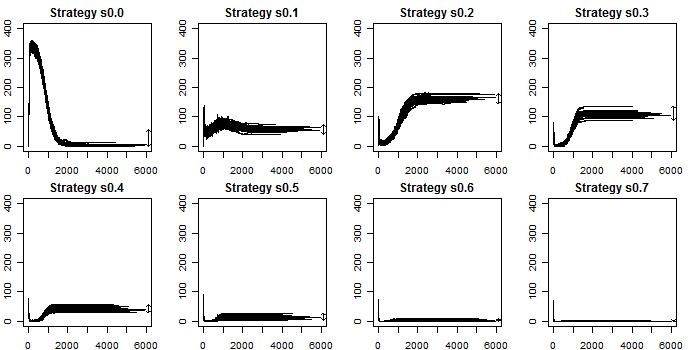

Figure 9. Ownership of strategies along all simulations



© Copyright Journal of Artificial Societies and Social Simulation, [2009]