P.C. Buzing, A.E. Eiben and M.C. Schut (2005)

Emerging communication and cooperation in evolving agent societies

Journal of Artificial Societies and Social Simulation vol. 8, no. 1

<https://www.jasss.org/8/1/2.html>

To cite articles published in the Journal of Artificial Societies and Social Simulation, reference the above information and include paragraph numbers if necessary

Received: 12-Dec-2003 Accepted: 20-Sep-2004 Published: 31-Jan-2005

Abstract


Table 1: An overview of VUSCAPE monitors

| Type | Name | Denotes | Domain |
|---|---|---|---|
| Agent | age | age of the agent | [0:100] |
| Agent | listenPref | whether agent listens | [0:1] |
| Agent | talkPref | whether agent talks | [0:1] |
| Agent | sugarAmount | sugar contained by an agent | [0:∞] |
| Agent | inNeedOfHelp | percentage of agents on sugar > coopThresh | [0:1] |
| Agent | cooperating | percentage of agents that cooperate | [0:1] |
| Agent | exploreCell | percentage of agents that moved to a new cell | [0:1] |
| Agent | hasEaten | amount of food that agent has eaten | [0:4] |
| World | numberOfAgents | number of agents | [0:∞] |
| World | numberOfBirths | number of newly born agents | [0:∞] |
| World | numberOfDeaths | number of agents that have just died | [0:∞] |

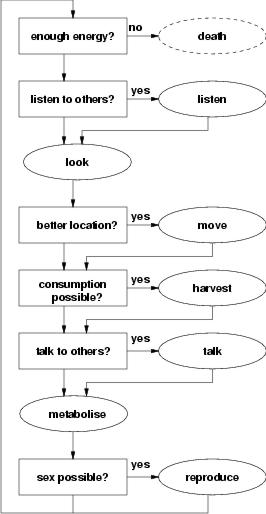

Figure 1. The agent control loop in VUSCAPE

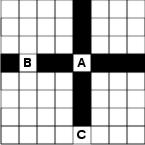

Figure 2. Communication in VUSCAPE over the axes. In this example, agent A multicasts information, which can be received by agents B and C.

Table 2: Experimental settings. Parameters not explained in this article are identical to those in SUGARSCAPE

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Height of the world | 50 | Minimum death age | 60 |
| Width of the world | 50 | Maximum death age | 100 |
| Initial number of agents | 1000 | Minimum begin child bearing age | 12 |
| Sugar richness | 1.0 | Maximum begin child bearing age | 15 |
| Sugar growth rate | 1.0 | Minimum end child bearing age male | 50 |
| Minimum metabolism | 1.0 | Maximum end child bearing age male | 60 |
| Maximum metabolism | 1.0 | Minimum end child bearing age female | 40 |
| Minimum vision | 1.0 | Maximum end child bearing age female | 50 |
| Maximum vision | 1.0 | Reproduction threshold | 0 |
| Minimum initial sugar | 0.0 | Mutation sigma | 0.1 |
| Maximum initial sugar | 100.0 | Sex recovery | 0 |

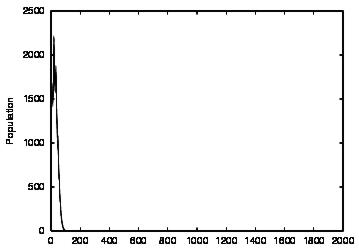

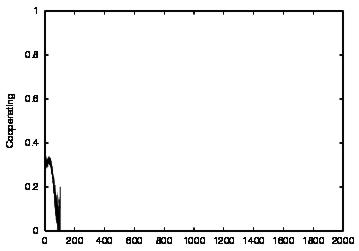

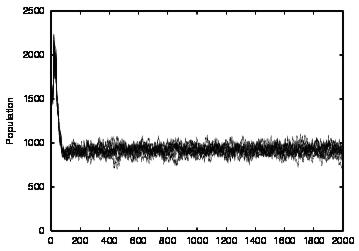

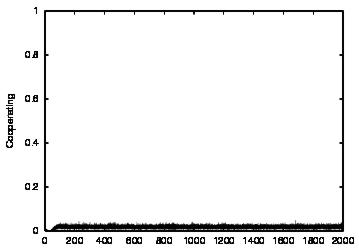

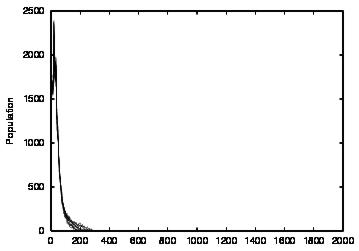

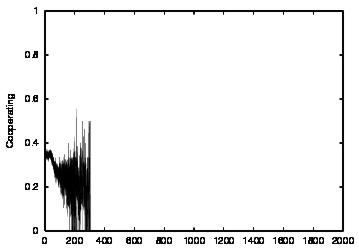

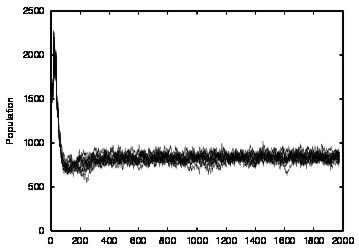

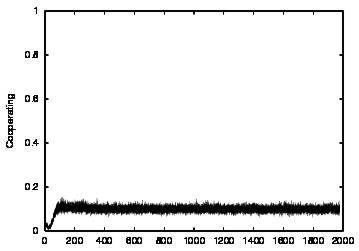


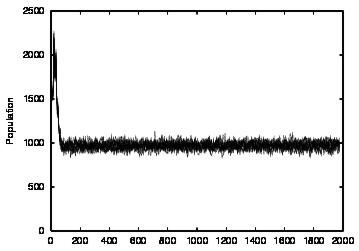

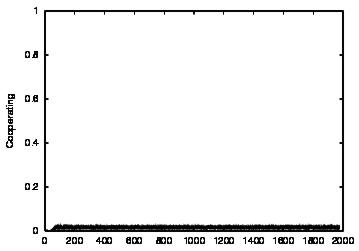

Figure 3. Results for non-learning agents with communication suppressed (COM0). The results are shown for CT = 0 (first row) and CT = 4 (second row). The population size over time is shown in column (a) and the proportion of cooperations among all performed actions over time is shown in column (b).


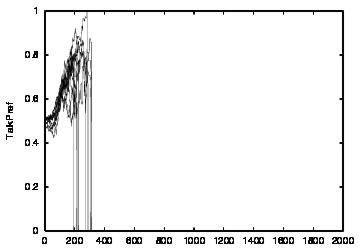

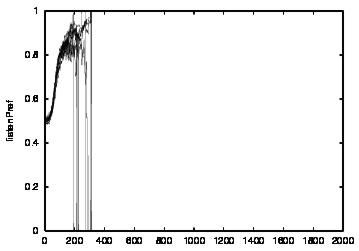

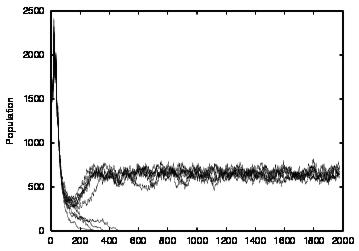

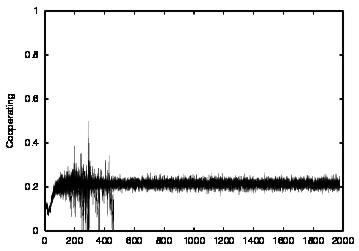

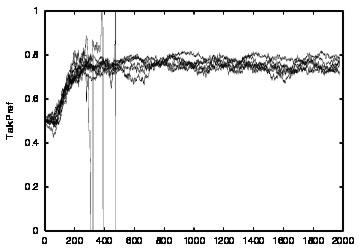

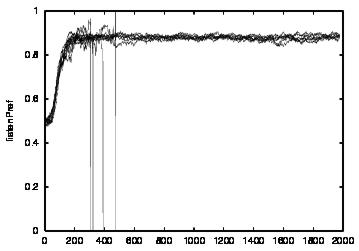

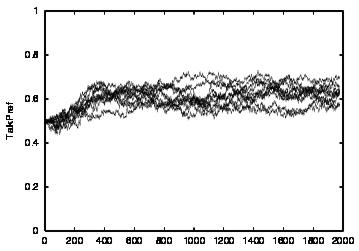

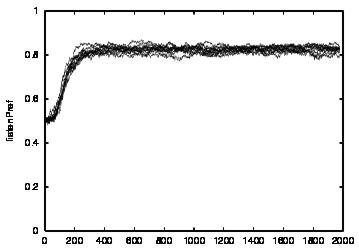

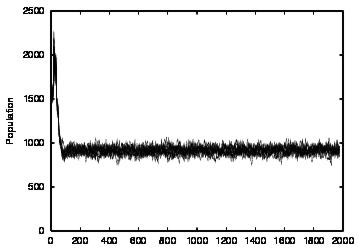

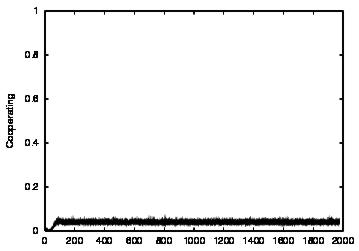

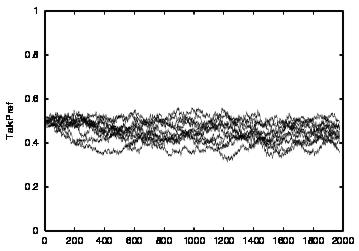

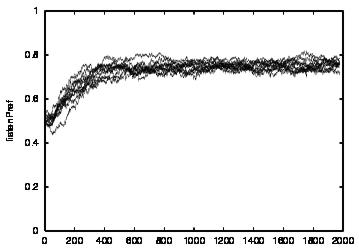

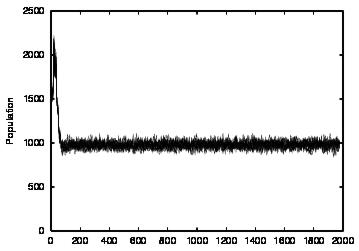

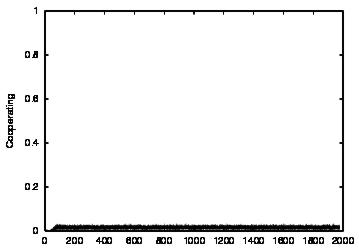

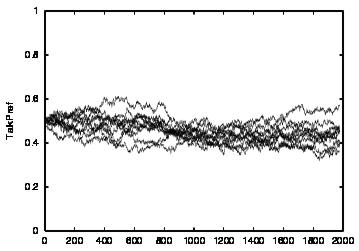

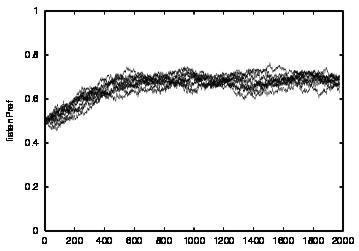

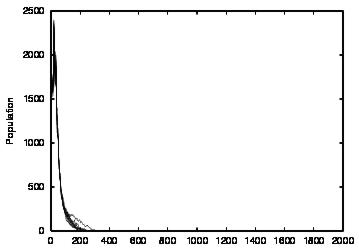

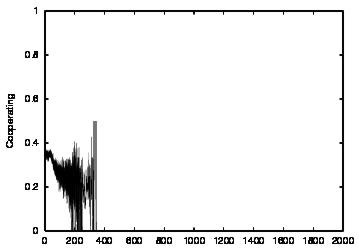

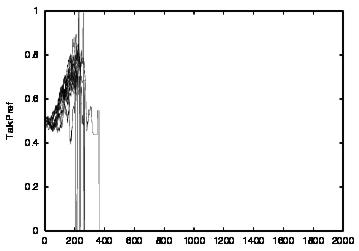

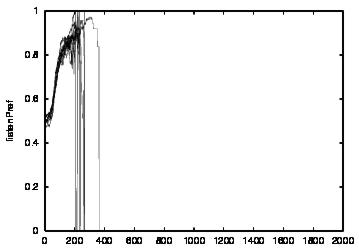

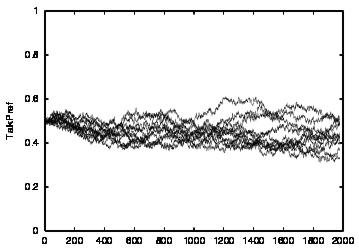

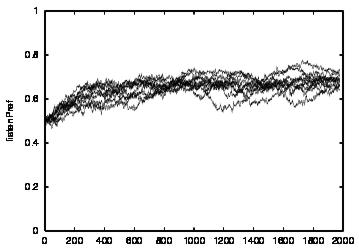

Figure 4. Results for learning agents with communication enabled and message removal method COM1 active. From top to bottom, the results are shown for CT = 0, 1, 2, 3 and 4. The population size over time is shown in column (a), the proportion of cooperations among all performed actions over time in column (b), the average talk preference of agents in column (c) and the average listen preference in column (d). Each figure shows the results of 10 independent runs overlaid.

Figure 5. Results for learning agents with communication enabled and message removal method COM2 active. The results are shown for CT = 0 (first row) and CT = 4 (second row). The population size over time is shown in column (a), the proportion of cooperations among all performed actions over time in column (b), the average talk preference of agents in column (c) and the average listen preference in column (d). The runs are shown overlaid.

Notes

² Each variable can be monitored as average, minimum, maximum, sum, variance, standard deviation, frequency, or any combination of these.
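As an illustration of the aggregate views listed in this note, the following minimal Python sketch summarises one monitored variable. It is not the VUSCAPE or JAWAS implementation: the function name `summarise`, the sample `ages` readings, and the choice of population (rather than sample) variance are all assumptions made for the example.

```python
import statistics
from collections import Counter


def summarise(values):
    """Return the aggregate views named in the note for one monitored variable."""
    values = list(values)
    return {
        "average": statistics.fmean(values),
        "minimum": min(values),
        "maximum": max(values),
        "sum": sum(values),
        # Assumption: population variance and standard deviation.
        "variance": statistics.pvariance(values),
        "standard deviation": statistics.pstdev(values),
        # Frequency: how often each distinct value was observed.
        "frequency": Counter(values),
    }


# Hypothetical readings of the 'age' monitor for five agents.
ages = [12, 30, 30, 47, 61]
summary = summarise(ages)
```

Any combination of these views can then be selected by picking the corresponding keys from `summary`.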

³ Conceptually, all agents execute these stages in parallel. Technically, however, the stages are partially executed in sequence. The order in which agents perform their control loop is therefore randomised over the execution cycles to prevent order effects.
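The randomised execution order described in this note can be sketched as follows. This is a minimal illustration under stated assumptions, not the actual scheduler: `run_cycle`, `CountingAgent` and its `step` method are hypothetical names, and a uniform shuffle per cycle is assumed.

```python
import random


class CountingAgent:
    """Hypothetical stand-in for an agent; step() represents one control loop."""

    def __init__(self, name):
        self.name = name
        self.steps = 0

    def step(self):
        self.steps += 1


def run_cycle(agents, rng):
    """Run one execution cycle, visiting the agents in a freshly shuffled order."""
    order = list(agents)  # copy, so the caller's list is left untouched
    rng.shuffle(order)    # assumption: uniform random permutation per cycle
    for agent in order:
        agent.step()
    return order


rng = random.Random(0)
agents = [CountingAgent(name) for name in "ABC"]
orders = [[a.name for a in run_cycle(agents, rng)] for _ in range(5)]
```

Every agent still acts exactly once per cycle; only the sequence changes, so no agent is systematically first.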

⁴ Choosing the closest (and breaking ties randomly) is the method used in SUGARSCAPE. VUSCAPE offers the option to choose either a random one or the closest. Since a move action has no cost associated with it, we see no sound rationale for preferring the closest.
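The two selection options in this note can be contrasted in a short sketch. It assumes that the candidates are equally attractive cells given as `(x, y)` tuples and that distance is measured as Manhattan distance; the function `choose_cell` and its parameters are hypothetical and not taken from the VUSCAPE code.

```python
import random


def choose_cell(candidates, position, rng, prefer_closest=False):
    """Pick one destination among equally attractive candidate cells.

    prefer_closest=True mimics the SUGARSCAPE rule (closest, ties broken
    randomly); prefer_closest=False picks uniformly at random.
    """
    if not candidates:
        return None

    if prefer_closest:
        def distance(cell):
            # Assumption: Manhattan distance on the grid.
            return abs(cell[0] - position[0]) + abs(cell[1] - position[1])

        nearest = min(distance(cell) for cell in candidates)
        candidates = [cell for cell in candidates if distance(cell) == nearest]

    return rng.choice(candidates)


rng = random.Random(1)
closest = choose_cell([(0, 3), (2, 0), (0, -1)], (0, 0), rng, prefer_closest=True)
anywhere = choose_cell([(0, 3), (2, 0), (0, -1)], (0, 0), rng)
```

Because moving is free in this setting, the random variant gives up nothing by ignoring distance.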

⁵ For 2,500 sugar units, this means that there are 250 seeds with maximum 1, 250 with maximum 2, etc., amounting to 2,500 in total.
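The arithmetic behind the "etc." in this note can be checked with a short sketch. With 250 seeds per level, 250 × (1 + 2 + ... + k) equals 2,500 only for k = 4, so the maxima would run from 1 to 4. This upper level is inferred from the stated totals rather than quoted from the article, and the helper name `seed_maxima` is hypothetical.

```python
def seed_maxima(total_sugar, seeds_per_level):
    """Return the maxima 1..k for which seeds_per_level * (1 + ... + k) == total_sugar."""
    levels = []
    running_total = 0
    level = 0
    while running_total < total_sugar:
        level += 1
        running_total += seeds_per_level * level  # each seed at this level holds `level` units
        levels.append(level)
    if running_total != total_sugar:
        raise ValueError("total_sugar cannot be split evenly over the levels")
    return levels


levels = seed_maxima(2500, 250)   # [1, 2, 3, 4]
number_of_seeds = 250 * len(levels)  # 1000
```

The resulting 1,000 seeds hold 250 + 500 + 750 + 1,000 = 2,500 sugar units in total.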

⁶ The JAWAS software offers more methods for removing messages than were investigated in this series of experiments.

⁷ This means that within a single world execution loop, agent loop B is executed before agent loop C. Conceptually, agent B therefore receives the message from agent A before agent C does. Note that with COM1, agent C never receives the message.
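The effect described in this note can be illustrated with a small sketch. It assumes that COM1 removes a message as soon as the first listener has read it, which is what the note implies but does not spell out. The `Mailbox` class and the `deliver` helper are hypothetical, and the non-removing variant is shown only as a contrast, not as a description of COM2.

```python
class Mailbox:
    """Holds multicast messages that listeners on the same axis can read."""

    def __init__(self, remove_on_read):
        # Assumption: remove_on_read=True models the COM1 behaviour implied by the note.
        self.remove_on_read = remove_on_read
        self.messages = []

    def post(self, sender, content):
        self.messages.append((sender, content))

    def listen(self):
        heard = list(self.messages)
        if self.remove_on_read:
            self.messages.clear()  # the first listener consumes the messages
        return heard


def deliver(remove_on_read):
    """Agent A multicasts once; B's loop runs before C's within the same cycle."""
    box = Mailbox(remove_on_read)
    box.post("A", "sugar here")
    return {name: box.listen() for name in ("B", "C")}


com1_like = deliver(remove_on_read=True)
contrast = deliver(remove_on_read=False)
```

Under this assumption B hears the message and C hears nothing, whereas in the contrasting variant both listeners receive it.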

References

AXELROD, R. (1984). The Evolution of Cooperation. Basic Books, New York.

AXELROD, R. (1997). The Complexity of Cooperation: Agent-Based Models of Competition and Collaboration. Princeton University Press, New Jersey.

BINMORE, K. (1998). Review of the Complexity of Cooperation. The Journal of Artificial Societies and Social Simulation, 1(1). https://www.jasss.org/1/1/review1.html.

BUZING, P., Eiben, A., and Schut, M. (2003). Evolving agent societies with VUScape. In Banzhaf, W. and Christaller, T., editors, Proceedings of the Seventh European Conference on Artificial Life.

COLLIER, N. (2000). Repast: An extensible framework for agent simulation. Technical report, Social Science Research Computing, University of Chicago, Chicago, Illinois.

DANIELS, M. (1999). Integrating simulation technologies with swarm. In Proceedings of the Workshop on Agent Simulation: Applications, Models, and Tools, University of Chicago, Illinois.

DAVIDSSON, P. (2001). Categories of artificial societies. Lecture Notes in Computer Science, 2203.

EIBEN, A.E., and Smith, J.E. (2003). Introduction to Evolutionary Computation. Springer, Berlin, Heidelberg, New York.

EPSTEIN, J. and Axtell, R. (1996). Growing Artificial Societies: Social Science From The Bottom Up. Brookings Institution Press.

GILBERT, N. and Conte, R. (1995). Artificial Societies: the computer simulation of social life. UCL Press, London.

HALES, D. (2002). Evolving specialisation, altruism and group-level optimisation using tags. In Sichman, J., Bousquet, F., and Davidsson, P., editors, Multi-Agent-Based Simulation II. Lecture Notes in Artificial Intelligence 2581, pages 26-35. Berlin: Springer-Verlag.

JELASITY, M. and van Steen, M. (2002). Large-scale newscast computing on the internet. Technical report, Department of Computer Science, Vrije Universiteit, Amsterdam, The Netherlands.

JELASITY, M., van Steen, M., and Kowalczyk, W. (2004). An Approach to Massively Distributed Aggregate Computing on Peer-to-Peer Networks. In Proceedings of the 12th Euromicro Conference on Parallel, Distributed and Network-based Processing, pages 200-207, IEEE Computer Society.

KREBS, J. and Dawkins, R. (1984). Animal signals: Mind reading and manipulation. Behavioural Ecology: An Evolutionary Approach (Second edition), pages 380-402.

NOBLE, J. (1999). Cooperation, conflict and the evolution of communication. Journal of Adaptive Behaviour, 7(3/4):349-370.

OLIPHANT, M. (1996). The dilemma of saussurean communication. Biosystems, 37(1-2):31-38.

OLIPHANT, M. (1997). Formal Approaches to Innate and Learned Communication: Laying the Foundation for Language. PhD thesis, University of California, San Diego.

OLIPHANT, M. and Batali, J. (1996). Learning and the emergence of coordinated communication. Center for Research on Language Newsletter, 11(1).

PARKER, M. (2000). Ascape: Abstracting complexity. Technical report, Center on Social and Economic Dynamics, The Brookings Institution, Washington, D.C., USA.

PERFORS, A. (2002). Simulated evolution of language: a review of the field. Journal of Artificial Societies and Social Simulation, 5(2). https://www.jasss.org/5/2/4.html.

RAPOPORT, A. and Chammah, A. (1965). Prisoner's Dilemma. University of Michigan Press, Ann Arbor.


© Copyright Journal of Artificial Societies and Social Simulation, [2004]