Abstract

- A structure for analysing narrative data is suggested, one that distinguishes three parts in sequence: context (a heuristic to identify what knowledge is relevant given a kind of situation), scope (what is possible within that situation) and narrative elements (the detailed conditional and sequential structure of actions and events given the context and scope). This structure is first motivated and then illustrated with some simple examples taken from Sukaina Bharwani's thesis (Bharwani 2004). It is suggested that such a structure might help preserve more of the natural meaning of such data, as well as being a good match to a context-dependent computational architecture, and thus facilitate the process of using narrative data to inform the specification of behavioural rules in an Agent-Based Simulation. This suggestion solves only part of the 'Narrative Data to Agent Behaviour' puzzle: the structure needs to be combined with, and improved by, other methods, and appropriate computational architectures need to be designed to suit it.

- Keywords:

- Qualitative Data, Context, Scope, Analysis, Specification, Narrative

Introduction

- 1.1

- Agent-based modellers have always used a variety of sources to inform the design of their simulations. This paper looks at the use of narrative data for this purpose. This involves analysing transcribed accounts obtained from stakeholders and using these to inform the specification of the behavioural rules of corresponding agents within a simulation. This method goes beyond simply talking to stakeholders and using the understanding gained to inform one's modelling in an informal manner, as Moss (1998) does. It divides the process into stages: (a) conducting interviews, (b) transcribing these into text (the "narrative data"), (c) analysing this data, and finally (d) specifying the behavioural rules for a social simulation. As far as I am aware, Richard Taylor (2003) and Sukaina Bharwani (2004) were the first to do this.

- 1.2

- The advantages of doing this analysis in this staged and formalised way should be obvious. The data can be made available and examined by subsequent researchers, who may spot aspects that the original observer has missed as well as see how the sense of the stakeholder might have been changed during the process. Unlike with an informal approach, which does not work from transcribed text, a subsequent researcher can follow the chain of analysis and understand it better. This mirrors attempts to improve the documentation of simulation code and to make simulations easier to replicate and investigate. Although the analysis of natural language data is never going to be a completely formal process and relies upon the understanding of the interviewer and/or analyst, formalising the process makes it more transparent and replicable.

- 1.3

- This paper aims to formalise this approach further by suggesting some additional structure for analysing such narrative data: structure that facilitates the analysis but is also appropriate for specifying and writing the code that determines the behaviour of agents within a social simulation. This involves a three-stage analysis into what I call context, scope and narrative elements; the distinction between context and scope is the main contribution of this paper. This increased structure should make the process more transparent and systematic, but it might also cause less distortion to the original sense and make the process easier. The task of moving from natural language narrative to computer code is an extremely challenging one. This paper offers only a suggestion for one element of the whole process and will leave many 'gaps'. I hope these gaps can be filled by the contributions of other researchers, moving towards a more comprehensive method than is presented here. Thus the aim of this paper is to suggest some of the structure that might make such a project possible; by no means does it claim to be a complete solution.

- 1.4

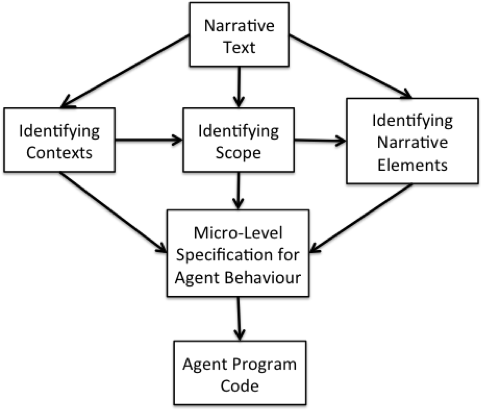

- In summary I am suggesting a particular kind of step in the

process of going from narrative data to program code, as illustrated in

Figure 1.

Figure 1. An Illustration of the Proposed Analysis Structure - 1.5

- In this paper I first discuss each of "context", "scope" and "narrative elements" separately. I then discuss how these might be used in sequence in the analysis of narrative data, illustrating this process with some examples. The paper ends by discussing some aspects of transforming the results of such an analysis into code, including the kinds of computer architecture that might make this easier, and some directions for future research.

Summary

- 2.1

- (1) Recognising the kind of situation (broadly the "context") makes all the knowledge relevant to it available for use. (2) Working out what is possible in such situations (broadly the "scope") is onerous. It is therefore done only occasionally, or else simply recalled, having been learnt previously and associated with the appropriate context. (3) Given context and scope, the "rules" or sequences that describe what is done there (the "narrative elements") can be relatively simple. Analysing narrative text in this order fits human narrative well and reveals more about the structure of those narratives. It also puts the analysis in a form suitable for implementing as program code.

Context

Context

- 3.1

- Context is a difficult word to use, for at least two reasons. Firstly, it seems to be used to refer to a number of related but different kinds of thing. Secondly, it is not always clear that there is anything that is "the context"; the word may be used only as an abstraction to facilitate discussion. I am not going to go into a full discussion of the concept and definition of context here, as I have done so in previous publications, e.g. (Edmonds 1999, 2012). However, since context is central to this paper, a few words are necessary to make my intended meaning clear.

- 3.2

- First, there is the context in terms of the external situation in which an event occurred. Thus the situation for a certain conversation might be the position latitude N53:20:45, longitude W1:59:13, at 9.45 in the morning on December 22nd 2012. In this paper I will call this the 'situation' to distinguish it. However, this is not a very useful construct since, although precise, it imparts very little useful information about what is relevant in this case. Even if one could retrieve what was around at that point in time and space, there is an indefinite number of potential factors that might be pertinent there. Thus it is almost universal to abstract from such precision and try to indicate the kind of situation one is referring to. For example, one might say "I was on the train on my way to work just before Christmas". This kind of context can be seen as the answer someone might give to the question "What was the context?" after having been told something they did not fully understand. In this sense the "context" is defined by what needs to be known about the situation to understand the specific utterance sufficiently. This kind of response is specific to the hearer's particular purpose. However, it is surprising how informative such a thin characterisation can be: we can recognise a host of details that would normally accompany such a situation, filling out the picture. In other words, we are able to recognise the kind of situation being signalled by another person on the basis of very little information.

- 3.3

- Many aspects of human cognition have been shown to be context-dependent, including language, visual perception, choice making, memory, reasoning and emotion (Tomasello 1999; Kokinov & Grinberg 2001). Context-dependency seems to be built into our cognition at a fundamental level. There seems to be a good reason for this: it acts as a mechanism for social coordination. Humans are able to co-recognise the same kinds of situation and thus bring to bear the same co-learnt norms, habits and other knowledge in those situations. Thus our cognition has a way of recognising the kind of external situation. In other words, we have some kind of internal correlate of the relevant kind of situation, which I will call the "cognitive context". It is this cognitive context that is most relevant to the process in this paper. The very facility with which we recognise different cognitive contexts, learn new knowledge with respect to them and bring to mind the relevant knowledge for them causes difficulty for us. It makes it hard to identify the cognitive contexts with respect to which people act. This is due to the richness of the information that seems to be used in such recognition, and also to the fact that this recognition is done largely unconsciously. It is this that makes context such a difficult thing to handle.

- 3.4

- Sometimes cognitive contexts are commonly recognised in a society and specially demarcated as such. In other words, the context becomes socially entrenched over time, and its recognition becomes part of the culture; I will call this a "social context". The more recognisable a situation is as a commonly recognised context, the more special kinds of protocol, norms, terms, habits and infrastructure are developed for that context. Conversely, the more a context is marked by differences of these kinds, the more recognisable it is. Examples of such social contexts include a lecture, a birthday party or an interview. We can all readily recognise such contexts and can access a rich array of relevant expectations, knowledge and habits without apparent effort. Unlike cognitive contexts in general, social contexts are easier to identify, because they are often entrenched in our common practices and institutions. It is social contexts that are of the greatest interest here. Also, although we are frequently unconscious of which cognitive context we are assuming, we are very sensitive to violations of social contexts. That is, we notice when the commonly accepted norms, expectations, habits etc. are not adhered to by others, or when others seem to have identified the situation as a different social context from oneself.

- 3.5

- To summarise, cognitive context is the cognitive correlate of a kind of situation that is recognised by our brains (largely unconsciously). It is associated with the set of knowledge (norms, expectations, habits etc.) that is relevant to that kind of situation. Some contexts are co-learnt by a group, so that its members all recognise the same kind of situation as the same context and hence bring to bear similar sets of knowledge about it. Social contexts are those so entrenched within a culture that they have agreed names and people can consciously identify them.
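The idea that recognising a context brings a whole bundle of knowledge to mind can be illustrated with a toy example. The following Python sketch treats each social context as a set of cues plus associated knowledge and picks the best-matching context for a partial set of observed cues. It is only a sketch under strong assumptions. The cue sets, the `Context` class, the `recognise` function and the use of Jaccard overlap as the matching score are all hypothetical, illustrative choices. Real cognitive context recognition draws on far richer, largely unconscious information.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    """A hypothetical social context: the cues that signal it and the knowledge it brings to mind."""
    name: str
    cues: frozenset
    knowledge: dict = field(default_factory=dict)

def recognise(cues_seen: set, contexts: list) -> Context:
    """Return the context whose cues best overlap the observed cues (Jaccard similarity)."""
    def score(ctx: Context) -> float:
        union = ctx.cues | cues_seen
        return len(ctx.cues & cues_seen) / len(union) if union else 0.0
    return max(contexts, key=score)

# Illustrative contexts and cues (assumed, not taken from any data).
LECTURE = Context("lecture", frozenset({"rows of seats", "speaker at front", "slides"}),
                  {"norm": "stay quiet while the speaker talks"})
PARTY = Context("birthday party", frozenset({"cake", "balloons", "music"}),
                {"norm": "greet the person whose birthday it is"})

ctx = recognise({"slides", "rows of seats", "coffee"}, [LECTURE, PARTY])
print(ctx.name, "->", ctx.knowledge["norm"])  # lecture -> stay quiet while the speaker talks
```

Here two partial cues are enough to retrieve the lecture context together with its norm. This mirrors, very crudely, how a thin characterisation of a situation can fill out the details.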

Scope

- 4.1

- Scope is a context-like construct, but one I wish to distinguish from context in this analysis. Scope, in general, is the set of conditions that demarcates when some model or piece of knowledge holds. Here I use the label "scope" to indicate the set of what is and is not possible in any particular situation. Thus when entering the context of a lecture one may assess where it is possible to sit. It may be very crowded, so that it is not possible to slip in at the back, and one might have to sit on the steps rather than on a seat. Scope is thus something one assesses within a context, since its assessment will often depend upon context-dependent information (such as what is socially acceptable in a lecture, which may rule out vaulting over the seats).

- 4.2

- Whilst the recognition of cognitive context and the access to the associated knowledge is rapid and automatic, working out what is and is not possible in any situation is computationally onerous[1]. This is why humans do such calculations only rarely. For the most part they rely on past assessments of scope to frame decision making rather than checking these frequently. Indeed, it seems likely that a number of heuristics are at play to make assessments of scope feasible. These include not working scope out explicitly but relying on a learning process of trial and error, or attending to scope only when meeting new kinds of situation or when something has gone wrong in an unexpected manner. If Luhmann (1984) is right, a major function of social institutions is to simplify calculations like these for their participants. It is very plausible that social contexts evolve so as to keep scope more stable inside a context. This would mean that some of the knowledge that comes from a social context is about what one can assume will always be the same. In this case people can learn much about the scope for that context and need not continually re-evaluate it. For example, in a court case, reckoning about what is and is not possible is central to the activity and is thought about consciously. However, the rules, norms and habits that are pertinent in this context are unconscious and given: they serve to limit what kinds of possibility there are at each stage and to ensure that possibilities are increasingly constrained as one approaches the verdict.
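One heuristic described above is to assess scope only when meeting a new kind of situation, or after something has gone wrong unexpectedly. This can be sketched as a cache keyed by context. In the minimal Python sketch below, the `ScopeCache` class, the `assess` stand-in and the `surprised` flag are hypothetical. The onerous assessment runs once per context and is otherwise simply recalled.

```python
class ScopeCache:
    """Hypothetical sketch: assess scope once per context and reuse it until a surprise."""

    def __init__(self, assess_scope):
        self.assess_scope = assess_scope  # costly function: context -> set of possible actions
        self.cache = {}                   # context -> last scope assessment
        self.assessments = 0              # how many times the costly assessment was run

    def scope(self, context, surprised=False):
        # Re-assess only for a new kind of situation or after something unexpected.
        if surprised or context not in self.cache:
            self.cache[context] = self.assess_scope(context)
            self.assessments += 1
        return self.cache[context]

def assess(context):
    # Stand-in for the onerous work of deciding what is and is not possible.
    return {"lecture": {"sit in a seat", "sit on the steps"}}.get(context, set())

cache = ScopeCache(assess)
for _ in range(5):
    cache.scope("lecture")                # assessed on the first call, recalled afterwards
cache.scope("lecture", surprised=True)    # something went wrong: re-assess
print(cache.assessments)                  # 2
```

A social context that keeps scope stable corresponds here to the cache rarely being invalidated.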

- 4.3

- To sum up, subjects may engage in some quite complex reasoning about scope, especially when encountering new contexts or when something fundamentally surprising has occurred. Between these events, however, scope may be quite stable, and it is something that can be elicited when interacting with subjects familiar with that context.

Narrative Elements

- 5.1

- Both context and scope (in the above senses) help to delimit, frame and determine the relevance of the knowledge that pertains to them. Sometimes, as in habit, the context may simply trigger action without much thought. Sometimes the scope of a situation will leave very few possibilities for action open to a subject, effectively determining action. In other cases, however, individuals may reason about what action to take (taking the cognitive context and scope considerations as given). As Simon (1947) observed, within very regular and constrained environments (office situations) people's reasoning is better characterised as 'procedural' than as approximating any kind of ideal (what he called 'substantive') rationality. That is, people have a set of interconnected but fairly simple rules that they use if circumstances are as they expect them to be. This was also a lesson from the field of "Expert Systems", which attempted to elucidate rules for how domain experts behaved. The rules discovered were surprisingly simple but, of course, this was only within a settled kind of situation with fairly constrained goals. In a similar vein, Gigerenzer and Goldstein (1996) identified a host of fairly simple heuristics that are effective in our daily lives[2]. I call these simple rules or known sequences "narrative elements", because they make up the bulk of the explicit narratives reported by subjects.

- 5.2

- The simplest and most common structure for expressing these procedural rules is a conditional: "if this (some condition) then this (some consequence, action or calculation)". Such conditionals can be used either to represent a decision point for the agent or to represent some of the causation within a situation. However, there are other structures that are perhaps so obvious that one tends to overlook them. These include sequencing ("this follows that"), conjunction ("this … together with this"), alternatives ("this or else that"), and simple expectations about the situation ("this" or "not that"). Such local rules look something like a logic[3], and together they determine what a person might do. On the whole, though, they are not manipulated in very complex ways, except where a re-evaluation of possibilities is required (as, for example, in debugging code). Rather than relying on complex reasoning, humans often seem to prefer other tactics such as habit, imitation, or trial-and-error learning. For example, when faced with thousands of food choices in a supermarket we do not make extensive pairwise price-utility comparisons. Instead we constrain our own choices via habit and use alternatives to comparison such as social imitation. Only in very limited situations (e.g. we have decided to buy baked beans and now wish to work out which brand has less sugar) do we resort to detailed comparison and complex reasoning.
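The constructions listed above (conditional, sequencing, alternatives) could be represented as small composable rule structures. The following Python sketch is one possible encoding, assuming that a situation is a dictionary of facts and that a narrative element yields a list of actions. The class names `If`, `Seq` and `OrElse` and the supermarket facts are hypothetical. Conjunction and expectations are omitted for brevity.

```python
from dataclasses import dataclass
from typing import Callable, Union

@dataclass
class If:
    """Conditional: 'if this then this'."""
    condition: Callable[[dict], bool]
    then: "Element"

@dataclass
class Seq:
    """Sequencing: 'this follows that'; every step is run in order."""
    steps: list

@dataclass
class OrElse:
    """Alternatives: 'this or else that'; the fallback is used if the first yields nothing."""
    first: "Element"
    fallback: "Element"

Element = Union[If, Seq, OrElse, str]  # a bare string stands for a primitive action

def run(element: Element, state: dict) -> list:
    """Return the actions a narrative element produces in the given state."""
    if isinstance(element, str):
        return [element]
    if isinstance(element, If):
        return run(element.then, state) if element.condition(state) else []
    if isinstance(element, Seq):
        return [action for step in element.steps for action in run(step, state)]
    if isinstance(element, OrElse):
        return run(element.first, state) or run(element.fallback, state)
    raise TypeError(f"unknown narrative element: {element!r}")

# Supermarket example: habit first, imitation as fallback, detailed comparison only when triggered.
shopping = Seq([
    OrElse(If(lambda s: s["usual_brand"] is not None, "buy usual brand"), "copy what others buy"),
    If(lambda s: s["checking_sugar"], "compare sugar content"),
])
print(run(shopping, {"usual_brand": None, "checking_sugar": True}))
# ['copy what others buy', 'compare sugar content']
```

The structures are deliberately shallow. This reflects the observation that such rules are rarely manipulated in complex ways.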

- 5.3

- The best (or most natural) way of representing these narrative elements is not entirely clear. Perhaps the argumentation framework of Toulmin (2003) would be helpful here, or perhaps we need more of a narrative structure such as that of Abell (2003). This is a fruitful area for future research. This paper focuses only on the distinction between context, scope and narrative elements, and on its necessity and usefulness. I hope that others will help improve the method for coding these elements into computational structures and identify further structure for other parts of the narrative-to-code translation puzzle.

CSNE Analysis

- 6.1

- Thus, to a first approximation, the proposal is that narrative data be analysed for the following kinds of element/referent: context, scope and narrative elements (CSNE). These correspond to different aspects of the narrative, namely relevance, applicability, and the detail of the narrative steps. The analysis of narrative data should first seek clues as to context, then indications of scope (given the hypotheses as to the relevant context), and finally the base narrative elements (given the contexts and scopes identified), such as antecedents and consequents or conditionals. These are summarised in Table 1.

Table 1: The three main aspects in the CSNE framework and their corresponding properties.

| CSNE Aspect | Assumptions | Focus / Properties |
|---|---|---|
| Context | That the kind of situation can be recognised | Relevance |
| Scope | That the appropriate cognitive context is already identified | Applicability |
| Narrative Element | That the context is identified and scope considerations worked out or recalled | Cause-effect pairs, decision points, sequences, alternatives etc. |
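The ordering assumptions in Table 1 can be made explicit in a coding record. Such a record would refuse scope judgements before a context has been identified, and narrative elements before scope has been considered. The `CodedExcerpt` class below is a hypothetical Python sketch, not a tool used in this paper. In particular, reading "scope considerations worked out or recalled" as "at least one scope judgement recorded" is an assumption.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class CodedExcerpt:
    """Hypothetical record for one coded chunk of narrative text, following Table 1."""
    text: str
    context: Optional[str] = None
    scope: list = field(default_factory=list)
    elements: list = field(default_factory=list)

    def code_scope(self, judgement: str) -> None:
        # Scope assumes the appropriate context has already been identified.
        if self.context is None:
            raise ValueError("identify the context before coding scope")
        self.scope.append(judgement)

    def code_element(self, element: str) -> None:
        # Narrative elements assume context identified and scope worked out or recalled.
        if self.context is None or not self.scope:
            raise ValueError("code context and scope before narrative elements")
        self.elements.append(element)

# Coding Quote 1 in CSNE order, as in Table 2.
q1 = CodedExcerpt(text="Quote 1")
q1.context = "survival"
q1.code_scope('no "killer" profit available')
q1.code_element("prices for wheat may increase in near future")
q1.code_element("price increases can be followed by a sudden collapse")
```

Because each stage is checked against the previous one, a later decision to revise the context naturally prompts re-examining the scope and narrative elements coded under it.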

- 6.2

- The hypothesis put forward here is that using this kind of structure will (a) improve the 'fit' to natural accounts of occurrences and so distort them less; (b) reveal more useful information about behaviour; (c) produce analyses that are more amenable to programming agent behaviour (given a suitable architecture); and (d) thus make the assumptions used more transparent, since these can be associated with the level at which they are made (context, scope or narrative element). I have motivated the distinctions underlying the approach above, and give a few worked examples below. Ultimately, however, these are pragmatic hypotheses: either they will help extract usable specifications for agent behaviour from narrative data or they won't.

- 6.3

- Identifying context is tricky because we normally do this unconsciously. However, we do seem to have an innate ability to recognise the appropriate contexts where these are (a) social and (b) entrenched within a society of which we have sufficient experience. Moreover, people seem to be particularly sensitive to misidentified context. This suggests an approach where contexts are first coded based on the intuitions of the analyst and then repeatedly re-confronted with the data and the opinions of others (e.g. the sources of the data).

- 6.4

- Once the implicit contexts for chunks of text are coded, the data should next be scanned for indications as to judgements of possibility with respect to the context. It should be borne in mind that some of the constraints concerning what is possible will be implicit (e.g. that some things are not acceptable to say when greeting a stranger and can only be said once the parties have got to know each other). Such judgements might be accompanied by some complex reasoning, but in other cases they might come from previous experience, or simple assumption.

- 6.5

- Finally, with the identified contexts and scope judgements in mind, the narrative elements can be identified and coded. This is the more straightforward stage, since these are those 'foreground' elements that people talk about explicitly — those that they consciously bring to mind. There might be a whole menu of constructions that an analyst might look for, but not all narrative elements will fit these and thus some flexibility, in terms of allowing new constructions, should be maintained.

- 6.6

- Any imposed structure or theory can limit and/or bias subsequent analysis; on the other hand, being completely free of theory or structure is impossible. I am suggesting that the CSNE structure is relatively 'benign' in this respect because it 'fits' how humans think. However, this also suggests that the sort of approach championed by Grounded Theory (GT)[4] might be appropriate, since this seeks to avoid constraining analysis by high theory (where possible), instead letting the evidence lead[5]. As in GT, I would expect that the emerging analysis will need to be repeatedly confronted with the data to check and refine it. For example, suppose the analysis assumes a particular kind of social context for some text, and this results in a bad 'fit' for several of the identified scope and narrative elements. This may suggest revisiting and reconsidering the context identification. A single 'oddity', by contrast, might indicate a misfit at the level of a narrative element.
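The rule of thumb above could be written down explicitly as a check for analysts: several misfits point to the context, while a single oddity points to a narrative element. This Python sketch is hypothetical. The `diagnose` function is illustrative, and the threshold of three misfits for "several" is an assumed value, not one given in the text.

```python
def diagnose(misfits: list, several: int = 3) -> str:
    """Hypothetical rule of thumb: where to look when coded scope and narrative
    elements fit the data badly under an assumed context."""
    if len(misfits) >= several:
        # Several bad fits under one context: the context identification is suspect.
        return "revisit context identification"
    if misfits:
        # Isolated oddities: likely misfits at the narrative-element level.
        return "revisit narrative elements: " + ", ".join(misfits)
    return "coding fits the data"
```

In practice such a threshold would itself be a judgement for the analyst, revised as the analysis is repeatedly confronted with the data.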

An Illustrative Example

- 7.1

- Here we illustrate the use of the CSNE structure in the analysis of narrative data. The examples of such data are taken from Bharwani (2004) — they are a series of excerpts from an interview with a particular farmer on the various farming decisions he is faced with. The examples here are not presented in the same order as they are in Bharwani (2004) and I am selecting from them to illustrate different aspects of the CSNE approach.

- 7.2

- The first example considers the effect of people switching from rice to wheat and thus concerns an emerging change in the world market for wheat.

(Quote 1) "The one conundrum here is that there are more people in the East who want to get away from rice and gradually upgrade to more wheat allied products, that may alter the value of the end product to us. You see the worst thing that has happened to us worldwide is the collapse of the Eastern economy… over the past 4 or 5 years, but it is coming back again now and that actually may help us again. It is a great shame because we were getting into the Eastern markets and it was beginning to grow and suddenly it collapsed." (Bharwani 2004, p.113)

- 7.3

- From reading the complete selection of quotes in Bharwani (2004), I would guess at the following cognitive contexts by imagining the sort of situation that the farmer is talking from. Clearly these are merely hypotheses and could be wrong, but they are a starting point.

- "survival" — things are continually getting worse and the primary goal is to keep in farming, battling against nature, the economy and regulation

- "comfort" — conditions are comfortable with no immediate survival threat, one could stop worrying so much and take things a little easy

- "entrepreneur" — one is looking to make a big profit, taking risks if necessary

- 7.4

- The above quote is firmly from the survival context. In other contexts, people moving from rice to wheat might have been interpreted as implying higher prices and hence an opportunity. Here, however, it is interpreted just as a trap, citing the earlier collapse of the Eastern economy. The quote implicitly acknowledges that prices could increase due to this change in consumption in the East. It then anticipates that this might be followed by a collapse, which would pose a risk if resources were committed on the basis of rising prices. The survival context implies several facts about what possibilities for action there might be; that is, it constrains the scope (what is and is not possible in a situation). In this case we know that (a) one is not going to be able to discover a "killer" profit, and (b) to survive one has to focus on margins, looking for small improvements that will allow one to break even. Once the context and scope have been identified and stripped away, this quote seems to boil down to two observations: (1) prices for wheat might increase; (2) increases in price can be followed by a sudden collapse.

Table 2: CSNE Analysis of Quote 1.

| Context | Scope | Narrative Elements |
|---|---|---|
| Survival | No "killer" profit available | Prices for wheat may increase in near future |
| | | Price increases can be followed by a sudden collapse |

- 7.5

- The second quote imagines the situation under possible climate change.

(Quote 2) "I, as a farmer would imagine that if the summers were warmer and the autumns were wetter you would have an earlier harvest, and therefore all that would happen is that the harvest would come early and your drilling [cultivation of ground for sowing seeds] would come early so that you would still be able to establish your winter crops before the rain really started. If the rains were really early then we would have to resort to spring sown varieties… The net effect would be that you would be drilling as soon as you possibly could which may be later than normal, but because the weather is warmer that would make up for lost time, so harvest would still be about the same time I would suspect…. If the autumn was continuously wet, wet, wet and we were under water, I mean that is a serious change - that is like this year, every year. If it was like this year every year, then yes there could be a problem." (Bharwani 2004, p.112)

The last sentence implies the survival context again: the possible changes in weather do not indicate any positive possibilities, only potential threats. The quote documents in detail some of the complex reasoning about scope. The scope is first considered under the conditions "if the summers were warmer and the autumns were wetter". These conditions are then refined by adding "If the rains were really early" and then "If the autumn was continuously wet, wet, wet and we were under water". Each of these implies different narrative elements.

Table 3: CSNE Analysis of Quote 2.

| Context | Scope | Narrative Elements |
|---|---|---|
| Survival | Summers warmer and autumns wetter | Harvest comes early |
| | | Therefore drilling needs to be early |
| | Summers warmer and autumns wetter + rains really early | Need spring sown variety |
| | | Therefore drilling as soon as possible |
| | | Probably harvest at the same time due to warmer weather |
| | Summers warmer and autumns wetter + autumn continuously wet and we were under water | If wet like this every year then there is a serious problem |

- 7.6

- In the next excerpt the farmer reflects upon his own and others' attitudes; the text implies that he has just been asked whether the aim is to maximise profit.

(Quote 3) "Well not necessarily some people would say, now you see we have often had this conversation around this table. Some people don't want to maximize profit…. They are happier to take a slightly easier, lower level approach and have an easier life, and not make quite so much money…. And I can relate to that... But because I'm a tenant I don't own my own land… Everything we farm is rented and therefore we have an immediate cost, the first cost we meet is to our landlord and that tends to go up." (Bharwani 2004, p.127)

In the piece the farmer imagines how another farmer might feel, one who owns their own land and hence is more secure, inhabiting a comfort context. However this is not possible for him, being a tenant. This implies he has to maximise profit to survive and meet the immediate cost of possibly rising rent. The theoretical possibility of the "entrepreneur" situation is implicitly ruled out.

Table 4: CSNE Analysis of Quote 3.

| Context | Scope | Narrative Elements |
|---|---|---|
| Comfort | Does not have to maximise profit to survive | Can take life easier |
| | | Does not make quite so much money |
| Survival | Has immediate cost (rent) which tends to go up | Has to maximise profit to survive |

- 7.7

- The next quote I consider is where the farmer considers using the futures market to fix, ahead of time, the price he would get for his crops.

(Quote 4) "Trading in Futures is always an option but I think it is almost a different ball game that is not really farming as such, but… it's hedging your bets on guaranteeing a price ahead. So if you think you can make money on a particular price level and you can see that on a Future's price, say next April, next May, next June, and so on, you could hook on to that and guarantee you are going to get that price but if the market goes up of course you lose out, course if the market goes down you've won. So you know it's 50/50 one way or the other. Not many farmers do it - there was a period when a lot of us tried it and… it wasn't a vast success. I think I have tried it three times now. This is on Options - three times I've done it, and I think twice we have missed out and once we won out, so there's not much in it." (Bharwani 2004, p.145)

Note that this is not considered from a comfort point of view (where one might simply not worry about the final price) or from the entrepreneur point of view (making a profit via speculation). Instead it is considered, again, from the survival viewpoint. Had this been within an entrepreneur context it might have led to quite a different analysis.

Table 5: CSNE Analysis of Quote 4.

| Context | Scope | Narrative Elements |
|---|---|---|
| Survival | Fix price on Futures Market | If market drops over the year you gain |
| | | If market rises over the year you lose |
| | | No gain in long term |

- 7.8

- The final quote I consider is about other farmers.

(Quote 5) "We used to grow onions here years ago and I have been wondering whether we should go back into onions, but so is every other Tom, Dick and Harry, and I think that will be the next thing that is over done… So, got to be careful there." (Bharwani 2004, p. 120)

Being in the pessimistic survival context, he expects that if there is any opportunity everyone else will also see it, and hence the price advantage will disappear (although this will be more relevant to a risky crop with smaller demand, like onions). Growing onions is a possibility because he already has experience of growing them.

Table 6: CSNE Analysis of Quote 5.

| Context | Scope | Narrative Elements |
|---|---|---|
| Survival | Could try growing onions | If it is good to grow onions, maybe other farmers think so too and the price would be poor; thus it needs to be done with caution (avoiding excessive risk) |

- 7.9

- All these quotes are from a farmer reflecting on his (he is reported as male) everyday practice, and thus are not situated within the various seasons of the year. If one were simulating a month-by-month process, it may be that the seasons also help determine the immediate context as well as the general attitude of the farmer. In this case, however, the quotes do not indicate this; rather, they take a year-long view, taking into account the whole cycle. Indeed, many of the quotes involve consideration of the year taken as a whole.

- 7.10

- Looking at the above, very sketchy, analysis, I hope it is starting to become clear how a simulation of many farmers might be programmed. The narrative elements clearly talk about different stages of the yearly cycle and their timing, so at least a monthly granularity needs to be considered. The farmer obviously considers the response of other farmers to opportunities. A multi-agent model therefore makes sense, with each agent looking to the innovations and decisions of others as part of its decision-making. Different kinds of situation relating to kinds of risk dominate the thinking and might form the backbone of the model. This would involve some rich but "fuzzy" mechanism determining whether and when farmers switch between survival, comfort and entrepreneurial contexts. For example, there may be evidence that switching to survival is fairly rapid, but that it takes many good years to switch to comfort, and more if one is a tenant farmer. The quotes do not present any evidence that the farmer has been in an entrepreneurial context, though it is possible that either newcomers to farming or very comfortable farmers might be. Clearly the choices made by a farmer are perceived as being tightly constrained by what is possible. This is therefore an important part of behaviour determination and probably should be part of a simulation.
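The asymmetric switching between contexts suggested above might be sketched as a small state machine per farmer agent. In this Python sketch the `Farmer` class is hypothetical. The thresholds (three consecutive good years for an owner, five for a tenant) are assumed values chosen only for illustration. The entrepreneurial context is left out because the quotes give no evidence about it.

```python
from dataclasses import dataclass

@dataclass
class Farmer:
    """Hypothetical sketch of asymmetric switching between 'survival' and 'comfort'."""
    tenant: bool
    context: str = "survival"
    good_years: int = 0

    def update(self, good_year: bool) -> str:
        if not good_year:
            # Switching into survival is assumed to be rapid: one bad year suffices.
            self.context, self.good_years = "survival", 0
            return self.context
        self.good_years += 1
        # Assumed thresholds: several good years to relax, more for a tenant.
        if self.good_years >= (5 if self.tenant else 3):
            self.context = "comfort"
        return self.context

owner, tenant = Farmer(tenant=False), Farmer(tenant=True)
for _ in range(4):
    owner.update(True)
    tenant.update(True)
print(owner.context, tenant.context)  # comfort survival
owner.update(False)
print(owner.context)                  # survival
```

A richer, "fuzzier" mechanism could replace the fixed counters, but the asymmetry itself is the point being illustrated.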

- 7.11

- The simulation in Bharwani (2004) took a particular framework (Bennett's 1976 theory of adaptive dynamics) and a knowledge engineering approach, looking at the factors that seem to be significant in each decision (primarily how much of each crop to grow). There were two kinds of agent: adaptive and sceptic (that is, sceptical of climate change and hence effectively non-adaptive). Given these kinds of agent, each decision is essentially a complex risk-benefit analysis that depends on the climate, the capital of the farm, etc. In contrast, the CSNE analysis suggests an extension in which farmers might change their strategies depending on their outlook on the future and on what is possible.

- 7.12

- Thus the above illustration has clearly depended on the excellent standard of elicitation and selection in Bharwani (2004), which made my task easier. Even so, it does suggest an enhanced version of the model developed there, in particular one that takes into account both context and scope considerations.

From CSNE Analysis to Program Code


- 8.1

- The overall aim is to make the relationship between the program code that defines agent behaviour within a simulation and the original narrative data as close and as transparent as possible. To this end we proposed the CSNE structure, which we hypothesise would facilitate this by making the process of analysis 'fit' better with how humans and human language work. Another step towards the same goal is to bring the computer code closer to the analysis, that is, to make the structure of the code as coherent with the structure of the analysis as possible. We now describe some preliminary thoughts on this.

- 8.2

- There are no agent-based coding schemes that provide ready structures for context recognition/use[6] and very few have anything that might help with reasoning about scope. Thus there is a need to provide these structures in the agent architecture, narrowing the 'jump' from analysis to code, just as we are trying to narrow the 'jump' from data to analysis. Although this paper is primarily concerned with the step from narrative data to CSNE analysis, it will now briefly discuss the CSNE analysis to computer code step, in order to show that a CSNE structure could also facilitate this stage of the process.

- 8.3

- Just as with the CSNE analysis of natural language, this

involves some compromises. Using high-level structures in programming

eases the task of program construction, but it also can make the

understanding and verification of code harder, because the micro-level

steps are not directly specified and the high-level structures might

encode assumptions that programmers do not fully understand. However,

to a large extent it should be the computer science that adapts to suit

the kind of accounts that people make of their behaviour rather than

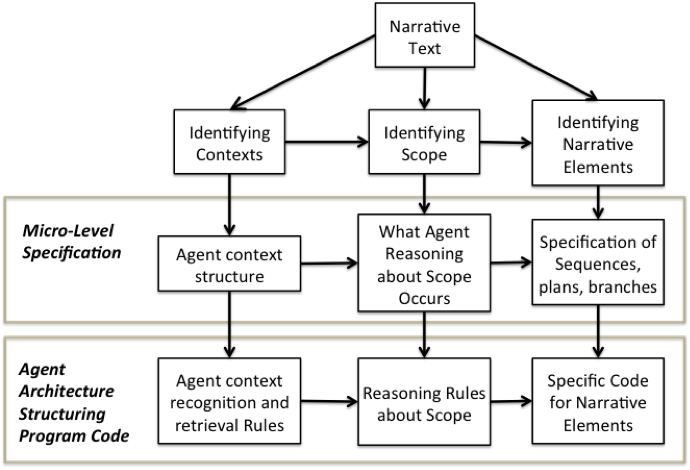

the other way around. Figure 2

is an elaboration of Figure 1

with an outline of how the CSNE analysis might relate to micro-level

program code. Ideally, as in Figure 2,

the different parts of the analysis (context, scope and narrative

elements) should inform different parts of the specification of the

code. Of course, this is only possible if the agent architecture does,

in fact, keep these aspects separate and only easy if the architecture

provides algorithmic support for them. Currently there are no

architectures that are so structured, but an early attempt to build-in

context-sensitivity into an agent is in Edmonds and Norling (2007).

Work is under way to produce such architectures building on existing

architectures (BDI) (Norling

2004) and past experience in developing architectures that

suit the task of social simulation (Moss

et al. 1998; Edmonds

& Wallis 2002).

Figure 2. How CSNE analysis might relate to specification and code - 8.4

- In the absence of a ready architecture that achieves such a clean separation, I will give an illustration of how some of the information analysed in the previous section might give rise to specifications for agent behaviour.
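Even without such an architecture, the separation shown in Figure 2 can be imitated in ordinary code. The Python skeleton below is a hypothetical illustration, not an existing framework. The functions `recognise_context`, `feasible` and `decide`, the perception keys `savings` and `buffer`, and the crop costs are all invented. Each decision proceeds in three steps:

- recognise a context;
- filter actions by what is possible (scope);
- only then consult the narrative elements for that context.

```python
from typing import Dict, List

# A perception is a hypothetical bundle of numeric observations.
Perception = Dict[str, float]

# Hypothetical action costs, used only by the scope check below.
ACTION_COSTS: Dict[str, float] = {"grow_onions": 5, "grow_wheat": 2, "fallow": 0}

# Narrative elements: for each context, actions in order of preference.
NARRATIVE_ELEMENTS: Dict[str, List[str]] = {
    "survival": ["grow_wheat", "fallow"],
    "comfort": ["grow_onions", "grow_wheat", "fallow"],
}


def recognise_context(p: Perception) -> str:
    """Context: a heuristic classification of the kind of situation."""
    return "comfort" if p["savings"] >= p["buffer"] else "survival"


def feasible(action: str, p: Perception) -> bool:
    """Scope: is this action possible at all in the current situation?"""
    return ACTION_COSTS[action] <= p["savings"]


def decide(p: Perception) -> str:
    """Context first, then scope, then the narrative elements for that context."""
    context = recognise_context(p)
    for action in NARRATIVE_ELEMENTS[context]:
        if feasible(action, p):
            return action
    return "fallow"  # fallback if nothing in the narrative is feasible


print(decide({"savings": 10, "buffer": 8}))  # grow_onions
print(decide({"savings": 4, "buffer": 4}))   # grow_wheat (onions out of scope)
print(decide({"savings": 1, "buffer": 8}))   # fallow
```

Keeping the three functions apart means each can be traced back to a different part of the CSNE analysis and revised independently.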

- 8.5

- Firstly I look at the information regarding the contexts.

Here the relevant contexts seemed to be based in the perception of

comfort/risk — it might have been the place or the season but this did

not come out of my reading of the narrative examples as a contextual

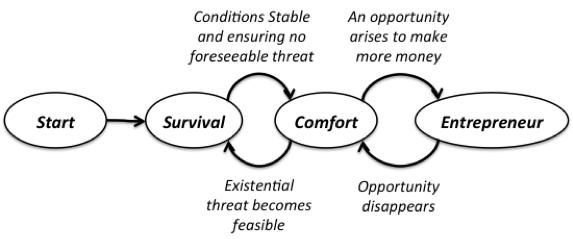

feature. The structure and examples suggest that the "survival" context

is the appropriate default context for this farmer, with transitions to

"comfort" or even "entrepreneurial" being left to more specific

conditions (maybe only hypothetical conditions as in the above

examples). In this case this might lead to an underlying set of state

transitions as indicated in Figure 3

below.

Figure 3. Illustration of state changes between contexts - 8.6

- Depending on which state the agent is in, this would bring into play different sets of rules and knowledge that were more relevant to that context[7].
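One simple way to realise this is to index rule sets by context. This is a sketch under assumptions, not a prescribed design. In the Python below, the rule conditions and action names are hypothetical. When none of the current context's rules apply, the code falls back to the rules of the other contexts, following the suggestion in note 7.

```python
from typing import Callable, Dict, List, Optional, Tuple

Situation = Dict[str, bool]
# A rule pairs a condition on the situation with the action it recommends.
Rule = Tuple[Callable[[Situation], bool], str]

# Hypothetical rule sets; each context brings its own rules into play.
RULES: Dict[str, List[Rule]] = {
    "survival": [
        (lambda s: s["cash_short"], "sell_stock_early"),
        (lambda s: s["wet_autumn"], "drill_early"),
    ],
    "comfort": [
        (lambda s: s["onion_price_high"], "trial_onions"),
    ],
}


def choose_action(context: str, situation: Situation) -> Optional[str]:
    """Fire the first matching rule of the current context; if none match,
    try the rules of the remaining contexts (cf. note 7)."""
    order = [context] + [c for c in RULES if c != context]
    for ctx in order:
        for condition, action in RULES[ctx]:
            if condition(situation):
                return action
    return None  # no rule in any context applies


s = {"cash_short": False, "wet_autumn": True, "onion_price_high": False}
print(choose_action("survival", s))  # drill_early
print(choose_action("comfort", s))   # drill_early, via fallback to survival rules
```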

- 8.7

- Next we turn our attention to the specification of what happens given one of these contexts, in this case the default context of "survival". As an example I take the analysis of Quote 2 (Table 3). Here the reasoning is fairly simple, which may well not be so in other cases. A reasoning rule for this might look like that in Table 7.

Table 7: Simple rules for reasoning about scope.

IF summers are warmer and autumns wetter than at present
  IF rains really early
    IF it is continuously wet over the year for several years
      THEN there is a serious problem (take some emergency action)
    ELSE obtain spring-sown variety, drill as early as possible but harvest at the same time as normal
  ELSE drill and harvest early
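The rule in Table 7 maps almost line for line onto a nested conditional. In this minimal Python sketch, the hypothetical `ClimateOutlook` record holds the farmer's beliefs about the three conditions as booleans. Returning `None` when the outer condition is false is an assumption, since the table does not say what happens in that case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClimateOutlook:
    """Hypothetical record of the farmer's beliefs about the rule's conditions."""
    warmer_summers_wetter_autumns: bool  # compared with present conditions
    rains_really_early: bool
    wet_all_year_for_several_years: bool


def scope_rule(o: ClimateOutlook) -> Optional[str]:
    """Nested IF/ELSE structure of Table 7; None means the rule does not fire."""
    if not o.warmer_summers_wetter_autumns:
        return None
    if o.rains_really_early:
        if o.wet_all_year_for_several_years:
            return "serious problem: take some emergency action"
        return ("obtain spring-sown variety, drill as early as possible, "
                "harvest at the normal time")
    return "drill and harvest early"


print(scope_rule(ClimateOutlook(True, False, False)))  # drill and harvest early
```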

- 8.8

- The format for the action parts of the rules will partly depend upon the set-up and complexity of the environment that agents act within. There may be additional general conditions on actions, such as whether one is able to afford a spring-sown variety. These might not emerge from the analysis of a particular farmer's narrative, especially if this contingency is unlikely for the particular farmers being modelled. Thus the analysis of a particular narrative will often need to be supplemented with knowledge that the narrator assumes to be common. The parts of the specification that correspond to the Narrative Elements should be something close to plans or routines. They may involve sub-cases and simple reasoning, but essentially they stand for a well-established or explicitly reasoned pattern of behaviour. In practice, routines may be composed of habitual behaviour and require little in terms of reasoning or adaptation. They may, however, be interrupted by a sudden change in what is possible (for example, one that makes the routine impossible) or by a change in context. Thus the CSNE structure helps to isolate the more stable parts of a routine and to explain when it may be disrupted.
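The interruption of routines described above might be sketched as follows. This is an illustration under assumptions, not an existing API: `run_routine`, its parameters and the step names are hypothetical.

- The `is_possible` predicate stands in for scope, including general conditions (such as affordability) that the narrative may leave implicit.
- `current_context` reports the agent's context before each step.

```python
from typing import Callable, List, Tuple


def run_routine(steps: List[str],
                is_possible: Callable[[str], bool],
                current_context: Callable[[], str],
                routine_context: str) -> Tuple[List[str], str]:
    """Carry out a routine step by step.

    Returns the steps completed and why the routine ended: 'completed',
    'context_changed' (hand back for re-deliberation) or 'out_of_scope'
    (the next step is no longer possible)."""
    done: List[str] = []
    for step in steps:
        if current_context() != routine_context:
            return done, "context_changed"
        if not is_possible(step):
            return done, "out_of_scope"
        done.append(step)
    return done, "completed"


routine = ["buy_spring_seed", "drill_early", "spray", "harvest_normal_time"]
# Hypothetical general condition: the farmer cannot afford spraying this year.
print(run_routine(routine, lambda s: s != "spray", lambda: "survival", "survival"))
# (['buy_spring_seed', 'drill_early'], 'out_of_scope')
```

The routine itself stays a plain list of steps; only the two checks at each step connect it to scope and context.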

- 8.9

- It must be stressed that narrative data will rarely provide enough information to specify program code on its own. Agent-based models are often complex, with many possible options, several component processes and many parameters. For this reason they require a lot of evidence/data in order to plausibly constrain these choices. Minimally, this should include bringing evidence to bear at both the micro- and macro-levels (Moss & Edmonds 2005a). Also, it is a fundamental principle that evidence should not be ignored without a VERY good reason (Moss & Edmonds 2005b), so if other evidence is available it should also be applied. A corollary is that as much evidence, from as many sources as possible, should be applied. The point of this paper (and others in the special section) is that qualitative data can be usefully deployed to inform the design of agent-based social simulations, and hence should be used. However, there are other sources of evidence, such as the structure and nature of the simulated entities themselves (e.g. that people are part of families, and families can inhabit houses). Such common knowledge (which is rarely articulated) might be used to further constrain the computer code and to provide an additional check on its consistency. Ontologies and ontological frameworks could help do this, and further aid the processes of narrative data analysis and the production of computer code (e.g. Polhill & Gotts 2009)[8].
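As a toy illustration of using such common knowledge as a consistency check, the Python below tests two constraints on a snapshot of simulation state. It is far simpler than an ontological framework such as that of Polhill & Gotts (2009). The `World` structure, its field names and the particular constraints are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class World:
    """A toy snapshot of simulation state (all names hypothetical)."""
    families: Set[str]
    houses: Set[str]
    person_family: Dict[str, str]  # person -> family they belong to
    family_house: Dict[str, str]   # family -> house it inhabits (optional)


def ontology_violations(w: World) -> List[str]:
    """Check two common-knowledge constraints: every person belongs to a
    known family, and any family that inhabits a house inhabits a known one."""
    problems: List[str] = []
    for person, fam in sorted(w.person_family.items()):
        if fam not in w.families:
            problems.append(f"{person} belongs to unknown family {fam}")
    for fam, house in sorted(w.family_house.items()):
        if fam not in w.families:
            problems.append(f"unknown family {fam} is recorded in a house")
        if house not in w.houses:
            problems.append(f"family {fam} inhabits unknown house {house}")
    return problems


w = World(families={"F1"}, houses={"H1"},
          person_family={"ann": "F1", "bob": "F2"},
          family_house={"F1": "H9"})
print(ontology_violations(w))
# ['bob belongs to unknown family F2', 'family F1 inhabits unknown house H9']
```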

Concluding Discussion


- 9.1

- I have not been able to describe a comprehensive and complete method for determining what program code might be indicated by some narrative data. That is an extremely complex and difficult task that will take a lot more research and certainly could not be packed into a single paper like this. However, I do think that what I have described is part of the solution.

- 9.2

- In terms of progress towards this goal, the advances claimed in this paper pivot upon distinguishing between context and scope in the analysis of narrative data, resulting in a CSNE structure. Firstly, I hypothesise that this distinction will allow a more natural analysis of narrative data, one that 'fits' better with how people behave. Secondly, that distinguishing between these kinds of human construct affects how behavioural rules are eventually coded, simplifying the end rules considerably. Finally, that a computer architecture could be designed into which this structure of analysis would naturally fit. I do not think there will be a 'magic bullet' that allows anything like the automatic translation of narrative data into computer code. However, I do claim that a CSNE structure (or something similar) could play a useful role in mediating between narrative data and the code that determines agent behaviour within a simulation. It could help reduce avoidable distortions of the data and make the process more open and reproducible.

- 9.3

- Clearly, however, this is only one part of the "Narrative Data to Agent Behaviour" puzzle. If we are to produce a more comprehensive method, there needs to be a synthesis of methods to develop the most effective technique possible. This will probably need to draw on a variety of approaches, such as Grounded Theory (Urquhart 2012), Conversation Analysis (ten Have 1999) or KnETs (Bharwani 2006). This should be a creative and appropriate mix, which will not occur if each approach holds inflexibly to its own traditions and shibboleths.

Acknowledgements


- This research was partially supported by the Engineering and Physical Sciences Research Council, grant number EP/H02171X/1. Many thanks to Emma Norling, Cathy Urquhart, Richard Taylor and all the participants of the Informal Workshop on the topic held in Manchester (http://cfpm.org/qual2rule/informal-workshop.html) and the ESSA conference in Warsaw, 2013, with whom I have discussed these ideas.

Notes


- 1 This difficulty is very close to that of the "frame problem" of AI (McCarthy & Hayes 1990), which is that of understanding what is amenable to change after an event and what one can assume remains constant.

2 In that they take it as obvious when the simple rules should be applied and when not. However, if one tries to write down the condition for the 'firing' of such simple rules, one finds it is very complex. What was missing from their account was the role that cognitive context plays in categorising kinds of situation, so that rules are only used where appropriate.

3 These do not correspond to a formal logic but rather express common-sense relationships and beliefs. However, the fact that these elements are situated within context and scope makes them easier to relate to a more formal representation for programming the behaviour of an agent.

4 A good introduction to Grounded Theory is Urquhart (2012).

5 As Martin Neumann suggests in this special issue of JASSS.

6 To be precise, there are some logics (McCarthy & Buvac 1998) and systems like CYC (Lenat 1995), but they are not so well suited to the "fuzzy", recognition-based contextual structures that humans seem to use.

7 Clearly it might be sensible to make this the default set of rules and knowledge. This leaves open the possibility that, if they do not apply or are not sufficient to determine action, other rules from other contexts might be brought into play.

8 One referee suggested that the CSNE structure and ontologies could "incorporate an NLP- and rule-based subsystem for processing the raw narrative into ontological elements". Whilst I think that this might be the case, I cannot comment further as I am not an expert on this.

References


- ABELL, P. (2003) The Role of Rational Choice and Narrative Action Theories in Sociological Theory: The Legacy of Coleman's Foundations. Revue Française de Sociologie, 44(2), 255–273. [doi:10.2307/3323135]

BENNETT, J. W. (1976) The Ecological Transition: Cultural Anthropology and Human Adaptation. Pergamon Press Inc., New York.

BHARWANI, S. (2004) Adaptive Knowledge Dynamics and Emergent Artificial Societies: Ethnographically Based Multi-Agent Simulations of Behavioural Adaptation in Agro-Climatic Systems. Doctoral Thesis, University of Kent, Canterbury, UK. <http://cfpm.org/qual2rule/Sukaina%20Bharwani%20Thesis.pdf>

BHARWANI, S. (2006) Understanding complex behaviour and decision making using ethnographic knowledge elicitation games (KnETs). Social Science Computer Review, 24(1), 78–105. [doi:10.1177/0894439305282346]

EDMONDS, B. (1999) The Pragmatic Roots of Context. CONTEXT'99, Trento, Italy, September 1999. Lecture Notes in Artificial Intelligence, 1688, 119–132.

EDMONDS, B. (2012) Context in Social Simulation: why it can't be wished away. Computational and Mathematical Organization Theory, 18(1), 5–21. [doi:10.1007/s10588-011-9100-z]

EDMONDS, B. & Norling, E. (2007) Integrating Learning and Inference in Multi-Agent Systems Using Cognitive Context. In Antunes, L. & Takadama, K. (Eds.) Multi-Agent-Based Simulation VII, Lecture Notes in Artificial Intelligence, 4442, 142–155. [doi:10.1007/978-3-540-76539-4_11]

EDMONDS, B. & Wallis, S. (2002) Towards an Ideal Social Simulation Language. 3rd International Workshop on Multi-Agent Based Simulation (MABS'02) at AAMAS'02, Bologna, July 2002. Lecture Notes in Artificial Intelligence, 2581, 104–124.

GIGERENZER, G. & Goldstein, D. G. (1996) Reasoning the fast and frugal way: models of bounded rationality. Psychological Review, 103(4), 650–669. [doi:10.1037/0033-295x.103.4.650]

KOKINOV, B. & Grinberg, M. (2001) Simulating Context Effects in Problem Solving with AMBR. In Akman, V., Bouquet, P., Thomason, R. & Young, R. A. (Eds.) Modelling and Using Context, Lecture Notes in Artificial Intelligence, 2116, 221–234. [doi:10.1007/3-540-44607-9_17]

LENAT, D. B. (1995) CYC: A Large-Scale Investment in Knowledge Infrastructure. Communications of the ACM, 38(11), 33–38. [doi:10.1145/219717.219745]

LUHMANN, N. (1984) Soziale Systeme. Grundriss einer allgemeinen Theorie. Frankfurt/M. (Engl.: Social Systems, Stanford University Press, 1995).

MCCARTHY, J. & Buvac, S. (1998) Formalizing context (expanded notes). In Aliseda, A., van Glabbeek, R. & Westerståhl, D. (Eds.) Computing Natural Language, pp. 13–50. CSLI, Stanford.

MCCARTHY, J. & Hayes, P. J. (1990) Some Philosophical Problems From The Standpoint Of Artificial Intelligence. Readings in Planning, 393.

MOSS, S. (1998) Critical Incident Management: An Empirically Derived Computational Model. Journal of Artificial Societies and Social Simulation, 1(4), 1: <https://www.jasss.org/1/4/1.html>.

MOSS, S. & Edmonds, B. (2005a) Sociology and Simulation: Statistical and Qualitative Cross-Validation. American Journal of Sociology, 110(4), 1095–1131. [doi:10.1086/427320]

MOSS, S. & Edmonds, B. (2005b) Towards Good Social Science. Journal of Artificial Societies and Social Simulation, 8(4), 13: <https://www.jasss.org/8/4/13.html>.

MOSS, S., Gaylard, H., Wallis, S. & Edmonds, B. (1998) SDML: A Multi-Agent Language for Organizational Modelling. Computational and Mathematical Organization Theory, 4, 43–69. [doi:10.1023/A:1009600530279]

NORLING, E. (2004) Folk psychology for human modelling: Extending the BDI paradigm. In Proceedings of the Third International Joint Conference on Autonomous Agents and Multiagent Systems, Volume 1, IEEE Computer Society, pp. 202–209.

POLHILL, J. G. & Gotts, N. M. (2009) Ontologies for transparent integrated human-natural system modelling. Landscape Ecology, 24, 1255–1267. [doi:10.1007/s10980-009-9381-5]

SIMON, H. A. (1947) Administrative Behavior: A Study of Decision-Making Processes in Administrative Organization. Macmillan.

TAYLOR, R. I. (2003) Agent-Based Modelling Incorporating Qualitative and Quantitative Methods: A Case Study Investigating the Impact of E-commerce upon the Value Chain. Doctoral Thesis, Manchester Metropolitan University, Manchester, UK: <http://cfpm.org/cpmrep137.html>.

TEN HAVE, P. (1999) Doing Conversation Analysis: A Practical Guide (Introducing Qualitative Methods). SAGE Publications.

TOMASELLO, M. (1999) The cultural origins of human cognition. Harvard University Press.

TOULMIN, S. (2003) The uses of argument. Cambridge University Press. [doi:10.1017/CBO9780511840005]

URQUHART, C. (2012) Grounded Theory for Qualitative Research: A Practical Guide. SAGE Publications Limited.