Introduction

Agent-Based Modeling (ABM) and simulation are becoming increasingly common as a scientific method to examine complex phenomena (Bonabeau 2002; Epstein 2009; Grimm et al. 2005; Maglio, Sepulveda, & Mabry 2014; Thiele & Grimm 2015; Wilensky & Rand 2015). The value of ABM as a means for building theory and gaining a better understanding of mechanisms driving complex phenomena is becoming widely recognized. The computational nature of these models allows them to be run frequently (with varying parameters), making ABM a particularly useful tool for exploring the parameter space of dimensions that drive the phenomena under study, and conducting computational experiments on the impact of changes in these dimensions.

This ability of ABM to explore a large parameter space makes it a valuable tool for decision-making and policy development. ABM’s ability to examine both impact at local and higher systems level makes it increasingly used as a decision support tool in complex social systems. When using ABMs to guide decision-making and policy development, it becomes critical to ensure that these models accurately capture the nuances of the phenomenon, so that their behavior becomes increasingly realistic, and they can be used to make predictions about the phenomenon in practice.

We classify the resulting models as high-fidelity models, and loosely define them as being more inclusive and detailed, incorporating a higher number of dimensions, being strongly grounded in empirical data, with model behavior that produces realistic behavior when compared to observed data, all with the aim of supporting decision making. In contrast to Janssen (2009) who identifies two types of models, we identity a spectrum for models ranging from completely abstract to including many elements and in greater detail based on empirical data, without a clear cut-off as to when exactly models become high-fidelity. Models aimed at highlighting the dependence relationships, so-called theory-driven models, reside towards the abstract side of this spectrum. Whereas high-fidelity models are placed closer to the real-world side. As high-fidelity models aim to support decision making in practice, for them to be useful one will need to demonstrate that these models produce realistic findings by validating model behavior against empirical data. Only when validity is shown one can use the models to make accurate projections and support decision making.

The relatively high number of (interacting) mechanisms in high-fidelity models make it challenging to associate the system-level behavior, which is generally used as a source for validation of model behavior, to the behavior of underlying mechanisms. This means that to ensure the model behaves as intended, we need to validate each mechanism (and module capturing that mechanism) individually as well as their interactions with other, related mechanisms. This makes accurate replication of high-fidelity models more laborious, to such an extent that we propose that it requires a different set of replication and documentation strategies as compared to those presented in the current replication literature. Thus, there is a significant gap in our scientific protocols for the process of replicating high-fidelity ABMs.

Our paper aims to fill this gap by providing best practices distinct for replication of high-fidelity models, and highlight which practices are shared with research focused on replicating theory-generating models (e.g. Wilensky & Rand 2007; Rand & Rust 2011; Fachada & Rosa 2017). Specifically, in this paper, we address two fundamental questions. First, what are successful approaches, procedures, and practices for replicating high-fidelity models? And second, what can those who build high-fidelity models do to facilitate replication of their models?

To address these questions, we report on our efforts in replicating a modeling research study conducted by Jenness and colleagues (Jenness et al. 2016). In this study, a network-based model of HIV transmission dynamics, parameterized with empirical data on sexual partnership network structure, sexual behaviors within partnerships, and HIV transmission risks given sexual activity, was used to evaluate different scenarios of scaling up of HIV Preexposure Prophylaxis (PrEP) among men who have sex with men (MSM) in the United States. PrEP is a highly effective preventive medication, which has to date seen limited use among those who could benefit. We undertook this replication effort with the goal to verify and validate both the study results and underlying model behaviors.

The remainder of this paper will be structured as follows. First, we will discuss the value of replication and review the existing literature on replication of ABMs. We will identify facilitators and barriers, and consider how high-fidelity model replication fits within the current literature. Second, we provide a brief overview of the study being replicated and the original model, which from this point onwards we will refer to as EpiModel, in line with the name of the platform on which the original model was built (Jenness, Goodreau & Morris 2018). Next, we highlight the replication process, resulting in the replicated model, which we from this point onwards will refer to as the NetLogo HIV spread model (the NHS model) (Hjorth, Vermeer & Wilensky 2018), using three separate examples of our replication process. Each example focuses on a specific module at a different level of granularity. And lastly, in our discussion section, we present a synthesis across the lessons we learned during replication and provide general guidance for effective replication of high-fidelity models.

The Value of Replication

Replication is a fundamental building block of scientific practice. By checking if others who follow the same methods can obtain similar results, the reliability of these results can be increased, and previous research can be used as the foundation for future experiments, facilitating the building of scientific knowledge.

Similar to improving the validity of research outcomes, replication can improve the validity of computational model outcomes, yet doing so requires two additional steps (Wilensky & Rand 2007; Rand & Rust 2011). First, as computational models are by definition abstractions of the real world, their conceptual model — which describes how the various (agent) behaviors and interactions with the environment occur — will need to be checked. This process is called model validation, and refers to the process of checking whether the model captures a phenomenon accurately enough to answer the driving question behind the model (e.g., “Does the model behave like the phenomenon observed in the real world?”). Second, the implemented model — the translation of the conceptual model into actual code— needs to be considered. This process is called model verification and refers to the process of checking that the translation from conceptual model to the implemented model is correct (e.g., “Does the model do what it is intended to do?”). Both of these processes should occur naturally during replication of ABMs and other computational models (Wilensky & Rand 2007; 2015, Rand & Rust 2011) before the implemented model can be considered as credibly producing counterfactual evidence of experiments for hypothesis testing, and decision-making. The fact that complex systems models often exhibit sensitive dependence to initial conditions (Lorenz 1972), with very small changes in inputs sometimes resulting in very large differences in outputs, leads to challenges in conducting these model correctness checking processes.

Replication of ABMs

Several computational modelers have previously recognized the need for model replication (Axtell et al. 1996; Edmonds & Hales 2003; Wilensky & Rand 2007; Thiele & Grimm 2015). Wilensky and Rand (2007; Wilensky & Rand 2015), for example, focused on standards for replicating ABMs and provided an overview of the replication work done in the agent-based domain prior to 2007; this includes the seminal works of Axtell et al. (1996) and Edmonds & Hales (2003). We highlight Axtell et al. (1996) specifically as they introduce three standards of replication (RS) used in our work: numerical identity — the notion that exact numerical matching across multiple implemented models is obtained — distributional equivalence — the notion that two models produce distributions of results that cannot be differentiated statistically — and relational alignment, the notion that two models can be shown to produce the same internal relationship among their results. These standards highlight that, depending on the output that is considered, varying levels of strictness can be adopted in what one considers successful replication, with numerical identity being the strictest, followed by distributional equivalence and relational alignment respectively. We note that choosing a stricter standard does not imply a more rigorous replication — a high quality replication will choose a replication standard that is well matched with validation of the focal model and outcomes. If, for example, we are replicating a model of network partnership formation which aims to produce variations of a partnership network, it would not be wise to use an RS of numerical identity as by its very nature the original model must produce a distribution of partnership networks, and therefore a RS of distributional equivalence would be preferred.

Since the Wilensky & Rand (2007) paper, work on replication of ABMs has continued. Merlone, Sonnessa, & Terna (2008) compared three implementations of a model of industrial production to study the emergence of structures found in the real world. They found relational but not numerical alignment among their models, and recognized that the affordances of the platform used to implement the model mattered strongly for simulation results. Will & Hegselmann (2008) reported on their failure to replicate a trust model that aims to describe the formation of markets, based on the original publication by Macy & Sato (2002). Janssen (2009), presents a replication of the Artificial Anasazi model (Axtell et al. 2002) which considers historical population dynamics in the Long House Valley in Arizona between 800 and 1350. While they find results that relationally align with data, they conclude the original findings hold only partially and are produced mainly by fitting the model to field data. Stonedahl & Wilensky (2010) and Gunaratne & Garibay (2017) build replications of the same model showing how generic algorithms can be used for calibration and optimization models. They show this method can be leveraged to present alternative theories for observed behavior. Radax & Rengs (2010) replicated the Demographic Prisoner's Dilemma model (Epstein 1998), and found that the replicated model results differed from the original, and distributional alignment could only be achieved under certain circumstances. They highlighted timing in the models as a potential cause of the discrepancies. Arifin & Zhou (2010) described the replication of an Anopheles gambiae mosquito’s model using multiple implementations. The model simulated population dynamics based on a conceptual framework of reproductions and death. The authors found that variations among implementations had an extensive effect on population structure and dynamics. Miodownika et al. (2010) replicated the Bhavnani (2003) model that considers the process by which historical political units could have evolved to form civic regions that approximate those observed in present day Italy. The authors were not able to distributionally align the implemented models, and noted that observed differences in model outcomes might stem from implementation differences. Seagren (2015) replicated the model of Tiebout sorting (Kollman et al. 1997), which considered the stylized interactions between individual (political) preferences and local policy making under various electoral landscapes. The authors achieved relational alignment in their replication, and highlighted the value of doing additional sensitivity analysis based on the replicated model. Donkin et al. (2017) replicated a model originally published by Potting et al. (2005), describing an agro-ecological world in which pest insect’s behavior was modeled on two platforms. The authors based their replications solely on the published model, and found model behaviors to be numerically, distributionally and relationally dissimilar. They attributed this misalignment, to a large extent, to the unavailability of source code. Fachada & Rosa (2017) replicated a version of the predator–prey model, and used it as a showcase for formal testing of replication and model alignment, resulting in guidelines on how to examine replication efforts statistically. The above presented review of replication efforts shows how difficult it has been to fully reproduce model-based research, sometimes even at the least strict level of relational alignment.

The limited degree of alignment achieved in many replication studies is both problematic, and highlights the importance of replication as a means to ensure reliable models and validated model outcomes. Without undertaking model validation and model verification, reliability of models and their outcomes are questionable. With the trend of models to become more sophisticated and become widely adopted, a lack of a comprehensive replication methodology and practice would have the potential to result in a strong increase in unreliable models. In turn, the field of simulation modeling runs the risk of lowering its credibility and risking the integrity of computational modeling as a rigorous scientific method.

This risk has been previously identified (Edmonds & Hales 2003), and others have highlighted various lessons for improving replication efforts. Wilensky & Rand (2007), for example emphasize the need for replication standards, availability of detailed documentation including source code, and the value of interaction and collaboration with original authors. Thiele & Grimm (2015) identify a number of ways in which to stimulate a culture of replication within the research community, including, standardizing model descriptions, software platforms, and sub-models, and providing open code and documentation. Additionally, various authors have argued for the need for standards, both in terms of model description (Grimm et al. 2006; Grimm et al. 2010; Grimm et al. 2017) model building (Grimm et al. 2014), and sharing (Collins et al. 2015). Furthermore, recent work by Fachada & Rosa (2017) describes a set of formal testing approaches for replication. This body of work on replications has identified several standards that help integrate replication into general modelling practice and ABM usage. While these are certainly steps in the right direction, sufficient documentation of replications remains relatively rare.

Replication of high-fidelity models

The provided overview of replication work done within the ABM domain, consists largely of theory-driven models built without extensive calibration based on existent empirical data. The purpose of these models is generally to help researchers better understand either the underlying mechanisms that drive a complex phenomenon, or to generate or improve their theory of the phenomenon. By design, the models used in such attempts are more stylized, focus on behaviors that are more abstract, and have a limited connection to the complexities present in real world phenomena. While such simplifications are what makes these models especially powerful for theory development and for eliminating possible explanatory factors, not all models have those specific aims.

In contrast, the high-fidelity models aim to support decision-making and policy development. High-fidelity models embrace the complexities of real-world complex systems to make model outputs as relevant as possible and maximize their value for decision support. Models with these aims will thus incorporate a large number of dimensions and will use empirical data to link with the real-world dynamics. As such, they will have a larger number of moving parts which are likely to be interdependent. Such interdependencies make it challenging to fully grasp how the system-level behavior traces back to the behavior of modules within the overall model. This means that unless we validate each mechanism (and module) individually, even numerical identity on the model level could be the result of a coincidence (albeit an unlikely one).

To our knowledge, there is a void in the documentation on replication of high-fidelity models. Yet, the increased complexity of these model suggests that accurate replication of high-fidelity models is more laborious, to such an extent that it requires a set of replication strategies different from those documented in previous replication literature, a hypothesis we explore in this paper.

The Computational Experiment Being Replicated

In this paper, we describe the replication of a simulation study by Jenness et al. (2016). The primary focus that study was to predict the impact of CDC’s recommendations for HIV Pre-Exposure Prophylaxis (PrEP) among men who have sex with men (MSM) in the United States. This biomedical intervention, when taken regularly by MSM engaged in unprotected anal intercourse in non-monogamous relationships, greatly reduced the risk of HIV infection for this high-risk group (Liu et al. 2016). However, to date, PrEP usage in the United States is far below that recommended by the Centers for Disease Control and Prevention (CDC 2014).

To judge the impact of the CDC guidelines, Jenness and colleagues developed a network-based model of HIV transmission dynamics, calibrated with empirical data on sexual partnership network structure, sexual behaviors within partnerships, and HIV transmission risks given sexual activity. The resulting EpiModel (Jenness et al. 2018) was the platform used to evaluate different scenarios of scaling up PrEP based on different interpretations of CDC guidelines. As these indications for PrEP require an interpretation that could be implemented in practice, e.g., a non-monogamous relationship cannot be completely assessed during testing of only one partner, multiple versions of the CDC guidelines were defined and their impact on population-level infections averted were compared (see Table 1, Jenness et al. 2016). This paper aims to replicate this experiment and consequently validate the results of the same nine different interpretations of the CDC’s indications for PrEP, and doing so required two replication steps.

In the first step, based on the conceptual model of HIV transmission used in the original study, an implemented replication model had to be created, which we call the NetLogo HIV spread model (the NHS model) (Hjorth et al. 2020). We opted to build the NHS model using a platform other than the original EpiModel for two reasons. First, being able to replicate successfully across platforms makes the results more robust. Second, as building high-fidelity models requires a high level of familiarity with the platform in which the model is build, we chose to adopt the platform the replicators were most familiar with. The EpiModel has been implemented in the open source R package similarly called EpiModel, the version used was version 1.2.5 (Jenness et al. 2018) and this package relies on a statistical estimation of dynamic networks (exponential random graphs modeling, ERGMs) to form and dissolve sexual relationships. The replication model uses NetLogo version 6.1. NetLogo is a widely used and flexible ABM platform (Wilensky 1999), and our implementation forms network structures based on agents’ local behaviors. Consequently, the NHS model followed a conceptual model for governing the network formation and dissolution that is similar, but not identical, to the one used in the EpiModel. For all other parts of the model (behavioral dynamics, and transmission risks) the NHS model does strictly follow the conceptual model from EpiModel.

In the second step, once the NHS model was built, we repeated the computational experiment originally done with EpiModel with the re-implemented NHS model, and compared the results of this replication to the original. In doing so, we simultaneously attempted to validate the results of these experiments and the conclusions in the original study.

EpiModel — A brief overview of the conceptual model

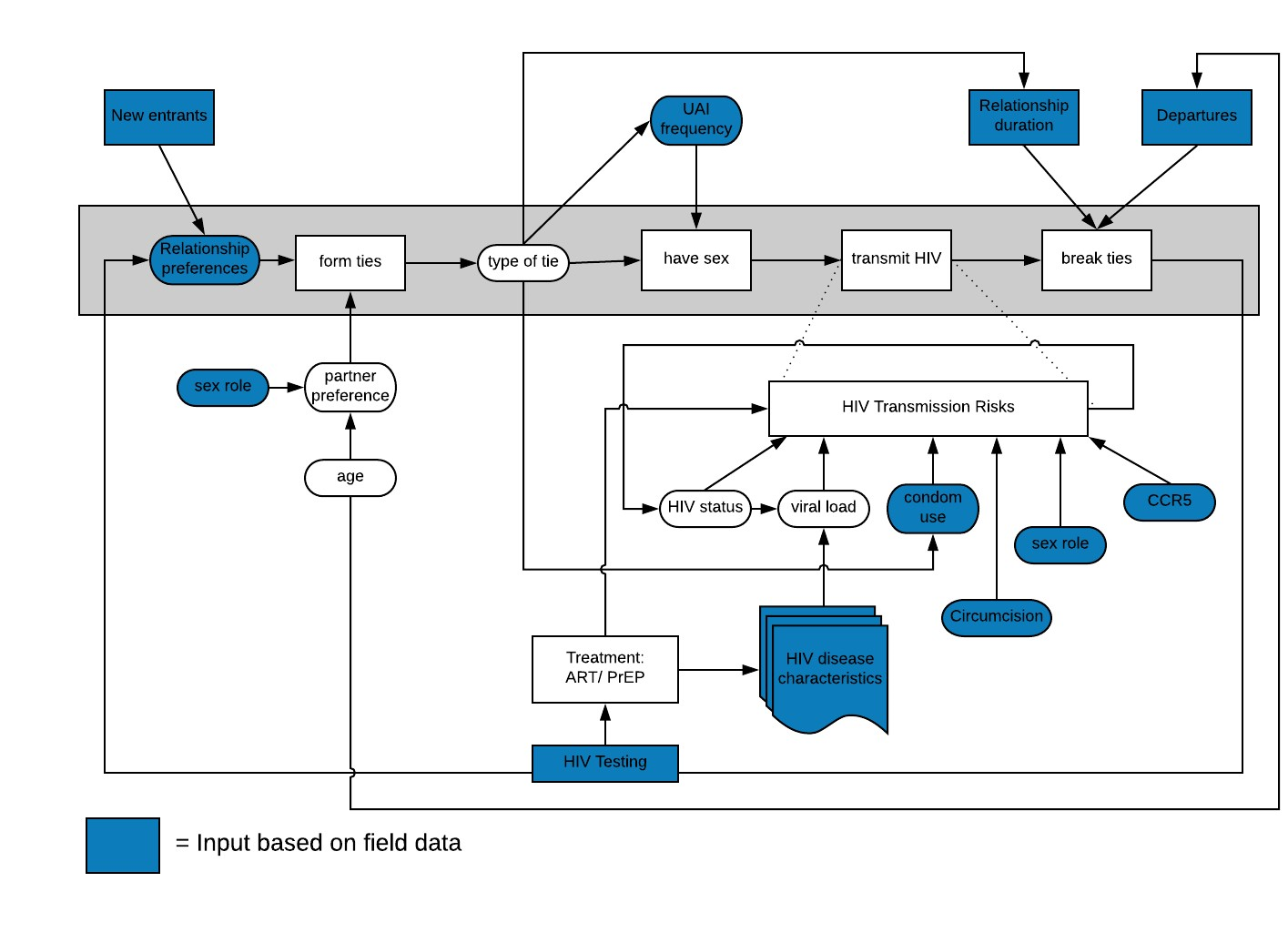

EpiModel incorporates a wide array of dimensions feeding into system level HIV transmission behavior. The behaviors of these dimensions are anchored in empirical data from various sources to ensure the model behavior matches observations made of the phenomenon in practice. As such, EpiModel clearly fits our description of a high-fidelity model both in terms of its model and its goals.

Below, we provide an overview of the original model. We consider it essential when considering any replication study that the original model description be accessible, and consequently we refer the reader to the Technical Information of the original study (Jenness et al. 2016) for full details on the description of the EpiModel method, and the model itself, and its component behaviors. Additionally, we refer the reader to the complete source code for EpiModel which is publicly available on Github (http://github.com/statnet/EpiModelHIV). Here in this paper we present a minimal overview of model behavior below, and an abstract flow of the stages of the model behavior can be found in Appendix A.

EpiModel by default consists of two main components: a partnership dynamics component and a transmission behavioral component. The partnership dynamics component determines how agents create and break sexual partnerships with each other over time, forming longer or shorter-term relationships and one-time ties. The transmission behavioral component describes the spread of HIV based on the behavior of agents within this sexual activity network, how they choose to have intercourse, sexual positions, condom use, etc. Together, these two components simulate how HIV spreads dynamically in this MSM population. For the purpose of the specific experiment in the original paper an additional component describing the various PrEP intervention interpretations is added to this model. While the model combines the interactions between these components into system level dynamics, each of these components acts and can be described relatively independently.

Partnership dynamics: The modeled partner network described three types of partners: main partners, shorter-term casual partners with repeated contacts, and one-time partners. Parameters for sexual behavior were drawn from 2 empirical studies of MSM in Atlanta, Georgia (Hernández-Romieu et al. 2015). The predictors of partnership formation varied by partnership type, with different model terms for degree (number of ongoing partners for each member of the pair), age, homophily (selecting partners of similar age and race/ethnicity), and sexual role segregation (such that 2 exclusively receptive men cannot pair, nor can 2 exclusively insertive men). For main and casual partnerships, there was a constant risk of relationship dissolution, reflecting the median duration of each type. This resulted in a dynamic network on which HIV can spread.

Transmission behavior: Per-act factors influencing the transmission probability for HIV included viral load of the infected partner (Hughes et al. 2012), condom use (Weller & Davis‐Beaty 2002), receptive versus insertive sexual position (Goodreau et al. 2012), circumcision for an insertive negative partner (Wiysonge et al. 2011), and the presence of the CCR5-\(\Delta\)32 genetic allele in the HIV negative partner (Marmor et al. 2001; Zimmerman et al. 1997). Once infected the clinical HIV progression was programmed to follow the empirical courses of disease and antiretroviral therapy (ART) treatment profiles (Mugavero et al. 2013). ART is associated with a dramatically decreased viral load and consequently lower transmission risks (Cohen et al. 2011) and extended life span (Goodreau et al. 2014). Persons who were HIV positive and not on ART were modeled with evolving HIV viral loads that changed their infectivity over time. After infection, persons were assigned into clinical care trajectories controlling for timing of HIV diagnosis, ART initiation, and HIV viral suppression, to match empirical estimates of the prevalence of these states (Sullivan et al. 2015).

PrEP Indications and Uptake: The CDC guidelines for PrEP prescription consider the sexual behaviors in the 6 months prior to diagnostic HIV testing (the risk window). MSM were assessed for PrEP indications only at visits in which their HIV test result was negative, as ART, rather than PrEP, is indicated for positives. At time of HIV testing, eligible MSM were allowed to start PrEP only if the proportion of MSM on this regimen had not surpassed a threshold coverage of 40% of the population. This threshold accounted for an external constraint on PrEP availability, and was varied in robustness checks in the original experiment.

PrEP eligibility is determined based on the 3 behavioral conditions in the CDC guidelines: Unprotected Anal Intercourse (UAI) in monogamous partnerships with a partner not recently tested negative for HIV, UAI outside a monogamous partnership, and AI in a known-serodiscordant partnership (CDC 2014). For each criteria 2 different functional definitions were implemented: a “literal” version based on the specific guideline wording and a “clinical” version that could be more realistically assessed in practice.

An important goal of the simulation was to order the alternative interpretations of CDC guidelines on their ability to effect incidence. While the clinical versions are generally less strict than literal ones (e.g., a monogamous individual may erroneously indicate his partner is also monogamous), no version is defined in such a way to be superior to any other. Thus, all orderings are possible, and therefore their replication would provide a good test of distributional or relational alignment.

The Replication Process in Overview

The full replication process constituted several months of work spread out over a period of 18 months. In it we followed an approach that can be divided into three stages. In the first stage, the replicating team started from the published documentation to validate the translation from conceptual model to implemented model, and used the Technical Information from the original paper to implement the NHS model based solely on this information. As this translation left some open questions as to how to implement the NHS model, the second stage involved connecting with the senior author of the original model to provide clarification on the model implementation details. In the third stage, we started testing the alignment of the models, one module at a time, at which point we pulled in the full source code to further align the NHS model.

While all three stages are critical for effective replication, in this manuscript we report primarily on the third stage of our process. Rather than going through each step of the replication process we will highlight the process by presenting three examples of replication that occurred during out process: the replication of the viral load progression module, the replication of the transmission risk module, and the replication of the computational experiment. The selection of these specific examples is based on four reasons. First, each of the examples considers replication at a different level of granularity, the first example considers a micro-level module, the second a meso-level module consisting of a combination of multiple modules, and the latter the full system-level behavior of the model including all its sub-modules. As such, the combination of examples provides insight into how interactions among modules occurs and can cause emergent behaviors, and how the hierarchical structure and modularity can be leveraged during replication. Second, this combined set of examples allows us to highlight how the replication differed from the original and discuss challenges during replication (Wilensky & Rand 2007). Examples of such challenges include the impact of having a different set of authors replicate the model and interpret model documentation, the potential impact of differences in algorithms, and the impact of varying the platform and/or modeling philosophy can have. Third, each of the examples considers replication using a different replication standard (Axtell et al. 1996), therefore the combination of examples allows us to provide a comprehensive description of replication covering each of these standards. Lastly, we found the combination of these three examples to be illustrative of the lessons we learned during our process of replication of this high-fidelity model, and as such this set of examples was considered both necessary and sufficient for the purposes of this manuscript. In the sections following, we will describe each of the examples in detail.

Example 1: The Viral load module

The first example involves replication of the viral-load module. We chose this example specifically because the viral load of a person with HIV directly affects their risk of transmitting HIV. Consequently, it is considered a critical component in determining the system level spread of HIV. While being a critical driver of systemic behavior, viral load progression is a dimension that can be specified relatively independent of the remainder of the model and hence is an ideal starting point for replication. When someone contracts HIV, “viral load” is used as a measurement of the number of copies of the virus that person has in their blood; it is directly related to infectivity. Detailed viral load progression for HIV in the absence of ART follows four general stages. In the first stage upon infection (the acute rise stage) the viral load will rapidly increase to a peak viral load, after which the viral load will drop towards set point levels (acute decline), this stage is followed by a relatively long period of stable viral load (stable set point), until inevitably in the AIDS stage the viral load increases until mortality (Little et al. 1999).

The structure of the original viral-load module

EpiModel captures the evolution of HIV viral load continuously. Following the previously described viral dynamics it determines an individual’s viral load based on two dimensions; disease stage, and anti-retroviral treatment (ART) adherence.

Disease stage: The progression of viral load over the course of an infection is captured using four stages in EpiModel: 1) An initial rapid increase to peak viral load, 2) a rapid decline from peak to set-point viral load, 3) a long period of stable set-point viral load, and 4) an AIDS phase with increasing viral load and eventual mortality. Both within and between stages the rate of change over time was assumed to be linear.

ART: An infected individual can be put on anti-retroviral treatment (ART) when their test for HIV results in a positive test result. ART treatment will effectively reduce the set-point viral load of the individual (for as long as they remain on ART). The extent to which this set-point is reduced depends on individual attributes (suppression level), and the extent to which viral load is effectively reduced depends on the sustained adherence to ART.

The process of replicating the viral-load module

Replicating the viral progression module from EpiModel required various steps and substantial effort on the replicators’ part. In the following paragraphs we will highlight the process we went through to align this module across implementations, this process is strongly influenced by the framework put forward in Wilensky & Rand (2007).

The first step in any replication process, is to determine which sources of information are going to be used during replication. Replication can be based on various types of model descriptions: a fully documented model description, a model’s source code, or a verbal description of the model during communication by the model authors. Each of these descriptions has its own affordances and limitations, and requirements in terms of access to resources. We initiated our replication process by considering only the model description, and did so for two reasons. First, the model description is aimed to be comprehensive, and as such should be a source that is both detailed and relatively easy to process. Second, for most researchers, the documentation is the (only) source that is available for replication, and as such replicating based on the documentation is a good representation of what one can reasonably expect to achieve in replication based on the current reporting standards.

With our replication source determined, we considered the level of alignment that is desirable and required to consider the replication effort successful. This applies as much to the replication of complete models as it does to sub-modules. Among the three standards of replication, relational alignment, distributional equivalence and numerical identity (Axtell et al. 1996), we selected numerical identity as the replication standard for the viral load module for three reasons. First, we see viral load to be a critical component of EpiModel as it is one of the most prominent factors driving the risk of transmission. Second, high accuracy in the replication is critical for alignment of results on the system level. Values of viral load can vary by six orders of magnitude depending on the stage of infection. Thus, we considered it necessary to adopt a strict replication standard that would allow us to capture such fluctuations accurately. Third, as viral load describes an agent property (which is independent of population behaviors), and there are substantial quantitative data on which to build a model of viral load, it was feasible to numerically align this module. These arguments indicated numerical identity was both an achievable and desirable replication standard.

Next, we determined the mechanisms that went into the viral load calculations, and identified for which cases alignment of model behavior needed to be tested. We explored three behaviors: 1) the viral load progression in the absence of treatment, 2) the dynamics of getting on and off ART, and 3) the interaction between the viral-load progression and the treatment behavior.

While studying the viral progression in the absence of treatment we found that even minor implementation differences in implementation can have large effects on model behavior. Conceptually we know that viral load numbers, the number of copies of the virus present in a ml of someone’s blood, impacts the risks of transmission of HIV; the more virus in one’s blood the higher the risk of transmission. The implemented EpiModel determines the extent of this effect on risk based on the following calculation: \(2.45^{(x-4.5)}\) where \(X\) is the logarithm (base 10) of the number of copies in one’s blood. For each of the stages of infection, the documentation described end point viral load, and it described a linear change over time across the various disease levels. EpiModel applied this linear effect to the logarithm of the viral load levels (so effectively increasing \(X\) linearly), while our replication applied a linear change over time to the number of virus copies in one’s blood. While this might seem like a minor difference in interpretation, the effect this had on emergent model behavior was significant, with the NHS yielding an HIV prevalence level of \(\sim\)10% higher than the EpiModel implementation.

To understand the impact on the system level better, the actual viral load across implementations needs to be plotted, and the interactions of the viral load with other modules needs to be understood. Note that the two implementations differ only in the way they process changes in viral load, and consequently only produce different results in the stages of the infection during which viral-load is in flux (acute rise, acute decline (which we combine into an onset stage) and the AIDS stage). For both implementations the viral load level during these stages are plotted in Figure 1.

While differences are observable across implementations, the gravity of their impact can only be understood within the larger model structure. To do so we first reiterate that log of viral load is used as an exponent in the risk calculation formula \(2.45^{(x-4.5)}\). This implies that even small differences in the log of the viral load (\(X\)) will have substantial impact on the actual risks of transmission during phases where \(X\) is high. Add to this the notion that during the onset period (acute rise and acute decline) the infection is acute and is consequently much more contagious (by a factor 6), and one can see how risks of transmission can be drastically inflated by a seemingly small implementation difference.

Figure 2 shows the factors by which risk of transmission are inflated during the onset and AIDS stages based on only the viral-load and acute stage multiplier. A detailed look at these results (Appendix B) shows that the difference of implementation yielded an inflation by on average a factor 12.509 during onset, 2.258 during the AIDS stage and 1.442 over the entirety of the infection. These numbers highlight how interaction between modules can radically amplify minor implementation differences, and in turn affect the emergent behaviors on the system level. In our case the interaction yielded a situation of extremely high risk after initially contracting HIV, which caused a self-perpetuating mechanism of new infections.

We should note that both implementations are accurate translations from the conceptual model, which posited linear changes over time, and hence from a model verification standpoint there is no a priori reason to prefer one over the other. This is a perfect example of how seemingly small differences in implemented models, even when using the same conceptual model, can have significant impact on the emergent properties of a system. The complex nature of high fidelity models stems from to the interactions and feedback loops inherent in them, so that small changes can be amplified to have significant effects on the system level behavior. This observation highlights that even minor differences in implementation can potentially result in large changes in model behavior on the system level, and that further attention to the behavior of this module is needed to understand its behavior.

The progression over time of viral load in EpiModel is based on previous work by Little et al. (1999). Taking their progression as the ground truth for HIV viral load progression, we can compare the behavior of both implementations to the behavior in that paper as a means to validate the behavior of both implementations. The second figure in Little et al. (1999) reveals a smooth transition of viral load progression which more closely fits the implementation of the NHS model than it does the EpiModel. Regardless, we choose to align the NHS model to the implementation of EpiModel, to ensure comparison of these models. But in doing so we note that our replication effort reveals that the viral load progression module is an area where future model improvements might be desirable.

For successful replication, it proved critical that we also aligned the treatment dynamics. In implementing the process of ART adherence in the NHS model, we based our modeling decisions primarily on the provided documentation. However, in the case of treatment dynamics, the extensive documentation (EpiModel has an elaborate 25 page description of model behaviors (SI of Jenness et al. 2016) did not provide sufficient information for exact re-implementation of the module. We consider this to be an inherent problem with documentation of high-fidelity models rather than an issue with EpiModel specifically, as the sheer amounts of documentation and translation needed in this type of model is likely to introduce points of uncertainty.

To clarify the sections that were unclear to the replicators during the re-implementation process, the replicating team contacted the lead author of the EpiModel (SMJ), to engage in a richer means of communication regarding model functioning. Based on a concrete set of clarifying questions, the author of EpiModel referred us to specific segments of the source code of EpiModel specifically addressing these questions (see Appendix C). Taking into consideration the sources code allowed the replicating team to strictly align the behavior of the treatment module in the NHS model with EpiModel. This process is a clear example of how each resource has different affordances when it comes to replication, the documentation provides the main conceptual model, the authors the details and the model overview, and the source code the details needed for re-implementation.

With both the natural progression and impact of ART treatment modules evaluated on their own, we considered the interaction between the two. Effectively we considered the effect of initiating or maintaining treatment at different stages of the disease progression. Being on ART for a week has an effect of reducing the viral load by a given amount (up until a given threshold). Similarly, not adhering will result in the agent moving back towards the default trajectory. As ART effects wear off during the AIDS stage, such dynamics result in a set of six scenarios (see below) for which the behavior will need to be tested for alignment.

- Get on ART during acute rise stage, and remain on ART

- Get on ART during acute decline stage, and remain on ART

- Get on ART during stable setpoint stage, and remain on ART

- Being on ART during acute rise stage, and go off ART during that stage

- Being on ART during acute decline stage, and go off ART during that stage

- Being on ART during stable setpoint stage, and go off ART during that stage

This set of scenarios was replicated for 2 types of agents (those with complete suppression, and those with partial suppression), resulting in a total of 12 critical scenarios. The effects of treatment are fairly straightforward during the set-point viral progression stage (scenarios 3 and 6) as the viral load in that stage is stable except for deviations due to ART treatment thus leaving very little room for variation in interpretation of how to implement. However, during the other stages, the effects of treatment are far less obvious. As viral load is changing naturally during these stages, the implementation of an additional change is far from unambiguous.

To test alignment we wrote test cases for all of the 6 scenarios both in EpiModel, by extending its code, and the NHS model. We then compared the outputs of these test scenarios across implementations. In doing so, we observed some model behavior which was not expected based on the conceptual model. We found that in EpiModel once a single dose of ART is taken, the default trajectory is disregarded and viral load progression is based on an in-treatment (and potentially adhering) logic rather than the traditional viral progression path. Particularly in the acute rise (and decline) stages, this can yield a dramatic shift from default behavior (see Appendix D), in which taking one pill can effectively prevent the occurrence of the complete acute stage, or slow down the default viral load decline to such levels that it is worse than not taking a dose at all (when a dose is taken during the acute decline stage).

The replicating team considered these scenarios to be unrealistic, but recognize that they will occur extremely rarely. Similar to the earlier variation in implementation, they also found that these scenarios do have a substantial effect on the virility of an individual and that such discrepancies are amplified during the acute stage, and consequently significantly impacted the system level behavior of the model. This is another example of the value of replication as a tool for model validation. It is unlikely anyone would first explore, second notice, and third interpret, the impact of such an implementation decision unless replication was attempted.

The viral load progression module proved difficult to replicate primarily due to a difference in the conceptual model of the ART module between the two teams. More specifically, the assumptions relating to the role of path dependence in this module differed between the original model builders and the replicators, which caused an initial hurdle in alignment. Where EpiModel effectively made an agent’s viral load a Markov process conditional on the previous state, the replicating team assumed that path played a role in determining these treatment dynamics. In the path dependent interpretation, it is not only the state but also the direction in which the viral load has moved in the past that determines the effect of a dose of treatment. E.g., the effects of treatment might be very different for someone whose viral load has been on the rise and is currently at \(10^5\) compared to someone who has a viral load that has been dropping and is currently at \(10^5\). Capturing such a conceptual interpretation of treatment requires the path an agent has taken to get to its current state, and the history of agents’ behavior, to be incorporated into the model, whereas the Markov implementation does not incorporate such information.

While our goal was to numerically align behavior of the module across implementations, the replicating team decided to adjust its initial implementation in the NHS model, and re-implement it so it would strictly follow the implementation of EpiModel, while at the same time marking modeling of ART effects as an area that deserves future considerations in sensitivity analysis. Consequently, the NHS model dynamics were modeled to effectively stating that once a dose of treatment is consumed an individual’s viral-load will change with a rate of 0.25, and will gravitate towards the set-point viral-load (4.5) with that rate when no treatment is consumed, similarly it will gravitate towards the virally suppressed level of viral-load (1.5) with that rate when treatment is consumed. This is in line with what EpiModel implementation does. Once this conceptualization was implemented both implementations indeed showed numerical identical results for the viral-load progression module, and hence replication of the viral-load module was considered successful and numerically identical (see Appendix E).

Example 2: replication of the risk-of-transmission module

As a second example of our replication process, we discuss the replication of the module that determines the risk of HIV transmission. This module describes the transmission of HIV by means of a process that depends on both a series of agent behaviors and on the complex evolution of sexual activity networks in the model. We chose to report on this module as it differs from the previous module in some key dimensions. First, this module considers the behavior of a dyad rather than an individual, and hence considers interactions among agents. Second, this module includes randomness, whereas the previous module was fully deterministic. Third, the module consists of multiple sub-modules, that each feed into it, as such it highlights the relevance of hierarchy, structure and interaction among sub-modules during model replication. And lastly, this example presents a perspective on how to deal with situations in which strict alignment in one of the sub-modules is impossible (or as in our case purposely foregone).

The structure of the risk-of-transmission module

The risk-of-transmission module can be conceptually broken down into a set of three independent (sub)modules that, when combined, determine the risk of spread on the system level. 1) A Partnership Formation and Dissolution Module, which determines where ties are present to facilitate spread using three types of ties, main, casual and one-time ties; 2) a module determining the rate of sexual acts within each tie; and 3) a module determining the Risks of Transmission per sex act.

The process of replicating the risk-of-transmission module

Replication of the risk-of-transmission module was done using an approach that began similar to the one described for the viral-load example but differed in later steps. We again considered each of the sub-modules in isolation, before combining them into to a more complex module where they interact, which is similar as before. However, as one of the sub-modules differed across implementations our assessment of the interactions of these modules differed. During the replication process we made the conscious choice to not to strictly replicate the partnership formation and dissolution sub-module. We did so primarily because the philosophy of network formation adopted in EpiModel differed from our own. EpiModel adopts an ERGM based formation process which bases the formation of ties on the fit with system-wide structural characteristic. In contrast, the replicated model assumes partnership formation to inherently occur at the individual level, where individual decision making results in an emergent structure. Consequently, to align with this modeling philosophy, we implement this module in NHS in a classic agent-based manner, where each individual’s partnering decisions result in an emergent partner network (see Appendix F for pseudo code of this module). We do use the global properties to cap individual’s behaviors to ensure the formed networks in the NHS model match the global properties of those produced by EpiModel. In choosing a different conceptualization for producing aggregate network structures and dynamics our replication has become a test of the hypothesis that these two different approaches to partnership formation align, not only in the requisite aggregate parameters, but rather align well enough to support model validity and the main conclusions of a successful replication. We stay alert to the possibility that this hypothesis will be rejected and these different mechanisms will yield fundamentally different results.

While partnership selection is one of the sub-modules that affects the risk of transmission, our design choice has implications for the method of replication and the replication standard adopted. As one of the input sub-modules conceptually differs, aiming for numerical identical results at the level of the complete module makes little sense. In fact, to consider alignment when the various sub-modules are combined, we will need to first control for the effects of the partnership formation and dissolution module and test alignment for all other interacting sub-modules. Only after that process is done can we include it in our tests for alignment and see if this specific sub-module yields comparable results. As such, we add an intermittent step in our replication process, in which after aligning the sub-models individually we check for their interaction while controlling for the partnership formation and dissolution module.

Aligning the risks per act sub-module

The first sub-module, the per-act risk of transmission module, has 5 independent inputs. The first, the Viral load module, has been discussed previously, two others are trivial binary checks. The acute stage and CCR-5 mutation each have their own risk multiplier. Two less obvious interacting sub-modules include condom use and sex-role.

All of these input modules are fully deterministic, and consequently we consider numerical identity an appropriate standard for replication for this module. Additionally, as these per act risks are the backbone of the spreading behaviors we consider accuracy critical for overall model behavior, and hence claim that numerical identity for this sub-module is desirable.

For the two remaining non-trivial input modules we identify the variability that can occur given that all other inputs remain constant. Ceteris paribus, sexual acts resulting in HIV transmission can occur in three ways; An HIV-positive agent can either be insertive, receptive, or versatile (i.e. both positions), and whose behavior is conditional on the sexual behavior preferences of both partners in the tie. When versatile behavior occurs, it is considered a compound of 1x insertive and 1 x receptive act, and consequently by knowing both the risk for the insertive and receptive acts, one can deduce the risk related to versatile acts. As such, 2 critical states exist from the sexual behavior perspective. From the condom use perspective also two options are available — Protected and Unprotected — resulting in a total of 4 (2 x 2) critical scenarios for which alignment has to be tested.

For both the EpiModel and NHS models we create scripts to generate the risks based on these critical input scenarios and compare results across models. We initially found significant differences across implementation, which required substantial effort to identify — a difference in interpreting a parameter being on a log versus a log-odds scale — and then minimal effort to resolve. (Details on the steps required for alignment of this sub-module can be found in Appendix G).

Aligning the rate of sexual acts per partnership sub-module

Next, we considered the sub-module that determines the rate of sexual acts within a partnership. Note that the rates in this module are based on average behaviors in a previous cohort study (Hernández-Romieu et al. 2015). These rates thus represent the mean behavior within the entire population, stratified by partnership type. Based on the population behavior each individual relationship in each week is assigned an activity by drawing from an independent Poisson distribution. This means that stochasticity is added to this module’s outputs. While one could potentially align the random number generators and random seeds across both implementations — and by doing so attempt to obtain numerically identical results-- we consider this a task that requires too much effort for relatively little gain. Instead we adopted the less strict replication standard of distributional equivalence, which is more appropriate, allowing us to incorporate the stochasticity and consider the alignment in a distribution of outputs rather than every unique outcome.

Comparing the number of acts per type of tie across both models initially revealed large differences. More specifically, the replicated model showed far less sexual activity across all types of ties. Exploration of the potential causes of these differences proved difficult, and only after inspection of the EpiModel source code were we able to pinpoint the cause of the misalignment. Differences were caused by an inflation factor applied in EpiModel, which was not implemented in the NHS model. EpiModel included a parameter (AI_Scale), which modified the number of acts in all types of ties; it was used to fit the model’s system level HIV prevalence to observed empirical data. In the implemented EpiModel study this value was set at 1.324, effectively inflating the sexual activity by that factor across the board (compared to empirical point estimates). Incorporating this inflation factor in the NHS model resulted in distributional equivalence of acts among implementations (see Appendix H).

Aligning the partnership formation and dissolution sub-module

As mentioned prior, in building the NHS model a design choice was made not to strictly follow the network formation and dissolution processes as implemented in EpiModel. In EpiModel, the network formation and dissolution is controlled by a statistical model for network structure: a temporal exponential random graph model (TERGM) (Krivitsky & Handcock 2014). TERGMs try to find dyadic mechanisms that results in a fit of a set of system-level network structural properties; as such it makes local behaviors conditional on population level properties. Such a process runs somewhat counter to the modeling philosophy of agent-based models in which agents use only local information in their decision making and have no access to population-level information. While implementing a network formation module that strictly follows the EpiModel method would be possible in NetLogo, such a module is not as good a fit for ABM, as ERGM models fit aggregate model parameters. Instead we decided to re-implement the network formation process in a more agent-based fashion, and replaced the TERGMs network component by an individual-level matching module that similarly fits the population distributions, but does so by employing local matching decisions for partner selection and dissolution (See Appendix F).

Controlling for the partnership formation and dissolution module

As, by design, the network formation process differs across implementations, it is reasonable to assume the networks created with those processes will differ. Both implementations form networks with the same number of individuals, density, and degree distribution, and hence produce networks with similar global network parameters (SI of Jenness et. Al. 2016) for a detailed parameterization). However, the networks formed are likely to differ locally as the mechanisms that determine where ties are formed differs drastically. As it is known that such a local difference can have a large impact on spreading processes, it is to be expected that HIV spread will differ in the networks formed using the different implementations. Consequently, should we find any difference in the spread module we would be unable to attribute to these differences to any failed alignment in specific mechanism or module or interactions among them; observed differences might stem from variation in the partnership network formation, the dynamics of network change, or misalignment elsewhere in the module, making for an inconclusive test scenario. To effectively compare model implementations, we therefore needed to control for network formation (and its dynamics) while testing alignment of the interaction of the two other modules.

Leveraging the modular structure of both models, we could relatively easily do so. The network module simply provides an input (a network structure, and list of agent states) to the spreading module. As such, we can swap out the module in both implementations with a fixed network having stable characteristics. As long as the stable network is identical as across both models, the stochastic behavior on top of this network should be the same as long as the models’ behavior is in fact aligned. To create such a test, we ported the world-state across models: we outputted all the agent and tie attribute data of a given world state from EpiModel and wrote a script to read those into the NHS model, creating two identical instances. By matching the world-state across both models we ensure both are identical in terms of the networks they use, as such we control for the influence of network structure. However as network are dynamic and change over time the network structures will only stay identical for a single time step (tick). To control for the dynamics of the network we consequently consider only the spreading behavior in the first tick (when networks are still identical), and do a test for alignment for those spreading data. This “one-tick-test” effectively controls for modules known to vary across implementations and isolates the modules and mechanisms that we do want to align. In modular models this general approach can be extremely powerful to reduce the complexity, and allow one to focus on alignment of specific (sets of) modules. What is more, this type of test can be devised for formally testing higher level modules even when lower sub-modules are known to deviate. In our case as the network formation and dissolution was modified purposely this test was our primary tool for aligning the spreading behavioral component across implementations.

A “one-tick-test” for aligning the spreading module

During the one-tick-test, we evaluated alignment of the system-wide transmission risk by considering the number of new infections across implementations, the HIV incidence. Note that the occurrence of new incidence cases is conditional upon a set of stochastic processes throughout the system. This has two implications on how alignment needs to be tested; 1) To obtain reliable results we need a sufficient number of repetitions of the same experiment to account for variance that is inherent in any stochastic process, and 2) the stochasticity implies that the results are unlikely to be numerically identical, and that we instead will look for statistically similar results, and adopt distributional equivalence as the replication standard.

In both implementations we found substantial variance of the incidence across repetitions with new incidences cases ranging from 0 to 18 per time step with a mode of 4. Given the relatively low per act transmission risk, such variance is not surprising. We can assess this variance by repeating the same experiment multiple times and considering the average behaviors across these repetitions. Effectively we are producing a distribution of incidence, which becomes more and more stable as behavior is averaged over more repetitions. We found that our incidence distribution becomes stable once the number of repetitions was increased to the order of 50-100k, and that consequently the variance of the mean incidence largely disappeared as that point, and that very narrow confidence intervals for the incidence distribution are obtained. Consequently, we used the one-tick-test with 100k repetitions to compare the incidence across implementation for a given world-state. This comparison revealed distributional differences in incidence across the implementations, given that we had previously aligned the sub-modules that drive this distribution this was a surprising result.

Addressing the misalignment across spreading modules

After finding differences in the mean values of transmission risks across implementations, we explored the module for indications of the source of misalignment. First, we looked at the mean risks for each tie individually (over 50k repetitions). We fixed the number of acts per tie to one (for all tie types), and compared the risks obtained across both implementations. We filtered out the ties that yielded different mean risks across implementations and explored their characteristics to identify the potential source of the differences. We went through several iterations of this process, which allowed us to 1) spot a bug in our script for porting world-states across implementation, which caused two agent attributed to be switched, 2) notice that the acute stage had been renamed in a later version of EpiModel which resulted in it not being correctly translated during the porting across implementations resulting in a misalignment of risks, and 3) most notably, it allowed us to track differences back to a discrepancy in the risk calculation module, which we will further elaborate on below. These are but a few examples of how statistical testing of alignment can serve as an exploratory tool for finding source of misalignment.

By outputting the distribution of risks for each tie (rather than just the means), we observed that a total of up to six different risks could be generated within a given tie. These risks are linked to the critical scenarios identified previously as a combination of the sexual behaviors (insertive/receptive/versatile) and use of condoms (Yes/No), resulting in \(\times\) \(=\) 6 scenarios. We found that for the versatile sex acts, the risk numbers across the implementations did not align. Note that such sex acts are the compound of both an insertive and a receptive sex act, and hence had previously been considered a non-critical scenario in our tests. However, due to differences in the way risks were compounded, the NHS model and EpiModel did not yield the same numbers after compounding, even when the risk for the individual insertive and receptive acts did match numerically. Changing the implementation of compounding of risks in the NHS model (effectively treating the act as two separate acts one insertive and one receptive rather that combining them in a single chance of success) resulted in numbers for all risk scenarios that matched exactly (achieving numerically identical results also for versatile events). After these changes, the one-tick test showed promising results, with nearly identical HIV incidence frequency distributions across implementations, when simulated 100,000 times (Figure 3).

Diagnostic plots and tests for distributional alignment

While the overlay of the two distributions in Figure 3 seems to show a high degree of agreement as the frequencies look similar, this type of figure is a poor way to determine distributional differences since there is little room to examine the tails of the distribution. In what follows, we describe the statistical tests and plots we used to compare distributions of new incident cases across implementations.

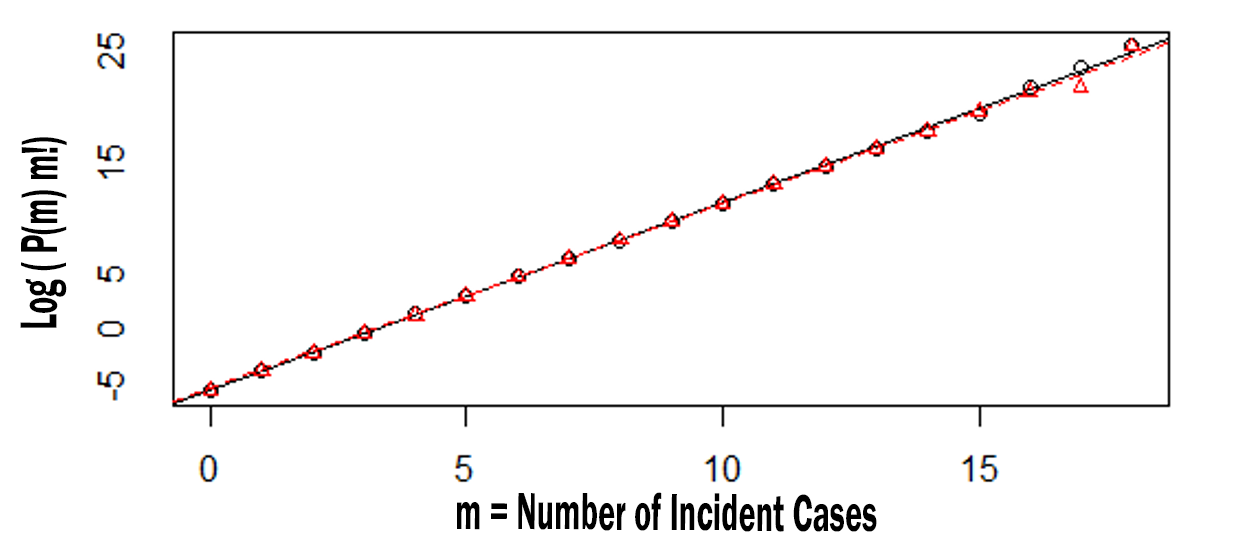

We examined whether the new incidence distributions in both implementations match well against a Poisson or mixture of Poisson distributions. This is shown in Figure 3, where for each observed number of incident cases, \(k= 0, 1,\dots K\), we plot this against a function of the following observed proportion, \(P(k)\) of observed cases across all simulations. With \(Y(k) = \log (P(k) + \log (k!)\), a Poisson random variable will show a linear relationship on \(k\), with intercept \(–\lambda\) and slope \(\log \lambda\), where \(\lambda\) is the mean of the distribution. A typical Poisson mixture distribution will show an approximate quadratic relationship. In this plot, both the EpiModel and NHS model plots look exceptionally linear, and they are nearly on top of one another. Thus, there is no indication of a departure from Poissonness, and the difference in the EpiModel and NHS model means are extremely small.

These graphical results were then repeated across 50 variations of randomly generated starting networks. A Poisson model fits all these data well (and formal tests for extra-Poisson variation are all nonsignificant). Consequently, we conducted formal tests of the differences between EpiModel and NHS model means under a Poisson assumption.

Running this formal test on all 50 networks revealed that, for 10 out of the 50 networks (20%), there was a significant difference in mean incidence rates between the EpiModel and the NHS model at the 0.05 level (Appendix I). This is far above the 2-3 out of 50 trials we would expect by chance, signaling that full alignment had not yet been achieved. Our analysis also revealed the differences in means across implementations were tiny in terms of effect sizes, with the largest being 0.006, indicating that a small but systematic difference was occurring.

To address this misalignment, we once more looked at the distribution of risks per tie and found (as established before) that the transmission risks calculated are identical across the models. This left only two potential sources of the discrepancy: 1) the frequency of acts that occur is different across the implementations, or 2) the distribution of risk scenarios — that is, a combination of using a condom, and choosing a sex-role which is associated with a set risk of transmission — is different across implementations. Outputting data of all acts (per tie) revealed no structural differences across implementations in the distribution of the risk scenarios. For the rate of sex acts, we only found significant differences in one type of sexual tie — Casual Ties — but not for the others. After observing these results, we found that the parameter for the mean number of sex acts in casual ties differed slightly between the EpiModel code — 0.955 — and the value reported in its documentation — 0.96 —, the latter being the rounded up version of the prior. Whereas the prior was used in the EpiModel implementation, the NHS model, relying on the documentation, used the latter.

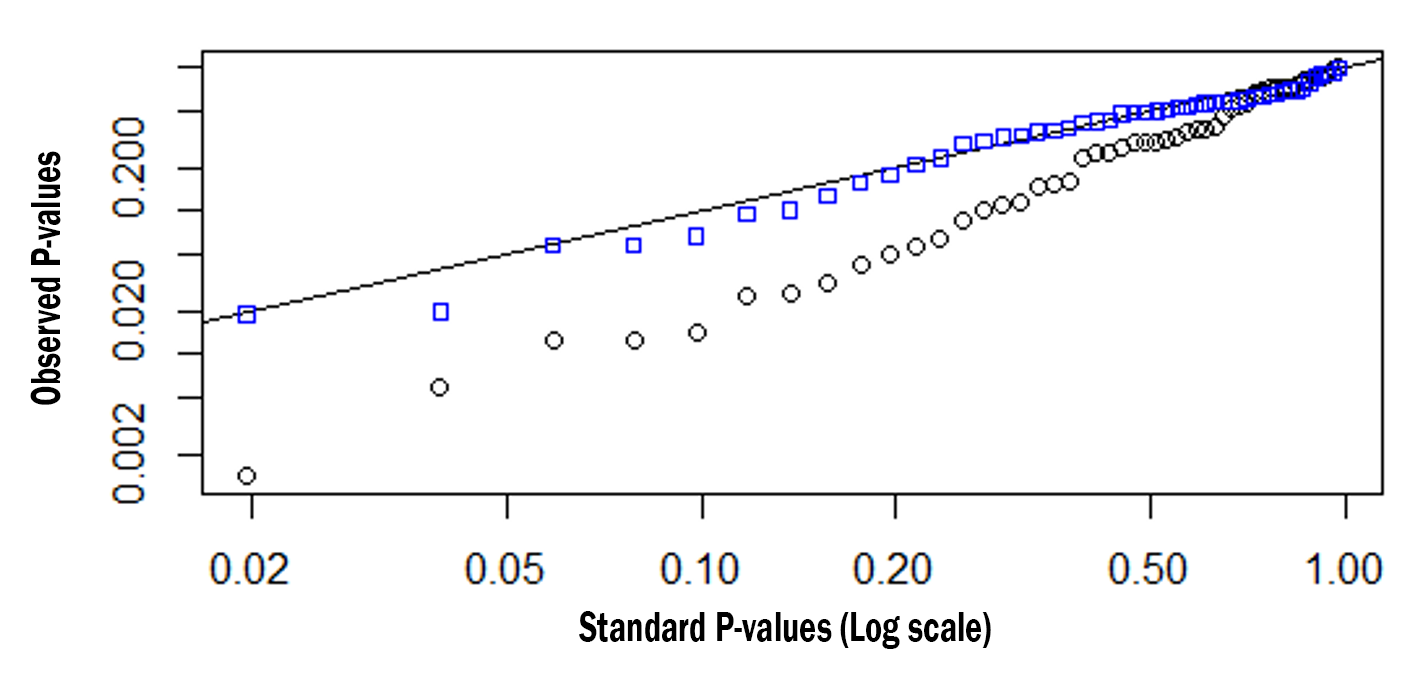

We adjusted the EpiModel implementation to reflect the value used in its documentation (and the NHS model) and reran the one-tick test. The results (Appendix I) showed that in 48/50 (96%) of cases, the outcomes of the models were statistically indistinguishable. Figure 5 shows two sets of significance levels for these tests; the lower set for 50 variations using the initial parameter, and the upper ones for the same 50 variations using the updated (aligned) parameter. On the x-axis we provide the expected values of 50 p-values (log transformed) under a null distribution; on the y-axis are the ordered observed p-values.

Under the null distribution, the p-values should fall along the \(y = x\) line. The observed p-values for the EpiModel and the NHS model comparisons with the non-adjusted parameter (black squares) fall well below this line, evidencing systematic differences between these EpiModel and the NHS model distributions. However, once the parameter for mean number of casual sex-acts was aligned to 0.955 (blue squares), the p-values fall nearly perfectly on the \(y = x\) line, indicating excellent agreement.

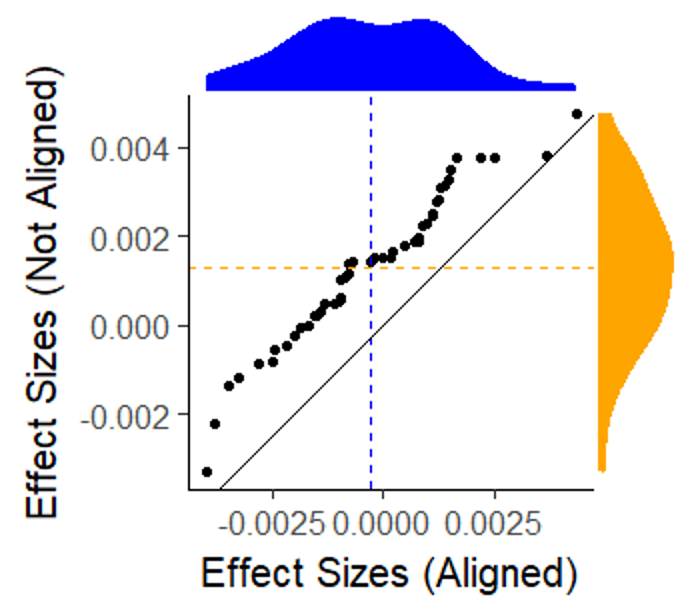

Figure 5 describes how small a difference we are able to detect. This empirical quantile-quantile (EQQ) plot (Chambers 2018) uses the ordered corrected effect sizes of EpiModel versus the NHS model on the x-axis, and presents the ordered effect sizes for the non-aligned variations on the y-axis. The non-aligned version clearly deviated from the expected \(y = x\) line. The marginal distributions for the corrected and rounded-off effect sizes are shown on the top and right sides of the figure, and the medians are shown on the dotted lines. Note that the median for those that are corrected are centered at zero while the median for those with the rounded off parameter are slightly positive. Also note that the effect sizes are all exceptionally small, ranging from +/-0.005, demonstrating how precise we can estimate these quantities with large enough number of simulations.

Example 3: Replication of the computational experiment

Generally speaking, high-fidelity models are created with the aim of making inferences about certain phenomena. They serve as a tool for facilitating experimentation or exploration, and as such increase our knowledge and support decision making. EpiModel is no exception in this regard. In the work by Jenness et al. (2016) the model is used to make inferences about the relative effectiveness of different interpretations of CDC clinical practice guidelines of PrEP indications among MSM, effectively determining what the criteria are for being eligible to receive PrEP. By adding a PrEP intervention module to the previously described transmission risk module, the effects of various interpretations are studied using a computational experiment. Because this experiment effectively incorporates the full model, we chose this computational experiment as our final example of replication.

The experiment compared a total of 10 scenarios; 1 baseline without interventions and 9 variations with different (combinations of) interpretations of CDC guidelines for PrEP. For each scenario a period of 520 time-steps (representing 10 years) was modeled, after which the prevalence was recorder. As each simulation run consists of a multitude of stochastic decisions which have an inherent path dependence in them, random fluctuation in model behavior are to be expected. Consequently, obtaining reliable results for any given scenario will require averaging the results across multiple repetitions. For this reason each of these scenarios was repeated 250 times. Based on the collected data, a the mean incidence, and a 95% confidence interval of this mean is calculated for each scenario, allowing a comparison of the relative effectiveness of the interpretations of the CDC PrEP guidelines. Additionally, as data for the EpiModel experiment is presented in Jenness et al. (2016), this also allowed us to compare the NHS model findings to the findings of EpiModel.

Prior to running the experiment, EpiModel implemented a burn-in procedure to generate a randomized starting state. During the burn-in process 250 instantiations of the model (set up with the parameters reported) were run for a period of 2600 time-steps (50 years). This burn-in period aimed to make sure that bias from the initial setup was dissolved and potential model dynamics stemming from a potentially biased setup had stabilized and as such played no role in the experiment. After this burn-in period, the single instance (1 out of 250) that best fitted empirical data (indicated by a stable prevalence at a level of \(\sim\)26%) was selected. This “world” was then used as the starting state of all experiments.

The structure of the computational experiment

In replicating this experiment, there are three modules that needed to be considered; the intervention module, the partnership selection module, and the transmission module. These modules essentially make up the complete EpiModel method, and determine the macro-level behavior of the model. Two of the modules (partner selection and transmission risk) have been discussed as part of our previous replication examples. In order to compare CDC guidelines, only a module describing the effects of such guidelines had to be added to the model.

Intervention Module: This module effectively describes how individuals get tested, and, when found to be HIV-negative, get assigned to PrEP if eligible. The assignment to PrEP depends on two factors; 1) an individual’s indications, which depend on interpretation of the CDC guidelines being adopted, and 2) on availability for PrEP. The latter we kept fixed for the purpose of replication as we consider it of secondary importance in our replication efforts. Once an individual is on PrEP, their risk of being infected is reduced by an amount which is conditional on the level of adherence to the drug.

The process of replicating the experiment

Replication of the intervention module proved particularly challenging. The documentation, which provided a plain English description of the meaning of the interpretations of the CDC guidelines, proved insufficient to convey and distinguish the nuances of how each of these interventions varied across scenario, and hence how it should be implemented. Communicating with the senior author of EpiModel model did resolve many but not all issues in this regard, the nuances of the interpretation are simply hard to convey in plain English. However, by referring to specific sections of the source code directly, the EpiModel author made sure these nuances and the differences in the meanings of the various intervention scenarios could be distinguished.

In replicating the experiment, we opted for relational alignment for two reasons; first, relational alignment suffices to answer the key question. In the original paper the experimental results are discussed only in relative terms (A is more effective than B) and the actual numerical impact is ignored (e.g. A reduces prevalence by X percent). The authors of the original experiment made this choice intentionally, and this signals the relative confidence in the models numerical results. More specifically, it indicates that the relative orderings are considered the most critical take-aways from the experiment, especially among those that produce the lowest incidence. As such, relational alignment, as a replication standard, suffices for making claims about the alignment of these results across implementations. Second, we consider it feasible, in fact the only feasible standard available. The fact that by design the partnership selection and dissolution module differs across implementations, and the fact that the resulting differences in structure can have an impact on the spreading dynamics (Vermeer et al. 2018), limits the alignment that can be expected among the two models. Based on these difference we consider the chance that models will produce outputs that are numerically identical essentially non-existent, and the chance that results distributionally align slim at best. Consequently, it is most appropriate to aim for a replication standard of relational alignment.

After having aligned the intervention module based on the source code, we ran a set of simulations with varying conditions for qualifying for PrEP, replicating the experiment conducted in the original study (see Table 1). Note that in these simulations we know that the spreading behavior module is aligned, and that partnership selection module is not strictly aligned.

The results of these simulations (Table 1, Figure 6) revealed three critical things. First, our results show that we are quite far from distributional alignment. A comparison of the 10 means yields a z-value of 8.6, with a p-value \(< 10^{-17}\). Second, there is a very strong correlation across implementations (Figure 6), in fact the correlation between the average incidence in the 10 scenarios, across EpiModel and the NHS model was 0.98. And third, in addressing the question of whether the orderings of the 10 EpiModel and 10 NHS model interventions on incidence are similar, we find that 92% of all pairwise orderings in intervention effectiveness were consistent across implementations. We note that this percent could well be improved if the EpiModel means had higher precision like those we calculated in the NHS model (Table 1 ).

| Criteria code | Interpretation of the CDC guideline for prescribing PrEP | EpiModel | NHS model |

| Baseline | 25.9 | 25.3 | |

| Condition 1: Unprotected Anal Intercourse (UAI) in a monogamous partnerships with unknown HIV status | |||

| 1a | A partnership is monogamous when it is the only tie for both partners | 24.5 | 23.1 |

| 1b | A partnership is monogamous when it is the only tie for at least one of the partners | 23.6 | 21.6 |

| Condition 2: Unprotected Anal Intercourse (UAI) outside a monogamous partnership | |||

| 2a | Any tie beyond the first classifies as ‘outside a monogamous partnership’. | 23.3 | 20 |

| 2b | All ties other than the main tie is classify as ‘outside a monogamous partnership’. | 21.1 | 15.7 |

| Condition 3: Anal intercourse (AI) in any known-serodiscordant partnership | |||

| 3a | Any AI will quality the person | 23 | 20.8 |

| 3b | Only Unprotected AI will qualify the person | 23.5 | 21.7 |

| Combinations of conditions | |||

| J1 | Criteria 1a, 2a and 3a all quality an individual | 20.6 | 16.2 |

| J2 | Criteria 1b, 2b and 3a all quality an individual | 19.2 | 14.8 |

| J3 | Criteria 1b, 2b and 3b all quality an individual | 19.4 | 14.9 |

Discussion