Introduction

Recent years have seen the emergence of agent-based simulation as a powerful tool, especially in the social sciences, for studying situations that involve human actors. In this context, the community now speaks of social simulations (Gilbert & Troitzsch 2005), in which social agents simulate humans. These social agents are expected to reproduce human behavior in the situation studied, which means making complex decisions while taking psychological and social notions into account.

Achieving believable agents requires adding social features to agents’ behavior (Sun 2006). This view is also supported by the EROS principle (Enhancing Realism Of Simulation) (Jager 2017), which stands in opposition to the KISS principle (Keep It Simple, Stupid) (Axelrod 1997). According to the EROS principle, integrating psychological theories into the definition of agent behavior favors believable results when simulating a real-life situation involving human beings.

To tackle these issues of realism, several architectures, each covering different aspects of human behavior, have been proposed: researchers have moved from reflexive agents to cognitive agents, emotional agents, agents with a personality, and normative systems. However, these architectures are still rarely used in the social simulation community. Indeed, researchers from this community face a wide variety of agent architectures, some of which are not implemented, are not available on widely used simulation platforms, or are domain-dependent. In addition, developing a detailed understanding of the differences between these architectures, which integrate various social features, is a difficult task. Finally, advanced programming skills are required to use these existing behavior architectures.

The BEN (Behavior with Emotions and Norms) architecture proposed in this paper aims to address these issues. It enables modelers to create agents simulating human actors with social dimensions such as cognition, emotions, emotional contagion, personality, social relations and norms. In fact, adding social features can not only help create agents with a believable behavior, but also provide a high-level explanation of such behavior (Jager 2017). Moreover, as the BEN architecture is modular, few constraints are imposed upon users, who can enable or disable each social feature depending on the case studied. Finally, BEN has been implemented in the GAMA modeling and simulation platform (Taillandier et al. 2018), which has grown in popularity over the last few years, thus favoring its usability and its diffusion among a large audience.

Section 2 of the paper discusses the other agent architectures proposed in recent years. Section 3 outlines the formalism used to represent the social features and the way they were redefined to fit into the architecture. The BEN architecture is presented and discussed in detail in Section 4. Section 5 illustrates the use of BEN by presenting the case of the evacuation of a nightclub in Brazil. Finally, Section 6 serves as a conclusion.

Related Works

As creating believable agents is a key issue in social simulations, several works have already proposed agent architectures meant to reproduce human behavior. Using architectures helps unify modeling practices. Without a precise architecture, each modeler creates a behavior from scratch, using different theories and formalisms, which makes it difficult to compare two models of the same situation.

In the context of credible agents in social simulations, adding cognition to the agent’s capabilities is a first step towards more complex agents, able to make decisions that are not based solely on their last perception (Adam & Gaudou 2016; Balke & Gilbert 2014). Thus, a cognitive engine should form the basis of any domain-independent social architecture.

Cognitive architectures

Different approaches have been proposed to tackle this issue. Architectures such as SOAR (Laird et al. 1987), Clarion (Sun et al. 2001) or ACT-R (Byrne & Anderson 1998) rely on neurological and psychological research. They distinguish between the agent’s body and mind, with the body serving as an interface between the environment and the reasoning engine, represented by the mind. This reasoning engine is composed of multiple parts, enabling an agent to perform short-term actions while taking its long-term goals into account. However, to our knowledge, these architectures have not been used in real-life scenarios featuring hundreds of social agents.

Another approach to cognition is proposed by the BDI (Belief Desire Intention) paradigm (Bratman 1987). Formalized with modal logic (Cohen & Levesque 1990), it defines the concepts of beliefs, desires and intentions, as well as the logical links used to choose a plan of action that addresses the agent’s intentions. This paradigm has been translated into PRS (Procedural Reasoning System) (Myers 1997), which manipulates high-level concepts with a set of rules that determine the actions of an agent depending on its perceptions and its current state. This approach has subsequently been applied in agent architectures such as JACK (Howden et al. 2001) or JADEX (Pokahr et al. 2005). These architectures based on the BDI paradigm are called BDI architectures.

Social architectures

BDI architectures have been extended to integrate social features. For instance, eBDI (Jiang et al. 2007) was built to create emotional agents using BDI cognition. It is based on the cognitive appraisal theory of emotions (Frijda et al. 1989; Smith & Lazarus 1990; Scherer et al. 1984; Ortony et al. 1988), which states that emotions are a valenced response to the cognitive appraisal of the environment. Other architectures have been proposed using the same theoretical background, such as EMA (Gratch & Marsella 2004), FAtiMA (Dias et al. 2014) or DETT (Van Dyke Parunak et al. 2006), with different implementations and interpretations of the psychological research. For example, EMA is based on the appraisal theory described by Smith & Lazarus (1990), DETT relies on the OCC model (Ortony et al. 1988), and FAtiMA is a modular framework for testing various appraisal approaches. Emotional concepts have also been widely used in social simulations without being supported by an architecture (Bourgais et al. 2018).

BDI architectures have also been extended to integrate norms and obligations, leading to the BOID (Belief Obligation Intention Desire) architecture (Broersen et al. 2001). Obligations are added to the classical BDI, challenging the agent’s desires. BOID has been extended into BRIDGE (Dignum et al. 2008) to include a "social awareness" based on social norms. Another approach consists of creating normative systems that do not need any cognition, as is the case with EMIL-A (Andrighetto et al. 2007). This model describes all the possible states of the agent in terms of norms which may be fulfilled or violated, leading an agent to make decisions in conformity with the norms of the system. This approach is extended by the NoA architecture (Kollingbaum 2005). However, to our knowledge, these last two works remain purely theoretical.

Other works have integrated emotional contagion (Hatfield et al. 1993) into simulation, leading to the ASCRIBE (Agent-based Social Contagion Regarding Intentions, Beliefs and Emotions) model (Bosse et al. 2009), which describes the dynamic evolution of emotions in a population. This model was improved to take socio-cultural parameters into account in evacuation simulations and became the IMPACT model (Wal et al. 2017). The concepts developed by the authors involve computing the intensity of a mental state (an emotion, a belief or an intention) by taking into account the intensities of this mental state in other agents.

With the same idea of combining social features, different architectures have been proposed in simulation by mixing cognition either with emotions, personality and social relations (Ochs et al. 2009) or with emotions, emotional contagion and personality (Lhommet et al. 2011). These approaches use the OCEAN model (McCrae & John 1992) of personality to link the integrated social features together.

Commentary and synthesis

There are two main problems when trying to simulate real case scenarios featuring people: handling a large number of agents in a reasonable computation time and creating a credible behavior. The HiDAC architecture (Pelechano et al. 2007) takes into account psychological notions such as stress to simulate a dense crowd evacuating a building. This work offers good results in terms of computation time but is only applied to the specific case of indoor evacuations. The MASSIS architecture (Pax & Pavón 2017) proposes another approach to the same problem, creating the agent’s behavior with a set of plans triggered by perceptions. However, this work is also dependent on its case study and is not designed to be easily adapted to social simulations in general.

The EROS principle (Jager 2017) fosters the use of psychological theories in the definition of social agents to gain credibility and explainability from the obtained results. This section has shown that various efforts have been made to create general decision-making architectures integrating cognitive, affective, and social dimensions based on psychological theories. However, none of them covers all these notions at the same time, which would be useful for a modeler with limited programming skills trying to find the dimensions best suited to their own case.

The BEN architecture aims to fill this void by integrating cognition, emotions, emotional contagion, management of norms and social relations into a single agent architecture for social simulation. A personality feature helps combine these dimensions, so that an agent makes decisions based on its own perception of the world and its own overall mental state. All these dimensions enable a modeler to define an expressive and complex agent behavior while being able to explain the observations in common language (e.g., "this agent is doing this action because it feels fear about something and, at the same time, has a social relation with this other agent").

Besides, BEN is independent of any specific application and is built to be modular. Thus, a modeler does not have to use all the components if they are not needed. The objective is to be as accessible as possible to the whole social simulation community. This means compromises have been made to enable the computation of thousands of agents in a reasonable time, while ensuring a decision-making process that takes into account all the elements present in the agent’s mental state.

Representing Social Features

The BEN architecture features notions such as cognition, personality, emotions, emotional contagion, norms and social relations to describe the agents’ behavior in the context of a social simulation. In order to link these features together in the architecture, each of these components has to be represented using a formalism that ensures its compatibility with the others. In this section, the formalization of each social feature is described in detail.

The main part of BEN is the agent’s cognition. A cognitive agent may reason over a set of perceptions of its environment and a set of previously acquired knowledge. In BEN, this environment is represented through the concept of predicates.

A predicate represents information about the world. It may represent a situation, an event or an action, depending on the context. As the goal is to create behaviors for agents in a social environment, that is to say, to take into account in the decision-making process both facts from the environment and actions performed by other agents, a piece of information \(P\) caused by an agent \(j\) with an associated list of values \(V\) is represented by \(P_{j}(V)\). A predicate \(P\) represents information caused by any agent, or by none, with no particular value associated. The opposite of a predicate \(P\) is defined as not \(P\).
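To make the notation concrete, here is a minimal Python sketch of a predicate \(P_{j}(V)\) and its negation. It is only an illustration: the class, its fields and the wildcard matching rule (an unspecified agent or an empty value list matches anything) are assumptions of this sketch, not BEN's implementation in GAMA.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Predicate:
    """Illustrative sketch of a predicate P_j(V); names are hypothetical."""
    name: str                      # P: what the information is about
    agent: Optional[str] = None    # j: agent causing it (None = any agent or none)
    values: Tuple = ()             # V: associated values (empty = no particular value)
    negated: bool = False          # True for "not P"

    def negate(self) -> "Predicate":
        # The opposite of P is "not P"; negating twice gives P back.
        return Predicate(self.name, self.agent, self.values, not self.negated)

    def matches(self, other: "Predicate") -> bool:
        # Assumed rule: an unspecified agent or an empty value list acts as a wildcard.
        if self.name != other.name or self.negated != other.negated:
            return False
        agent_ok = self.agent is None or other.agent is None or self.agent == other.agent
        values_ok = not self.values or not other.values or self.values == other.values
        return agent_ok and values_ok
```

With this rule, the generic predicate `Predicate("fire")` matches a fire caused by any agent, but never its own negation.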

The rest of the section introduces how notions related to cognition, personality, emotions, emotional contagion, norms and social relations are represented in BEN.

Cognition about the environment

Reasoning with cognitive mental states

Through the architecture, an agent manipulates cognitive mental states to make decisions; they constitute the agent’s mind. A cognitive mental state possessed by agent \(i\) is represented by \(M_{i}(PMEm, Val, Li)\), with the following meaning:

- M: the modality indicating the type of the cognitive mental state (e.g. a belief, a desire, etc.).

- PMEm: the object to which the cognitive mental state relates. It can be a predicate, another cognitive mental state, or an emotion.

- Val: a real value whose meaning depends on the modality.

- Li: a lifetime value indicating the time before the cognitive mental state is forgotten.

A cognitive mental state with no particular value and no particular lifetime is written \(M_{i}(PMEm)\). \(Val[M_{i}(PMEm)]\) represents the value attached to a particular cognitive mental state and \(Li[M_{i}(PMEm)]\) represents its lifetime.

The cognitive part of BEN is based on the BDI paradigm (Bratman 1987), in which agents have a belief base, a desire base and an intention base to store the cognitive mental states about the world. In order to connect cognition with other social features, the architecture defines a total of six different modalities, as follows:

- Belief: represents what the agent knows about the world. The value attached to this mental state indicates the strength of the belief.

- Uncertainty: represents uncertain information about the world. The value attached to this mental state indicates the importance of the uncertainty.

- Desire: represents a state of the world the agent wants to achieve. The value attached to this mental state indicates the priority of the desire.

- Intention: represents a state of the world the agent is committed to achieve. The value attached to this mental state indicates the priority of the intention.

- Ideal: represents information socially judged by the agent. The value attached to this mental state indicates the praiseworthiness value of the ideal about P. It can be positive (the ideal about P is praiseworthy) or negative (the ideal about P is blameworthy).

- Obligation: represents a state of the world the agent has to achieve. The value attached to this mental state indicates the priority of the obligation.
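The following Python sketch shows one possible in-memory form of \(M_{i}(PMEm, Val, Li)\) with the six modalities listed above. The forgetting rule (decrement the lifetime each step and drop the state when it runs out), the default value of 1.0 and all names are assumptions made for illustration only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, List, Optional

class Modality(Enum):
    BELIEF = "belief"
    UNCERTAINTY = "uncertainty"
    DESIRE = "desire"
    INTENTION = "intention"
    IDEAL = "ideal"
    OBLIGATION = "obligation"

@dataclass
class MentalState:
    """Hypothetical sketch of M_i(PMEm, Val, Li)."""
    modality: Modality
    about: Any                      # PMEm: a predicate, another mental state, or an emotion
    value: float = 1.0              # Val: strength, importance, priority or praiseworthiness (assumed default)
    lifetime: Optional[int] = None  # Li: steps before being forgotten (None = never forgotten)

def tick(base: List[MentalState]) -> List[MentalState]:
    """Assumed forgetting rule: decrement lifetimes and drop states that expire."""
    kept = []
    for m in base:
        if m.lifetime is None:
            kept.append(m)
        elif m.lifetime > 1:
            m.lifetime -= 1
            kept.append(m)
    return kept

def strongest(base: List[MentalState], modality: Modality) -> Optional[MentalState]:
    """Return the state of the given modality with the highest value (e.g. the top-priority desire)."""
    candidates = [m for m in base if m.modality is modality]
    return max(candidates, key=lambda m: m.value, default=None)
```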

Acting on the world through plans

To act upon the world according to its intentions, an agent needs a plan of actions, that is to say a set of behaviors executed in a certain context in response to an intention. In BEN, a plan owned by agent \(i\) is represented by \(Pl_{i}(Int, Cont, Pr, B)\) with:

- Pl: the name of the plan.

- Int: the intention triggering this plan.

- Cont: the context in which this plan may be applied.

- Pr: a priority value used to choose between multiple plans relevant at the same time. If two plans are relevant with the same priority, one is chosen at random.

- B: the behavior, as a sequence of instructions, to execute if the plan is chosen by the agent.

The context of a plan is a particular state of the world in which the agent should consider this plan when making a decision. This makes it possible to define multiple plans answering the same intention but activated in different contexts.
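Plan selection as described above (filter by intention and context, keep the highest priority, break ties at random) can be sketched in Python as follows. Representing the context as a predicate over a dictionary of beliefs and the behavior as a plain callable are simplifications assumed for this example.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Plan:
    """Hypothetical sketch of Pl_i(Int, Cont, Pr, B)."""
    name: str
    intention: str                     # Int: name of the triggering intention
    context: Callable[[dict], bool]    # Cont: test on the agent's beliefs (assumed dict)
    priority: float                    # Pr
    behavior: Callable[[], str]        # B: reduced to a callable in this sketch

def select_plan(plans: List[Plan], intention: str, beliefs: dict,
                rng: random.Random) -> Optional[Plan]:
    # Keep only plans answering this intention whose context currently holds.
    applicable = [p for p in plans if p.intention == intention and p.context(beliefs)]
    if not applicable:
        return None
    best = max(p.priority for p in applicable)
    # Ties on the highest priority are broken at random.
    return rng.choice([p for p in applicable if p.priority == best])
```

Passing an explicit `random.Random` instance keeps runs reproducible, which is often convenient when comparing simulation replications.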

Personality

In order to define personality traits, BEN relies on the OCEAN model (McCrae & John 1992), also known as the big five factors model. In the BEN architecture, this model is represented through a vector of five values between 0 and 1, with 0.5 as the neutral value. The five personality traits are:

- O: represents the openness of someone. A value of 0 stands for someone narrow-minded, a value of 1 stands for someone open-minded.

- C: represents the conscientiousness of someone. A value of 0 stands for someone impulsive, a value of 1 stands for someone who acts with preparation.

- E: represents the extroversion of someone. A value of 0 stands for someone shy, a value of 1 stands for someone extroverted.

- A: represents the agreeableness of someone. A value of 0 stands for someone hostile, a value of 1 stands for someone friendly.

- N: represents the degree of control someone has over their emotions, called neuroticism. A value of 0 stands for someone neurotic, a value of 1 stands for someone calm.
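A minimal Python sketch of the personality vector, assuming an immutable record that defaults every trait to the neutral value 0.5 and rejects values outside \([0, 1]\). The class name is hypothetical, and N follows the convention above (1 means calm).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Personality:
    """Hypothetical OCEAN vector: five traits in [0, 1], 0.5 being neutral.
    N follows the convention above: 0 = neurotic, 1 = calm."""
    O: float = 0.5
    C: float = 0.5
    E: float = 0.5
    A: float = 0.5
    N: float = 0.5

    def __post_init__(self):
        # Reject any trait outside [0, 1].
        for trait in ("O", "C", "E", "A", "N"):
            v = getattr(self, trait)
            if not 0.0 <= v <= 1.0:
                raise ValueError(f"{trait} must be in [0, 1], got {v}")
```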

Emotions

Representing emotions

In BEN, the definition of emotions is based on the OCC theory of emotions (Ortony et al. 1988). According to this theory, an emotion is a valenced response to the appraisal of a situation. Once again, as the agents are considered within a society and should act accordingly, the definition of an emotion needs to contain the agent causing it. Thus, an emotion is represented by \(Em_{i}(P, Ag, I, De)\) with the following elements:

- \(Em_{i}\): the name of the emotion felt by agent \(i\).

- P: the predicate representing the fact about which the emotion is expressed.

- Ag: the agent causing the emotion.

- I: the intensity of the emotion.

- De: the decay subtracted from the emotion’s intensity at each time step.

An emotion with any intensity and any decay is represented by \(Em_{i}(P, Ag)\), and an emotion caused by any agent is written \(Em_{i}(P)\). \(I[Em_{i}(P, Ag)]\) stands for the intensity of a particular emotion and \(De[Em_{i}(P, Ag)]\) stands for its decay value.
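The role of the decay can be illustrated with a short Python sketch of \(Em_{i}(P, Ag, I, De)\) in which each step subtracts De from I. Removing an emotion once its intensity reaches zero is an assumption of this sketch, as are the names used.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Emotion:
    """Hypothetical sketch of Em_i(P, Ag, I, De)."""
    name: str              # e.g. "fear"
    about: str             # P: predicate the emotion is about
    agent: Optional[str]   # Ag: agent causing it (None = any agent)
    intensity: float       # I
    decay: float           # De: subtracted from I at each time step

def decay_step(emotions: List[Emotion]) -> List[Emotion]:
    """Apply one time step of decay; emotions whose intensity drops to 0 or below are removed (assumed)."""
    survivors = []
    for em in emotions:
        em.intensity -= em.decay
        if em.intensity > 0:
            survivors.append(em)
    return survivors
```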

Emotional contagion

Emotional contagion is the process whereby the emotions of an agent are influenced by the perceived emotions of nearby agents (Hatfield et al. 1993). This influence can lead to the perceived emotion being copied or to the creation of a new emotion. In BEN, the formalism of this process is based on a simplified version of the ASCRIBE model (Bosse et al. 2009), represented by \((Em_{i}, Em_{j}, Ch_{i}, R_{j}, Th_{j})\) for a contagion from agent \(i\) to agent \(j\), with the following meanings:

- \(Em_{i}\): the emotion of \(i\) that triggers the contagion if it is perceived by \(j\).

- \(Em_{j}\): the emotion created in \(j\). It can be a copy of \(Em_{i}\) (with different intensity and decay values) or a new emotion.

- \(Ch_{i}\): the charisma value of \(i\), indicating its power to express its emotions.

- \(R_{j}\): the receptivity value of \(j\), expressing its capacity to be influenced by other agents.

- \(Th_{j}\): a threshold value. The contagion is executed only if \(Ch_{i} \times R_{j}\) is greater than or equal to this threshold.

Norms and obligations

The definition of a normative system in BEN is based on the theoretical definition provided by Tuomela (1995), the BOID architecture (Broersen et al. 2001) and the framework proposed by López y López et al. (2006). A norm is thus considered to be a behavior, active under certain conditions, that an agent may choose to obey in order to satisfy one of its intentions. In BEN, only the concepts of social norms and obligations are encompassed under the notion of norms, as described below. A social norm is a convention adopted implicitly by a social group, while an obligation is an explicit rule imposed by an authority. In the BEN architecture, a norm possessed by agent \(i\) is represented by \(No_{i}(Int, Cont, Ob, Pr, B, Vi)\) with:

- \(No_{i}\): the name of the norm owned by agent \(i\).

- Int: the intention which triggers this norm.

- Cont: the context in which this norm can be applied.

- Ob: an obedience value that serves as a threshold to determine whether or not the norm is applied depending on the agent’s obedience value.

- Pr: a priority value used to choose between multiple norms applicable at the same time.

- B: the behavior, as a sequence of instructions, to execute if the norm is followed by the agent.

- Vi: a violation time indicating how long the norm is considered violated once it has been violated.
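The Python sketch below shows how the obedience threshold Ob and the priority Pr could interact when an agent selects a norm for an intention. It assumes that a norm is obeyed when the agent's obedience is at least Ob (mirroring the rule stated later for laws) and omits the behavior B; all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Norm:
    """Hypothetical sketch of No_i(Int, Cont, Ob, Pr, B, Vi); the behavior B is omitted."""
    name: str
    intention: str                    # Int: intention that triggers the norm
    context: Callable[[dict], bool]   # Cont: test on the agent's beliefs
    obedience_threshold: float        # Ob
    priority: float                   # Pr
    violation_time: int               # Vi: steps during which a violation is remembered

def applicable_norms(norms: List[Norm], intention: str, beliefs: dict,
                     obedience: float) -> Tuple[List[Norm], List[Norm]]:
    """Split the norms whose intention and context match into (obeyed, violated).
    Assumption: a norm is obeyed when obedience >= Ob."""
    obeyed, violated = [], []
    for n in norms:
        if n.intention == intention and n.context(beliefs):
            (obeyed if obedience >= n.obedience_threshold else violated).append(n)
    return obeyed, violated

def choose_norm(norms: List[Norm], intention: str, beliefs: dict,
                obedience: float) -> Optional[Norm]:
    # Among the obeyed norms, pick the one with the highest priority (None if there is none).
    obeyed, _ = applicable_norms(norms, intention, beliefs, obedience)
    return max(obeyed, key=lambda n: n.priority, default=None)
```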

This definition of norms fully covers the concept of social norms, but it is not enough to represent an obligation. To do so, the concept of laws is introduced. A law is an explicit rule, imposed upon the agent, that creates an obligation, as a cognitive mental state, under certain conditions. Like a norm, a law may be violated. A law is represented by \(La(Cont, Obl, Ob)\) with:

- La: the name of the law.

- Cont: the context in which this law can be applied.

- Obl: the obligation created by the law.

- Ob: an obedience value that serves as a threshold to determine whether or not the law is applied, depending on the agent’s obedience value.

Finally, as norms and laws may be violated, the architecture needs an enforcement system to apply sanctions against agents violating norms or laws. A sanction is a sequence of instructions triggered by enforcement. Enforcement performed by agent \(i\) on agent \(j\) is represented by \((Me_{j}, Sa_{i}, Re_{i})\) with the following elements:

- \(Me_{j}\): the modality of agent \(j\) that needs to be enforced. It can be a norm, a law or an obligation (an obligation is violated when the agent applied the law but did not execute the norm corresponding to the resulting obligation).

- \(Sa_{i}\): the sanction agent \(i\) applies if the enforced modality is violated.

- \(Re_{i}\): the sanction agent \(i\) applies if the enforced modality is fulfilled, called the reward.

How an enforcement works depends on its modality. Enforcing a norm means checking, when its context was fulfilled, whether the enforced agent applied it. Enforcing a law means checking, when its context was fulfilled, whether it created the given obligation in the enforced agent. Finally, the enforcement of obligations enables modelers to create systems where an agent may fulfil a law (the law is "accepted" by the agent) while not following the corresponding obligation (i.e., the actions it implies).

Social relations

As people create social relations when living with others and change their behavior based on these relationships, the BEN architecture makes it possible to describe social relations and use them in agents’ behavior. Based on the research carried out by Svennevig (2000), a social relation is described by a finite set of variables. Svennevig identifies a minimal set of four variables: liking, dominance, solidarity and familiarity. A trust variable is added to interact with the enforcement of social norms. Therefore, in BEN, a social relation between agent \(i\) and agent \(j\) is expressed as \(R_{i,j}(L, D, S, F, T)\) with the following elements:

- R: the identifier of the social relation.

- L: a real value between -1 and 1 representing the degree of liking with the agent concerned by the link. A value of -1 indicates that agent \(j\) is hated, a value of 1 indicates that agent \(j\) is liked.

- D: a real value between -1 and 1 representing the degree of power exerted on the agent concerned by the link. A value of -1 indicates that agent \(j\) is dominating, a value of 1 indicates that agent \(j\) is dominated.

- S: a real value between 0 and 1 representing the degree of solidarity with the agent concerned by the link. A value of 0 indicates that there is no solidarity with agent \(j\), a value of 1 indicates a complete solidarity with agent \(j\).

- F: a real value between 0 and 1 representing the degree of familiarity with the agent concerned by the link. A value of 0 indicates that there is no familiarity with agent \(j\), a value of 1 indicates a complete familiarity with agent \(j\).

- T: a real value between -1 and 1 representing the degree of trust in agent \(j\). A value of -1 indicates doubts about agent \(j\), while a value of 1 indicates complete trust in agent \(j\). The trust value does not evolve automatically in accordance with emotions.

With this definition, a social relation is not necessarily symmetric, which means \(R_{i,j}(L, D, S, F, T)\) is not by definition equal to \(R_{j,i}(L, D, S, F, T)\). \(L[R_{i,j}]\) stands for the liking value of the social relation between agent \(i\) and agent \(j\), \(D[R_{i,j}]\) for its dominance value, \(S[R_{i,j}]\) for its solidarity value, \(F[R_{i,j}]\) for its familiarity value and \(T[R_{i,j}]\) for its trust value.
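Asymmetry is easy to capture if relations are keyed by the ordered pair \((i, j)\). The Python sketch below does so and checks each component against the ranges given above; the neutral default of 0.0 for every component matches the default used when a relation is created during perception, while the class and dictionary names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class SocialRelation:
    """Hypothetical sketch of R_ij(L, D, S, F, T); neutral (all 0.0) by default."""
    liking: float = 0.0       # L in [-1, 1]
    dominance: float = 0.0    # D in [-1, 1]
    solidarity: float = 0.0   # S in [0, 1]
    familiarity: float = 0.0  # F in [0, 1]
    trust: float = 0.0        # T in [-1, 1]

    def __post_init__(self):
        bounds = {"liking": (-1, 1), "dominance": (-1, 1), "solidarity": (0, 1),
                  "familiarity": (0, 1), "trust": (-1, 1)}
        for attr, (low, high) in bounds.items():
            if not low <= getattr(self, attr) <= high:
                raise ValueError(f"{attr} must be in [{low}, {high}]")

# Keyed by the ordered pair (i, j): relations[(i, j)] and relations[(j, i)] are independent.
relations: Dict[Tuple[str, str], SocialRelation] = {}
relations[("alice", "bob")] = SocialRelation(liking=0.8, familiarity=0.9)
relations[("bob", "alice")] = SocialRelation(liking=-0.2)
```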

Integrating Social Features into an Agent Architecture

The BEN architecture, represented in Figure 1, provides cognition, emotions, emotional contagion, social relations, personality and norms to agents for social simulation. All these features evolve together during the simulation in order to give a dynamic behavior to the agent, which may react to a change in its environment.

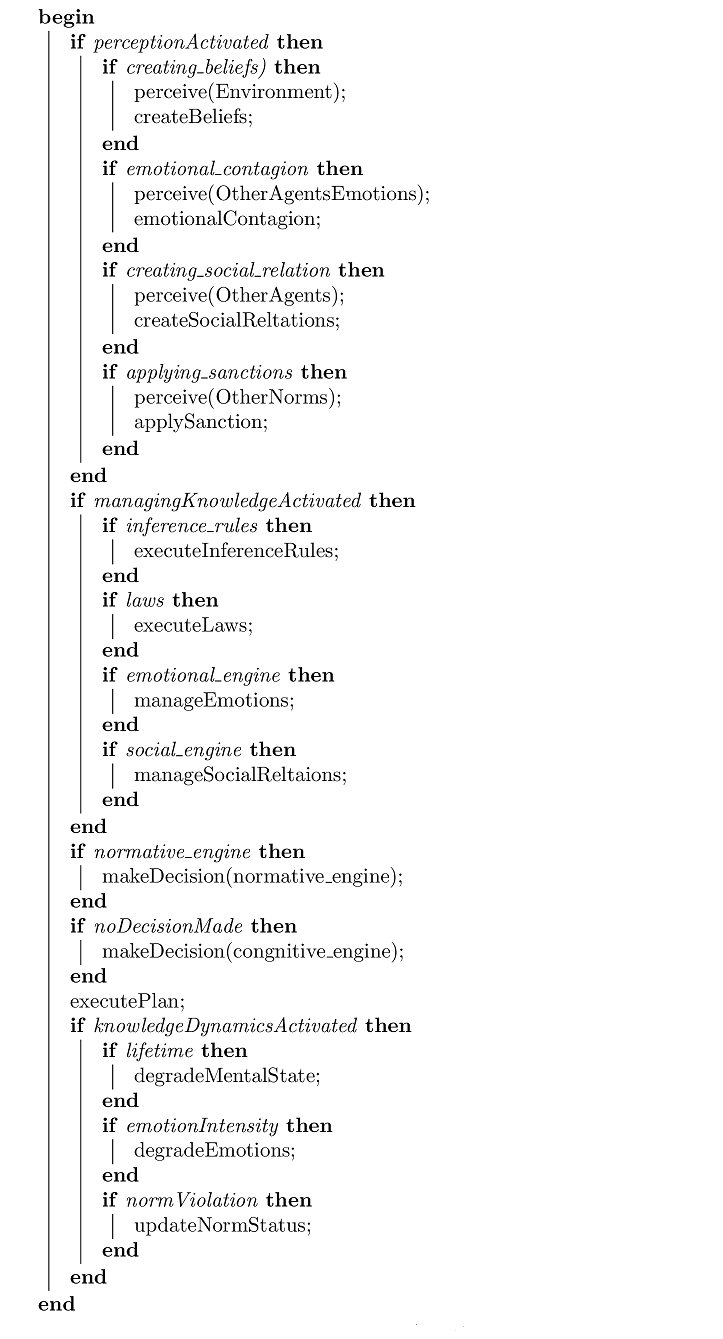

The architecture, whose execution is detailed in Algorithm 1, is composed of four main parts connected to the agent’s knowledge bases and built on top of the agent’s personality. Each part is made up of processes that are either computed automatically (in blue) or need to be defined manually by the modeler (in pink). Some of these processes are mandatory (solid line) and others are optional (dotted line). This modularity enables each modeler to use only the components relevant to the situation studied, without heavy and useless computations.

In this section, each part of the architecture is explained; each dynamic concept developed hereafter is based on the static representation proposed in Section 3.

Knowledge of the agent

The agent’s knowledge, which constitutes the core of the architecture, is composed of knowledge bases and variables.

The cognitive bases store all cognitive mental states of the agent as outlined in Section 3.1.1; the emotional base stores emotions; the social relations base contains all the social relations the agent has with other agents; and the normative base contains all the norms of the agent as exposed in Section 3.4. The agent also has a base that stores the sanctions and a base for the action plans triggered by the cognitive engine. Finally, a personality based on the formalism presented in Section 3.2 is used by the overall architecture for a global parameterization. These last three elements lie outside the central block because the architecture’s processes cannot modify them during the simulation.

In addition to these knowledge bases, the agent also has variables related to some of the social features. The idea behind the BEN architecture is to connect these variables to the personality module and in particular to the five dimensions of the OCEAN model in order to reduce the number of parameters which need to be entered by the user. These additional variables are the probability to keep the current plan, the probability to keep the current intention, a charisma value linked to the emotional contagion process, an emotional receptivity value linked to the emotional contagion, and an obedience value used by the normative engine.

For cognition, the agent has two parameters controlling the random removal of the current plan or the current intention, which lets it check whether a better plan or intention exists in the current context; they are expressed as the probabilities of keeping the current plan and the current intention. These two values are connected to the conscientiousness component (C) of the OCEAN model, as it describes the tendency of the agent to prepare its actions (high value) or to act impulsively (low value).

| \[\text{Probability of keeping plans} = \sqrt{C}\] | \[(1)\] |

| \[\text{Probability of keeping intentions} = \sqrt{C}\] | \[(2)\] |

For emotional contagion, the formalism proposed in Section 3.3.2 requires charisma (Ch) and emotional receptivity (R) to be defined for each agent. In BEN, charisma is related to the capacity for expression, which corresponds to the extroversion (E) of the OCEAN model, while emotional receptivity is related to the capacity to control one’s emotions, which is expressed by the neuroticism value (N) of OCEAN.

| \[Ch = E\] | \[(3)\] |

| \[R = 1-N\] | \[(4)\] |

For norms, the agent has an obedience value between 0 and 1, which indicates its tendency to follow laws, obligations and norms. According to psychological research that tried to explain the behavior of participants in a re-enactment of Milgram’s experiment (Bègue et al. 2015), obedience is linked to conscientiousness and agreeableness, which gives the following equation:

| \[obedience = \sqrt{\frac{C+A}{2}}\] | \[(5)\] |
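Equations (1) to (5) can be transcribed directly. The Python function below is a sketch of that transcription (the function name and dictionary keys are hypothetical); note that openness O does not appear in any of these five equations.

```python
import math

def derived_parameters(O: float, C: float, E: float, A: float, N: float) -> dict:
    """Transcription of Equations (1)-(5); traits are in [0, 1], N = 1 meaning calm.
    O is accepted for completeness but unused by these equations."""
    return {
        "p_keep_plan": math.sqrt(C),            # (1)
        "p_keep_intention": math.sqrt(C),       # (2)
        "charisma": E,                          # (3)
        "receptivity": 1 - N,                   # (4)
        "obedience": math.sqrt((C + A) / 2),    # (5)
    }
```

For a fully neutral personality (all traits at 0.5), this gives charisma and receptivity of 0.5 each, so \(Ch_{i} \times R_{j} = 0.25\), exactly the default contagion threshold of 0.25 used in the perception step.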

Following the same idea, all the parameters required by each process are linked to the OCEAN model, as explained in the rest of this section.

Perceiving the environment

The first step of BEN, corresponding to module 1 in Figure 1, is the perception of the environment. This module connects the environment to the knowledge of the agent, transforming information from the world into cognitive mental states, emotions or social links; it is also used to apply sanctions during the enforcement of other agents’ norms.

The first process of this perception step adds beliefs about the world. During this phase, information from the environment is transformed into predicates, which are included in beliefs or uncertainties and then added to the agent’s knowledge bases. This process enables the agent to update its knowledge about the world. From the modeler’s point of view, it is only necessary to specify which information is transformed into which predicate. The addition of a belief \(Belief_{A}(X)\) triggers multiple belief-revision processes: it removes \(Belief_{A}(not X)\); it removes \(Intention_{A}(X)\); it removes \(Desire_{A}(X)\) if \(Intention_{A}(X)\) has just been removed; it removes \(Uncertainty_{A}(X)\) or \(Uncertainty_{A}(not X)\); and it removes \(Obligation_{A}(X)\).
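The belief-revision steps triggered by adding \(Belief_{A}(X)\) can be sketched in Python as below. To keep the example short, the agent's mind is assumed to be a set of `(modality, predicate)` pairs and predicates are plain strings, with the prefix `"not "` marking negation; neither choice reflects BEN's actual data structures.

```python
def negate(p: str) -> str:
    # Predicates are plain strings in this sketch; "not X" is the opposite of "X".
    return p[4:] if p.startswith("not ") else "not " + p

def add_belief(mind: set, x: str) -> None:
    """Apply the belief-revision steps triggered by adding Belief(x).
    `mind` is a set of (modality, predicate) pairs, a simplified representation."""
    mind.discard(("belief", negate(x)))
    intention_removed = ("intention", x) in mind
    mind.discard(("intention", x))
    if intention_removed:                  # the desire goes only if its intention was just removed
        mind.discard(("desire", x))
    mind.discard(("uncertainty", x))
    mind.discard(("uncertainty", negate(x)))
    mind.discard(("obligation", x))
    mind.add(("belief", x))
```

In the second test case below, a desire without a matching intention survives the new belief, which is the conditional step of the revision.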

Emotional contagion enables the agent to update its emotions according to the emotions of the other agents it perceives. Based on the formalism exposed in Section 3.3.2, the modeler has to indicate the emotion triggering the contagion, the emotion created in the perceiving agent and the threshold of this contagion; the charisma (Ch) and receptivity (R) values are automatically computed as explained in Section 4.1. The contagion from agent \(i\) to agent \(j\) occurs only if \(Ch_{i} \times R_{j}\) is greater than or equal to the threshold (Th), whose default value is 0.25. The indicated emotion is created only if the trigger emotion is present in the perceived agent. The intensity and the decay of the new emotion are given by Equation 6 and Equation 7.

| \[\left\lbrace \begin{array}{rrcl} \text{If } Em_{j}(P) \text{ already exists: } & I[Em_{j}(P)] &=& I[Em_{j}(P)] + I[Em_{i}(P)] \times Ch_{i} \times R_{j} \\ \text{else: } & I[Em_{j}(P)] &=& I[Em_{i}(P)] \times Ch_{i} \times R_{j} \end{array} \right. \] | \[(6)\] |

| \[\left\lbrace \begin{array}{rrcl} \text{If } Em_{j}(P) \text{ already exists: } & De[Em_{j}(P)] &=& \left\lbrace \begin{array}{rrcl} De[Em_{i}(P)] \text{ if } I[Em_{i}(P)] > I[Em_{j}(P)] \\ De[Em_{j}(P)] \text{ if } I[Em_{j}(P)] > I[Em_{i}(P)] \end{array} \right.\\ \text{else: } & De[Em_{j}(P)] &=& De[Em_{i}(P)] \end{array} \right. \] | \[(7)\] |
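Equations 6 and 7 can be sketched in Python as follows, for a single predicate \(P\). Two points are assumptions of this sketch: intensities in Equation 7 are compared before the update of Equation 6, and when both intensities are equal (a case the equation does not cover) the receiving agent keeps its own decay. The emotion record is reduced to intensity and decay.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Emotion:
    intensity: float
    decay: float

def contagion(trigger: Optional[Emotion], existing: Optional[Emotion],
              ch_i: float, r_j: float, threshold: float = 0.25) -> Optional[Emotion]:
    """Return j's emotion Em_j(P) after a contagion attempt from i.
    trigger: i's trigger emotion (None if i does not feel it);
    existing: j's current Em_j(P) (None if absent)."""
    if trigger is None or ch_i * r_j < threshold:
        return existing                          # no contagion
    gain = trigger.intensity * ch_i * r_j
    if existing is None:
        return Emotion(gain, trigger.decay)      # "else" branches of (6) and (7)
    # (7): take the decay of the more intense emotion, compared before the update (assumed);
    # on a tie, keep j's own decay (assumed).
    decay = trigger.decay if trigger.intensity > existing.intensity else existing.decay
    return Emotion(existing.intensity + gain, decay)   # first branch of (6)
```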

Next, the agent can create social relations with other perceived agents. The modeler indicates the initial value of each component of the social link, as explained in Section 3.5. By default, a neutral relation is created, with each value of the link set to 0.0. Social relations can also be defined before the simulation starts, to indicate that an agent has links with other agents from the beginning, such as links with friends or family members.

Finally, the agent may apply sanctions by enforcing the norms of the other agents it perceives. The modeler needs to indicate which modality is enforced, as well as the sanction and reward used in the process. Then, the agent checks whether the norm, obligation or law is violated, applied or not activated by the perceived agent. To do so, each agent must have access to other agents’ normative bases.

A norm is considered violated when its context is verified, and yet the agent chose another norm or another plan to execute because it decided to disobey. A law is considered violated when its context is verified, but the agent disobeyed it, not creating the corresponding obligation. Finally, an obligation is considered violated if the agent did not execute the corresponding norm because it chose to disobey.
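The classification of an observed norm, law or obligation, and the resulting enforcement, can be sketched as follows. All names are hypothetical, and triggering the reward when the norm is applied is an assumption: the text only states that the modeler provides a sanction and a reward.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    NOT_ACTIVATED = "not activated"
    APPLIED = "applied"
    VIOLATED = "violated"


def norm_status(activated: bool, disobeyed: bool) -> Status:
    """Status of a norm, law or obligation observed in another agent.

    activated: the norm or law context held, or the obligation existed.
    disobeyed: the agent chose to disobey (another plan or norm for a norm,
    no obligation created for a law, norm not executed for an obligation).
    """
    if not activated:
        return Status.NOT_ACTIVATED
    return Status.VIOLATED if disobeyed else Status.APPLIED


def enforce(activated: bool, disobeyed: bool,
            sanction: Callable[[], None], reward: Callable[[], None]) -> Status:
    """Apply the modeler's sanction to a violation and reward to an application."""
    status = norm_status(activated, disobeyed)
    if status is Status.VIOLATED:
        sanction()
    elif status is Status.APPLIED:
        reward()
    return status
```

In a model, the `sanction` callback could, for example, lower the trust value of the enforcer’s social link with the violator, which is the kind of manual trust update BEN allows during enforcement.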

Updating the knowledge

The second step of the architecture, corresponding to module 2 in Figure 1, consists of managing the agent’s knowledge: updating the knowledge bases according to the latest perceptions, for example by adding new desires, obligations or emotions, or by updating social relations.

Modelers have to use inference rules for this purpose. These rules are triggered by a new belief, a new uncertainty or a new emotion in a given context, and may add or remove any cognitive mental state or emotion indicated by the user. Using multiple inference rules lets the agent adapt its mind to the perceived situation without discarding all its older cognitive mental states or emotions, which supports a cognitive behavior. Inference rules also let the modeler manually link the various dimensions of an agent, for example by creating desires that depend on emotions, social relations and personality.
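The rule mechanism can be sketched as follows. This is a minimal Python illustration, not BEN’s GAMA implementation: mental state bases are reduced to sets of predicate names in a hypothetical `Bases` dictionary, and the example rules are two of the Kiss nightclub rules (a belief about smoke adds an uncertainty about fire; a belief about fire adds the desire to flee).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Bases = Dict[str, Set[str]]   # base name -> predicates (hypothetical representation)


@dataclass
class InferenceRule:
    trigger_base: str                                  # "beliefs", "uncertainties" or "emotions"
    trigger: str                                       # predicate whose arrival fires the rule
    context: Callable[[Bases], bool] = lambda bases: True
    add: Bases = field(default_factory=dict)           # states to add, per base
    remove: Bases = field(default_factory=dict)        # states to remove, per base


def apply_rules(bases: Bases, new_states: Bases, rules: List[InferenceRule]) -> None:
    """Fire each rule whose trigger is among the newly added states and whose
    context holds; only the listed states change, older ones are kept."""
    for rule in rules:
        if rule.trigger in new_states.get(rule.trigger_base, set()) and rule.context(bases):
            for base, preds in rule.remove.items():
                bases.setdefault(base, set()).difference_update(preds)
            for base, preds in rule.add.items():
                bases.setdefault(base, set()).update(preds)


rules = [InferenceRule("beliefs", "smoke", add={"uncertainties": {"fire"}}),
         InferenceRule("beliefs", "fire", add={"desires": {"flee"}})]
```

Only the rule whose trigger has just appeared fires, so perceiving smoke adds the uncertainty about fire but not yet the desire to flee.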

Following the same idea, modelers can define laws, based on the formalism defined in Section 3.5. Laws create obligations in a given context, based on the newest beliefs the agent has acquired through its perception or its inference rules. Modelers also need to indicate an obedience threshold: if the agent’s obedience value is below that threshold, the law is violated. If the law is activated and not violated, the obligation is added to the agent’s cognitive mental state bases. Defining laws makes it possible to create a behavior based on obligations imposed upon the agent.
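Law activation can be sketched in the same style. The `Law` record and the `apply_law` function are hypothetical; as in the text, the law is violated when the agent’s obedience is below the threshold, in which case no obligation is created. The example corresponds to the nightclub law in which a maximum smoke level leads to the obligation to follow exit signs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set

Bases = Dict[str, Set[str]]   # base name -> predicates (hypothetical representation)


@dataclass
class Law:
    trigger: str          # newly acquired belief that activates the law
    obligation: str       # obligation created when the law is obeyed
    threshold: float      # obedience threshold chosen by the modeler
    context: Callable[[Bases], bool] = lambda bases: True


def apply_law(law: Law, bases: Bases, new_beliefs: Set[str], obedience: float) -> str:
    """Return 'not activated', 'violated' or 'obeyed'; only an obeyed law adds its obligation."""
    if law.trigger not in new_beliefs or not law.context(bases):
        return "not activated"
    if obedience < law.threshold:
        return "violated"          # the obligation is not created
    bases.setdefault("obligations", set()).add(law.obligation)
    return "obeyed"


smoke_law = Law("smoke_max", "follow_exit_signs", threshold=0.6)
```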

The other two processes of this module are the automatic computations of the agent’s emotions and social relations. The following subsections indicate which models are used in the implementation of these processes.

Adding emotions automatically

BEN enables the agent to derive emotions from its cognitive mental states. This process is based on the OCC model (Ortony et al. 1988) and on its logical formalism (Adam 2007), which was proposed to integrate the OCC model into a BDI formalism.

According to the OCC theory, emotions can be split into three groups: emotions linked to events, emotions linked to people and actions performed by people, and emotions linked to objects. In BEN, as the focus is on relations between social agents, only the first two groups of emotions (emotions linked to events and people) are considered.

The twenty emotions defined in this paper can be divided into seven groups according to their relation with mental states: emotions about beliefs, emotions about uncertainties, combined emotions about uncertainties, emotions about other agents with a positive liking value, emotions about other agents with a negative liking value, emotions about ideals and combined emotions about ideals. All initial intensities and decay values are computed from the OCEAN model and the values attached to the mental states concerned.

The emotions about beliefs are joy and sadness and are expressed this way:

| Joy\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{j}\)) & Desire\(_{i}\)(P) |

| Sadness\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{j}\)) & Desire\(_{i}\)(not P) |

Their initial intensity is computed according to Equation 8, where N is the neuroticism component of the OCEAN model.

| \[I[Em_{i}(P)] = V[Belief_{i}(P)] \times V[Desire_{i}(P)] \times (1+(0.5-N)) \] | \[(8)\] |

The emotions about uncertainties are fear and hope and are defined this way:

| Hope\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Uncertainty\(_{i}\)(P\(_{j}\)) & Desire\(_{i}\)(P) |

| Fear\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Uncertainty\(_{i}\)(P\(_{j}\)) & Desire\(_{i}\)(not P) |

Their initial intensity is computed according to Equation 9.

| \[I[Em_{i}(P)] = V[Uncertainty_{i}(P)] \times V[Desire_{i}(P)] \times (1+(0.5-N)) \] | \[(9)\] |
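Equations 8 and 9 share the same form, so a single function can cover joy, sadness, hope and fear. The sketch below is a hypothetical illustration: `desires_p=False` stands for a desire about not P, and the result is truncated to [0, 1], as BEN does for every initial intensity.

```python
def clamp01(x: float) -> float:
    """Truncate a value to [0, 1]."""
    return max(0.0, min(1.0, x))


# (kind of mental state, is the desire about P rather than not P?) -> emotion
APPRAISAL = {
    ("belief", True): "joy", ("belief", False): "sadness",
    ("uncertainty", True): "hope", ("uncertainty", False): "fear",
}


def appraise(kind: str, desires_p: bool, v_state: float, v_desire: float,
             neuroticism: float) -> tuple:
    """Eq. 8 (beliefs) and Eq. 9 (uncertainties):
    I = V[state] * V[desire] * (1 + (0.5 - N)), truncated to [0, 1]."""
    name = APPRAISAL[(kind, desires_p)]
    return name, clamp01(v_state * v_desire * (1 + (0.5 - neuroticism)))
```

For example, an uncertainty of value 0.8 combined with a desire of priority 1.0 that P does not hold yields fear; its intensity is 0.8 for N = 0.5, 0.48 for N = 0.9, and the unclamped 1.12 for N = 0.1 is truncated to 1.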

Combined emotions about uncertainties are emotions built upon fear and hope. They appear when an uncertainty is replaced by a belief, transforming fear and hope into satisfaction, disappointment, relief or fear confirmed and they are defined this way:

| Satisfaction\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Hope\(_{i}\)(P\(_{j}\),j) & Belief\(_{i}\)(P\(_{j}\)) |

| Disappointment\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Hope\(_{i}\)(P\(_{j}\),j) & Belief\(_{i}\)(not P\(_{j}\)) |

| Relief\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Fear\(_{i}\)(P\(_{j}\),j) & Belief\(_{i}\)(not P\(_{j}\)) |

| Fear confirmed\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Fear\(_{i}\)(P\(_{j}\),j) & Belief\(_{i}\)(P\(_{j}\)) |

Their initial intensity is computed according to Equation 10, where Em’\(_{i}\)(P) is the prior emotion of fear or hope.

| \[I[Em_{i}(P)] = V[Belief_{i}(P)] \times I[Em'_{i}(P)] \] | \[(10)\] |

In addition, according to the logical formalism (Adam 2007), these emotions trigger four inference rules: the creation of fear confirmed or relief replaces the fear emotion; the creation of satisfaction or disappointment replaces the hope emotion; the creation of satisfaction or relief leads to the creation of joy; and the creation of disappointment or fear confirmed leads to the creation of sadness.
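The transition from hope or fear to the combined emotions, together with these four inference rules, can be sketched as follows. Emotions are stored in a hypothetical dictionary keyed by (name, predicate). The text does not specify the intensity of the joy or sadness raised by the last two rules, so reusing the combined emotion’s intensity is an assumption of this sketch.

```python
# (prior emotion, does the new belief confirm P?) -> combined emotion
COMBINED = {
    ("hope", True): "satisfaction", ("hope", False): "disappointment",
    ("fear", True): "fear confirmed", ("fear", False): "relief",
}
# Satisfaction and relief raise joy; disappointment and fear confirmed raise sadness
RAISES = {"satisfaction": "joy", "relief": "joy",
          "disappointment": "sadness", "fear confirmed": "sadness"}


def resolve_uncertainty(emotions: dict, predicate: str, confirmed: bool,
                        v_belief: float) -> dict:
    """Update `emotions` when the uncertainty about `predicate` is replaced by
    a belief in P (confirmed=True) or in not P (confirmed=False)."""
    for prior in ("hope", "fear"):
        if (prior, predicate) not in emotions:
            continue
        # Replacement rule: the combined emotion replaces the prior hope or fear
        prior_intensity = emotions.pop((prior, predicate))
        combined = COMBINED[(prior, confirmed)]
        intensity = max(0.0, min(1.0, v_belief * prior_intensity))   # Eq. 10
        emotions[(combined, predicate)] = intensity
        # Joy/sadness rule; the intensity used here is an assumption
        raised = (RAISES[combined], predicate)
        emotions[raised] = max(emotions.get(raised, 0.0), intensity)
    return emotions
```

For example, a fear of fire of intensity 0.6 followed by a belief of value 0.5 that there is no fire becomes relief of intensity 0.3, which in turn raises joy.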

Emotions about other agents with a positive liking value are triggered by the emotions of agents with which the agent has a social relation whose liking value is positive. They are called "happy for" and "sorry for" and are defined as follows:

| Happy for\(_{i}\)(P,j) \(\stackrel{\mbox{def}}{=}\) L[R\(_{i,j}\)]\(>0\) & Joy\(_{j}\)(P) |

| Sorry for\(_{i}\)(P,j) \(\stackrel{\mbox{def}}{=}\) L[R\(_{i,j}\)]\(>0\) & Sadness\(_{j}\)(P) |

Their initial intensity is computed according to Equation 11 with A the agreeableness value from the OCEAN model.

| \[I[Em_{i}(P)] = I[Em_{j}(P)] \times L[R_{i,j}] \times (1-(0.5-A)) \] | \[(11)\] |

Emotions about other agents with a negative liking value are similar to the previous ones, but they are triggered by the emotions of agents with which the agent has a social relation whose liking value is negative. These emotions are resentment and gloating, defined as follows:

| Resentment\(_{i}\)(P,j) \(\stackrel{\mbox{def}}{=}\) L[R\(_{i,j}\)]\(<0\) & Joy\(_{j}\)(P) |

| Gloating\(_{i}\)(P,j) \(\stackrel{\mbox{def}}{=}\) L[R\(_{i,j}\)]\(<0\) & Sadness\(_{j}\)(P) |

Their initial intensity is computed according to Equation 12. This equation can be seen as the inverse of Equation 11: the intensity of resentment or gloating is greater when the agent has a low level of agreeableness, whereas the intensity of "happy for" and "sorry for" is greater when agreeableness is high.

| \[I[Em_{i}(P)] = I[Em_{j}(P)] \times |L[R_{i,j}]| \times (1+(0.5-A)) \] | \[(12)\] |
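A sketch of Equations 11 and 12 makes the opposite roles of agreeableness visible. The function name and its arguments are hypothetical; agent j’s emotion is passed as a name and an intensity, and the result is truncated to [0, 1].

```python
from typing import Optional, Tuple


def social_emotion(other_emotion: str, other_intensity: float,
                   liking: float, agreeableness: float) -> Optional[Tuple[str, float]]:
    """Emotion of agent i about agent j's joy or sadness (Eqs. 11 and 12).
    Returns None when liking is 0 or j's emotion is neither joy nor sadness."""
    if other_emotion not in ("joy", "sadness") or liking == 0:
        return None
    if liking > 0:
        name = "happy for" if other_emotion == "joy" else "sorry for"
        intensity = other_intensity * liking * (1 - (0.5 - agreeableness))        # Eq. 11
    else:
        name = "resentment" if other_emotion == "joy" else "gloating"
        intensity = other_intensity * abs(liking) * (1 + (0.5 - agreeableness))   # Eq. 12
    return name, max(0.0, min(1.0, intensity))
```

With the same inputs (joy of intensity 0.8, |liking| = 0.5, A = 0.9), a positive link gives "happy for" at about 0.56, while a negative link gives resentment at about 0.24.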

Emotions about ideals are related to the agent’s ideal base, which contains, at the start of the simulation, all the actions to which the agent assigns a praiseworthiness value. These ideals can be praiseworthy (positive praiseworthiness value) or blameworthy (negative praiseworthiness value). The emotions arising from these ideals are pride, shame, admiration and reproach, defined as follows:

| Pride\(_{i}\)(P\(_{i}\),i) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{i}\)) & Ideal\(_{i}\)(P\(_{i}\)) & V[Ideal\(_{i}\)(P\(_{i}\))] \(>0\) |

| Shame\(_{i}\)(P\(_{i}\),i) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{i}\)) & Ideal\(_{i}\)(P\(_{i}\)) & V[Ideal\(_{i}\)(P\(_{i}\))] \(<0\) |

| Admiration\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{j}\)) & Ideal\(_{i}\)(P\(_{j}\)) & V[Ideal\(_{i}\)(P\(_{j}\))] \(>0\) |

| Reproach\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Belief\(_{i}\)(P\(_{j}\)) & Ideal\(_{i}\)(P\(_{j}\)) & V[Ideal\(_{i}\)(P\(_{j}\))] \(<0\) |

Their initial intensity is computed according to Equation 13 with O the openness value from the OCEAN model.

| \[I[Em_{i}(P)] = V[Belief_{i}(P)] \times |V[Ideal_{i}(P)]| \times (1+(0.5-O)) \] | \[(13)\] |
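The four emotions about ideals differ only in who performed the action and in the sign of the praiseworthiness value, which a short sketch of Equation 13 makes explicit. The function is hypothetical, and treating a praiseworthiness of exactly 0 as producing no emotion is an assumption.

```python
from typing import Optional, Tuple


def ideal_emotion(actor_is_self: bool, praiseworthiness: float,
                  v_belief: float, openness: float) -> Optional[Tuple[str, float]]:
    """Pride/shame (own action) or admiration/reproach (another agent's action),
    with the initial intensity of Eq. 13 truncated to [0, 1]."""
    if praiseworthiness == 0:
        return None                      # neither praiseworthy nor blameworthy
    if actor_is_self:
        name = "pride" if praiseworthiness > 0 else "shame"
    else:
        name = "admiration" if praiseworthiness > 0 else "reproach"
    intensity = v_belief * abs(praiseworthiness) * (1 + (0.5 - openness))   # Eq. 13
    return name, max(0.0, min(1.0, intensity))
```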

Finally, combined emotions about ideals are emotions built upon pride, shame, admiration and reproach. They appear when joy or sadness occurs together with an emotion about ideals. They are gratification, remorse, gratitude and anger, defined as follows:

| Gratification\(_{i}\)(P\(_{i}\),i) \(\stackrel{\mbox{def}}{=}\) Pride\(_{i}\)(P\(_{i}\),i) & Joy\(_{i}\)(P\(_{i}\)) |

| Remorse\(_{i}\)(P\(_{i}\),i) \(\stackrel{\mbox{def}}{=}\) Shame\(_{i}\)(P\(_{i}\),i) & Sadness\(_{i}\)(P\(_{i}\)) |

| Gratitude\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Admiration\(_{i}\)(P\(_{j}\),j) & Joy\(_{i}\)(P\(_{j}\)) |

| Anger\(_{i}\)(P\(_{j}\),j) \(\stackrel{\mbox{def}}{=}\) Reproach\(_{i}\)(P\(_{j}\),j) & Sadness\(_{i}\)(P\(_{j}\)) |

Their initial intensity is computed according to Equation 14, where Em’\(_{i}\)(P) is the emotion about ideals and Em’’\(_{i}\)(P) the emotion about beliefs.

| \[I[Em_{i}(P)] = I[Em'_{i}(P)] \times I[Em''_{i}(P)] \] | \[(14)\] |
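Equation 14 combines an emotion about ideals with joy or sadness. In the hypothetical sketch below, the pairing table follows the four definitions of gratification, remorse, gratitude and anger; since both factors lie in [0, 1], their product needs no truncation.

```python
from typing import Optional, Tuple

# (emotion about ideals, emotion about beliefs) -> combined emotion about ideals
COMBINED_IDEALS = {
    ("pride", "joy"): "gratification", ("shame", "sadness"): "remorse",
    ("admiration", "joy"): "gratitude", ("reproach", "sadness"): "anger",
}


def combine_ideal(ideal_emotion: str, ideal_intensity: float,
                  belief_emotion: str, belief_intensity: float) -> Optional[Tuple[str, float]]:
    """Eq. 14: I = I[Em'] * I[Em''], or None if the pair defines no emotion."""
    name = COMBINED_IDEALS.get((ideal_emotion, belief_emotion))
    if name is None:
        return None
    return name, ideal_intensity * belief_intensity
```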

To keep the initial intensity of each emotion between 0 and 1, the result of each equation is truncated to this interval when necessary.

The initial decay value of each of these twenty emotions is computed with the same equation, Equation 15, where \(\Delta t\) is a time step used to ensure that an emotion does not last longer than a given time.

| \[De[Em_{i}(P)] = N \times I[Em_{i}(P)] \times \Delta t \] | \[(15)\] |
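Equation 15 has a useful consequence when the decay is subtracted once per simulation step: the emotion disappears after about \(I / De = 1/(N \Delta t)\) steps, whatever its initial intensity. For example, with N = 0.5 and \(\Delta t = 0.1\), any emotion lasts 20 steps, and with N = 0 it never fades. The sketch below checks this; the per-step subtraction follows the degradation of emotions in BEN’s temporal module, while the function names and the tolerance `eps` that absorbs floating-point residue are assumptions.

```python
from typing import Optional


def initial_decay(neuroticism: float, intensity: float, dt: float) -> float:
    """Eq. 15: De = N * I * dt."""
    return neuroticism * intensity * dt


def steps_until_removed(intensity: float, decay: float,
                        eps: float = 1e-9) -> Optional[int]:
    """Count the steps needed for the intensity to reach zero when the decay
    is subtracted at every step; eps absorbs floating-point residue."""
    if decay <= 0:
        return None                      # e.g. N = 0: the emotion never fades
    steps = 0
    while intensity > eps:
        intensity = max(0.0, intensity - decay)
        steps += 1
    return steps
```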

Updating social relations

When an already-known agent is perceived (i.e., there is already a social link with it), BEN automatically updates the social relation with this agent. This update is based on the work of Ochs et al. (2009) and takes the agent’s cognitive mental states and emotions into account. This section describes in detail how the architecture automatically updates each variable of a social link R\(_{i,j}\)(L,D,S,F,T); the trust variable, however, is not updated automatically.

According to Ortony (1991), the degree of liking between two agents depends on the valence (positive or negative) of the emotions induced by the corresponding agent. In the emotional model of the architecture, joy and hope are considered positive emotions (satisfaction and relief automatically raise joy through the emotional engine), while sadness and fear are considered negative emotions (fear confirmed and disappointment automatically raise sadness through the emotional engine). Thus, if an agent \(i\) has a positive (resp. negative) emotion caused by an agent \(j\), the liking value of the social link from \(i\) to \(j\) increases (resp. decreases).

Moreover, research has shown that the degree of liking is influenced by the solidarity value (Smith et al. 2014), which may be explained by the fact that people tend to like those who are similar to them.

The computation formula is given by Equation 16, where mPos is the mean value of all positive emotions caused by agent \(j\), mNeg the mean value of all negative emotions caused by agent \(j\), and \(\alpha _{L}\) a coefficient depending on the agent’s personality that indicates the importance of emotions in the process, as described by Equation 17.

| \[\begin{gathered} L[R_{i,j}]=L[R_{i,j}]+|L[R_{i,j}]|(1-|L[R_{i,j}]|)S[R_{i,j}] +\alpha _L (1-|L[R_{i,j}]|)(mPos-mNeg) \end{gathered}\] | \[(16)\] |

| \[\alpha _L = 1-N \] | \[(17)\] |
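Equations 16 and 17 translate directly into code. The sketch is hypothetical and adds a final clamp to [-1, 1] as a safeguard: without it, an extreme input such as L = 0.5, S = 1, N = 0, mPos = 1 and mNeg = 0 would give 0.5 + 0.25 + 0.5 = 1.25.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    """Keep x within [lo, hi]."""
    return max(lo, min(hi, x))


def update_liking(L: float, S: float, m_pos: float, m_neg: float,
                  neuroticism: float) -> float:
    """Eq. 16 with alpha_L from Eq. 17, then clamped to [-1, 1] as a safeguard."""
    alpha_l = 1 - neuroticism                                   # Eq. 17
    new_l = (L + abs(L) * (1 - abs(L)) * S
             + alpha_l * (1 - abs(L)) * (m_pos - m_neg))         # Eq. 16
    return clamp(new_l, -1.0, 1.0)
```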

Keltner & Haidt (2001) and Shiota et al. (2004) explain that an emotion of fear or sadness caused by another agent represents an inferior status. Symmetrically, Knutson (1996) explains that perceiving fear and sadness in others increases the sensation of power over those persons. The dominance value therefore depends on the negative emotions that each agent causes in the other.

The computation formula is described by Equation 18 with mSE the mean value of all negative emotions caused by agent \(i\) to agent \(j\), mOE the mean value of all negative emotions caused by agent \(j\) to agent \(i\) and \(\alpha _{D}\) a coefficient depending on the agent’s personality, indicating the importance of emotions in the process, and which is described by Equation 19.

| \[D[R_{i,j}]=D[R_{i,j}]+\alpha _D (1-|D[R_{i,j}]|)(mSE-mOE) \] | \[(18)\] |

| \[\alpha _D = 1-N \] | \[(19)\] |
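A sketch of Equations 18 and 19 follows. No clamp is needed here as long as mSE and mOE lie in [0, 1]: for \(D \geq 0\), \(D + (1-D)x\) with \(x \in [-1, 1]\) stays in \([2D-1, 1]\), and the negative case is symmetric. The function name is hypothetical.

```python
def update_dominance(D: float, m_se: float, m_oe: float, neuroticism: float) -> float:
    """Eq. 18 with alpha_D from Eq. 19.

    m_se: mean negative emotion caused by agent i to agent j.
    m_oe: mean negative emotion caused by agent j to agent i.
    """
    alpha_d = 1 - neuroticism                                   # Eq. 19
    return D + alpha_d * (1 - abs(D)) * (m_se - m_oe)           # Eq. 18
```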

As explained in the formalism exposed in Section 3.5, the solidarity represents the degree of similarity of desires, beliefs and uncertainties between two agents. In BEN, the evolution of the solidarity value depends on the ratio of similarity between the desires, beliefs and uncertainties of agent \(i\) and those of agent \(j\). To compute the similarities and oppositions between agent \(i\) and agent \(j\), agent \(i\) needs to have beliefs about agent \(j\)’s cognitive mental states. Then it compares these cognitive mental states with its own to detect similar or opposite knowledge.

In addition, according to Rivera & Grinkis (1986), negative emotions tend to decrease the solidarity between two people. The computation formula is given by Equation 20, where sim is the number of similar cognitive mental states between agent \(i\) and agent \(j\), opp the number of opposite cognitive mental states between them, NbKnow the number of cognitive mental states in common between agent \(i\) and agent \(j\), and mNeg the mean value of all negative emotions caused by agent \(j\). The coefficient \(\alpha _{S1}\), which depends on the agent’s personality, indicates the importance of similarities and oppositions in the process and is described by Equation 21; the coefficient \(\alpha _{S2}\), which also depends on the agent’s personality, indicates the importance of emotions and is described by Equation 22.

| \[S[R_{i,j}]=S[R_{i,j}]+ S[R_{i,j}] \times (1-S[R_{i,j}]) \times \left(\alpha _{S1} \frac{sim-opp}{NbKnow} -\alpha _{S2}\, mNeg\right) \] | \[(20)\] |

| \[\alpha _{S1} = 1-O \] | \[(21)\] |

| \[\alpha _{S2} = 1-N \] | \[(22)\] |
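Equations 20 to 22 can be sketched as below. The guard for NbKnow = 0 and the final clamp to [0, 1] are assumptions of this hypothetical sketch: with S = 0.25, an extreme input (all shared states opposite, mNeg = 1, O = N = 0) would otherwise give 0.25 - 0.375 = -0.125. Because the update is multiplied by S, a solidarity of exactly 0 is left unchanged.

```python
def update_solidarity(S: float, sim: int, opp: int, nb_know: int, m_neg: float,
                      openness: float, neuroticism: float) -> float:
    """Eq. 20 with alpha_S1 (Eq. 21) and alpha_S2 (Eq. 22), clamped to [0, 1]."""
    alpha_s1 = 1 - openness                                     # Eq. 21
    alpha_s2 = 1 - neuroticism                                  # Eq. 22
    # Guard against an empty common knowledge base (assumption of this sketch)
    similarity = (sim - opp) / nb_know if nb_know > 0 else 0.0
    new_s = S + S * (1 - S) * (alpha_s1 * similarity - alpha_s2 * m_neg)   # Eq. 20
    return max(0.0, min(1.0, new_s))
```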

In psychology, emotions and cognition do not seem to affect familiarity. However, Collins & Miller (1994) explain that people tend to be more familiar with people they like. This notion is modeled by grounding the evolution of the familiarity value in the liking value between two agents. The computation formula for the evolution of the familiarity value is defined by Equation 23.

| \[F[R_{i,j}]=F[R_{i,j}](1+L[R_{i,j}]) \] | \[(23)\] |
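Equation 23 reduces to a one-line update. In this hypothetical sketch, the clamp to [0, 1] is an assumption added as a safeguard: F = 0.8 with L = 0.5 would otherwise give 1.2.

```python
def update_familiarity(F: float, L: float) -> float:
    """Eq. 23: F = F * (1 + L), clamped to [0, 1] as a safeguard."""
    return max(0.0, min(1.0, F * (1 + L)))
```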

All the equations have been designed so that the values remain between -1 and 1 for liking and dominance, and between 0 and 1 for solidarity and familiarity, in accordance with the formalism presented in Section 3.5.

The trust value does not evolve automatically in BEN, as there is no clear, automatic link between trust and cognition or emotions. However, this value can be modified manually, in particular through sanctions and rewards for social norms: the modeler can specify a modification of the trust value during the enforcement process described in Section 4.2.

Making decisions

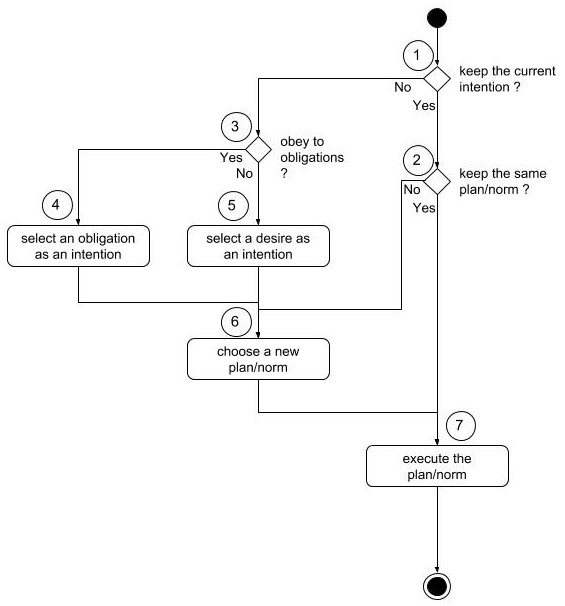

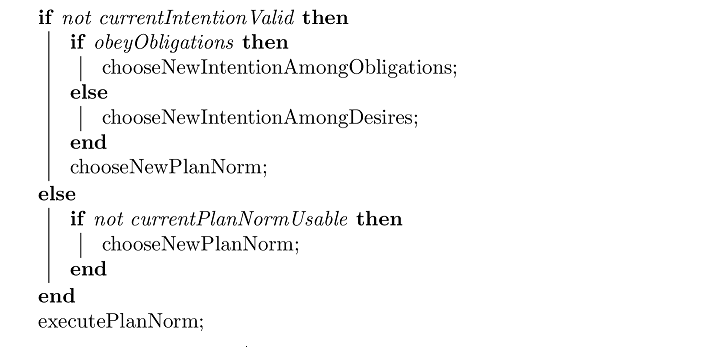

The third part of the architecture, number 3 in Figure 1, is the only mandatory one, as it is where the agent makes a decision. A cognitive engine can be coupled with a normative engine to choose an intention and a plan to execute. The complete engine is summarized in Figure 2 and described by Algorithm 2.

This decision making process may be divided into seven steps:

- Step 1: the engine checks the current intention. If it is still valid, the intention is kept so the agent may continue to carry out its current plan.

- Step 2: the engine checks if the current plan/norm is still usable or not, depending on its context.

- Step 3: the engine checks whether the agent obeys an obligation, taken from the obligations that correspond to a norm whose context is valid in the current situation and whose threshold is lower than the agent’s obedience value, as computed in Section 4.1.

- Step 4: if so, the obligation with the highest priority is taken as the current intention.

- Step 5: otherwise, the desire with the highest priority is taken as the current intention.

- Step 6: the plan or norm with the highest priority is selected as the current plan/norm, among the plans or norms corresponding to the current intention with a valid context.

- Step 7: the behavior associated with the current plan/norm is executed.

Steps 4, 5 and 6 do not have to be deterministic; they may be probabilistic, in which case the priority value associated with obligations, desires, plans and norms serves as a probability.
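The seven steps can be sketched as a single decision function. This is a simplified, hypothetical Python illustration, not Algorithm 2 itself: the validity of the current intention is delegated to a caller-supplied `still_valid` predicate, plans and norms share one `Plan` type, and when `probabilistic=True` the priorities are used as sampling weights with `random.choices`.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Intention:                    # a desire or an obligation
    name: str
    priority: float
    threshold: float = 0.0          # obedience threshold (obligations only)


@dataclass
class Plan:                         # an action plan or a norm
    name: str
    intention: str                  # name of the intention it answers
    priority: float
    context: Callable[[], bool] = lambda: True
    behavior: Callable[[], None] = lambda: None


def pick(items, priority, probabilistic, rng):
    """Highest priority, or priority used as a sampling weight (Steps 4 to 6)."""
    if not items:
        return None
    weights = [priority(x) for x in items]
    if probabilistic and sum(weights) > 0:
        return rng.choices(items, weights=weights, k=1)[0]
    return max(items, key=priority)


@dataclass
class Agent:
    obedience: float
    desires: List[Intention] = field(default_factory=list)
    obligations: List[Intention] = field(default_factory=list)
    plans: List[Plan] = field(default_factory=list)
    intention: Optional[Intention] = None
    current_plan: Optional[Plan] = None

    def step(self, still_valid=lambda i: True, probabilistic=False, rng=random):
        # Step 1: keep the current intention only while it is still valid
        if self.intention is not None and not still_valid(self.intention):
            self.intention, self.current_plan = None, None
        # Step 2: drop the current plan/norm if its context no longer holds
        if self.current_plan is not None and not self.current_plan.context():
            self.current_plan = None
        if self.intention is None:
            # Step 3: obligations obeyed (threshold below obedience) and
            # answered by some plan/norm whose context is valid
            usable = {p.intention for p in self.plans if p.context()}
            obeyed = [o for o in self.obligations
                      if o.threshold < self.obedience and o.name in usable]
            # Step 4 (obligation), otherwise Step 5 (desire)
            self.intention = (pick(obeyed, lambda o: o.priority, probabilistic, rng)
                              or pick(self.desires, lambda d: d.priority, probabilistic, rng))
            self.current_plan = None
        # Step 6: plan/norm answering the intention, with a valid context
        if self.intention is not None and self.current_plan is None:
            candidates = [p for p in self.plans
                          if p.intention == self.intention.name and p.context()]
            self.current_plan = pick(candidates, lambda p: p.priority, probabilistic, rng)
        # Step 7: execute the behavior of the current plan/norm
        if self.current_plan is not None:
            self.current_plan.behavior()
```

With an obedience of 0.8 and an obligation threshold of 0.5, an agent in the smoke adopts the obligation to follow exit signs; with an obedience of 0.3 it falls back on its desire to flee and waits until a plan for that desire has a valid context.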

Creating a temporal dynamic

The final part of the architecture, number 4 in Figure 1, gives the agent’s behavior a temporal dynamic, which is useful in a simulation context. To do so, this module automatically degrades mental states and emotions and updates the status of each norm.

The degradation of mental states consists in reducing their lifetime; when the lifetime reaches zero, the mental state is removed from its base. The degradation of emotions consists in reducing the intensity of each stored emotion by its decay value; when the intensity of an emotion reaches zero, the emotion is removed from the emotional base.

Finally, the status of each norm is updated to indicate whether the norm was activated (whether its context held) and whether it was violated (the norm was activated but the agent disobeyed it). A norm can also remain violated for a certain time, which is updated at each step; when this time reaches zero, the norm is no longer considered violated.
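The temporal module can be sketched as one update applied at each simulation step. The dictionaries and the one-unit decrement of lifetimes and violation durations are assumptions of this hypothetical sketch; emotions lose their decay value at each step, and `eps` absorbs floating-point residue.

```python
from typing import Dict, Tuple


def degrade(lifetimes: Dict[str, int],
            emotions: Dict[str, Tuple[float, float]],
            violation_timers: Dict[str, int],
            eps: float = 1e-9) -> None:
    """One step of degradation, updating the three dictionaries in place.

    lifetimes: mental state -> remaining lifetime
    emotions: emotion -> (intensity, decay)
    violation_timers: violated norm -> remaining violation time
    """
    # Mental states: shorten the lifetime, remove the state when it is null
    for state in list(lifetimes):
        lifetimes[state] -= 1
        if lifetimes[state] <= 0:
            del lifetimes[state]
    # Emotions: subtract the decay, remove the emotion when its intensity is null
    for name in list(emotions):
        intensity, decay = emotions[name]
        intensity = max(0.0, intensity - decay)
        if intensity <= eps:
            del emotions[name]
        else:
            emotions[name] = (intensity, decay)
    # Norms: shorten the violation time; at zero the norm is no longer violated
    for norm in list(violation_timers):
        violation_timers[norm] -= 1
        if violation_timers[norm] <= 0:
            del violation_timers[norm]
```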

These last steps enable the agent’s behavioral components to evolve automatically over time, leading agents to forget a piece of knowledge after a certain amount of time and creating dynamics in their behavior.

Discussion

The contribution presented in this paper is articulated around three points: a global architecture, the aggregation of known social and affective theories, and an implementation of the global architecture into a behavior model to use BEN on a real case study.

A general behavioral architecture

The BEN architecture shown in Figure 1 is a global and general behavioral architecture for the development of agents simulating human actors. BEN connects multiple affective and social features in the agent’s behavior, making it possible for these features to interact with each other. Moreover, the architecture can easily be adapted to a specific context or refined to integrate new elements without modifying the overall structure.

The main advantage of BEN is its modularity: even though all the processes are explained in detail in this paper, they are not all mandatory. A modeler may unplug any optional part of the architecture without preventing the rest from working properly. For example, if a modeler considers that norms and obligations are irrelevant to the case being studied, the processes linked to norms and obligations can be unplugged without preventing the architecture from functioning.

This modularity means that all the social features are related but not dependent on each other. For example, emotions can be used in the context definition of plans or norms, linking these notions together and thus creating a richer behavior.

Assumptions about social and affective theories

In order to implement the general architecture into a behavioral model, we had to choose particular theories to support the various processes. As our objective is to build an architecture usable by social scientists and experts of various fields, and not only by computer researchers, we integrated theories already used by the social simulation community (Bourgais et al. 2018).

In detail, the cognitive decision-making engine is based on BDI (Bratman 1987), the representation and automatic creation of emotions is based on the OCC model (Ortony et al. 1988) and its formalization with BDI (Adam 2007), the emotional contagion process is based on the ASCRIBE model (Bosse et al. 2009), and the representation and manipulation of social relations is based on the work of Svennevig (2000). Finally, the personality of the agent, used to parametrize the overall architecture, is based on the OCEAN model (McCrae & John 1992), which is widely accepted in the community (Eysenck 1991).

As experts are familiar with these social and affective theories, we believe it is easier for them to use BEN to define an agent simulating a human actor, since they already manipulate the concepts used by the architecture.

Implementation of a behavioral model

To use BEN on a real life scenario, we have to instantiate the theories used with computation formulas where they were missing. To do so, we mostly rely on existing works (Adam 2007; Ochs et al. 2009; Lhommet et al. 2011), adapting them when needed, which is discussed in detail in this section.

The various equations proposed for computing the parameters, the intensity and decay of emotions, and the evolution of social relations were all designed to involve as few OCEAN dimensions as possible, in the simplest way, to respect the principle of parsimony. Different people have different values for these parameters, which is explained by the variety of personalities observed in a population. Another objective was to reduce the number of parameters the modeler has to enter, to ease the parametrization of a model using BEN.

Most of the relations with OCEAN dimensions are linear, for simplicity. However, linear relations were not satisfactory for parameters such as obedience, as people tend to have a high obedience value even with an average personality. This is why the proposed equation uses square roots.

The equations computing the initial intensities of emotions presented in Section 4.31 could be written in a simpler form; they were presented this way to highlight their construction. Each is composed of a term directly related to the cognitive mental states involved, weighted by a personality dimension balanced around its neutral value of 0.5, as explained in Section 3.2. Also, for each emotion, only one personality dimension was retained, the one most closely related to the meaning of the emotion, again following the parsimony principle. For example, the agreeableness dimension is used for emotions related to the emotions of others (happy for, sorry for, resentment and gloating).

The equations for the evolution of the social relation dimensions were developed to respect the underlying psychological notions, while keeping the values within their limits (-1 and 1 for liking and dominance, 0 and 1 for solidarity and familiarity). Personality dimensions were again included to reproduce the fact that this evolution differs between people with different personalities. The neuroticism dimension is used for the part related to emotions, while the openness dimension is used for the part related to the similarities and differences between the mental states of two agents (an open-minded person gives less importance to these than a narrow-minded one).

The implementation we made of the chosen theories may be discussed or changed by any expert user. For example, the emotional engine can be replaced with another one based on another theory, the same for the update of social relations or the cognitive engine. However, these modifications would not affect the overall structure of the general architecture.

The Kiss Nightclub Case

The BEN architecture has been implemented and integrated in the GAMA modeling and simulation platform (Taillandier et al. 2018). This platform, which has enjoyed growing success over the last few years, aims to support the development of complex models while giving non-computer scientists easy access to high-level, otherwise complex, operations.

In this section, one of the case studies using the GAMA implementation of BEN to model human behavior is presented. The case study concerns the evacuation of a nightclub in Brazil, the Kiss Nightclub. The main goal of this example is to show the richness of behavior possible with BEN, while keeping high-level explanations. The complete model is available on OpenABM (https://www.comses.net/codebases/7ca5fbb1-9e3a-4ea1-a63f-87d2ba9f39d6/releases/1.1.0/).

Presentation of the case

On January 27, 2013, a fire started inside the Kiss Nightclub in Santa Maria, Rio Grande do Sul, Brazil, causing the death of 242 people. Many factors contributed to this tragedy: between 1200 and 1400 people were in the nightclub while its maximum capacity was 641, there was only one exit door, there was no alarm, and the exit signs were broken, pointing to the restrooms instead of the exit. The vast majority of the victims were found in the restrooms, killed by the smoke (Atiyeh 2013).

This case has been studied before (Silva et al. 2017) with a simple model of agent behavior. The authors aimed to show that respecting safety measures could have helped reduce the number of casualties. In this paper, the goal is to show how this case can be modeled with the BEN architecture and how doing so can help obtain results closer to the real events. BEN also makes it possible to incorporate more complex behaviors, closer to human reactions in this situation, thanks to its affective and social features. Finally, the goal is to show that BEN runs on a simulation platform with an acceptable computation time.

In order to be as close as possible to the real case, the club’s blueprint at the time of the tragedy was reproduced. The simulation environment is shown in Figure 3, where walls and fences high enough to block people are in black and the exit door is in blue. The yellow disc in the top-left corner is the ignition point, placed approximately where the real fire started (on the stage where a local band was playing), and the white triangles represent people placed randomly.

In this case, the focus is on the propagation of smoke, as it caused more than 95 percent of the casualties. The spread of smoke is based on a study by the French government modeling hazards due to fires (Chivas & Cescon 2005). The main idea is that smoke spreads from its initial point at a constant pace, filling the entire nightclub within 4 to 5 minutes. The floor of the club is divided into square cells, each with a percentage representing how much smoke is in the volume above it. The visual result of the spread of smoke, two minutes after the start of the fire, is shown in Figure 4.

According to the same report (Chivas & Cescon 2005), it takes about 50 seconds to faint from this kind of smoke. The simulation was configured to respect this delay, so agents are not killed immediately when reached by the smoke.

Creating agents’ behavior using BEN

Evacuating a nightclub on fire is not a common situation. It involves not only cognition, to evaluate the context and make a decision, but also emotions, as people react according to their fear of the fire. Given that a nightclub holds many people who act together, emotional contagion and social relations have to be taken into account. Finally, the evacuation plan imposed by the authorities can be modeled through laws, obligations and norms.

The main focus of this example is the behavior of people evacuating the nightclub, as the objective is to mimic the real situation. However, there are not enough testimonies from people who lived through this tragedy to describe their behavior during the evacuation, so their actions are hypothetical: the agents’ behavior is based on hypotheses about how real people behaved in this situation.

At the beginning of the simulation, each agent’s initial knowledge is of three kinds: beliefs about the world, initial desires and social relations with friends. Each agent also has a personality. Table 1 shows how some of this initial knowledge may be formalized with BEN.

| Statement | Formalization | Description |
|---|---|---|
| A belief about the exact position of the exit door | \(Belief_{i}(exitDoor,lifetime1)\) | Each agent has a belief about the precise location of the exit door, with a lifetime value of \(lifetime1\). |
| A desire that there is no fire | \(Desire_{i}(\text{not } Fire,1.0)\) | Each agent wishes there were no fire in the nightclub, with a priority of \(1.0\). This desire cannot lead to an action (no action plan is defined to answer it). |
| A relation of friendship with another agent | \(R_{i,j}(L,D,S,F,T)\) | Each agent \(i\) is likely to have a social relation with an agent \(j\) representing its friend. |

The first step of BEN is the perception of the environment which means defining what an agent perceives and how it affects its knowledge. Here are examples of the agent’s perceptions in the studied case:

| Perception | Action |
|---|---|
| exit door | updates the beliefs related to it |
| fire | adds the belief that there is a fire |
| smoke | adds a belief about the level of smoke perceived |
| other human agent | creates social relations with it and executes an emotional contagion about the fear of a fire |

Once the agent is up to date with its environment, its overall knowledge has to adapt to what was perceived. This is done with the definition of inference rules and laws:

| Law/Rule | Activation | Action |
|---|---|---|
| law | the level of smoke is maximum | follow the exit signs |
| rule | belief there is fire | adds the desire to flee |
| rule | belief there is smoke | adds an uncertainty there is a fire |
| rule | has a fear emotion about the fire with an intensity greater than a given threshold | adds the desire to flee |

After the inference rules and laws are executed, each agent creates emotions through the emotional engine. In this case, the presence of an uncertainty about the fire (added by the inference rule concerning the belief about smoke), together with the initial desire that there is no fire, produces an emotion of fear whose intensity depends on the quantity of smoke perceived.

Once the agent acquires the desire to flee (because it perceived the fire or because its fear of a fire was intense enough), it follows action plans and norms. Table 4 shows the definition of some of the action plans and norms the agent uses to fulfill its intention to flee, depending on the perceived context.

| Conditions | Actions | Comments |
|---|---|---|
| The agent has good visibility and a belief about the exact location of the exit door | The agent runs to the exit door | In this plan, the agent runs to the exit door following the shortest path. |
| The agent has good visibility and no belief about the location of the door | The agent follows the agent in its field of view with the highest trust value among its social relations | This norm relies on the trust value of social relations created during the simulation. |
| The agent has bad visibility and the obligation to follow signs | The agent goes to the restrooms | In this norm, the agent complies with the law requiring it to follow exit signs. |
| The agent has bad visibility and a belief that exit signs are wrong | The agent moves randomly | In this plan, the agent moves randomly in the smoke. |

The social relation defined with a friend may also be used to define plans to help one’s friend if it is lost in smoke. This plan consists in finding the friend and telling it the location of the exit door.

The variety of agent personalities produces heterogeneous behaviors, as the computed intensities of the fear emotion differ. As a result, two agents placed in the same situation will not decide to flee at the same time: some leave early while others wait a little longer.

By multiplying perceptions, inference rules and plans or norms, it is possible to create a wide variety of behaviors, from agents fleeing when seeing the fire to agents lost in the smoke or going back into the smoke to help their friends. BEN also enables agents to react to changes in their environment rather than persisting in a behavior that no longer makes sense in the current context.

Results and discussion

At the start of the simulation, agents are placed randomly inside the nightclub, since no information is available about the precise location of each occupant. However, as the place was overcrowded, random locations seem to be an acceptable approximation. It is also assumed that people went to the club with friends. The precise number of people inside the club at the moment of the tragedy is not known, but reports indicate there were between 1200 and 1400 people (Atiyeh 2013). Three cases were therefore simulated, with 1200, 1300 and 1400 people initially present.

For each case, 10 simulations were run, with new random initial locations for the agents in each run. The simulations were run on an Intel Core i7 with 16 GB of RAM. Figure 5 shows a visual result of the simulation after a minute and a half of simulated time. The colors of the triangles represent the behaviors adopted by the agents: white agents are not aware of the danger, green agents go to the exit, yellow agents head in the direction of the exit, blue agents comply with the law, red agents are lost in the smoke, brown agents follow another agent and purple agents go through the smoke because they remember the location of the exit. The complete model, along with a video of the simulation, is available at https://github.com/mathieuBourgais/ExempleThese.

The statistical outputs of the simulations are shown in Table 5. For each case, the mean number of agents killed by the smoke and its standard deviation were computed. The OCEAN parameters of each agent were initialized randomly from a Gaussian distribution centered on 0.5, the mean value of each personality dimension. The perception of each agent is based on real values for the field of view and the vision amplitude. The only parameters tuned in the model are two smoke thresholds: the quantity of smoke that triggers the evacuation, and the quantity of smoke that reduces the field of view and forces the agent to obey the law.
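One possible way to perform this initialization is sketched below for 1200 agents. The text only states that the traits follow a Gaussian distribution centered on 0.5; the standard deviation (0.15 here), the clipping to [0, 1] and the function name `sample_personality` are assumptions.

```python
import random
from statistics import mean

OCEAN = ("openness", "conscientiousness", "extraversion", "agreeableness", "neuroticism")


def sample_personality(rng: random.Random, centre: float = 0.5, sd: float = 0.15) -> dict:
    """Draw each OCEAN trait from a Gaussian centered on `centre`, clipped to [0, 1].

    Only the 0.5 center comes from the text; sd=0.15 and the clipping are assumptions.
    """
    return {trait: min(max(rng.gauss(centre, sd), 0.0), 1.0) for trait in OCEAN}


rng = random.Random(42)  # fixed seed so that a run can be reproduced
population = [sample_personality(rng) for _ in range(1200)]
print(round(mean(p["neuroticism"] for p in population), 3))
```

Clipping keeps every trait in the [0, 1] range used by the personality dimensions; because the distribution is symmetric around 0.5, it leaves the population mean essentially unchanged.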

Table 5 shows that the simulation results are statistically close to the real case, in which there were 242 victims: for each initial population, the real toll lies within one standard deviation of the simulated mean. However, the main result concerns the credibility of the simulation, which may be observed in Figure 5 or in the video of the simulation available in the repository mentioned above. Agents show various behaviors in almost identical situations, which is explained by their different personalities, just as in real life two persons in the same situation will not necessarily make the same decision. It is also possible to observe behavior patterns: some people leave early because they perceived the fire while others are still dancing at the beginning of the simulation; the first people evacuating are joined, through emotional contagion, by those located between them and the exit; people remaining in the nightclub later are trapped in the smoke and have to follow the signs which, in this particular case, point to the toilets, leading to the vast majority of deaths in the simulations; people getting out of the smoke and perceiving the exit change their action and flee the nightclub. BEN makes it possible to explain and express these behaviors with high-level concepts such as personality, which leads to emotions of different intensities, different thresholds of obedience, and so on.

| Initial number of people | 1200 | 1300 | 1400 |
|---|---|---|---|
| Mean number of deaths | 230.2 | 237.7 | 249.4 |
| Standard deviation | 20.1 | 15.6 | 32.6 |
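The closeness to the real case can be checked with simple arithmetic on Table 5, by expressing the gap between the 242 real victims and each simulated mean in units of the run-to-run standard deviation. This is an illustrative check chosen here, not a statistical test reported by the authors; `z_scores` is a hypothetical helper.

```python
REAL_VICTIMS = 242  # reported number of victims of the Kiss nightclub fire

# Table 5: initial number of people -> (mean deaths, standard deviation) over 10 runs.
TABLE_5 = {1200: (230.2, 20.1), 1300: (237.7, 15.6), 1400: (249.4, 32.6)}


def z_scores(table: dict, observed: float) -> dict:
    """Distance of the observed toll from each simulated mean, in standard deviations."""
    return {n: (observed - m) / sd for n, (m, sd) in table.items()}


for n, z in z_scores(TABLE_5, REAL_VICTIMS).items():
    mean_deaths = TABLE_5[n][0]
    print(f"{n} people: mean error {mean_deaths - REAL_VICTIMS:+.1f}, z = {z:+.2f}")
```

In all three cases |z| stays below 1. With only 10 runs per case, this compares the real toll with the spread of individual runs rather than with the precision of the mean, whose standard error is the standard deviation divided by √10.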

Table 6 shows the results obtained with extreme values of the personality traits (all traits at 0.0 or all traits at 1.0) for 1200 initial agents. Personality has a strong impact on the results: with all traits at 0.0, most people flee at the beginning because of massive emotional contagion, and very few die; with all traits at 1.0, most people flee late because of low levels of emotion and emotional contagion, which approximates running the model with the emotion and emotional contagion modules switched off.

| Personality (all traits set to) | 0.0 | 1.0 |
|---|---|---|
| Mean number of deaths | 3.4 | 362.8 |
| Standard deviation | 1.43 | 20.16 |

Table 7 gives the mean computation time per simulation step, in ms. For 1200 people initially in the club, it takes approximately 860 ms to compute the behavior of all the agents still in the simulation at each step, each step representing 1 second of simulated time. This result shows that BEN enables the simulation of more than a thousand agents with almost all of its features enabled, within a reasonable computation time (on average, slightly faster than real time).

| Initial number of people | 1200 | 1300 | 1400 |
|---|---|---|---|
| Mean time per step (ms) | 861.7 | 841.4 | 884.9 |
| Standard deviation (ms) | 78.4 | 57.7 | 101.2 |
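Since each step represents 1 s of simulated time, Table 7 translates directly into a real-time factor, i.e. the wall-clock time spent per simulated second. The sketch below is only this arithmetic; `real_time_factor` is a hypothetical helper.

```python
SIMULATED_MS_PER_STEP = 1000.0  # one step represents 1 s of simulated time

# Table 7: initial number of people -> mean wall-clock time per step (ms).
TABLE_7 = {1200: 861.7, 1300: 841.4, 1400: 884.9}


def real_time_factor(ms_per_step: float) -> float:
    """Wall-clock seconds spent per simulated second (< 1 means faster than real time)."""
    return ms_per_step / SIMULATED_MS_PER_STEP


for n, ms in TABLE_7.items():
    print(f"{n} people: {real_time_factor(ms):.2f} s of computation per simulated second")
```

The factor is below 1 for all three populations, meaning that on average a simulated second takes less than a second to compute on the machine described above.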

As explained previously, the agents' behavior developed in this example is based on hypotheses, but only obvious hypotheses were retained. Moreover, the BEN architecture makes it possible to translate these obvious hypotheses directly into behaviors while keeping their high-level descriptions. These high-level concepts are then supported by low-level plans describing simple actions, which preserves a high-level explanation of each agent's behavior.

The evacuation of the Kiss nightclub has previously been studied by other researchers with an agent-based simulation (Silva et al. 2017) using a simpler behavior model: agents are either happy and dance, or are scared by the start of the fire and flee. However, their results do not statistically reproduce the real case, with more than 400 deaths in the simulation against 242 real casualties. Our approach appears to perform better on this particular case, at least in producing results closer to the real case.

Other approaches already exist for simulating crowd evacuations with agent-based models, describing behavior with social forces (Pelechano et al. 2007) or social contagion (Bosse et al. 2013), with promising results. There are also ways to model emergency evacuations without agent-based simulation (Bakar et al. 2017). However, as BEN is grounded in folk psychology concepts (Norling 2009), we believe it may define more credible and more explainable behaviors than the cited approaches.

Another strength of BEN is its capability to define a large variety of simulated behaviors. Although we are not experts in emergency evacuation, we were able to create variations so that the fleeing behavior seems more believable. This point is supported by previous work in which BEN was compared to a finite state machine (Adam et al. 2017) and in which its ease of use was tested with researchers without programming skills (P. Taillandier et al. 2017).

The main contribution of BEN is the explainability it brings to social simulations in general. The behavior of each agent is expressed in psychological terms instead of mathematical equations, which makes it easier to understand and explain from the modeler's point of view (Broekens et al. 2010; Kaptein et al. 2017). Moreover, defining a credible behavior is made easier by the modularity of the architecture, as only the processes useful for the studied case have to be implemented.

Conclusion

This paper presents BEN, an agent architecture featuring cognition, emotions, emotional contagion, personality, social relations and norms for social simulations. All these features act together to reproduce the human decision-making process. This architecture was built to be as modular as possible in order to let modelers easily adapt it to their case studies.

This architecture relies on a formalization of all its features, developed according to psychological theories with the aim of standardizing the representation of the concepts as much as possible. BEN was then implemented in the GAMA platform to ensure its usability. An example concerning the evacuation of a nightclub showed that, even with a simple model, BEN makes it possible to produce a great variety of behaviors while remaining credible. This example also showed that using BEN only requires one to translate high-level hypotheses into behaviors expressed with the same high-level concepts.

BEN also represents a solid base that may be extended in the future. Other social features, such as culture or experience, may be integrated into the architecture. Ultimately, one can imagine a future architecture based on BEN, offering multiple theories and social features and asking users to select their own combinations, which could be used to test psychological hypotheses.

Finally, as BEN is a modular architecture, it has already been used in various projects where only some parts of the architecture were put into use. The cognitive engine has helped study land-use change in the Mekong Delta (Truong et al. 2015); cognition and emotions are used by the SWIFT project, which studies evacuation during bushfires in Australia (Adam et al. 2017); cognition and social relations are used by the Li-BIM project (Taillandier et al. 2017), which addresses household energy consumption; and BEN has been used more completely for the evacuation of a nightclub on fire in the USA (Valette & Gaudou 2018). These examples show that BEN has already been used partially in various contexts, demonstrating its modularity and its independence from the case studied.

References

ADAM, C. (2007). Emotions: From psychological theories to logical formalization and implementation in a BDI agent [PhD thesis]. INPT Toulouse.

ADAM, C., & Gaudou, B. (2016). BDI agents in social simulations: A survey. The Knowledge Engineering Review, 31(3), 207-238. [doi:10.1017/s0269888916000096]

ADAM, C., Taillandier, P., Dugdale, J., & Gaudou, B. (2017). BDI vs FSM agents in social simulations for raising awareness in disasters: A case study in Melbourne bushfires. International Journal of Information Systems for Crisis Response and Management (IJISCRAM), 9(1), 27–44. [doi:10.4018/ijiscram.2017010103]

ANDRIGHETTO, G., Campennì, M., Conte, R., & Paolucci, M. (2007). On the immergence of norms: A normative agent architecture. Proceedings of AAAI Symposium, Social and Organizational Aspects of Intelligence, 19.

ATIYEH, B. (2013). Brazilian Kiss nightclub disaster. Annals of Burns and Fire Disasters, 26(1), 3.

AXELROD, R. (1997). 'Advancing the art of simulation in the social sciences.' In Conte, R., Hegselmann, R. & Terna, P. (Eds.), Simulating Social Phenomena. Berlin/Heidelberg: Springer, pp. 21–40.

BAKAR, N. A. A., Majid, M. A., & Ismail, K. A. (2017). An overview of crowd evacuation simulation. Advanced Science Letters, 23(11), 11428–11431. [doi:10.1166/asl.2017.10298]

BALKE, T., & Gilbert, N. (2014). How do agents make decisions? A survey. Journal of Artificial Societies and Social Simulation, 17(4), 13: https://www.jasss.org/17/4/13.html. [doi:10.18564/jasss.2687]

BÈGUE, L., Beauvois, J.-L., Courbet, D., Oberlé, D., Lepage, J., & Duke, A. A. (2015). Personality predicts obedience in a Milgram paradigm. Journal of Personality, 83(3), 299–306.

BOSSE, T., Duell, R., Memon, Z. A., Treur, J., & Van Der Wal, C. N. (2009). Multi-agent model for mutual absorption of emotions. In J. Otamendi, A. Bargiela, J. L. Montes, & L. M. D. Pedrera (Eds.), Proceedings of the 23rd European Conference on Modelling and Simulation (ECMS'09), 2009, 212–218. [doi:10.7148/2009-0212-0218]

BOSSE, T., Hoogendoorn, M., Klein, M. C., Treur, J., Van Der Wal, C. N., & Van Wissen, A. (2013). Modelling collective decision making in groups and crowds: Integrating social contagion and interacting emotions, beliefs and intentions. Autonomous Agents and Multi-Agent Systems, 27(1), 52–84. [doi:10.1007/s10458-012-9201-1]

BOURGAIS, M., Taillandier, P., Vercouter, L., & Adam, C. (2018). Emotion modeling in social simulation: A survey. Journal of Artificial Societies and Social Simulation, 21(2), 5: https://www.jasss.org/21/2/5.html. [doi:10.18564/jasss.3681]

BRATMAN, M. (1987). Intentions, plans, and practical reason. Philosophical Review, 100(2).

BROEKENS, J., Harbers, M., Hindriks, K., Van Den Bosch, K., Jonker, C., & Meyer, J.-J. (2010). Do you get it? User-evaluated explainable BDI agents. German Conference on Multiagent System Technologies. [doi:10.1007/978-3-642-16178-0_5]

BROERSEN, J., Dastani, M., Hulstijn, J., Huang, Z., & Torre, L. van der. (2001). The BOID architecture: Conflicts between beliefs, obligations, intentions and desires. Proceedings of the Fifth International Conference on Autonomous Agents, 9–16. [doi:10.1145/375735.375766]

BYRNE, M., & Anderson, J. (1998). Perception and Action. The Atomic Components of Thought. London, UK: Psychology Press.

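CHIVAS, C., & Cescon, J. (2005). Formalisation du savoir et des outils dans le domaine des risques majeurs (DRA-35) - toxicité et dispersion des fumées d’incendie phénoménologie et modélisation des effets [Formalization of knowledge and tools in the field of major hazards (DRA-35) - toxicity and dispersion of fire smoke: phenomenology and modeling of effects]. INERIS.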

COHEN, P. R., & Levesque, H. J. (1990). Intention is choice with commitment. Artificial Intelligence, 42, 213-261. [doi:10.1016/0004-3702(90)90055-5]

COLLINS, N. L., & Miller, L. C. (1994). Self-disclosure and liking: A meta-analytic review. Psychological Bulletin, 116(3), 457–475. [doi:10.1037/0033-2909.116.3.457]

DIAS, J., Mascarenhas, S., & Paiva, A. (2014). 'FAtiMA Modular: Towards an agent architecture with a generic appraisal framework.' In Bosse, T., Broekens, J., Dias, J. & van der Zwaan, J. (Eds.), Emotion Modeling. Berlin Heidelberg: Springer, pp. 44–56. [doi:10.1007/978-3-319-12973-0_3]

DIGNUM, F., Dignum, V., & Jonker, C. M. (2008). Towards agents for policy making. International Workshop on Multi-Agent Systems and Agent-Based Simulation, 141–153. [doi:10.1007/978-3-642-01991-3_11]