Josep M. Pujol, Andreas Flache, Jordi Delgado and Ramon Sangüesa (2005)

How Can Social Networks Ever Become Complex? Modelling the Emergence of Complex Networks from Local Social Exchanges

Journal of Artificial Societies and Social Simulation

vol. 8, no. 4

<https://www.jasss.org/8/4/12.html>

For information about citing this article, click here

Received: 20-Jun-2005 Accepted: 28-Sep-2005 Published: 31-Oct-2005

Abstract

AbstractMit = {(oij,tij)}j ∈ Jit,

where tij represents the time point at which the memorized interaction took place and Jit represents the subset of all agents that i memorizes, Jit ⊆ {1..N}. One time step corresponds to an interaction round in which all the agents are activated once in random order to make decisions (asynchronous random activation). The subset of network members which an agent can remember is limited in size by a maximum memory size Mc, i.e. #Jit ≤ Mc∀t∀i. We model the maximum memory size Mc as a parameter that is equal for all agents, because we are mainly interested in effects of structural conditions that shape individuals' learning capacities through their ability to store information. Such a condition is for example the capacity of the information storage technology that is available in a society (cf. Mark 1998). Obviously, agents have in our model only access to a partial view of the network, and their knowledge remains local, i.e. it depends exclusively on the individual experiences of agents.

|

(1) |

|

(2) |

| Agent j | |||

Agent i |

C |

D |

|

| C |

(Gij-Lij , Gji-Lji) | (-Lij, Gji) | |

| D |

(Gij, -Lji) | (0, 0) | |

|

(3) |

|

(4) |

where t represents the current point in time.

|

(5) |

where tij refers to the current simulation time and pij denotes the payoff attained in the most recent interaction. When an interaction proposal is rejected, the memory is also updated according to 5, where the payoff pij, is set to zero.

|

(6) |

Knowledge stemming from referees or observation only applies when first-hand knowledge is not available (denoted by tik=0). Once direct experience knowledge about agent k exists (tik > 0), agent i stops updating the expected outcome oik based on third-parties experience.

| Network | N=1000 | N=5000 | N=10000 | |||

| l | C | l | C | l | C | |

| Random | 3.27 | 0.0085 | 3.97 | 0.0016 | 4.30 | 0.00093 |

| BA | 2.98 | 0.037 | 3.52 | 0.010 | 3.76 | 0.0044 |

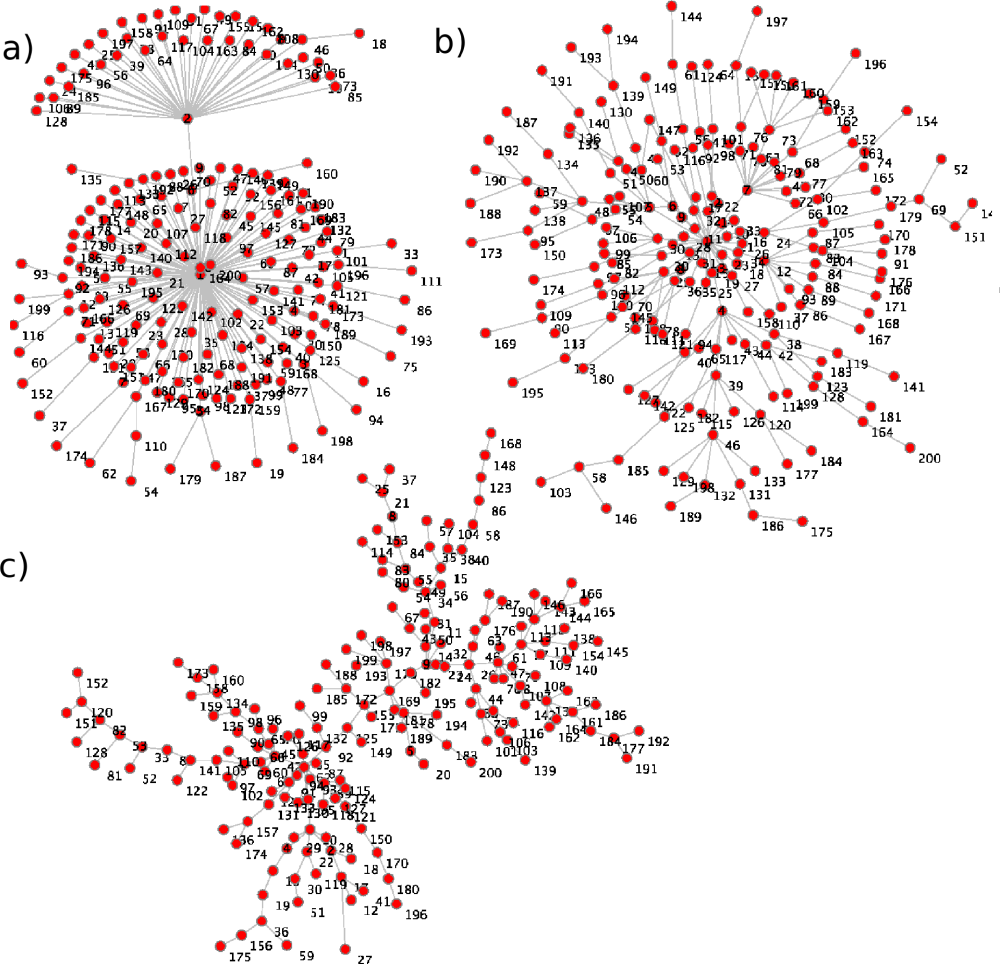

| LO(E/B=0,I) | 2.47 | 0.16 | 3.22 | 0.05 | 3.56 | 0.034 |

| LO(E/B=0,E) | 2.51 | 0.20 | 3.16 | 0.085 | 3.42 | 0.065 |

| LO(E/B=3/16,I) | 3.66 | 0.052 | 4.09 | 0.038 | 4.29 | 0.032 |

| LO(E/B=3/16,E) | 3.71 | 0.034 | 4.14 | 0.030 | 4.37 | 0.029 |

| LO(E/B=8/16,I) | 3.98 | 0.012 | 4.45 | 0.015 | 4.68 | 0.017 |

| LO(E/B=8/16,E) | 3.91 | 0.027 | 4.57 | 0.040 | 4.84 | 0.038 |

|

|

|

|

|

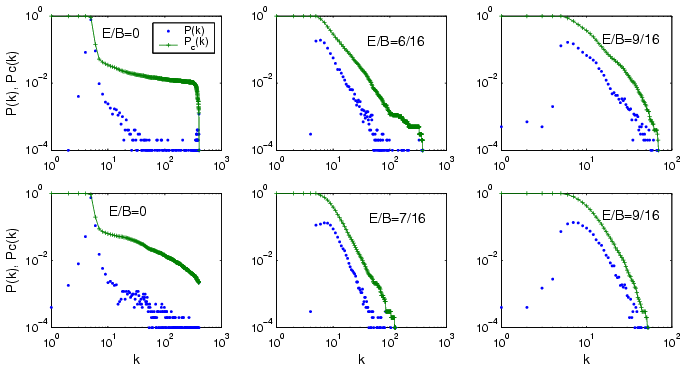

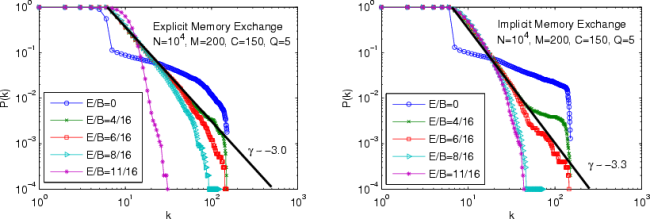

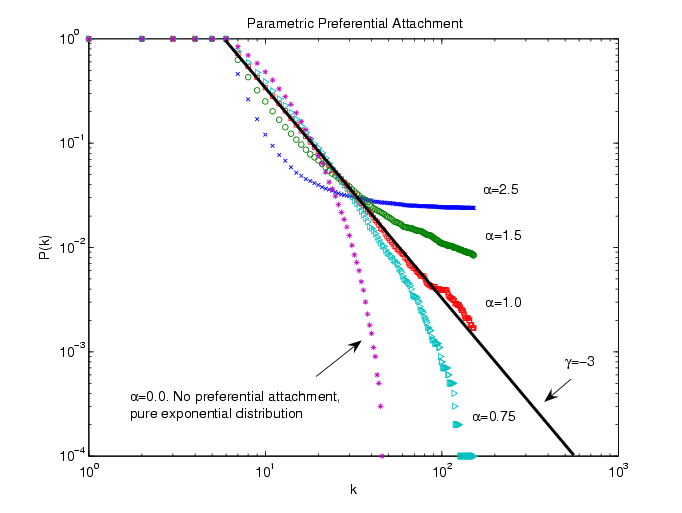

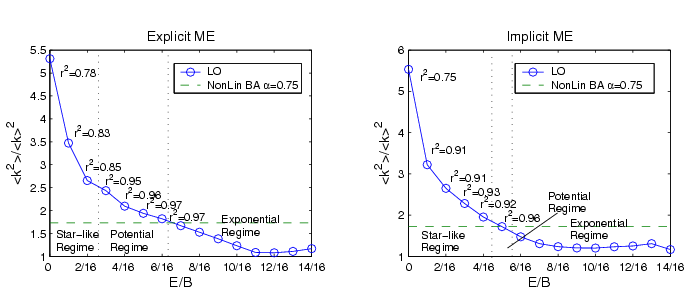

| Setting | Mem. Exch. | Star-like Reg. | Power-law Reg. | Exponential Reg. |

| A | Explicit | [0..5/16] | [6/16] | [7/16..14/16] |

| A | Implicit | [0..6/16] | [7/16] | [8/16..14/16] |

| B | Explicit | [0..3/16] | [4/16..6/16] | [7/16..14/16] |

| B | Implicit | [0..4/16] | [5/16] | [6/16..14/16] |

| C | Explicit | [0..2/16] | [3/16..6/16] | [7/16..14/16] |

| C | Implicit | [0..4/16] | [5/16] | [6/16..14/16] |

| D | Explicit | [0..2/16] | [3/16..7/16] | [8/16..14/16] |

| D | Implicit | [0..4/16] | [5/16] | [6/16..14/16] |

| E | Explicit | [0..2/16] | [3/16..4/16] | [5/16..14/16] |

| E | Implicit | [0..3/16] | [4/16] | [6/16..5/16] |

|

int N; // number of agents

int Mc; // memory size

int Mo; // initial number of agents in memory

int Q; // number of interactions that can be initiated

int C; // interaction capacity

double B; // Benefit

double E; // Effort

ME={Explicit,Implicit} // Memory exchange

Agent {

int id; // agent's id

double alpha; // expertise of the agent, know as 'a' in the model

MemoryEntry memory;

}

MemoryEntry {

int agent; // agent in memory

int expout; // expected outcome of the agent

int time; // time of the last interaction.

// if time<-1 agent belongs to the unknown set

// if time==0 agent belongs to the known set

// if time>0 an interaction occurred at that time

}

// initialization of the model

procedure init() {

Agents agents = new Agent[N];

for each agent in agents {

// init expertise of the agent in the [0.05..0.95] range

// with a 10^-3 precision

agent.alpha = (double)((random()*0.90+0.05)/1000);

agent.memory = new MemoryEntry[Mc];

for each me in agent.memory up to Mo {

me.time=-1;

me.expout=0.0;

me.agent = random(N);

// check that the me.agent is different than the agent itself

// and it's not already in the agent's memory.

}

}

}

procedure main() {

init();

time=1;

do {

createPermutationOfAgents();

do {

agent=getAgentFromPermutation();

run(agent,time);

removeAgentFromPermutation(agent);

} while(!isPermutationEmpty());

time=time+1;

} while(time<100);

} //end main

// agent's behaviour

procedure run(agent, time) {

// chose a set of agents to interact with (<=Q)

agentSet = chooseAgentsToInteract(agent);

for each agentToInteract in agentSet {

// is agentToInteract accepting the agent's proposal

//for interaction?

if (acceptInteractionProposal(agentToInteract,agent)) {

[ag1_outcome,ag2_outcome,ag1_netbenefit,ag2_netbenefit]

= playGame(agent,agentToInteract);

updateMemory(agent,agentToInteract,

ag1_outcome,ag1_netbenefit,time);

updateMemory(agentToInteract,agent,

ag2_outcome,ag2_netbenefit,time);

// if interaction is positive for both agents,

//then exchange memories

if (ag1_outcome>0 and ag2_outcome>0)

exchangeMemories(agent,agentToInteract,time,outcomes);

}

else {

interactionIsRefused(agent,agentToInteract);

}

}

}

// agent chooses a set of agents to interact with (up to Q).

// The set of agent is chosen at random or maximizing the

// expected outcome depending on the exploration

// probability

function chooseAgentsToInteract(agent) {

agentSet = new List();

// we have to calculate the exploration probability. So we

// must know how many agents in agent's memory are known or

// unknown (K and U set in section 2.1)

unknown=0;

known=0;

for each mem in agent.memory {

if (mem.agent>=0) {

if (mem.time<0) unknown=unknown+1;

else known=known+1;

}

}

// equation 3

explorationProb = (unknown / (unknown+known))^2.0;

i=0;

do {

if (explorationProb < random()) {

// agent is not exploring,

mem = maximum(agent.memory);

// mem is the memory entry with maximum expected outcome

// (mem.expout) provided that mem.agent is not already

// in agentSet

agentSet.add(mem.agent);

}

else {

// agent is exploring

// choose one agent at random from agent's memory.

// Check that mem.agent is not already in agentSet.

mem = random(agent.memory);

agentSet.add(mem.agent);

}

i=i+1;

} while(i<Q);

return agentSet;

}

// the agent must decide whether to accept the proposal

// made by initiatorAgent

function acceptInteractionProposal(agent,initiatorAgent) {

mem=getMemoryEntry(agent,initiatorAgent,time);

if (isNull(mem)) {

if (getCurrentInteractions(agent,time)<C) return true;

else return false;

}

else {

if (mem.expout>=0.0

and getCurrentInteractions(agent,time)<C) return true;

else return false;

}

}

// the agent updates its memory after the interaction

// equation 5

procedure updateMemory(agent, partner, outcome, netBenefit, time) {

mem=getMemoryEntry(agent,partner);

if (isNull(mem)) {

// the agent did not have partner in its memory

memToR=getLessAttractiveMemEntry(agent,time);

memToR.agent = partner;

memToR.time = time;

if (netBenefit>=0) memToR.expout = outcome;

else memToR.expout = -outcome;

}

else {

// the agent did have partner in its memory

if (netBenefit>=0) {

// agent cooperated in the interaction with partner

if (mem.time>0) mem.expout=(mem.expout+outcome)/2.0

else mem.expout = outcome;

}

else {

// agent defected in the interaction with partner

mem.expout = -outcome;

}

// update the interaction time, if t>0 means that the agent

// and partner have interacted, otherwise the knowledge

// about partner comes from the memory exchange process

mem.time=time;

}

}

// returns the memory entry from agent's memory whose

// mem.expout (expected outcome) is minimum in

// absolute value. Provided that mem.time < time

function getLessAttractiveMemEntry(agent,time);

// remove the memory entry memToRemove from agent's memory

removeMemoryEntry(agent,memToRemove);

// add the memory entry memToAdd to agent's memory

addMemoryEntry(agent,partner,time,expoutPartner) {

mem = getMemoryEntry(agent,partner);

if (!isNull(mem)) {

// partner was already in agent's memory

if (mem.time<=0) {

if (mem.time<0) mem.expout=expoutPartner;

else if (mem.time==0)

mem.expout=(mem.expout+expoutPartner)/2.0;

mem.time=0;

}

}

else {

// partner was not in agent's memory

memToR = getLessAttractiveMemEntry(agent,time);

if (fabs(memToReplace.expout)<fabs(expoutPartner)) {

// replace the old entry by the new one

memToR.agent = partner;

memToR.expout = expoutPartner;

memToR.time = 0;

}

}

}

// agents exchange information about other agents

procedure exchangeMemories(agent, partner, time) {

if (ME==Explicit) {

memFromAgent = chooseMEExplicit(agent);

memFromPartner = chooseMEExplicit(partner);

}

else {

memFromAgent = chooseMEImplicit(agent,time);

memFromPartner = chooseMEImplicit(partner,time);

}

addMemoryEntry(agent,memFromPartner.agent,time,

memFromPartner.expout);

addMemoryEntry(partner,memFromAgent.agent,time,

memFromAgent.expout);

}

// the agent reduces the expected outcome after

// partner's rejection to interact

procedure interactionIsRefused(agent,partner) {

mem = getMemoryEntry(agent,partner);

mem.expout = (mem.expout+0.0)/2.0;

}

// return a memory entry whose mem.time is bigger than 0,

// which that at least one interaction between agent and mem.agent

// has occurred. The chosen memory entry is the maximum

// mem.expout in absolute value. In the case of a clash the

// returned memory entry will be chosen at random among those with

// maximum expected outcome.

function chooseMEExplicit(agent);

// return a memory entry whose mem.time is equal to the current time,

// which means a current interaction of the agent. The memory entry

// is chosen at random

function chooseMEImplicit(agent, time);

// returns the current number of interactions of the agent, which is

// the number of memory entries in its memory with mem.time equal to

// the current time

function getCurrentInteractions(agent,time);

// play the game G

function playGame(agent1, agent2) {

double Gij, Gji, Lij, Lji;

double outcomeAg1, outcomeAg2;

double netBenefitAg1, netBenefitAg2;

Gij = (1.0-agent1.alpha)*(agent2.alpha)*B;

Lij = (1.0-agent2.alpha)*(agent1.alpha)*E;

Gji = (1.0-agent2.alpha)*(agent1.alpha)*B;

Lji = (1.0-agent1.alpha)*(agent2.alpha)*mEffort;

netBenefitAg1 = Gij-Lij;

netBenefitAg2 = Gji-Lji;

if (netBenefitAg1>=0.0 and netBenefitAg2>=0.0) {

// both agents cooperate

outcomeAg1=Gij-Lij;

outcomeAg2=Gji-Lji;

}

else if (netBenefitAg1>=0.0 and netBenefitAg2<0.0) {

// agent2 defects and agent1 cooperates

outcomeAg1=-Lij;

outcomeAg2=Gji;

}

else if (netBenefitAg1<0.0 and netBenefitAg2>=0.0) {

// agent 1 defects and agent2 cooperates

outcomeAg1=Gij;

outcomeAg2=-Lji;

}

else {

// both agents defect

outcomeAg1=0.0;

outcomeAg2=0.0;

}

return [outcomeAg1,outcomeAg2,netBenefitAg1,netBenefitAg2];

}

// create a random permutation; so agents are executed only once per

// simulation step and they the order is random

function createPermutationOfAgents();

// remove agent from the permutation

procedure removeAgentFromPermutation(agent)

// return when all the agents have been already chosen

function isPermutationEmpty();

// returns the memory entry form agent's memory

// corresponding to agent2 (agentTo=agent.mem.agent).

// If it does not exist return null

function getMemoryEntry(agent, agentTo);

ALBERT, R., Barabási, A.-L., 2002. Statistical mechanics of complex networks. Review of Modern Physics 74, 47-97.

AXELROD, R., 1984. The Evolution of Cooperation. Basic Books, New York.

BARABASI, A.-L., Albert, R., 1999. Emergence of scaling in random networks. Science 286, 509-512.

BLAU, P., 1964. Exchange and Power in Social Life. New York: Wiley.

BONACICH, P., 1992. Social Dilemmas. Theoretical Issues and Research Findings. Pergamon Press, Ofxord, Ch. Communication Networks and Collective Action, pp. 225-245.

BUSKENS, V., 2002. Social Networks and Trust. Kluwer Academin Publishers.

CARLSON, J., Doyle, J., 2000. Highly optimized tolerance: Robustness and design in complex systems. Physical Review Letter 84 (11), 2529-2532.

COHEN, M., Riolo, R., Axelrod, R., 2001. The role of social structure in the maintenance of cooperative regimes. Rationality and Society 13, 5-32.

DAWES, R., 1980. Social dilemmas. Annual Review of Psychology 31, 169-193.

DELGADO, J., 2002. Emergence of social conventions in complex networks. Artificial Intelligence 141, 171-185.

EBEL, H., Davidsen, J., Bornholdt, S., 2002. Dynamics of social networks. Complex. 8 (2), 24-27.

EGUILUZ, V. M., Zimmermann, M. G., Cela-Conde, C., San Miguel, M., 2005. Cooperation and emergence of role differentiation in the dynamics of social networks. American Journal of Sociology 110, 977-1008.

FERRER, R., Solé, R., 2003. Statistical Physics of Complex Networks, Lecture Notes in Physics. Springer, Ch. Optimization in Complex Networks.

FLACHE, A., 2001. Individual risk preferences and collective outcomes in the evolution of exchange networks. Rationality and Society 13 (3), 304-348.

FLACHE, A., Hegselmann, R., 1999a. Altruism vs. self-interest in social support. computer simulations of social support networks in cellular worlds. Advances in Group Processes 16, 61-97.

FLACHE, A., Hegselmann, R., 1999b. Rationality vs. learning in the evolution of solidarity networks: A theoretical comparison. Computational and Mathematical Organization Theory 5 (2), 97-127.

GILBERT, N., Troitzsch, K., 1999. Simulation for the Social Scientist. Open University Press.

GOULD, R., 1993a. Collective action and network structure. American Sociological Review 58, 182-196.

GOULD, R., 1993b. Trade cohesion, class unity and urban insurrection: Artisanal activism in the paris commune. American Journal of Sociology 98, 721-754.

HEGSELMANN, R., 1998. Experimental ethics. De Gruyter, Berlin, Ch. A Computer Simulation of Classes, Cliques and Solidarity, pp. 298-320.

HOMANS, G.C., 1974. Social Behavior. Its Elementary Forms. New York: Harcourt Brace Jovanovich.

KAMADA, R., Kawai, S., 1989. An algorithm for drawing general undirected graphs. Inform. Process. Lett. 31, 7-15.

KOMTER, A., 1996. Reciprocity as a principle of exclusion. Sociology 30 (2), 229-316.

KRAPIVSKY, P., Redner, S., 2001. Organization of growing random networks. Physical Review E 63.

KRAPIVSKY, P., Redner, S., Layvraz, L., 2000. Connectivity of growing random networks. Physical Review Letter 85, 4629-4632.

LINDENBERG, S., 2001. Handbook of Sociological Theory. Kluwer, New York, Ch. Social Rationality versus Rational Egoism, pp. 635-668.

MACY, M., Flache, A., 2002. Learning dynamics in social dilemmas. Proceedings of the National Academy of Sciences 99 (10), 7229-7236.

MACY, M. W., Sato, Y., 2002. Trust, cooperation, and market formation in the u.s. and japan. Proceedins of the National Academy of Sciences 99, 7214-7220.

MARK, N., 1998. Beyond individual differences: Social differentiation from first principles. American Sociological Review 63, 309-330.

MARWELL, G., Oliver, P., Prahl, R., 1988. Social networks and collective action: a theory of the critical mass. American Journal of Sociology 94, 502-534.

PODOLNY, J., Page, L., 1998. Network forms of organizations. Annual Review of Sociology 24, 57-76.

PUJOL, J., Sangüesa, R., Delgado, J., July 2002. Extracting reputation in multi-agent systems by means of social network topology. In: Proceedings of the First International Joint Conference on Autonomous Agents and Multi-Agent Systems AAMAS-02. ACM, Bologna, pp. 467-474.

RAUB, W., Weesie, J., 1990. Reputation and efficiency in social interaction: an example of network effects. Americal Journal of Sociology 96, 626-654.

ROBINS, G., Pattison, P., Woolcock, J., 2005. Small and other worlds: Global network structures from local processes. American Jornal of Sociology 96, 626-654.

SIMON, H., 1982. Models of Bounded Rationality. MIT Press.

SNIJDERS, T. A., 2001. Sociological Methodology. Basil Blackwell, Boston and London, Ch. The Statistical Evaluation of Social Networks Dynamics, pp. 361-395.

TODD, P., Gigerenzer, G., 2003. Bounding rationality to the world. Journal of Economic Psychology 24, 143-165.

WALSH, T., July 1999. Search in a small world. In: In Proceedings of International Joint Conference on Artificial Intelligence (IJCAI'99). San Francisco, pp. 1172-1177.

WALSH, T., August 2001. Search on high degree graps. In: In Proceedings of International Joint Conference on Artificial Intelligence (IJCAI'01). Seattle, pp. 266-271.

WATTS, D., 1999. Network dynamics and the small world phenomenon. Americal Journal of Sociology 105 (2), 493-527.

WATTS, D., Strogatz, S., 1998. Collective dynamics of 'small-world' networks. Nature 393, 440-442.

WOOLDRIDGE, M., Jennings, N., 1995. Intelligent agents: theory and practice. Knowledge Engineering Review 10, 115-152.

YAMAGISHI, T., Cook, K. S., Watabe, M., 1998. Uncertainty, trust, and commitment formation in the united states and japan. American Journal of Sociology 104, 165-194.

YOUNGER, S., 2004. Reciprocity, normative reputation, and the development of mutual obligation in gift-giving societies. Journal of Artificial Societies and Social Simulation 7 (1).

Return to Contents of this issue

© Copyright Journal of Artificial Societies and Social Simulation, [2005]