Abstract

- The Reactive-Causal Architecture (ReCau) is a cognitive agent architecture proposed to simulate human-like intelligence while satisfying the core attributes of believable agents. ReCau combines the intentional notion with motivation theories. ReCau agents are driven by their unsatisfied needs: they act intentionally to satisfy those needs, and the satisfaction or dissatisfaction of needs results in affect display. In this paper, the results of a multi-agent based simulation called the radar task are presented. With the help of this simulation, ReCau is compared with some existing agent and cognitive architectures. The results indicate that ReCau provides a highly realistic decision-making mechanism. The architecture therefore contributes towards the development of believable agents.

- Keywords:

- Reactive-Causal Architecture, Radar Task Simulation

Introduction

- 1.1

- Several scientists, studying organisation theory, attempt to determine the factors that affect organisational performance. To achieve this aim, they have tried to form different formal methods to understand and predict human behaviour in organisational decision-making processes. They have established several formal models by establishing mathematical models, performing simulation studies and using expert systems. These models have provided useful information to scientists on finding gaps and errors in verbal theories and understanding and foreseeing behaviour in organisational decision-making processes (Carley et al. 1998).

- 1.2

- One of the approaches used in this field is performing multi-agent based simulation (MABS). MABS uses techniques of agent-based computing and computer simulation. The purpose of MABS is to study the use of agent technology for simulating any phenomena on a computer (Davidsson 2002). MABS is mostly used in a social context. However, some properties of MABS make it useful for other domains like simulated reality, simulation of behaviour and dynamic scenario simulation. MABS are especially useful for simulating scenarios in more technical domains (Davidsson 2000).

- 1.3

- In the literature, a few multi-agent based simulation studies of the radar task have been reported, using different agent and cognitive architectures. In those studies, scientists attempted to understand the effects of cognition and organisational design on performance by adopting the radar task as an example decision-making problem. The radar task is a typical classification choice task. In this task, agents try to decide whether a blip on a radar screen is friendly, neutral or enemy. In order to decide, agents consider nine different characteristics of the aircraft. The ratio of correct decisions determines the performance in this task (Carley et al. 1998).

- 1.4

- The first simulation on radar task was performed by Carley et al. (1998). In this simulation four different organisational settings are used together with five different agent models. They performed the same simulation on humans and compared the results. They analysed the results at both micro and macro levels. They found that there is a meaningful relationship between design and cognition.

- 1.5

- Later, Sun and Naveh (2004) performed the same simulation using a cognitive architecture called CLARION. With this study, they showed that not much learning took place in the original simulation performed on humans. To illustrate this, they adopted the Q-learning algorithm to enable agents to learn in a trial-and-error fashion. The simulations indicated that if the agents are given enough time, their performance increases.

- 1.6

- The conclusion is that, in the original simulation, the cognitive parameters of the decision-making mechanism affected the performance of the agents. Because not much learning took place in the original study, its results provide a well-designed test bed for decision-making mechanisms.

- 1.7

- In this respect, the radar task simulation is undertaken in this study to test and illustrate the decision-making mechanism of the Reactive-Causal Architecture (ReCau). The simulation provides a means to compare ReCau with some of the existing architectures, since the same simulation has been undertaken using those architectures. In addition, performing the simulation with ReCau without adopting any learning approach gives a chance to observe the effects of cognitive parameters on performance. Therefore, the major aims of this study are: (1) to test and illustrate the decision-making mechanism proposed along with ReCau, and (2) to further understand the relation between organisational design and cognition.

- 1.8

- The organisation of this paper is as follows. In Section 2 existing work related with ReCau architecture is discussed. In Section 3, theoretical background and architectural details of ReCau are elaborated. In Section 4, the radar task simulation is explained. In Section 5, previous radar task simulation results are presented. In Section 6, the results of the simulation by employing ReCau agents are given. In Section 7, evaluation of the radar task simulation by ReCau is presented. In the last section, conclusions and discussions are given.

Related Work

- 2.1

- In 1980, a group of researchers held a workshop at the Massachusetts Institute of Technology. Until that time, the Artificial Intelligence (AI) community was considering intelligence as a whole. However, in that workshop it was asserted that intelligence was constituted of distributed properties like learning, reasoning, and so on. After this workshop, the term agent attracted the focus of attention. This development gave rise to agent technologies which became a commonly approved research area (Jennings et al. 1998).

- 2.2

- Today, the agent term implies entities that represent some aspects of intelligence. In spite of the fact that there is a debate on what the term agent means, Jennings et al. (1998) put forward a commonly acceptable definition as follows:

A computer system, situated in some environment that is capable of flexible autonomous action in order to meet its design objectives.

In this definition, they put forward three attributes that an agent should have: situatedness, flexibility and autonomy. They state that the term situatedness implies entities that are capable of obtaining sensory data and performing actions to change the environment where they are embodied. They explained the term flexibility as a capability for performing flexible actions. They further elaborated this attribute by asserting its three constituents: responsive, pro-active and social. Entities that can understand their environment and respond to the changes that occur in their environment are called responsive. Pro-active entities can perform actions and take initiatives to achieve their objectives. Finally, the term social implies systems that are able to interact with other entities and also help others in their activities.

- 2.3

- By the term autonomy Jennings et al. (1998) were referring to those entities that can perform actions without the assistance of other entities. Moreover, those entities have the ability to control their internal state and actions. Russell and Norvig (2002) gave a stronger sense of autonomy by adding that those entities should also have the ability to learn from experiences. To achieve stronger autonomy, Luck and D'Inverno (1995) suggested that the agents should have motives to allow them to generate goals. In addition to strong autonomy, to develop human-like intelligent agents, the research has indicated the importance of emotions (Bates 1994). Several researchers have stressed that one of the core requirements for believable agents is a capability for affect display.

- 2.4

- These and many other studies have come to the conclusion that the commonly accepted fundamental attributes of a believable agent are situatedness, autonomy, flexibility, and affect display. Whether other attributes are added to believable agents depends on their design objectives.

- 2.5

- Researchers who aim to develop agents satisfying these attributes look for a way to formulate their approaches. For this purpose, particular methodologies such as agent architectures are used. Maes (1991) defined an agent architecture as a particular methodology for building agents. She stated that an agent architecture should be a set of modules. Kaelbling (1986) presented a similar point of view, stating that an agent architecture is a specific collection of modules with arrows indicating the data flow among them. In this context, an agent architecture can be considered as a methodology for designing particular modular decompositions for the tasks of the agents.

- 2.6

- While conceptualising agent and cognitive architectures, most researchers utilize the intentional notion, using it to satisfy the core attributes of intelligent agents. While explaining the intentional stance, Dennett (2000) states three levels of abstraction which help explain and predict the behaviours of entities and objects: (1) physical stance; (2) design stance; and (3) intentional stance.

- 2.7

- Dennett (2000) puts forward physical stance to explain behaviours by utilizing concepts from physics and chemistry. In this level of abstraction behaviours of entities are predicted by considering the knowledge related with things like energy, velocity and so on. As an example, it can easily be predicted a ball would fall down to the ground if it is released from the top of a building. It is due to the fact that there is gravity on earth and the behaviour of the ball can be anticipated by considering this physical principle.

- 2.8

- Design stance is a more abstract level and at this level of abstraction, the manner of acting is explained and predicted through biology and engineering. At this level, things such as a purpose function and existential attributes are taken into account. It can be predicted that the speed of a car is going to increase whenever we press on the gas pedal. It is because of the fact that it is known that the gas pedal is made for increasing the speed of the vehicle. In this case, design stance is utilized to understand the behaviour of the car.

- 2.9

- The last stance is intentional stance which is the most abstract level. At this level of abstraction, an attempt is made to understand and predict the behaviours of software agents and minds by considering mental concepts such as intention and belief. As an example, if a bird flies away while a cat tries to catch it, it can be understood that the bird desires to live. This can be comprehended by taking intentional stance into account.

- 2.10

- According to the intentional stance, entities are treated as rational agents. Behaviours of agents are predicted by considering what beliefs an agent ought to have based on its purpose in a given condition. Afterwards, an attempt is made to predict what desires the agent ought to have, based on the same conditions. Finally, it is predicted that a rational agent will act to achieve its goals under the guidance of its beliefs. From this point of view, practical reasoning helps towards an understanding of what the agent ought to do based on the chosen set of beliefs and desires.

- 2.11

- In the literature, there are several architectures that attempt to mimic human-like intelligence. These architectures fail to satisfy the aforementioned core attributes of believable agents all at once. Most of those studies utilize the intentional notion to simulate human intelligence. To achieve strong autonomy, some of those studies employ learning approaches while others adopt motivation theories. To simulate affect display, a few studies employ an emotion model.

- 2.12

- In this respect, there is no general approach covering all aspects of the problem. To achieve this aim, an approach should employ a learning model, adopt motivation theories, and utilize an emotion model. The Reactive-Causal Architecture is proposed to meet these requirements (Aydin et al. 2008a). For this purpose, ReCau adopts the causality assumption and motivation theories while employing an emotion model. In the following section, the theoretical background of ReCau is given.

The Reactive-Causal Architecture

- 3.1

- In this section, the theoretical background of the Reactive-Causal Architecture is presented first. Then, in the second subsection, the mechanisms and components of the architecture are elaborated.

The Theoretical Background

- 3.2

- The Reactive-Causal Architecture (ReCau) is proposed to develop believable agents while considering agents as neither totally rational nor totally irrational. To achieve these aims, the intentional notion and theories of needs are combined (Aydin et al. 2008b). In particular, ReCau brings together the Belief, Desire, Intention approach and the theories of needs. By combining these approaches, agents are enabled to be driven by their motives. In this manner, the emergence of intelligent behaviour is explained as the result of unsatisfied needs.

- 3.3

- In the literature, attempts to simulate intelligence are made under certain assumptions. The most commonly accepted of these is rationality. In ReCau, causality is instead put forward as the most basic assumption for the simulation of intelligence.

- 3.4

- Even though the intentional notion provides a good theoretical infrastructure for agency, it faces a few but vital obstacles in simulating intelligence. Some of these problems are explicitly stated by the founder of these stances, Daniel Dennett (1987). He stated that there were some unknown issues related to the emergence of intelligent behaviour. Besides, some other objections related to the intentional notion can be put forward.

- 3.5

- First of all, animals cannot be considered only as intentional systems. In other words, too much abstraction may in some cases lead to false predictions of the behaviours of intelligent animals. In the end, these creatures (including humans) are also subject to the so-called physical and design stances. Animals are bound by the physical and chemical principles of the universe; therefore, this situation certainly has an effect on intelligent behaviour.

- 3.6

- Besides, the behaviours of animals are delimited by existential attributes, purposes and functions, and this stance is known as the design stance. Here, the term existential attributes is used in place of the term design to refer to the same thing, since it does not imply a creator. Usually the term design suggests a belief in a creator, and the present study does not aim to refer to one. Instead, the point is that all intelligent creatures have existential attributes regardless of whether a supreme deity created them or not.

- 3.7

- Secondly, the cause of the emergence of intelligent behaviour is not known, as stated by Dennett (1987). It is a commonly accepted fact that intelligent animals, including human beings, are intentional. In other words, the behaviours of animals can to some extent be understood and predicted by employing the intentional stance. However, it cannot be known what causes the emergence of intelligent behaviour; therefore, the emergence of intelligence cannot be simulated by applying the intentional notion alone.

- 3.8

- The last, but not the least, objection to the intentional notion is related to the rationality assumption. While explaining these stances, Dennett (2000) simply assumes that the agents are rational. The rationality debate was initially put forward by Sloman and Logan (1998). They stated that the systems that are developed by utilizing findings of AI are neither rational nor irrational.

- 3.9

- Even though the above opposition is a good starting point, a more serious objection is put forward by Stich (1985). He questioned whether a man is ideally rational or irrational. He argued that human beings often have beliefs and/or desires which are irrational, and that the intentional stance does not help in understanding and predicting the behaviours which result from irrational beliefs and/or desires.

- 3.10

- When explaining the intentional notion, Dennett (1987) stated that animals are to be treated as rational agents and then attempted to understand what beliefs an agent ought to have, given its situation and purpose. However, as explained by Stich (1985), human beings have beliefs and desires which are irrational. Therefore, while trying to understand and predict the behaviours of intelligent beings, the intentional stance fails to explain the behaviours that are the result of irrational beliefs and/or desires.

- 3.11

- Moreover, in many cases rational behaviour depends on time, culture and context, and is limited by the beliefs an intelligent being has. Various behaviours of human beings in the past were thought to be rational, while today some of them look irrational. Many more behaviours are considered rational in certain cultures, but are presumed irrational in others. Moreover, all intelligent behaviours are limited by the beliefs of an intelligent being, which in turn may yield irrational actions.

- 3.12

- A final, important concern here is that rationality is constrained by the beliefs an intelligent being has. For instance, suppose a cat is given to Jack as a present, and assume that Jack does not know much about cats. The following day, he wants to go outside. Before going out, he decides to keep the cat away from his parlour. Therefore, he rationally closes the door of the parlour!

- 3.13

- Is closing the door really a rational behaviour? We would say "No", knowing that some cats can open a closed door. Cats are capable of using a door handle: they can jump on the handle and, by pressing it down, open a closed door (Thorndike 1998). Therefore, locking the door would have been the rational action for Jack to take before going out. When deciding, however, he held the belief "Cats cannot open closed doors". This belief is not sufficient to produce the rational action. Therefore, as stated previously, intelligent behaviour is limited by the beliefs that intelligent beings have.

- 3.14

- In the frame of the above references, along with the ReCau architecture it is asserted that the rationality assumption fails to explain all aspects of the intelligent behaviour. However, without the rationality assumption the intentional notion is very useful in explaining, understanding, and predicting intelligent actions.

- 3.15

- Instead of the rationality assumption, along with ReCau, it is asserted that the most basic assumption of intelligence simulation should be causality. Basically, causality denotes the relationship between one event and another event which is the consequence of the first. The first event is called the cause, while the latter is called the effect. The cause must be prior to, or at least simultaneous with, the effect. According to causality, the cause and the effect must be connected by a nexus, which is a chain of intermediate things in contact (Pearl 2000).

- 3.16

- By the proposed approach, it is suggested that intelligent behaviour is produced in accordance with causality. Firstly, intelligent entities observe their internal state and the environment. Then based on the perceived input, intelligent entities perform some actions in the environment. According to this viewpoint, perceived input is the cause while the taken action is the effect.

- 3.17

- In fact, all of the current architectures which try to mimic intelligent behaviour produce that behaviour in accordance with causality, even if the researchers are not aware of this fact. Therefore, the most basic assumption of the simulation of intelligence is that intelligent behaviour is produced in accordance with causality.

- 3.18

- Aside from this fact, according to the present approach each cause and effect is connected by a particular motive. In other words, in the proposed approach the nexi are the needs. These needs provide the means to select among alternatives. From this point of view, while producing intelligent behaviour the intelligent beings choose a plan which satisfies their motives (i.e. needs) the best.

- 3.19

- Within this point of view, one can understand and predict an intelligent-being's behaviour if he knows its motives. Instead of considering the rational actions an intelligent-being has, one should consider its motives. Based on these motives, acts of an intelligent being can be understood and predicted by considering the action which satisfies the associated motive best.

- 3.20

- According to Britannica Concise Encyclopaedia, the term motivation refers to factors within an animal that arouse and direct goal-oriented behaviours (Britannica Concise Encyclopaedia 2005). Theories of human motivation have been extensively studied in disciplines such as Psychology. Among several established motivation theories, in the ReCau architecture, the theory of needs which is the most commonly accepted theory in explaining motives is adopted.

- 3.21

- The original theory of needs was proposed by Maslow (1987). According to this theory, human-beings are said to be motivated by their unsatisfied needs. Maslow lists these needs in a hierarchical order in five groups: physiological, safety, love and belongingness, esteem and self-actualization.

- 3.22

- The lowest level needs are the physiological needs such as air, water, food, sleep, sex, and so on. According to Maslow, when these needs are not satisfied, sickness, irritation, pain and discomfort can be felt. These needs motivate human-beings to alleviate them as soon as possible.

- 3.23

- Humans also need safety and security of themselves and their family. This need can be satisfied with a secure house and good neighbourhood. In addition to this, safety needs sometimes motivate people to be religious.

- 3.24

- Love and belongingness are the next level of needs. Human-beings need to be a member of groups like family, work groups, and religious groups. They need to be loved by others and need to be accepted by others. According to Maslow (1987), it is an essential need to be needed.

- 3.25

- Esteem needs are categorized in two as self-esteem and recognition that comes from others. Self-esteem results from competence or mastery of a task. This is similar to the belongingness; however, wanting admiration has to do with the need for power.

- 3.26

- The need for self-actualization is the need in becoming everything that one is capable of, as far as physical and mental capabilities permit. Human-beings that meet the other needs can try to maximize their potential. They can seek knowledge, peace, aesthetic experiences, self-fulfilment, and oneness with deity, and so on.

- 3.27

- According to Maslow (1987), certain lower needs are to be satisfied before higher level needs can be satisfied. From this perspective, once the lower level needs are taken care of, human-beings can start thinking about higher level needs.

- 3.28

- By expanding and revising the above theory, Alderfer (1972) proposed the Existence, Relatedness and Growth (ERG) theory. In ERG, physiological and safety needs are placed in the existence category. The relatedness category contains love and external esteem needs. The growth category includes the higher order needs: self-actualization and self-esteem. Alderfer (1972) removed overlapping needs in the hierarchy of Maslow and reduced the number of levels. Therefore, in the current study ERG theory is adopted. It must be noted that ERG needs can still be mapped to those of Maslow's theory (Aydin and Orgun 2010).
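
The grouping of Maslow's needs into ERG categories described above can be written down as a small lookup table. This is only an illustrative sketch: the identifiers `MASLOW_TO_ERG`, `ERG_LEVEL`, `erg_category` and `erg_level` are hypothetical, and the numeric levels are an assumed encoding of "lower" and "higher" needs rather than values taken from the theory.

```python
# Mapping of Maslow's needs to ERG categories, as described in the text.
# All identifiers here are hypothetical names chosen for illustration.
MASLOW_TO_ERG = {
    "physiological": "existence",
    "safety": "existence",
    "love_and_belongingness": "relatedness",
    "external_esteem": "relatedness",
    "self_esteem": "growth",
    "self_actualization": "growth",
}

# Assumed numeric encoding: a smaller number means a lower-level need.
ERG_LEVEL = {"existence": 1, "relatedness": 2, "growth": 3}


def erg_category(maslow_need: str) -> str:
    """Return the ERG category that contains the given Maslow need."""
    return MASLOW_TO_ERG[maslow_need]


def erg_level(maslow_need: str) -> int:
    """Return the assumed hierarchy level of the need's ERG category."""
    return ERG_LEVEL[erg_category(maslow_need)]
```

For example, `erg_category("safety")` yields `"existence"`, which reflects how ERG merges two of Maslow's levels into one.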

- 3.29

- Along with this approach an emotion model is incorporated in ReCau in order to enable ReCau agents to display affect. In ReCau, affect is considered to be post-cognitive by following Lazarus (1982). According to this point of view, an experience of emotions is based on a prior cognitive process. As stated by Brewin (1989), in this process, the features are identified, examined and weighted for their contributions.

- 3.30

- Maslow (1987) also considers emotions as post-cognitive. While explaining the needs, he states that if the physiological needs are not satisfied, it results in different emotional states like irritation, pain and discomfort. Hereby, his approach is extended by stating that the satisfaction or the dissatisfaction of not only physiological needs but also every need results in feeling different emotions.

- 3.31

- To explain emotions, an approach commensurate with the above idea is adopted. Specifically, the emotion model proposed by Wukmir (1967) is adopted. He proposed that emotions are a mechanism that provides information on the degree of favourability of the perceived situation. If the situation seems to be favourable to the survival of an intelligent being, then the being experiences a positive emotion. A being experiences a negative emotion when the situation seems to be unfavourable for its survival.

- 3.32

- When the theories of needs are considered, it can be claimed that the survival of the beings depends on meeting their needs. From this point of view, Wukmir's (1967) approach and theories of needs can be combined. According to this proposal, every need in the hierarchy can be associated with two different emotions. While one of these emotions is positive, the other one is negative. Whenever a particular need is adequately satisfied, it results in the generation of a positive emotion; since, it is favourable for survival. Likewise, if a particular need is not sufficiently satisfied, it results in a negative emotion. It is because of the fact that when a need is not satisfied, it is not favourable for survival.
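
The pairing of each need with one positive and one negative emotion can be sketched as follows. This is a hedged illustration, not the emotion generator of ReCau: the class `NeedEmotions`, the function `generate_emotion`, the emotion labels and the idea of a numeric `threshold` for "adequately satisfied" are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NeedEmotions:
    """A need together with its two associated emotions (hypothetical type)."""
    need: str
    positive: str
    negative: str


def generate_emotion(pair: NeedEmotions, satisfaction: float, threshold: float) -> str:
    """Return the positive emotion if the need is adequately satisfied.

    'Adequately satisfied' is modelled here as satisfaction >= threshold,
    which is an assumption; the text does not define the cut-off.
    """
    if satisfaction >= threshold:
        return pair.positive  # favourable for survival
    return pair.negative      # unfavourable for survival


# Emotion labels below are purely illustrative.
safety = NeedEmotions(need="safety", positive="relief", negative="fear")
```

Calling `generate_emotion(safety, satisfaction=0.8, threshold=0.5)` selects the positive label, while a satisfaction below the threshold selects the negative one.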

- 3.33

- In addition to these features of ReCau, social learning theory is also incorporated in ReCau architecture. For this purpose, social learning theory proposed by Bandura (1969) is adopted. This theory focuses on learning in a social context. The principles of social learning theory can be summarised as follows (Ormrod 2003):

- Agents can learn by observing the behaviours of others and the outcomes of those behaviours.

- Learning may or may not result in a behaviour change of an agent.

- Expected reinforcements or punishments can have effect on the behaviours of an agent.

- 3.34

- Additionally, Bandura (1969) suggests that the environment reinforces social learning. He states that this can happen in several different ways such as:

- A group of agents with a strong likeness to an agent can reinforce learning from them. For instance, a group of planning agents who use a hybrid planning approach can reinforce the other planning agents to learn the same approach.

- An individual third agent which has influence on an agent can reinforce learning from the other agents. As an example, a planning manager agent can reinforce one of the planning agents to learn a hybrid planning approach from the other agents.

- The expectation of satisfaction from a behaviour that is performed by the other agents can reinforce an agent to learn. The agent can observe that the other agents create plans faster than itself due to the use of the hybrid planning approach. In turn, the agent would be reinforced to learn to use the same approach to create plans faster.

- 3.35

- By adopting these ideas in the ReCau architecture, a social learning model is proposed. In order to establish the model, reinforcement learning is utilized. According to reinforcement learning, the agents learn a policy of how to act given an observation of the world. The policy maps the states of the world to the actions that the agent ought to take in those states (Sutton and Barto 1998).

- 3.36

- An agent can learn either in a supervised or an unsupervised manner. The reinforcement in the present approach is provided by predicting the satisfaction that can be obtained by using the plan to be learned. If an agent considers that the plan is sufficiently satisfactory, then the agent starts learning. Otherwise, the agent does not learn the plan.

- 3.37

- In supervised learning, an agent receives a complete plan from another agent. Since reinforcement learning is adopted, the plans include the conditions and the actions. In addition, when providing a plan to another agent, an agent also provides the associated need. In the proposed approach, reinforcement is realised through satisfaction. To put this in practice, a social context is established for each agent and every agent is assigned to a group.
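
The supervised case described above, where an agent is offered a complete plan and learns it only if the predicted satisfaction is sufficient, can be sketched as below. The types `Plan` and `LearningAgent`, the field names and the `sufficiency_threshold` parameter are hypothetical; in particular, the text does not say how "sufficiently satisfactory" is quantified, so a simple threshold is assumed.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Plan:
    """A plan as shared between agents: condition, actions, associated need."""
    condition: str
    actions: tuple[str, ...]
    need: str
    predicted_satisfaction: float  # assumed to be supplied with the plan


@dataclass
class LearningAgent:
    sufficiency_threshold: float  # assumed cut-off for "sufficiently satisfactory"
    plan_library: dict[str, Plan] = field(default_factory=dict)

    def offer(self, plan: Plan) -> bool:
        """Learn the offered plan only if its predicted satisfaction suffices."""
        if plan.predicted_satisfaction < self.sufficiency_threshold:
            return False  # the agent does not learn the plan
        self.plan_library[plan.condition] = plan
        return True


agent = LearningAgent(sufficiency_threshold=0.6)
hybrid = Plan("planning_request", ("use_hybrid_planner",), "growth", 0.9)
slow = Plan("planning_request_b", ("use_slow_planner",), "growth", 0.3)
```

Here `agent.offer(hybrid)` stores the plan in the library, whereas `agent.offer(slow)` is rejected because its predicted satisfaction is below the assumed threshold.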

The ReCau Architecture

- 3.38

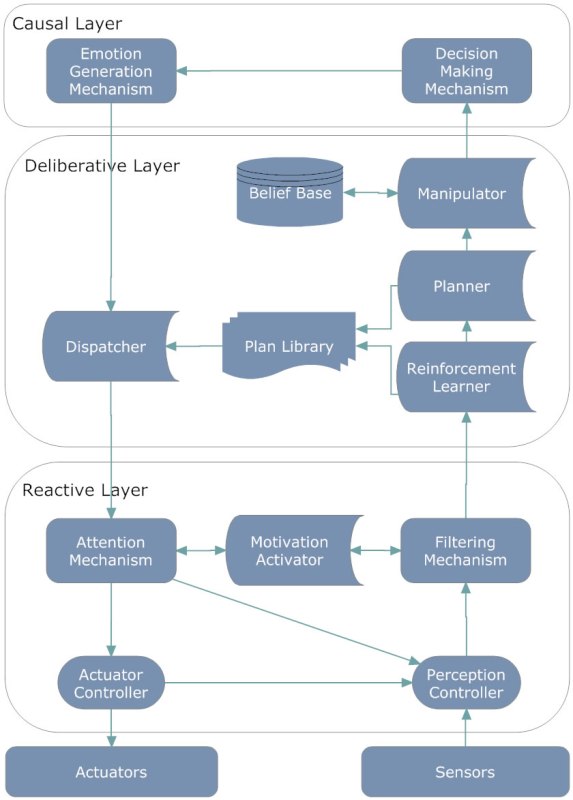

- The Reactive-Causal Architecture is a three-tiered architecture consisting of reactive, deliberative and causal layers. While the lowest layer is reactive, the highest layer is causal. The reactive layer controls perception and action. The deliberative layer has capabilities such as learning, action planning and task dispatching. In the highest layer, decision-making and emotion generation occur. The components of the architecture are shown in Figure 1. Subsequently, the functions of these components are elaborated.

Figure 1. Reactive-Causal Architecture

- 3.39

- ReCau continuously observes internal and external conditions by a perception controller. After receiving these conditions, data is sent to a filtering mechanism. The filtering mechanism filters out data which is not related with the predefined needs of an agent. If the sensory data is relevant to the needs of the agent, the motivation activator generates a goal to satisfy the corresponding need.
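
The filtering step and the motivation activator can be sketched as a single function. This is a minimal sketch under stated assumptions: the table `CONDITION_TO_NEED`, the condition names, the `satisfy_<need>` goal naming and the function `activate_motivation` are all hypothetical and serve only to show the control flow.

```python
from typing import Optional

# Hypothetical table relating perceived conditions to predefined needs.
CONDITION_TO_NEED = {
    "low_energy": "existence",
    "message_from_peer": "relatedness",
}


def activate_motivation(
    condition: str, condition_to_need: dict[str, str]
) -> Optional[tuple[str, str, str]]:
    """Filter a perceived condition and, if relevant, generate a goal.

    Returns (goal, condition, need), or None when the condition is not
    related to any predefined need and is therefore filtered out.
    """
    need = condition_to_need.get(condition)
    if need is None:
        return None  # irrelevant sensory data is discarded
    goal = f"satisfy_{need}"  # assumed naming scheme for goals
    return goal, condition, need
```

With the table above, `activate_motivation("low_energy", CONDITION_TO_NEED)` produces a goal for the existence need, while an unrelated condition such as `"bird_flying_by"` is filtered out.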

- 3.40

- To realise these mechanisms, each condition is related to a need. To put this idea into practice, all of the needs of an agent are organised in a hierarchical order. The needs are defined by adopting the Existence, Relatedness and Growth approach proposed by Alderfer (1972). Depending on the design purpose of the architecture, needs are selected in accordance with the ERG approach.

- 3.41

- The goals of an agent are held in a queue. In this queue, goals are ordered hierarchically in accordance with the level of the corresponding need. The goal related to the lowest level need takes the first place in the hierarchy, which means that it is processed first. The first goal in the queue and the related condition and need are sent to the deliberative layer by the filtering mechanism. When the goal, condition and need are sent, the goal is removed from the queue and the agent status is changed to busy.
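
The goal queue described above behaves like a priority queue keyed on the level of the corresponding need. The following sketch uses Python's `heapq` for this; the class `GoalQueue`, its method names and the convention that level 1 is the lowest need are assumptions made for illustration.

```python
import heapq
import itertools


class GoalQueue:
    """Goals ordered by need level; the lowest-level need is served first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order
        self.busy = False

    def push(self, need_level: int, goal: str, condition: str, need: str) -> None:
        entry = (need_level, next(self._counter), goal, condition, need)
        heapq.heappush(self._heap, entry)

    def dispatch(self):
        """Remove the first goal and mark the agent as busy.

        Returns (goal, condition, need), or None if the queue is empty.
        """
        if not self._heap:
            return None
        _, _, goal, condition, need = heapq.heappop(self._heap)
        self.busy = True
        return goal, condition, need


queue = GoalQueue()
queue.push(3, "improve_skill", "idle_time", "growth")
queue.push(1, "recharge", "low_energy", "existence")
queue.push(2, "reply_to_peer", "message_from_peer", "relatedness")
first = queue.dispatch()
```

After these calls, `first` holds the existence-level goal, because it corresponds to the lowest level need, and `queue.busy` is set.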

- 3.42

- Whenever a goal, a condition and a need are sent, they reach a reinforcement learner. The reinforcement learner is linked to a plan library. By using data in the plan library, the reinforcement learner determines if learning is required or not. If the reinforcement learner cannot map received condition with a plan, the agent starts learning. Otherwise, the reinforcement learner sends the condition and goal to a planner.

- 3.43

- Whenever the planner receives the condition and goal, it develops a plan by the help of the plan library. The planner of ReCau is a discrete feasible planner which means the state space is defined discretely. The planning starts in an initial state and tries to arrive at a specified goal state. Initial state is the condition received and the goal which satisfies the associated need is the final state.
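
Discrete feasible planning of this kind can be illustrated with a forward search over a discrete state space. The sketch below uses breadth-first search, which is one common choice and not necessarily the search used by the ReCau planner; the function `feasible_plan` and the representation of the plan library as a dictionary of `(action, next_state)` transitions are assumptions.

```python
from collections import deque
from typing import Optional


def feasible_plan(
    transitions: dict[str, list[tuple[str, str]]],
    initial_state: str,
    goal_state: str,
) -> Optional[list[str]]:
    """Breadth-first forward search from the initial state to the goal state.

    `transitions` maps a state to the (action, next_state) pairs available
    in it. Returns a list of actions, or None if the goal is unreachable.
    """
    frontier = deque([initial_state])
    parent: dict[str, Optional[tuple[str, str]]] = {initial_state: None}
    while frontier:
        state = frontier.popleft()
        if state == goal_state:
            actions = []
            # Walk back through the recorded parents to rebuild the plan.
            while parent[state] is not None:
                previous, action = parent[state]
                actions.append(action)
                state = previous
            return actions[::-1]
        for action, next_state in transitions.get(state, []):
            if next_state not in parent:
                parent[next_state] = (state, action)
                frontier.append(next_state)
    return None


# Hypothetical plan library: the received condition is the initial state.
library = {
    "low_energy": [("go_to_charger", "at_charger")],
    "at_charger": [("plug_in", "energy_restored")],
}
```

For this library, `feasible_plan(library, "low_energy", "energy_restored")` returns the two actions in order, and an unreachable goal state yields `None`.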

- 3.44

- In ReCau, each goal state corresponds to a certain mean value of satisfaction degree (μ) and a variance value (σ²), which means that each plan alternative corresponds to these values. Once the plan alternatives are developed, they are sent to a manipulator together with their associated values, the need, and the condition.

- 3.45

- The responsibilities of the manipulator are to judge plan alternatives and to resolve conflicts with other agents by using a belief base. When the manipulator receives a condition, the beliefs are updated, if required, in accordance with the changing condition. Then the manipulator checks the post-conditions of each plan alternative. If there is a belief associated with a post-condition, the manipulator analyses the impact of the belief on the plan alternative. To realise this impact, each belief has a certain impact factor (ψ). The impact factor is a value between -1 and 1: 0 signifies no influence, 1 signifies the strongest positive impact, and -1 signifies the strongest negative impact. A positive impact factor increases the mean value of the satisfaction degree, while a negative impact factor reduces it.

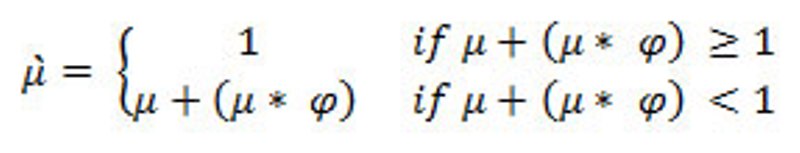

The manipulator recalculates the mean values of satisfaction degrees of each plan alternative by using these impact factors. Recalculated values are called ameliorated mean values of satisfaction degrees (μ'). These values are calculated by the following formula:

(1)

- 3.46

- Having calculated the ameliorated mean values, the plan alternatives along with these values are sent to the causal layer. The plan alternatives, the need, the goal and corresponding values reach the decision-making mechanism first. The decision-making mechanism uses these values to determine the satisfaction degrees for each plan alternative.

- 3.47

- To determine the satisfaction degrees, the polar technique proposed by Box and Muller (1958) is first applied to generate two independent and identically distributed (iid) uniform random variables (U1 and U2). These variables are then transformed into two independent, normally distributed random numbers (p1 and p2) with a mean of 0 and a standard deviation of 1; that is, p1 and p2 are iid N(0, 1).

After obtaining two normally distributed random numbers (p1 and p2), one of them is used to calculate a satisfaction degree (δ). The formula for calculating satisfaction degrees is shown below.

(2)

As stated before, the satisfaction degree should be between 0 and 1. Therefore, if the calculated value is greater than 1, the satisfaction degree is set to 1, and if the calculated value is less than 0, the satisfaction degree is set to 0. Otherwise, the calculated value becomes the satisfaction degree of the corresponding plan alternative.
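The sampling and clamping steps described above can be sketched in Java as follows. Because formula (2) is not reproduced in this text, the sketch assumes the usual location-scale form δ = μ' + σ·p, with σ taken as the square root of the variance value; this form, the class name `SatisfactionSampler` and its method names are assumptions made for illustration only.

```java
import java.util.Random;

/** Illustrative sampler for satisfaction degrees (names are hypothetical). */
final class SatisfactionSampler {
    private final Random rng;

    SatisfactionSampler(long seed) {
        this.rng = new Random(seed);
    }

    /** Polar method: turns two uniform draws into two independent N(0, 1) draws. */
    double[] standardNormalPair() {
        double u1;
        double u2;
        double s;
        do {
            u1 = 2.0 * rng.nextDouble() - 1.0; // uniform on [-1, 1)
            u2 = 2.0 * rng.nextDouble() - 1.0;
            s = u1 * u1 + u2 * u2;
        } while (s >= 1.0 || s == 0.0);        // keep only points inside the unit circle
        double factor = Math.sqrt(-2.0 * Math.log(s) / s);
        return new double[] {u1 * factor, u2 * factor};
    }

    /**
     * Assumed form of formula (2): delta = mu' + sigma * p, with sigma = sqrt(variance).
     * The result is clamped to the interval [0, 1] as described in the text.
     */
    double satisfactionDegree(double amelioratedMean, double variance) {
        double p = standardNormalPair()[0];    // only one of the two draws is used
        double delta = amelioratedMean + Math.sqrt(variance) * p;
        return Math.max(0.0, Math.min(1.0, delta));
    }
}
```

With a mean of 0.70 and a variance value of 0.085, a noticeable share of draws falls outside [0, 1] before clamping, so the clamping rule is not a corner case in this parameter range.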

- 3.48

- Each plan alternative corresponds to a different mean value of the satisfaction degree and a variance value. Therefore, a satisfaction degree is calculated for each plan alternative. These values are then compared by the decision-making mechanism, and the plan alternative with the highest satisfaction degree is considered the most satisfactory plan alternative.
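The comparison step can be illustrated with a short Java sketch. It is a simplified stand-in rather than the published implementation: `Random.nextGaussian()` replaces the polar-method draw, the satisfaction degree is assumed to be μ' + σ·p clamped to [0, 1], and ties are resolved in favour of the first alternative. The class and method names are hypothetical.

```java
import java.util.Random;

/** Illustrative decision step: pick the alternative with the highest sampled degree. */
final class DecisionMaker {

    /**
     * Returns the index of the most satisfactory plan alternative.
     * amelioratedMeans[i] and variances[i] describe plan alternative i.
     */
    static int mostSatisfactory(double[] amelioratedMeans, double[] variances, Random rng) {
        int best = 0;
        double bestDelta = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < amelioratedMeans.length; i++) {
            // nextGaussian() draws from N(0, 1); assumed form delta = mu' + sigma * p
            double delta = amelioratedMeans[i] + Math.sqrt(variances[i]) * rng.nextGaussian();
            delta = Math.max(0.0, Math.min(1.0, delta)); // clamp to [0, 1]
            if (delta > bestDelta) {                     // strict comparison: first alternative wins ties
                bestDelta = delta;
                best = i;
            }
        }
        return best;
    }
}
```

Because each degree is a random draw, an alternative with a lower mean can still be selected; the smaller the variance, the more often the alternative with the highest mean wins.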

- 3.49

- After the most satisfactory plan alternative is found, it is selected as the intention of the agent. The intention is sent to the emotion generation mechanism along with the satisfaction degree, the need and the goal.

- 3.50

- In the emotion generation mechanism, the needs are associated with certain emotions. There are four emotion types in ReCau: (1) strong positive emotion, (2) positive emotion, (3) negative emotion, and (4) strong negative emotion. If the satisfaction degree is above or below certain emotion limits then the corresponding emotion is generated. More details on the emotion generation mechanism can be found in (Aydin and Orgun 2008).

- 3.51

- After determining the intention and the emotion, they are sent to the dispatcher. The responsibility of the dispatcher is to assign tasks to the components. To assign those tasks, according to the intention (i.e., the selected plan) and the emotion, the dispatcher obtains the details of the plans from the plan library. In the plan library, each action is described explicitly in such a way that each action corresponds to certain components. The emotions are also kinds of plans; therefore, they are held in the plan library.

- 3.52

- Then the details of the actions are sent to the actuator controllers through the attention mechanism. The attention mechanism enables the agent to focus on meeting the active motive. While the agent executes actions to satisfy a particular need, the attention mechanism keeps it focused on that activity by directing the controlling mechanisms. If the agent's active goal changes while the actions are being performed, the attention mechanism changes the focus of the controllers. In other words, the agent stops executing the action by warning its actuator and perception controllers, and these components start focusing on the new active goal. The actuator postpones the current action and resumes it after the new active goal has been completed.

- 3.53

- The last component of the reactive layer is the actuator controller. This component provides a means to perform actions in the environment. To perform actions, the ReCau agent requires external components like a body, arms, or just a message passing mechanism. These additional mechanisms may vary according to the design purposes of an agent.

- 3.54

- More details on the theoretical background, components and mechanisms of the ReCau architecture can be found in Aydin and Orgun (2010).

The Description of the Radar Task Simulation


- 4.1

- In the field of organisational research, many researchers have focused on the determinants of organisational performance. For this purpose, organisational theorists have attempted to develop various formalisms to predict behaviour. Several formal models have been developed by using mathematics, simulation, expert systems and formal logic. These models help researchers to obtain information on organisational behaviour, to find errors and gaps in verbal theories, and to determine whether theoretical propositions are consistent (Carley et al. 1998).

- 4.2

- Carley et al. (1998) explain organisational performance as a function of the task performed. A typical task is a classification choice task in which decision makers gather information, classify it and make a decision based on the classified information. In the field of organisational design, many researchers have adopted the radar task in order to determine the impact of cognition and design on organisational performance. In this task, organisational performance is characterised as accuracy.

- 4.3

- In the radar task, the agents try to determine whether a blip on a radar screen is a hostile plane, a civilian plane, or a flock of geese. There are two types of radar task: static and dynamic. In the static version of the task, the aircraft do not move on the radar screen. In the dynamic radar task, the aircraft move and the analysts may examine an aircraft several times (Lin and Carley 1995). In the present study, the static version of the radar task is adopted. Therefore, the static radar task is explained below.

- 4.4

- In this task, there is a single aircraft in the airspace at a given time. Each aircraft is uniquely characterised by nine different features. The list of these features is shown in Table 1.

Table 1: The Features of an Aircraft [Source: (Lin and Carley 1995)]

| Name | Range | Criticality: Low | Criticality: Medium | Criticality: High |
|---|---|---|---|---|
| Speed | 200-800 miles/hour | 200-400 | 401-600 | 601-800 |
| Direction | 0-30 degrees | 21-30 | 11-20 | 0-10 |
| Range | 1-60 miles | 41-60 | 21-40 | 1-20 |
| Altitude | 5,000-50,000 feet | 35k-50k | 20k-35k | 5k-20k |
| Angle | (-10)-(10) degrees | (4)-(10) | (-3)-(3) | (-10)-(-4) |
| Corridor Status | 0 (in), 1 (edge), 2 (out) | 0 | 1 | 2 |
| Identification | 0 (Friendly Military), 1 (Civilian), 2 (Unknown Military) | 0 | 1 | 2 |
| Size | 0-150 feet | 100-150 | 50-100 | 0-50 |
| Radar Emission Type | 0 (Weather), 1 (None), 2 (Weapon) | 0 | 1 | 2 |

- 4.5

- In the radar task simulations, each of the above characteristics can take on one of three values (low = 1, medium = 2, or high = 3). A number of agents must determine whether an observed aircraft is friendly (1), neutral (2), or hostile (3). The number of possible aircraft is 19,683, which is the number of unique combinations of the features (3^9).

- 4.6

- A task environment can be either biased or unbiased. If the possible outcomes of the task are not equally likely, the task environment is said to be biased. If approximately one third of the aircraft are hostile and one third are friendly, the environment is said to be unbiased. Lin and Carley (1992) state that biased tasks are less complex, since a particular solution outweighs the others.

- 4.7

- The true state of an aircraft is determined by adding the values of the above 9 features. In an unbiased environment, if the sum is less than 17, then the true state of the aircraft is friendly. If the sum is greater than 19, then the true state of the aircraft is hostile. Otherwise, the aircraft's true state is neutral. The true state of the aircraft is not known before making the decision (Lin and Carley 1992).
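The classification rule above is simple enough to state directly in Java. The sketch below encodes the thresholds given in the text for the unbiased environment and enumerates all 3^9 feature combinations; the class name `RadarTask` and the method names are hypothetical.

```java
/** Illustrative encoding of the true-state rule for the unbiased radar task. */
final class RadarTask {
    static final int FRIENDLY = 1;
    static final int NEUTRAL = 2;
    static final int HOSTILE = 3;

    /** features: nine values, each 1 (low), 2 (medium) or 3 (high). */
    static int trueState(int[] features) {
        int sum = 0;
        for (int f : features) {
            sum += f;
        }
        if (sum < 17) {
            return FRIENDLY;
        }
        if (sum > 19) {
            return HOSTILE;
        }
        return NEUTRAL;
    }

    /** Enumerates all 3^9 aircraft; returns counts indexed by state (index 0 is unused). */
    static int[] countStates() {
        int[] counts = new int[4];
        int[] features = new int[9];
        for (int code = 0; code < 19683; code++) {
            int c = code;
            for (int i = 0; i < 9; i++) { // decode the base-3 digits into feature values 1..3
                features[i] = c % 3 + 1;
                c /= 3;
            }
            counts[trueState(features)]++;
        }
        return counts;
    }
}
```

Under these thresholds, `countStates()` yields 5,365 friendly, 8,953 neutral and 5,365 hostile aircraft out of 19,683, so the friendly and hostile classes are exactly balanced.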

- 4.8

- The responsibility of the organisation is to scan the airspace and make a decision as to the nature of the aircraft. Some of the agents (the analysts of the organisation) have access to information on the features of the aircraft. Based on this information, the agents make a decision and recommend whether they think the aircraft is friendly, neutral, or hostile. The recommendations are processed or combined in accordance with the organisational structure. The types of organisational structures are as follows (Lin and Carley 1995):

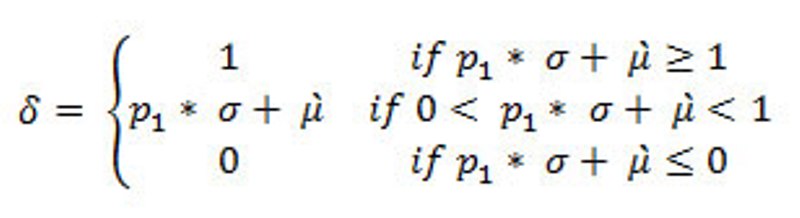

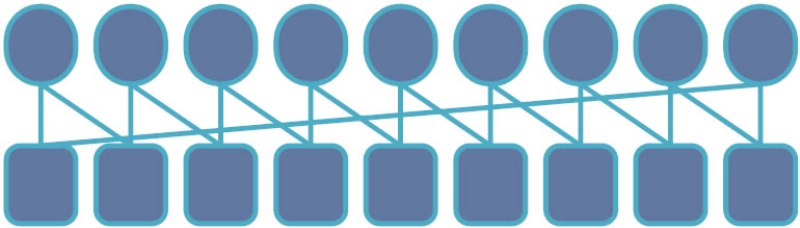

- Team with Voting: In this type of an organisational structure, each analyst has an equal vote. Each analyst examines available information and makes a decision. This decision is considered as the vote of the analyst. The organisational decision is made by the majority vote. This structure is illustrated in Figure 2.

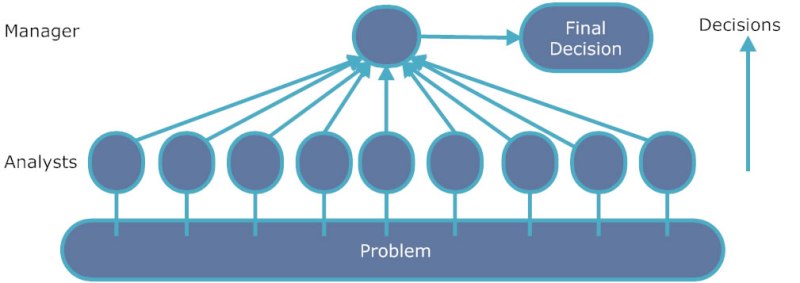

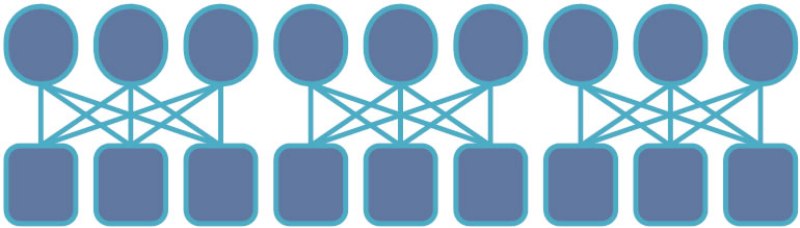

Figure 2. Organisational Structure of Team with Voting [Source: (Lin and Carley 1992)]

- Team with Manager: In this structure, each analyst reports its decision to a single manager. Like the team with voting, analysts examine available information and recommend a solution. Based on these recommendations, the manager makes an organisational decision. The Team with Manager structure is shown in Figure 3.

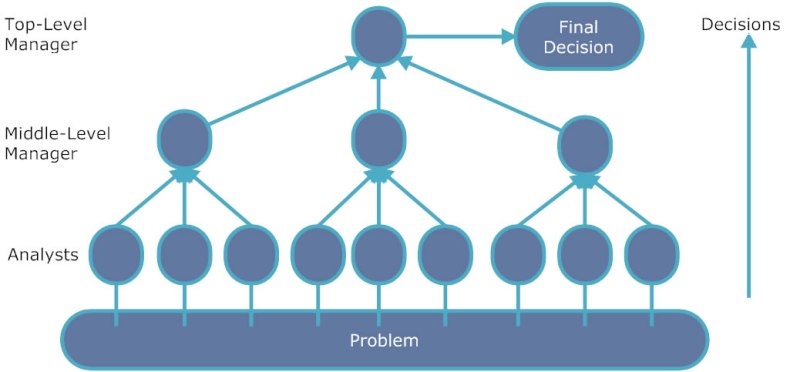

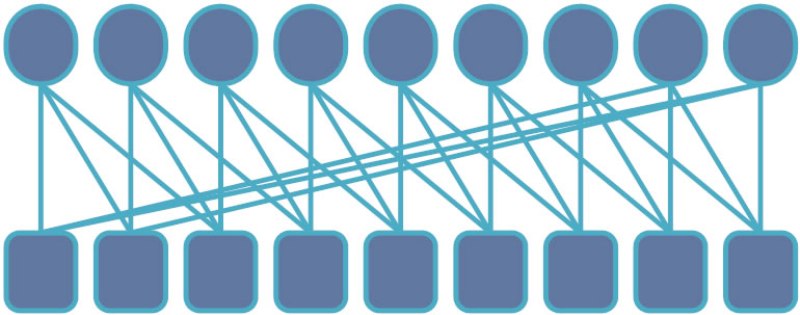

Figure 3. Organisational Structure of Team with Manager [Source: (Lin and Carley 1992)]

- Hierarchy: In the hierarchical structure, each analyst reports to its middle-level manager and the middle-level managers report to the top-level manager. The analysts examine available information and make recommendations. Then the middle-level managers analyse the recommendations from their subordinates and make a recommendation to the top-level manager. Based on the middle-level managers' recommendations, the top-level manager makes the organisational decision. This structure is illustrated in Figure 4.

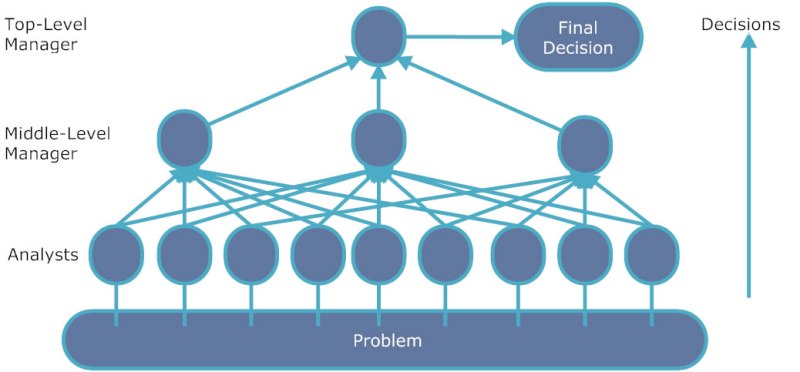

Figure 4. Organisational Structure of Hierarchy [Source: (Lin and Carley 1992)]

- Matrix: This structure is also a hierarchical structure. However, in this structure, each analyst reports to two middle-level managers. Each analyst examines information and makes a recommendation. By examining the recommendations of their subordinates, the middle-level managers make a decision and report to the top-level manager. The top-level manager makes an organisational decision based on the recommendations of the middle-level managers. The matrix structure is shown in Figure 5.

Figure 5. Organisational Structure of Matrix [Source: (Lin and Carley 1992)]
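Of the four structures listed above, the team with voting is the only one whose combination rule is fully specified in the text, so it can be sketched in Java. With three options the "majority" is treated here as a plurality, and ties are resolved in favour of the lower-numbered option; both choices, along with the class and method names, are assumptions made for illustration.

```java
/** Illustrative vote aggregation for the team with voting structure. */
final class TeamWithVoting {

    /**
     * votes: one entry per analyst, each 1 (friendly), 2 (neutral) or 3 (hostile).
     * Returns the option with the most votes; ties go to the lower-numbered
     * option (an assumption, the tie-breaking rule is not given in the text).
     */
    static int organisationalDecision(int[] votes) {
        int[] tally = new int[4]; // index 0 is unused
        for (int v : votes) {
            tally[v]++;
        }
        int best = 1;
        for (int option = 2; option <= 3; option++) {
            if (tally[option] > tally[best]) {
                best = option;
            }
        }
        return best;
    }
}
```

With nine analysts and three options, ties such as 3-3-3 or 4-4-1 are possible, which is why an explicit tie-breaking rule is needed in any implementation.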


- 4.9

- As illustrated in the above figures, each structure in the radar task simulations consists of nine analysts. As can be seen, some structures also include middle-level and/or top-level managers.

- 4.10

- Within an organisation, there are also resource access structures. These structures determine the distribution of information to the analysts. Each analyst may have access to particular characteristics. There are four different types of resource access structures:

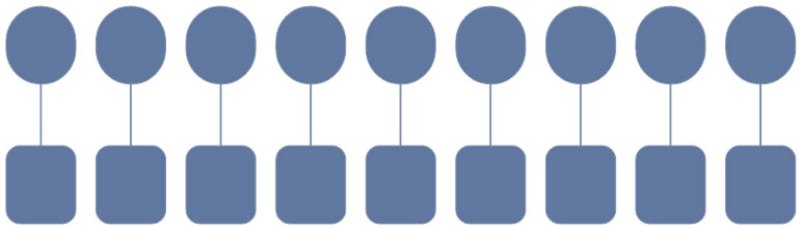

- Segregated: In such a structure, each agent has access to only one task component. This structure is illustrated in Figure 6.

Figure 6. Segregated Resource Access Structure [Source: (Lin and Carley 1992)]

- Overlapped: In this structure, each agent has access to two task components, while each task component is accessible by only two analysts. Overlapped resource access structure is shown in Figure 7.

Figure 7. Overlapped Resource Access Structure [Source: (Lin and Carley 1992)]

- Blocked: In this type of structure, each agent has access to three task components. Three analysts have access to the exact same three task components. If these three analysts are in a hierarchical organisational structure, then they report to the same manager. This structure is illustrated in Figure 8.

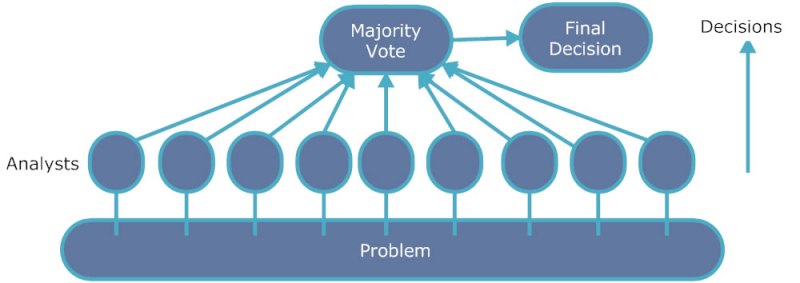

Figure 8. Blocked Resource Access Structure [Source: (Lin and Carley 1992)]

- Distributed: In this structure, each agent has access to three task components. No two analysts see the same set of task components. If these analysts are in a hierarchy or a matrix, then the manager would have indirect access to all the task components. Distributed resource access structure is shown in Figure 9.

Figure 9. Distributed Resource Access Structure [Source: (Lin and Carley 1992)]
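The four resource access structures can be expressed as mappings from analysts to feature indices. The Java sketch below satisfies the constraints stated above (one, two or three components per analyst; shared or distinct sets), but the concrete index assignments are hypothetical: the exact assignments shown in Figures 6 to 9 are not reproduced here, and the class name `ResourceAccess` is an illustrative choice.

```java
/** Illustrative analyst-to-feature assignments (indices 0..8 for analysts and features). */
final class ResourceAccess {
    static final int N = 9; // nine analysts and nine task components

    /** Segregated: analyst i sees only feature i. */
    static int[][] segregated() {
        int[][] access = new int[N][];
        for (int i = 0; i < N; i++) {
            access[i] = new int[] {i};
        }
        return access;
    }

    /** Overlapped: analyst i sees two features; each feature is seen by two analysts. */
    static int[][] overlapped() {
        int[][] access = new int[N][];
        for (int i = 0; i < N; i++) {
            access[i] = new int[] {i, (i + 1) % N};
        }
        return access;
    }

    /** Blocked: analysts 0-2, 3-5 and 6-8 each share one block of three features. */
    static int[][] blocked() {
        int[][] access = new int[N][];
        for (int i = 0; i < N; i++) {
            int base = (i / 3) * 3;
            access[i] = new int[] {base, base + 1, base + 2};
        }
        return access;
    }

    /** Distributed: three features per analyst, and no two analysts see the same set. */
    static int[][] distributed() {
        int[][] access = new int[N][];
        for (int i = 0; i < N; i++) {
            access[i] = new int[] {i, (i + 1) % N, (i + 3) % N}; // hypothetical pattern
        }
        return access;
    }
}
```

In the distributed sketch every feature is still covered by three analysts, as in the blocked case; only the grouping differs, which is what the comparison between the two structures isolates.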


- 4.11

- By utilising these structures, the radar task simulations are performed to determine the relative impact of cognition and design on organisational performance. If the final decision of the organisation is the same as the true state of the aircraft, then the decision is correct. For a set of problems, the percentage of correct decisions determines the performance of the organisation.

- 4.12

- A number of radar task simulations have been performed by adopting these organisational structures and resource access structures. In the following section, these earlier radar task simulations are discussed.

Previous Simulation Results


- 5.1

- By implementing the radar task simulations, researchers attempt to analyse organisational performance. For this purpose, researchers use computational models, human experiments and archived data. These analyses can be performed at a micro (small group) and/or a macro (organisational) level. In the micro-level studies, a hierarchy has a single tier. In other words, the hierarchy includes only one manager and nine subordinates. In the macro-level studies, a hierarchy may be a multi-tier structure (Carley et al. 1998). In the present study, only micro-level studies are considered.

- 5.2

- Carley et al. (1998) were the first researchers to study the radar task simulations at the micro level. They adopted two resource access structures: the distributed structure and the blocked structure. They also adopted two organisational structures: team and hierarchy. They implemented the simulation in such a way that the organisations faced the same set of 30 tasks in the same order with the same organisational design while the agent models varied. In the first 30 tasks, the agents received feedback. For the second 30 tasks, the agents did not receive any feedback.

- 5.3

- In their analysis, they performed a series of experiments which included computational models and humans. These are:

- CORP-ELM,

- CORP-P-ELM,

- CORP-SOP,

- Radar-SOAR, and

- Humans.

- 5.4

- CORP is a computational framework that serves as a simulated testbed. This testbed is designed to enable researchers to compare the performance of organisations with different settings. CORP models are artificial organisations consisting of adaptive agents with task-specific abilities (Carley and Lin 1995).

- 5.5

- The CORP models differ in their decision-making mechanisms. CORP-SOP agents make decisions by following standard operating procedures provided by the organisation. CORP-ELM agents make decisions under the guidance of their own personal experience. CORP-P-ELM agents make decisions by guessing based on a probabilistic estimate of the answers obtained through their own experience (Carley et al. 1998).

- 5.6

- SOAR agents are more complex artificial entities. They are based on the SOAR architecture which attempts to mimic general intelligence. Radar-SOAR is a composite simulation system, specifically designed for the radar task. It provides a way to compare and contrast the performance of SOAR agents on the radar task (Carley and Ye 1995).

- 5.7

- Carley et al. (1998) used four different organisational designs in their simulations:

- A team with voting organisational structure and a blocked resource access,

- A team with voting organisational structure and a distributed resource access,

- A hierarchy with a single supervisor organisational structure and a blocked resource access, and

- A hierarchy with a single supervisor organisational structure and a distributed resource access.

Table 2: Radar Task Simulation Results of the Original Study [Source: (Carley et al. 1998)]

| Agent | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|
| CORP-ELM | 88.3 | 85.0 | 45.0 | 50.0 |
| CORP-P-ELM | 78.3 | 71.7 | 40.0 | 36.7 |
| CORP-SOP | 81.7 | 85.0 | 81.7 | 85.0 |
| Radar-SOAR | 73.3 | 63.3 | 63.3 | 53.3 |
| Human | 50.0 | 56.7 | 46.7 | 55.0 |

- 5.8

- All of the results shown in Table 2 are performance percentages. According to these results, the agent models performed better than humans in all the team situations. Humans showed better performance than CORP-ELM and CORP-P-ELM in the hierarchy. Humans performed better in the distributed resource access structure. However, CORP-P-ELM and Radar-SOAR agents performed better when the resource access was blocked. The other agent models performed better in the distributed resource access structure, like humans. CORP-P-ELM agents performed the worst in the hierarchy, while humans performed worst in the team with voting. The results indicate that performance in a given organisational setting depends on the type of agent employed.

- 5.9

- Sun and Naveh (2004) criticised these experiments, stating that the agent models were fairly simplistic. They stated that the intelligence level of these agents, including SOAR, was rather low. They added that learning was not complex enough to mimic human cognition. To address these criticisms, they performed the same simulation by adopting a cognitive architecture called CLARION.

- 5.10

- In their simulations, Sun and Naveh (2004) used the same organisational settings and the same number of agents, but replaced the agents with CLARION agents. They then chose 100 tasks randomly. In these settings, they performed a number of simulations. The first simulation was a docking simulation in an abstract sense. They ran the simulation for 4,000 cycles to match the human data. The results of this experiment and the previous human data are shown in Table 3.

Table 3: Radar Task Simulation Results of CLARION Agents [Source: (Sun and Naveh 2004)]

| Agent | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|
| Human | 50.0 | 56.7 | 46.7 | 55.0 |
| CLARION | 53.2 | 59.3 | 45.0 | 49.4 |

All of the above results are performance percentages. According to these results, CLARION achieved the best performance match with human data.

- 5.11

- In their second simulation, they increased the number of cycles to 20,000. With this experiment, they showed that performance can be improved in the long run through the learning approach adopted in CLARION. They criticised the results of the original simulations as being the product of limited training.

- 5.12

- Their findings indicated that a team organisation using distributed access achieves a high level of performance quickly; then the learning process slows down and the performance does not increase much. In contrast, a team with blocked access starts out slowly but reaches the performance of the distributed access in the long run. In the hierarchy, they stressed that learning is slower and more erratic, since two layers of agents are being trained. They indicated that performance is worse in a hierarchy with blocked access, since very little learning takes place.

- 5.13

- In their third simulation, they varied a number of cognitive parameters and observed their effect on performance. With this simulation, they confirmed the effects of the organisational structure and the resource access structure. In addition, they found that the interaction of the organisational structure and the resource access structure with the length of training was significant, whereas they found no significant interaction between the learning rate and the organisational settings.

- 5.14

- In their last two simulations, they introduced individual differences among the agents. First, they replaced one of the CLARION agents with a weaker agent and performed a simulation in a hierarchy with distributed resource access. Under these settings, the performance of the organisation dropped by only three to four per cent. They concluded that hierarchies are flexible enough to deal with a single weak performer. Second, they employed CLARION agents with different learning rates. They found that the hierarchy performed better than the team with voting. They claimed that supervisors could take individual differences into account by learning from experience.

ReCau: Radar Task Simulation


- 6.1

- In the literature, the radar task is chosen to analyse the interaction between design and cognition for several reasons. First, the radar task is inspired by a real-world problem and has been widely examined. Second, the task is specific and well defined. Third, the true decision can be known and feedback can be provided. Fourth, multiple agents can be employed in a distributed environment so that the agents can work on different aspects of the task. Fifth, the task has a limited number of cases; therefore, mathematical techniques can be used to evaluate agent performance. Last but not least, the task can be expanded further by including other factors.

- 6.2

- Besides these reasons, the radar task is chosen in the present study in order to evaluate the decision-making mechanism of ReCau, which is the most significant component of the architecture. Several simulations are performed to test this mechanism. These simulations are implemented in the Java programming language using the NetBeans IDE. The simulation code can be obtained from the following web page: http://web.science.mq.edu.au/~aaydin/

- 6.3

- In this study, the same organisational settings as in the former studies are implemented, and the same tasks are performed by ReCau agents. The most important difference between the previous studies and this study is that the former studies chose a set of problems and performed simulations for that set. In the present study, all of the tasks are generated randomly to realise a truly unbiased environment. To achieve this aim, the features of each aircraft are generated randomly and independently of each other. The other difference is that the simulation in the present study is longer.

- 6.4

- Initially, a docking simulation is performed while the organisational structure is the team with voting. By performing a docking simulation, cognitive parameters are adjusted to match human data in the first setting. Then the same cognitive parameters are used in the other settings.

- 6.5

- The length of the simulation is set to 20,000 cycles, and the simulation is run 10 times for each setting separately. The results of the docking simulation are shown in Table 4. The results shown in the table are performance percentages.

Table 4: The Docking Simulation Results

| Run | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|
| 1 | 52.45 | 53.68 | 42.345 | 42.63 |
| 2 | 53.49 | 53.67 | 42.995 | 43.02 |
| 3 | 52.935 | 54.03 | 42.345 | 42.7 |
| 4 | 52.765 | 53.515 | 42.545 | 43.07 |
| 5 | 52.61 | 53.915 | 42.535 | 42.615 |
| 6 | 53.17 | 53.16 | 42.665 | 42.63 |
| 7 | 53.165 | 53.245 | 42.17 | 43.86 |
| 8 | 53.365 | 53.81 | 42.225 | 42.97 |
| 9 | 52.995 | 53.545 | 42.82 | 42.64 |
| 10 | 53.61 | 53.325 | 43.19 | 42.715 |
| Average Performance | 53.0555 | 53.5895 | 42.5835 | 42.885 |

Mean Values: 0.65, 0.70, 0.75. Variance Value: 0.085.

- 6.6

- In the docking simulation, the variance value is set to 0.085 and the mean values are adjusted as follows:

- If the aircraft value is greater than or equal to 7:

Satisfaction degree for hostile is 0.75; satisfaction degree for neutral is 0.70; satisfaction degree for friendly is 0.65.

- Otherwise, if the aircraft value is greater than or equal to 6:

Satisfaction degree for hostile is 0.70; satisfaction degree for neutral is 0.75; satisfaction degree for friendly is 0.70.

- Otherwise:

Satisfaction degree for hostile is 0.65; satisfaction degree for neutral is 0.70; satisfaction degree for friendly is 0.75.
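The docking parameters listed above amount to a small lookup from the aircraft value to three mean satisfaction degrees. A minimal Java sketch of that lookup follows. The text does not define how the "aircraft value" is computed from the features an analyst can see, so it is taken here as a plain integer input; the class name `DockingParameters` and the array layout (friendly, neutral, hostile) are illustrative assumptions.

```java
/** Illustrative lookup of the docking simulation's mean satisfaction degrees. */
final class DockingParameters {
    static final double VARIANCE = 0.085; // variance value used in the docking simulation

    /**
     * Returns the mean satisfaction degrees as {friendly, neutral, hostile}
     * for a given aircraft value, following the three cases in the text.
     */
    static double[] meanValues(int aircraftValue) {
        if (aircraftValue >= 7) {
            return new double[] {0.65, 0.70, 0.75}; // hostile is favoured
        }
        if (aircraftValue >= 6) {
            return new double[] {0.70, 0.75, 0.70}; // neutral is favoured
        }
        return new double[] {0.75, 0.70, 0.65};     // friendly is favoured
    }
}
```

The favoured alternative leads the next one by only 0.05 in mean, while the variance value is 0.085, so an analyst frequently selects a non-favoured alternative; this is the source of the human-like error rate in the docking results.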

- 6.7

- The cognitive parameters of ReCau provide very high flexibility. To illustrate this aspect of ReCau, the variance value in the decision-making mechanism is reduced to low levels (0.05 and 0.01) in the following experiments. The same simulation length, number of replications, settings and mean values are used in these simulations. The results of these simulations are shown in Table 5. In this table, only the overall average performance percentages are shown.

Table 5: The Simulation Results when Variance Value is Reduced

| Variance Value | Mean Values | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|---|
| 0.05 | 0.65, 0.70, 0.75 | 59.2075 | 61.04 | 49.679 | 50.879 |
| 0.01 | 0.65, 0.70, 0.75 | 75.5565 | 72.2895 | 72.3165 | 76.5915 |

As can be seen in the table, the performance of the organisation increases as the variance value is reduced. The highest performance is achieved in a hierarchy with distributed resource access.

- 6.8

- In the following simulation, the difference between the mean values is increased. The results are shown in Table 6. In these simulations, the variance value is once again set to 0.085.

Table 6: The Simulation Results when Differences between Mean Values are Increased

| Mean Values | Variance Value | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|---|
| 0.60, 0.70, 0.80 | 0.085 | 61.5195 | 63.5035 | 52.77 | 54.356 |
| 0.50, 0.70, 0.90 | 0.085 | 70.263 | 70.5555 | 64.2985 | 68.7015 |

As can be seen, when the variance value is held constant, performance increases as the difference between the mean values is increased. However, better performance is observed with a smaller variance. The detailed evaluation of the radar task simulation can be found in the following section.

The Evaluation of the Radar Task Simulation


- 7.1

- In this paper, the radar task simulation is undertaken to illustrate and evaluate the decision-making mechanism of ReCau and to better understand the relation between organisational design and cognitive parameters. For this purpose, the radar task simulations are performed by varying cognitive parameters in the decision-making mechanism of ReCau.

- 7.2

- In Table 7, the simulation results of the existing architectures and the docking simulation results of ReCau are shown together for comparison.

- 7.3

- As can be seen in the table, the performance of the ReCau agents matches the human data well. A better match in this task means a performance percentage closer to the human data. ReCau performance best matches human data in a team with voting organisational structure. The performance of the CLARION agents also matches human data very well. In particular, in the first three settings, the difference in performance percentage between CLARION, ReCau and humans is around 3 per cent. However, the performance of the CLARION agents matches human data better in a hierarchy with distributed resource access structure.

Table 7: Comparison of Docking Simulation Results

| Agent | Team, Blocked | Team, Distributed | Hierarchy, Blocked | Hierarchy, Distributed |
|---|---|---|---|---|
| CORP-ELM | 88.3 | 85.0 | 45.0 | 50.0 |
| CORP-P-ELM | 78.3 | 71.7 | 40.0 | 36.7 |
| CORP-SOP | 81.7 | 85.0 | 81.7 | 85.0 |
| Radar-SOAR | 73.3 | 63.3 | 63.3 | 53.3 |
| CLARION | 53.2 | 59.3 | 45.0 | 49.4 |
| ReCau | 53.1 | 53.6 | 42.6 | 42.9 |
| Human | 50.0 | 56.7 | 46.7 | 55.0 |

- 7.4

- The results show that the performance of ReCau in the docking simulation is generally closer to human performance than that of the CORP series and Radar-SOAR. As stated by Sun and Naveh (2004), those models are fairly simplistic. However, the decision-making model of ReCau is highly realistic. On the other hand, the trial-and-error learning model adopted in CLARION enables CLARION agents to match human data slightly better. This is because a little learning took place in the original experiment performed with humans.

- 7.5

- The performance pattern of the ReCau agents also matches human data. As can be seen, the ReCau agents perform slightly better in the distributed resource access structures, and the same holds for humans. Humans and the ReCau agents show the best performance in a team with distributed resource access structure. They show the worst performance in a hierarchy with blocked resource access structure.

- 7.6

- From these results, it can be deduced that the distributed resource access structure has a positive impact on performance. Except for CORP-SOP agents, the performance of all agents is higher in a team. Therefore, it can be asserted that the agents, including the ReCau agents, perform better in a team.

- 7.7

- In the ReCau simulations, the learning approach proposed by ReCau is not implemented, since social learning is not an appropriate approach for this type of task. Under this setting, the findings support the results of Sun and Naveh (2004), who stated that very little learning takes place in a hierarchy with blocked access. The results of the ReCau simulations confirm this finding, since the performance of the ReCau agents matches human data well without employing any learning approach.

- 7.8

- It must be noted that very little learning took place in the original simulation study, because its results are the product of limited training. Therefore, the radar task simulation provides a very good test bed for testing the decision-making mechanism of ReCau.

- 7.9

- The most significant performance difference between human data and the ReCau agents is observed in a hierarchy with distributed resource access structure. The performance of the CLARION agents matches human data better in this setting. These results indicate that learning is more effective in a hierarchy with distributed resource access structure.

- 7.10

- Carley et al. (1998) stated that the same predictive performance accuracy as human data can be achieved by more cognitively accurate models at the micro level. In the light of this statement, the findings of the docking simulation of ReCau indicate that the decision-making mechanism proposed along with ReCau is highly realistic.

- 7.11

- When the cognitive parameters in the decision-making mechanism of ReCau are varied, higher performance percentages are achieved. Even though the performance percentages of ReCau cannot go as high as those of the CORP models, ReCau still holds promise to perform like humans in this type of choice task.

- 7.12

- The simulation results of ReCau are also interesting with regard to organisation theory. The performance percentages of ReCau agents confirm the results of Carley et al. (1998), who stated that agent cognition interacting with organisational design affects organisational performance.

- 7.13

- The results indicate that the organisational structure has a more significant effect on performance than the resource access structure. In the docking simulation, the performance of ReCau agents differs significantly across organisational structures. However, there is no significant performance difference when the agents are in the same organisational structure and only the resource access structure differs.

Conclusion

- 8.1

- The simulation results indicate that ReCau provides a highly realistic decision-making mechanism. In ReCau, satisfaction degrees are normally distributed random numbers drawn with given mean and variance values. As a result, the proposed approach introduces a degree of randomness into the process, which in turn helps explain human intelligent behaviour. Therefore, the decision-making mechanism of ReCau simulates human behaviour more realistically.
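- As an illustration only, the sampling of satisfaction degrees can be sketched with the Box-Muller transform (Box and Muller 1958). The function names, the plan labels and the rule of picking the alternative with the highest sampled degree are assumptions made for this sketch, not the published ReCau implementation.

```python
import math
import random


def box_muller(rng):
    """Return one standard normal deviate via the Box-Muller transform."""
    u1 = 1.0 - rng.random()  # shift from [0, 1) to (0, 1] so log(u1) is defined
    u2 = rng.random()
    return math.sqrt(-2.0 * math.log(u1)) * math.cos(2.0 * math.pi * u2)


def satisfaction_degree(mean, variance, rng):
    """Draw a satisfaction degree from a normal distribution N(mean, variance)."""
    return mean + math.sqrt(variance) * box_muller(rng)


def choose_plan(plans, rng):
    """Sample a satisfaction degree per plan and return the best plan.

    `plans` maps a hypothetical plan label to a (mean, variance) pair.
    Selecting the maximum sampled degree is an assumption of this sketch.
    """
    samples = {
        label: satisfaction_degree(mean, variance, rng)
        for label, (mean, variance) in plans.items()
    }
    best = max(samples, key=samples.get)
    return best, samples


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical alternatives with illustrative mean and variance values.
    plans = {"friendly": (0.6, 0.04), "neutral": (0.5, 0.04), "hostile": (0.4, 0.04)}
    print(choose_plan(plans, rng))
```

  With a non-zero variance, an alternative with a lower mean is occasionally selected, which is the source of the randomness described above. With zero variance the choice becomes deterministic.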

- 8.2

- In its current form, the Reactive-Causal Architecture provides a good infrastructure for believable agents. However, a number of improvements can be made in the future. For instance, hierarchical and/or non-linear planning approaches can be adopted to increase the efficiency of ReCau.

- 8.3

- The emotion model adopted in ReCau is very simplistic. Even though in its current form it provides a means to illustrate the ideas of Maslow, it can be further improved. For instance, there might be conflicting needs, each of which is satisfied to a certain extent. Therefore, in the future, the emotion model of ReCau can be extended to display different emotions in such cases.

- 8.4

- The type of learning adopted in ReCau is based on the theories of social learning, and it is not implemented in the current simulation. In the near future, an approach that enables ReCau agents to learn from their mistakes can be incorporated and the social learning approach can be implemented. After adopting these approaches, the radar task simulation can be performed once again.

- 8.5

- When such a simulation study is performed, the human experiment must also be repeated to obtain better results, because in the original study humans were not allowed to learn sufficiently. In the new study, we plan to enable humans to learn socially and to learn from their mistakes by providing feedback. Therefore, in the near future, we plan to perform this simulation both with humans and with ReCau agents.

References

ALDERFER, C. P. (1972). Existence, Relatedness, and Growth: Human Needs in Organizational Settings. New York: Free Press.

AYDIN, A. O. and Orgun, M. A. (2010). Reactive-Causal Cognitive Agent Architecture. Advances in Cognitive Informatics and Cognitive Computing 323, 71-103. [doi:10.1007/978-3-642-16083-7_5]

AYDIN, A. O. and Orgun, M. A. (2008). The Reactive-Causal Architecture: Introducing an Emotion Model along with Theories of Needs. The 28th SGAI International Conference on Innovative Techniques and Applications of Artificial Intelligence. Cambridge: Springer.

AYDIN, A. O. Orgun, M. A. and Nayak, A. (2008a). The Reactive-Causal Architecture: Towards Development of Believable Agents. The 8th International Conference on Intelligent Virtual Agents, Lecture Notes in Computer Science 5208. Tokyo.

AYDIN, A. O. Orgun, M. A. and Nayak, A. (2008b). The Reactive-Causal Architecture: Combining Intentional Notion and Theories of Needs. The 7th IEEE International Conference on Cognitive Informatics. Los Angeles.

BANDURA, A. (1969). Social-Learning Theory of Identificatory Processes. In Goslin, D. A. (Ed.). Handbook of Socialization Theory and Research (pp. 213-262). Chicago: Rand McNally.

BATES, J. (1994). The Role of Emotion in Believable Agents. Communications of the ACM 37(7), 122-125. [doi:10.1145/176789.176803]

BOX, G. E. P. and Muller, M. E. (1958). A Note on the Generation of Random Normal Deviates. The Annals of Mathematical Statistics 29(2), 610-611. [doi:10.1214/aoms/1177706645]

BREWIN, C. R. (1989). Cognitive Change Processes in Psychotherapy. Psychological Review 96(3), 379-394. [doi:10.1037/0033-295X.96.3.379]

BRITANNICA CONCISE ENCYCLOPAEDIA. (2005). Motivation.

CARLEY, K. M. and Lin, Z. (1995). Organizational Designs Suited to High Performance Under Stress. IEEE Transactions on Systems, Man, and Cybernetics 25(1), 221-230. [doi:10.1109/21.364841]

CARLEY, K. M. and Ye, M. (1995). Radar-Soar: Towards an Artificial Organization Composed of Intelligent Agents. Journal of Mathematical Sociology 20, 219-246. [doi:10.1080/0022250X.1995.9990163]

CARLEY, K. M. Prietula, M. J. and Lin, Z. (1998). Design Versus Cognition: The Interaction of Agent Cognition and Organizational Design on Organizational Performance. Journal of Artificial Societies and Social Simulation 1(3) https://www.jasss.org/1/3/4.html.

DAVIDSSON, P. (2000). Multi Agent Based Simulation: Beyond Social Simulation. Lecture Notes in Artificial Intelligence 1979, 97-107. Springer. [doi:10.1007/3-540-44561-7_7]

DAVIDSSON, P. (2002). Agent Based Social Simulation: A Computer Science View. Journal of Artificial Societies and Social Simulation 5(1) https://www.jasss.org/5/1/7.html.

DENNETT, D. C. (1987). The Intentional Stance. Massachusetts: MIT Press.

DENNETT, D. C. (2000). Three Kinds of Intentional Psychology. In Stainton, R. J. (Ed.). The Perspectives in the Philosophy of Language: A Concise Anthology (pp. 163-186). Broadview Press.

JENNINGS, N. R. Sycara, K. and Wooldridge, M. (1998). A Roadmap of Agent Research and Development. Autonomous Agents and Multi-Agent Systems 1(1), 7-38. [doi:10.1023/A:1010090405266]

KAELBLING, L. P. (1986). An Architecture for Intelligent Reactive Systems. In Reasoning About Actions and Plans, (pp. 395-410), California: Morgan Kaufmann Publishers.

LAZARUS, R. S. (1982). Thoughts on the Relations between Emotions and Cognition. American Psychologist 37(9), 1019-1024. [doi:10.1037/0003-066x.37.9.1019]

LIN, Z. and Carley, K. M. (1992). Maydays and Murphies: A Study of the Effect of Organizational Design, Task, and Stress on Organizational Performance. Learning Research and Development Center. Pittsburgh University.

LIN, Z. and Carley, K. M. (1995). DYCORP: A Computational Framework for Examining Organizational Performance Under Dynamic Conditions. Journal of Mathematical Sociology 20(2-3), 193-217. [doi:10.1080/0022250X.1995.9990162]

LUCK, M. and d'Inverno, M. (1995). A Formal Framework for Agency and Autonomy. The 1st International Conference on Multi-Agent Systems. AAAI Press/MIT Press.

MAES, P. (1991). The Agent Network Architecture: ANA. SIGART Bulletin 2(4), 115-120. [doi:10.1145/122344.122367]

MASLOW, A. H. (1987). Motivation and Personality. Harper and Row.

ORMROD, J. E. (2003). Human Learning. Prentice-Hall.

PEARL, J. (2000). Causality. Cambridge: Cambridge University Press.

RUSSELL, S. and Norvig, P. (2002). Artificial Intelligence: A Modern Approach. Prentice-Hall.

SLOMAN, A. and Logan, B. (1998). Architectures and Tools for Human-Like Agents. The 2nd European Conference on Cognitive Modelling (pp. 58-65).

STICH, S. P. (1985). Could Man Be an Irrational Animal? Synthese 64(1), 115-135. [doi:10.1007/BF00485714]

SUN, R. and Naveh, I. (2004). Simulating Organizational Decision-Making Using a Cognitively Realistic Agent Model. Journal of Artificial Societies and Social Simulation 7(3),5 https://www.jasss.org/7/3/5.html.

SUTTON, R. S. and Barto, A. G. (1998). Reinforcement Learning: An Introduction. Massachusetts: MIT Press.

THORNDIKE, E. L. (1998). Animal Intelligence: An Experimental Study of the Associative Processes in Animals. The Macmillan Company. [doi:10.1037/0003-066x.53.10.1125]

WUKMIR, V. J. (1967). Emoción y Sufrimiento: Endoantropología Elemental. Barcelona: Labor.