Abstract

- This paper identifies possible pitfalls of simulation modeling and suggests ways to prevent them. First, we specify five typical pitfalls that are associated with the process of applying simulation models and characterize the "logic of failure" (Dörner 1996) behind the pitfalls. Then, we illustrate important aspects of these pitfalls using examples from applying simulation modeling to military and managerial decision making and present possible solutions to them. Finally, we discuss how our suggestions for avoiding them relate to current methodological discussions found in the social simulation community.

- Keywords:

- Logic of Failure, Management, Methodology, Military, Pitfalls, Simulation Models

Introduction

- 1.1

- Interest in simulation modeling and the number of simulation models are increasing in the social sciences and economics (Gilbert and Troitzsch 2005; Meyer, Lorscheid and Troitzsch 2009; Reiss 2011). This can be observed in both practice and academia. For simulation users this does not come as a surprise given the many potential advantages inherent in the method (Railsback and Grimm 2011; Reiss 2011).

- 1.2

- That being said, one can find much methodological debate and disagreement in the field. It encompasses, for example, the issue of establishing standards for simulation modeling (e.g., Grimm et al. 2006; Richiardi et al. 2006), the discussion whether simulation mainly aims at prediction or at explanation (Epstein 2008), and the challenges of presenting simulation models and their results (Axelrod 1997; Lorscheid, Heine and Meyer in press). Given the method's relatively young age, ongoing methodological debates are to be expected. They can even be considered a necessary step towards establishing clear methodological standards.

- 1.3

- In the context of the ongoing methodological debates, this paper identifies possible pitfalls of simulation modeling and provides suggestions for avoiding them. Despite the described widespread disagreement on methodological issues, we have witnessed common pitfalls that simulation modeling appears to be prone to in practice. We found this agreement particularly astonishing because we have very different backgrounds and experiences in simulation modeling: One author is a general staff officer and business graduate who uses simulation in the military domain; one is academically rooted in business economics and philosophy and applies the method to accounting issues; one is a trained mathematician and physicist who regularly draws on simulation models as a business consultant.

- 1.4

- The approach adopted in this paper can be characterized along two lines. First, our objective resembles what the economic methodologist Boland describes as small-m methodology. While Big-M methodology addresses the big questions typically discussed by philosophers of science and historians of thought (e.g., "Is economics falsifiable?, (…) Does economics have enough realism?" (Boland 2001:4)), small-m methodology addresses concrete problems to be solved in the process of model building instead (Boland 2001). In this vein we strive to identify typical problems encountered when constructing simulation models and to present proven solutions based on the authors' practical experience. Second, the paper specifies the "drivers" of the problems that lead to the observed pitfalls. In line with Dörner's "logic of failure" (Dörner 1996), we look for typical thought patterns, habits and related context factors that can explain the regular occurrence of these pitfalls in the simulation modeling process. By making the unfavorable habits and corresponding pitfalls explicit, the paper creates awareness for the potential traps along the modeling process.

- 1.5

- The paper is structured as follows. First, we briefly present a simple process of applying simulation methods and specify on this basis when and why problems typically arise. Next, we illustrate the potential pitfalls with examples from military and managerial decision making and offer suggestions for avoiding them. Finally, we assess how the suggestions to avoid them relate to current methodological discussions found in the social simulation community. The paper concludes with a brief summary and outlook.

The Simulation Feedback Cycle and Potential Pitfalls for Simulation Modeling

- 2.1

- The process of applying simulation methods can be visualized as a six-step cycle[1] (see Figure 1). The starting point of the simulation modeling cycle is typically a real-life problem, or more technically a research question, that has to be addressed. That is why the question is displayed at the top of the cycle. Then the essential processes and structures which are relevant for answering the research question have to be identified (Railsback and Grimm 2011). This makes it necessary to identify the aspects of the target system that are of interest and involves a reduction to its key attributes. This cut-off takes place with reference to the question under investigation and removes all irrelevant aspects of reality. Next, the model itself is constructed for this defined part of reality.[2] A model structure is chosen that reflects the relevant cause-and-effect-relationships that characterize the actual behavior of a target system (Orcutt 1960; Reiss 2011). Based on the model, simulation experiments are conducted with regard to the issues in question. A single, specific experimental setting is called a scenario. It is defined by the unique set of parameters that describes the experimental setting. Running the experiment itself is referred to as the simulation. The results of the simulation experiments are analyzed with respect to the question investigated and finally communicated (e.g., to clients and superiors in practical settings, and to supervisors, journal reviewers, research sponsors, etc. in academia). The closed cycle and the dotted lines indicate that this process is often iterative, resulting in refinements of earlier model versions.[3]
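
- The terminology of this paragraph (model, scenario, simulation, analysis) can be made concrete with a minimal sketch. The toy model below, its parameters `success_prob` and `n_trials`, and the function names are purely hypothetical and serve only to show how a scenario is a fixed set of parameter values and a simulation is one run of the model under that scenario.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A single experimental setting, defined by its parameter values."""
    name: str
    success_prob: float  # hypothetical model parameter
    n_trials: int        # hypothetical model parameter
    seed: int            # fixes the random stream so that a run is repeatable


def simulate(scenario: Scenario) -> float:
    """Run the toy model once and return the share of successful trials."""
    rng = random.Random(scenario.seed)
    hits = sum(rng.random() < scenario.success_prob
               for _ in range(scenario.n_trials))
    return hits / scenario.n_trials


def run_experiment(scenarios):
    """Run every scenario once and collect the results by scenario name."""
    return {s.name: simulate(s) for s in scenarios}


scenarios = [
    Scenario("baseline", success_prob=0.3, n_trials=1000, seed=1),
    Scenario("improved", success_prob=0.6, n_trials=1000, seed=1),
]
results = run_experiment(scenarios)

# Analysis step: compare the scenarios with respect to the question asked.
for name, share in results.items():
    print(f"{name}: share of successes = {share:.3f}")
```

- Keeping the scenario definition separate from the model code makes each experiment reproducible and makes it explicit which parameters were varied.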

Figure 1. The simulation modeling cycle

- 2.2

- In practice, simulation modeling is not always as straightforward as the simulation modeling cycle in Figure 1 suggests. Users of simulation methods might encounter the following five pitfalls: distraction, complexity, implementation, interpretation, and acceptance.[4] Every pitfall is associated with a specific step along the feedback cycle (see Figure 2). We will describe and analyze the pitfalls in more detail next.[5]

Figure 2. The simulation modeling cycle and associated pitfalls

Distraction Pitfall

- 2.3

- Every simulation starts with developing a basic understanding of the question at hand. It is impossible to define the part relevant for modeling and simplify reality without having achieved that understanding. The process of conceiving the question commonly tempts users to address seemingly related questions at the same time. This expansion is mostly due to external pressures (e.g., from clients or superiors). Intrinsic motivation exists as well and stems predominantly from a user's desire to take advantage of perceived synergies between answering the question at hand and preparing other existing or anticipated questions. While the intent is understandable, expanding the original question typically requires enlarging the part of reality to be modeled in the next step which makes the model structure more complex. We call this the distraction pitfall.

- 2.4

- What is the "logic of failure" (Dörner 1996) leading to the distraction pitfall? As already mentioned, the involvement of other stakeholders in the modeling process is likely to be a main driver of the distraction pitfall. In a business environment as well as in the armed forces, clients or superiors might request to have several questions addressed in one model. In science, similar pressures might be exerted by fellow scientists in an audience, supervisors, reviewers, etc. Inexperienced modelers in particular can succumb to believing that they are "not accomplishing enough" in the project. Another driver might be the well-documented psychological phenomenon of overconfidence (Moore and Healy 2008; Russo and Schoemaker 1992), which manifests itself in the attitude that one can also manage several issues, resulting in a more complex model. A further, non-negligible driver of distraction is that the modeler may lack a clear understanding of the question to be addressed by the model. Achieving such an understanding is certainly not an easy task. Clients or superiors often lack a clear understanding of the question themselves, or the understanding might be diluted in the process of communication.[6] In science, experienced simulation modelers report that it is not uncommon to go through several iterations in the modeling process before arriving at a precise and clear wording of the research question at hand (Railsback and Grimm 2011).[7]

Complexity Pitfall

- 2.5

- Upon formulating the research question and defining the target system, i.e. the corresponding part of reality to be modeled, the model structure has to be chosen. This task is often perceived as particularly challenging. It often requires (sometimes drastically) simplifying the entities and relationships that exist in reality to enable modeling. At the same time, the model structure has to represent reality with sufficient precision for the simulation to yield applicable results. It is a balancing act between simplification and exact representation. However, going too far in the direction of an exact representation of the target system bears the risk of drowning in details and losing sight of the big picture. The resulting model structure becomes increasingly complex and comprehensive. Sometimes this complexity even causes a simulation project to fail. We call this the complexity pitfall.

- 2.6

- A main habit of thought leading to the complexity pitfall is a false understanding of realism. The main goal for a model is not to be as realistic as possible, but modeling also has to balance aspects such as the tractability of the computational model and data analysis (Burton and Obel 1995).[8] People who are generally striving for perfection might be particularly prone to this pitfall, as simplifications involve uncertainty about modeling decisions which many would like to avoid.[9] Related to the fear of radical simplifications are worries about the resistance they may cause in fellow scientists, supervisors, reviewers, etc. Moreover, it can be an intellectual challenge to find simplifications that "work" and are acceptable with respect to the problem at hand, especially prior to the model construction (Edmonds and Moss 2005). Finding a simpler and—maybe—more general model often requires several iterations in the model construction process including substantial changes to the model (Varian 1997). Finally, there seems to be a natural tendency to view modeling as a one-way street of creating and building something. It is easily forgotten that getting rid of elements which are not—or not any longer—needed can also be a part of the journey.

Implementation Pitfall

- 2.7

- Software support is often needed to generate the actual simulation model once the conceptual design is finalized. As the domain experts involved in modeling are often laymen with regard to IT implementation, they are at risk of choosing unsuitable software for the simulation. Often the implementation of a model structure is based on existing IT systems or readily available tools. As a result, the selected IT tool may be too weak, that is, technically unable to support the functional scope of the simulation model. Conversely, an IT tool can be too powerful if only a fraction of its technical potential is used.[10] Such poorly managed interdependencies between the conceptual model and the IT implementation represent what we call the implementation pitfall.

- 2.8

- A strong driver of the implementation pitfall in practical settings such as the armed forces and business is cost considerations. The high pressure in practical settings to present results quickly might be another reason to resort to IT solutions that are already in use.[11] Even though there might be less time pressure in science, curiosity and impatience could equally tempt researchers to look for quick solutions. The time investment needed to learn a new modeling platform or programming language can be quite high. The well-documented phenomenon of "escalation of commitment" might be another important driver in this context (Staw 1981). Having invested heavily in a specific IT solution reduces the willingness to abandon it even if it would be rational to use a different one. Some context factors are particularly conducive to escalating commitment, such as having been involved in the decision about the course of action and working on projects that relate to something perceived as new, both of which are characteristic of many simulation projects (Schmidt and Calantone 2002).

Interpretation Pitfall

- 2.9

- Upon completing and testing an implemented simulation model, one can finally work with it. However, one can often observe that users are prone to losing their critical distance to the results produced by a simulation. The effect appears to grow with the degree to which the persons were involved in the model's development and/or application. Losing critical distance to the simulation results can be responsible for undiscovered model errors or reduced efforts in validation. It also has to be noted again that a model is a simplified representation of reality that can only yield valid results for the context it was originally created for. Going beyond that context means losing the results' validity and can lead to improper or even false conclusions. This happens, for example, when the analyzed aspect is not part of the reality represented by the model or when the model is too simple and does not allow for valid interpretations. These wrong conclusions resulting from a loss of critical distance are what we call the interpretation pitfall.

- 2.10

- There are several possible drivers of the interpretation pitfall. A possible psychological explanation for it is the process of identification with a created object and the resulting psychological ownership of the object (Pierce, Kostova and Dirks 2003). This reduces the critical distance to the developed simulation model and, as a result, the number of comparisons with the target system might decline over time. Similarly, the critical distance might decrease because the simulation model creates its own virtual reality that is experienced when experimenting with the model. Paradoxically, a very thorough analysis of the model and its parameter space could further foster such tendencies. The so-called confirmation bias suggests that one should proceed with particular caution when results support prior beliefs or hypotheses (Nickerson 1998).[12] Once again, time pressure might amplify the uncritical acceptance of simulation results. This could be particularly true for models that have proven successful in other contexts or in similar model designs because they appear credible for the current problem as well.

Acceptance Pitfall

- 2.11

- Even if one is convinced of the simulation results' validity and accuracy, this may not be true for third-party decision makers. In many settings, third-party decision makers have the final word and hardly know the model. The more distant they are from the modeling process and the more complex the simulation model is, the more skeptical they tend to be about the results. Ultimately, a simulation-based decision may be rejected because the actual simulation results are not accepted. This can mean that otherwise good and correct simulation results are ignored and discarded. A related observation is that doubts tend to be raised particularly in situations in which the results do not meet the expectations of third-party decision makers.[13] Such expectations are also at the heart of a typical dilemma often faced in simulation modeling: If the results match the expectations, the simulation model and the results themselves are called trivial. If the results are surprising, they prompt third-party decision makers to question the correctness of the entire simulation. We call this cluster of related issues the acceptance pitfall.

- 2.12

- The fact that simulation is still relatively new compared to other approaches likely contributes to the occurrence of the acceptance pitfall. Addressees of simulation model results are often not familiar with this type of work. The resulting output and the formats used to present the results often differ from more established approaches (Axelrod 1997). All this makes it more difficult to follow the line of reasoning and to understand the results. It also seems to be a major obstacle for simulation models that the addressee of a simulation model is often not involved in the design process, even though this happens in other contexts as well without the same negative impact (most drivers do not understand how exactly their car's engine functions). This is true for military and business applications as well as for social science and economics applications. The fear that parts of the model have been manipulated or are not designed well seems to be particularly widespread with respect to simulations. Not only are simulations often perceived as a black box, simulation modeling also has to battle the prejudice of being very complex. Typical objections to the method are brought forward along those lines if the result does not match the audience's expectations and/or does not support their preferred course of action.[14] At the same time, academics often expect a model to produce new and counterintuitive results, which can sometimes complicate the issue further.[15]

Potential Pitfalls: Practical Examples and Guidelines for Addressing Them

- 3.1

- In this section we provide examples from our simulation work in military and managerial decision making to illustrate the pitfalls. For every pitfall, we first describe some specific situations illustrating important aspects of the respective pitfall and then offer suggestions for addressing it. These suggestions are based on our practical experience of what worked as well as on the psychological aspects described in the previous section. We then summarize each discussion in a guideline.[16]

Distraction Pitfall

- 3.2

- The distraction pitfall describes the situation in which more than the question at hand is examined. Several issues and a broader context are analyzed in addition and result in an often dramatic increase in the number of aspects that need to be taken into account.

- 3.3

- The following example from a business application illustrates the distraction pitfall. It shows in particular how easily obvious but (too) far-ranging cause-and-effect-relations can lead to an expansion of the original simulation focus. The management of a manufacturing company wanted to implement a new pricing concept. While some customers were expected to get better conditions, others would pay more under the new concept. The customers' adaptive responses were anticipated to result in changed sales volumes. The management was looking for a pricing concept that would affect the profit as little as possible. A simulation of alternative pricing concepts and their impact on the company's profit was initiated to support the management's decision. What-if analysis with an underlying quantitative model seemed to be a suitable simulation technique. However, management soon understood that a new pricing concept would not only lead to a change in the customers' demand. Competitors would react as well to prevent the loss of sales and customers. The requirement for the simulation was quickly expanded to include the pricing concepts of competitors. As a consequence, the required simulation had expanded from evaluating a pricing concept to creating a comprehensive market model. Once this became apparent, the extended simulation project was cancelled due to time constraints and the high risk of project failure given both the expected size of the simulation model and the associated complexity. Instead, it was decided to only pursue the initially requested simulation and quantitative what-if analysis (impact simulation of alternative pricing concepts). Subsequently, anticipated market movements were analyzed separately on the basis of different scenarios to get an idea of their impact.
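
- To indicate how small the initially requested what-if analysis can remain when competitor reactions are left out, consider the following sketch. It is not the model used in the project: the customer data, the linear volume response governed by an assumed `elasticity`, and the three pricing concepts are hypothetical illustrations only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    name: str
    volume: float      # units sold at the current price
    price: float       # current unit price
    unit_cost: float
    elasticity: float  # assumed % change in volume per % change in price


def profit(customers, price_factor):
    """Total profit if each customer's price is scaled by price_factor(c)."""
    total = 0.0
    for c in customers:
        factor = price_factor(c)
        new_price = c.price * factor
        # Assumed linear demand response, floored at zero volume.
        new_volume = max(0.0, c.volume * (1.0 + c.elasticity * (factor - 1.0)))
        total += (new_price - c.unit_cost) * new_volume
    return total


customers = [
    Customer("A", volume=1000, price=10.0, unit_cost=6.0, elasticity=-1.5),
    Customer("B", volume=200, price=12.0, unit_cost=6.0, elasticity=-0.5),
]

# Each pricing concept maps a customer to a price factor (1.0 = unchanged).
concepts = {
    "status_quo": lambda c: 1.0,
    "segment_based": lambda c: 0.95 if c.volume >= 500 else 1.10,
    "flat_increase": lambda c: 1.02,
}

profits = {name: profit(customers, rule) for name, rule in concepts.items()}
baseline = profits["status_quo"]

# The concept that changes profit least relative to the status quo.
closest = min((n for n in profits if n != "status_quo"),
              key=lambda n: abs(profits[n] - baseline))
print(profits, closest)
```

- Adding competitor behavior would require modeling further actors, their pricing rules and the customers' switching behavior, which is precisely the expansion towards a market model that the project team decided against.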

- 3.4

- The following example from the armed forces provides another illustration of how important it is to focus on core issues and to prioritize them. The project employed a constructive simulation[17] to examine decision-making processes in the context of air defense. The initial question was "How much time is left for the following decision-making process after the detection of an attacking aircraft?". During the conception phase of the project, however, it was extended step by step to encompass related issues. The revised simulation was also supposed to answer questions about aspects such as the quality of individual process variants, the probability of a successful defense and the effectiveness of various air defense forces. This increased the simulation requirements significantly. To answer the initial question, it was enough to model just the reconnaissance means of own forces and the flight patterns of enemy aircraft. The supplementary questions required including all associated processes and different air defense forces with their operational principles as well. The model exploded. Once again, the central questions were prioritized. It turned out that all project participants still felt that the initial question was crucial. They had simply assumed that the other issues could be easily integrated.

- 3.5

- In order to address the distraction pitfall, it is recommended to stay focused on the question as originally defined and to stay away from generating a "catch all" simulation.[18] Based on the research question, the relevant part of reality to be modeled should be defined as narrowly as possible. It is important to set boundaries and not to plan for a bigger model than absolutely necessary. To achieve this, the modeler has to have a clear understanding of the question to be addressed by the model. Both modelers and clients or superiors should pay attention to clear communication about the purpose of the simulation. Making the main independent and dependent variables of the simulation the starting point of modeling can help achieve this and ensures that the variables of interest are not lost from sight. Discussions on this basis help to choose the appropriate part of reality. In our experience it is helpful to look "from the outside in" at times in order to check whether the original question has been answered or whether the focus has shifted along the way. This requires constant self-discipline, but it is one of the most effective ways to keep in focus what is to be modeled and what is not.[19] Last but not least, a modeler needs the willingness (and strength) to defend what can reasonably be addressed by a single model. While working with the simulation model, expansions may become necessary when new questions, additional dependent variables or further parameters arise. However, they should not be included until a simulation model for the original question has been created that is fully functional and accepted by everybody working on it. Composing modules and adding elements stepwise is preferred over doing everything at once.

- 3.6

- The following basic rule summarizes how the distraction pitfall can be avoided: Articulate the question at hand clearly and stay focused on it! This helps to keep the part of reality to be modeled manageable.

Complexity Pitfall

- 3.7

- The complexity pitfall results from placing too much value on descriptive accuracy when creating a model structure. The consequences are a high level of detail and a broad scope, and the resulting complexity puts the tractability of the computational model and the analysis of the data produced by the model at risk.

- 3.8

- The following example from the armed forces illustrates the importance of explicitly considering the temporal dimension, one of the key drivers of a simulation model's complexity. Increasing the amount of time to be simulated tends to require further factors and plots[20] to be taken into account in the model. In a simulation project assessing how military patrols on combat vehicles should act when facing an unexpected ambush, the looming complexity was already evident in the design phase. The simulation of such combat would have needed the following sub-models for both own and enemy forces: the patrol's approach into the ambush area including the employment of reconnaissance equipment, the initial combat of mounted infantry in the ambush area, the subsequent battle of the forces that are still operational with a focus on the combat of dismounted forces, and the transition between all these battle phases. The real time to be simulated would have been about one hour and the scope of actions would have been almost unlimited. Despite the modeled complexity, the model would never have been sufficiently realistic. At that point, it was suggested not to simulate the entire engagement but to reduce the complexity by focusing the simulation on one or more essential sections of the combat. The following question arose: "Is there a period of time that is crucial and yields simulation results accepted by the experts to represent the outcome of the entire battle?" A discussion with the participating domain experts concluded that the first two to three minutes after running into an ambush should be regarded as decisive. During this period the element of surprise has the strongest impact on the course of the battle and the highest casualties of one's own forces have to be expected. Therefore, the initial minutes appear to determine the result of the subsequent battle. This approach allowed the modeling to focus on the first few minutes and not to deal with aspects such as the combat of dismounted infantry. The model's complexity was noticeably reduced. In addition, its (descriptive) quality could be considerably increased by focusing on these two to three minutes.

- 3.9

- A second driver of a model's complexity is the number of entities to be considered. The following simulation example from business addresses how to deal with this aspect of complexity. An international corporation uses transfer prices to charge its internal flows of goods and services. If subsidiary A provides products or services to subsidiary B, B pays A the costs plus a mark-up for the received goods or services. The rules for determining the mark-up affect both parties' profit. However, most rule changes impact considerably more subsidiaries than the two primary ones. In some cases, they might even have an effect on all subsidiaries. Given this reach, it was suggested to simulate the impact of proposed changes in advance. The simulation model basically consists of all inter-company relationships within the group. The simulation had to consider all transfer price mechanisms (mark-ups, etc.), which made simplifying these mechanisms impossible. However, the number of considered flows of goods and services could be reduced significantly by aggregating them. This allowed for reducing the number of internal products from more than 10,000 to fewer than 30 in this case. In addition, a drastic reduction of the number of inter-company relationships could be achieved by clustering corporate units based on production-related or regional aspects.[21]
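
- The aggregation step can be illustrated with a small sketch. The flows, product groups and mark-up rates below are invented for illustration, and the sketch assumes that mark-up rules can be stated per product group, which need not hold in every corporation. It shows how individual product flows collapse into a few group-level flows on which a cost-plus rule is then evaluated.

```python
from collections import defaultdict


def aggregate_flows(flows, product_group):
    """Sum the cost of internal flows per (seller, buyer, product group)."""
    totals = defaultdict(float)
    for seller, buyer, product, cost in flows:
        totals[(seller, buyer, product_group[product])] += cost
    return dict(totals)


def markup_effect(aggregated, markup):
    """Profit shifted by the mark-up: sellers gain what buyers pay on top of cost."""
    effect = defaultdict(float)
    for (seller, buyer, group), cost in aggregated.items():
        amount = cost * markup[group]
        effect[seller] += amount
        effect[buyer] -= amount
    return dict(effect)


# Hypothetical internal flows: (seller, buyer, product, cost of goods delivered).
flows = [
    ("A", "B", "bolt-m4", 100.0),
    ("A", "B", "bolt-m6", 50.0),
    ("A", "C", "gearbox", 400.0),
    ("B", "C", "bolt-m4", 30.0),
]
product_group = {"bolt-m4": "fasteners", "bolt-m6": "fasteners",
                 "gearbox": "assemblies"}
markup = {"fasteners": 0.10, "assemblies": 0.05}  # assumed mark-up rule

aggregated = aggregate_flows(flows, product_group)
effect = markup_effect(aggregated, markup)
print(aggregated)
print(effect)
```

- Because every mark-up received by one subsidiary is paid by another, the effects sum to zero across the group; checking this is a simple consistency test for such a model.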

- 3.10

- To avoid the complexity pitfall it is important to stay focused on the core cause-and-effect-relationships when creating a model. We would like to emphasize again that a model is a simplified depiction of reality. It is reduced to only the key elements and relationships that are relevant for that specific purpose (Burton and Obel 1995). Abstraction, unification and pragmatism often serve well as guides. They go along with a simplification and reduction of detail that is occasionally radical. If this is taken too far at some point, one can add details back in later, but it should be done gradually in order to keep an overview and an understanding of the model. Taking the model apart (as done in the example from the armed forces) can also contribute to managing complexity successfully. If a model is developed in several steps, any logic that is no longer needed should be removed from the model. If the remaining complexity is still too high, it helps to prioritize the dependent variables and possible parameters. Coordinating the assigned degrees of importance with management and/or other third-party stakeholders in the process creates a checklist that can be used to cross off items in order to reduce the complexity of the original question. As finding a simpler model often requires several iterations in the model construction process including substantial changes to the model (Varian 1997), substantial investments in time and effort have to be planned in advance for these tasks to effectively avoid the complexity pitfall.

- 3.11

- The following basic rule summarizes how the complexity pitfall can be approached: Look for possibilities to simplify your model structure in order to prevent the simulation model from putting its tractability and analysis at risk!

Implementation Pitfall

- 3.12

- The implementation pitfall describes poorly managed interdependencies between an abstract model structure and its implementation using IT support. Inappropriate software and programming tools play an important role in this regard.

- 3.13

- The following example from applying simulation to a business problem highlights the importance of working out most requirements of a simulation model prior to the IT implementation. A widely held belief is that simulation models are easily adaptable. In practice, however, seemingly minor adjustments are not easily implemented in an existing simulation tool. This became evident in a project that was set up to centrally structure and consolidate information about an organization's customers and competitors. The goal was to make the market behavior of customers and competitors transparent and to use the insight to develop different scenarios. The scenarios were to be quantified through what-if analysis. Due to the relatively large data volume, the project team decided to draw on a relational database system that was already in place. The software implementation of the planned production system[22] was started before the abstract modeling was actually completed. This quickly produced the first visible results. Initially, it was still possible to implement all new requirements such as including profit margins in addition to the sales information about customers. However, every new requirement rapidly increased the time and expenses spent on the implementation along with the model's complexity. The impact of the growing number of new requirements spread from affecting just the business logic within the model to affecting the database itself. It became harder to keep the database consistent with all new extensions. The database consistency was at risk, meaning that the software could no longer be used. A new data model and entity relationship model were created at that point based on all known requirements. This revealed the weaknesses of the existing IT implementation, but also the technical limitations of the existing database. It became obvious that certain interfaces to source systems for input data would not have been realizable at acceptable costs. A software selection process was initiated that led to an alternative. However, the costs of implementing requirements on the existing relational database system could have been avoided in the first place.

- 3.14

- The following example from the military domain illustrates another aspect of poorly managed interdependencies between the conceptual simulation model and the IT implementation: software requirements grow when one tries to solve all issues comprehensively in a single simulation system instead of decomposing the problem and splitting the simulation. As part of a combat operations simulation, the performance of a new generation of radio transceivers was to be analyzed. In addition to the combat operations themselves, the physical parameters of the built-in wireless devices and the relevant environmental parameters had to be simulated. None of the available constructive combat simulations met the requirements. The solution was a split simulation approach rather than an expensive model adaptation. First, the underlying battle was simulated with a combat simulation system that is normally used for training. For the entire run of the simulation, the geographical coordinates of the vehicles with built-in radios were saved. The resulting position data/tracks formed the basis for the subsequent analysis of the radio links, which was executed with another simulation system specialized in such evaluations. The original approach required addressing a secondary issue ("How is the course of the combat actions?") and the primary question ("How effective are the radio transceivers?") at the same time. Restructuring the problem allowed for focusing on the primary issue alone after a short preliminary simulation phase to answer the secondary question. The sequential handling of the questions simplified both the analysis approach and the selection of appropriate IT tools. It also avoided expensive model adjustments.
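
The split approach can be pictured as a two-stage pipeline in which the first stage only exports vehicle tracks and the second stage consumes them. The following Python sketch is purely illustrative: the random-walk movement, the fixed range threshold `MAX_RANGE_M`, and all function names are assumptions made for this example and are not taken from the simulation systems used in the project.

```python
import math
import random

MAX_RANGE_M = 5000.0  # hypothetical radio range; a real link model would be far richer


def simulate_tracks(n_vehicles, n_steps, seed=0):
    """Stage 1 (stand-in for the combat simulation): record all positions per time step."""
    rng = random.Random(seed)
    positions = [(rng.uniform(0, 10000), rng.uniform(0, 10000)) for _ in range(n_vehicles)]
    tracks = []
    for _ in range(n_steps):
        # Assumed movement model: a small random displacement per step.
        positions = [(x + rng.uniform(-200, 200), y + rng.uniform(-200, 200))
                     for x, y in positions]
        tracks.append(list(positions))
    return tracks


def link_availability(tracks, max_range=MAX_RANGE_M):
    """Stage 2 (stand-in for the radio analysis): share of vehicle pairs within range."""
    in_range = 0
    total = 0
    for positions in tracks:
        for i in range(len(positions)):
            for j in range(i + 1, len(positions)):
                total += 1
                if math.dist(positions[i], positions[j]) <= max_range:
                    in_range += 1
    return in_range / total if total else 0.0


# Stage 2 depends only on the saved tracks, not on how stage 1 produced them.
tracks = simulate_tracks(n_vehicles=6, n_steps=50)
availability = link_availability(tracks)
print(f"share of vehicle pairs within range: {availability:.2f}")
```

Because the second stage needs nothing but the saved tracks, either stage could be replaced by a specialized tool without touching the other.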

- 3.15

- Addressing this pitfall is remarkably easy: The abstract simulation model should determine the IT implementation choice, not vice versa. Criteria to be considered when selecting the software include the type of analytical relationships to be captured, the data volume to be processed, the required level of integration with existing source data systems, and the person(s) who will actively use the simulation.[23] Stepwise prototyping can help to work out all these criteria before starting the software selection process. It is important to be guided by subject matter aspects and to keep in mind that the software will be nothing but a support tool for simulation applications. The decisive factor is whether the software is suitable for implementing the abstract simulation model. The cost-benefit ratio is another key element to consider. Adjustments to the abstract simulation model or to the software requirements that are insignificant from a subject matter perspective can sometimes lead to a much more economical IT implementation.

- 3.16

- Once again, we have devised a basic rule for how the implementation pitfall can be approached: Choose the IT implementation based on the model structure at hand rather than based on existing IT systems to avoid restrictions and complications for the IT realization!

Interpretation Pitfall

- 3.17

- Once a model is developed, users are at risk of taking the simulation results at face value. The interpretation pitfall refers to losing a critical distance to the simulation results, not validating the results sufficiently or disregarding the limits of the model's validity.

- 3.18

- The following example from the armed forces illustrates the importance of checking a model even when it is partially based on an existing simulation and provides interesting findings. A feasibility study was supposed to use simulations to analyze the effectiveness of checkpoints in preventing vehicle-based transports of explosives within cities. Agent-based modeling of the vehicles loaded with explosives formed the basis for the chosen simulation. The agents could decide which route to take in order to meet their objectives and how to respond to detected checkpoints. Existing simulations informed the rule sets for modeling the agents. Further input came from specific problem-based rule sets. After a large number of simulation runs with different starting parameters, the data interpretation generated some novel and interesting findings. The data was already being prepared for presentations, but the sponsor of the study mistrusted the results. This led to a re-examination of the data. It was then noted that some clusters of data lay beyond any reasonable distribution, were not linked to the other result clusters, and fell outside the estimated range that subject matter experts had previously defined. Suspicion arose that those outliers could represent model errors. As part of an "as is-is not" analysis, individual simulation runs with results closer to the expected outcome were compared with simulation runs that displayed surprising results. Their parameters were analyzed for equality and inequality. It was found that some of the specific rule sets added to the agent-based model were insufficient. Consequently, the agents reverted to the rule sets of the original model, which were inadequate for the problem at hand. A revision of the rule sets led to more accurate simulation results. Overreliance on the first simulation results would have resulted in completely wrong conclusions.
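
The comparison of plausible and surprising runs can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the study: the run records, the parameter names, and the `rule_set` values are invented, and the helper `distinguishing_parameters` is hypothetical. It flags every parameter whose values never overlap between the two groups of runs.

```python
def distinguishing_parameters(expected_runs, surprising_runs):
    """Return the parameters whose values never overlap between the two groups."""
    result = {}
    for name in expected_runs[0]:
        expected_values = {run[name] for run in expected_runs}
        surprising_values = {run[name] for run in surprising_runs}
        if expected_values.isdisjoint(surprising_values):
            result[name] = (expected_values, surprising_values)
    return result


# Invented run records; each dictionary holds the parameters of one simulation run.
expected_runs = [
    {"n_checkpoints": 4, "rule_set": "problem_specific", "traffic": "high"},
    {"n_checkpoints": 6, "rule_set": "problem_specific", "traffic": "low"},
]
surprising_runs = [
    {"n_checkpoints": 4, "rule_set": "fallback", "traffic": "high"},
    {"n_checkpoints": 6, "rule_set": "fallback", "traffic": "low"},
]

suspects = distinguishing_parameters(expected_runs, surprising_runs)
print(sorted(suspects))
```

In this invented data set only `rule_set` separates the two groups, which would direct attention to the rule sets rather than to the scenario parameters.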

- 3.19

- The following example from a business application illustrates that each model has core assumptions that must be kept in mind at all times. The management of a company expected significant changes in the competitive environment and intended to adjust pricing accordingly. Information on costs is an important part of pricing decisions. Using a simplified model of the company's cost accounting system was thought to be the natural choice for the simulation approach. Model simplifications were based on aggregating products into product groups, process steps into groups of processes, etc. These aggregations were supposed to enable an appropriate level of detail for fast simulations. It is important to note that this form of modeling always assumes that the composition of the formed aggregates does not change substantially. Take a product group, for example: The proportions between the individual products must remain constant. Otherwise, the simplification deviates from the original cost accounting system. The larger the shifts within a product group, the larger these deviations become. This fact is easily forgotten because product groups (aggregates) in a simulation model are usually used like standard products in daily business activities. When a product substitution between different product groups is simulated, the calculated costs of all products and product groups will differ from the results of the original costing because a core assumption of the simulation model is no longer valid (no quantitative change in the composition of the various product groups). Thus, if simulation results are not consistently checked against the validity limits of the model they were calculated with, incorrect conclusions could easily be drawn, with potentially devastating consequences.
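
A small numerical sketch may make the constant-composition assumption more tangible. All figures below are invented for illustration, and the two-product group is a deliberately minimal stand-in for a real cost accounting system. The aggregated model fixes one average unit cost when it is built, whereas the detailed costing follows the actual product mix.

```python
# Hypothetical product group with two products and their unit costs.
unit_cost = {"A": 10.0, "B": 30.0}

# Quantities at the time the aggregated model was built (the assumed, fixed mix).
base_quantities = {"A": 500, "B": 500}


def detailed_cost(quantities):
    """Cost according to the detailed costing, product by product."""
    return sum(quantities[p] * unit_cost[p] for p in quantities)


# The aggregate carries a single average unit cost derived from the base mix.
group_unit_cost = detailed_cost(base_quantities) / sum(base_quantities.values())


def aggregated_cost(quantities):
    """Cost according to the simplified model, which only sees the group volume."""
    return sum(quantities.values()) * group_unit_cost


# Same total volume, but the mix within the group has shifted toward product B.
shifted_quantities = {"A": 200, "B": 800}

model_result = aggregated_cost(shifted_quantities)
true_result = detailed_cost(shifted_quantities)
print(f"aggregated model: {model_result:.0f}, detailed costing: {true_result:.0f}")
```

For the base mix both calculations agree by construction. Once the mix shifts, the aggregated model still reports the old cost level, and the gap grows with the size of the shift.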

- 3.20

- To avoid this pitfall, simulation results should be questioned by evaluating their plausibility and by trying to find alternative explanations for the results. Instead of relying on checks by the simulation user alone for revealing discrepancies, it is preferable for a third party to execute this plausibility check. Adding a second set of eyes usually improves the quality check of simulation results. Furthermore, selected results should be randomly tested and recalculated. If the results appear counter-intuitive, they have to be broken down into sub-questions and components to be analyzed at a more detailed level. A plausible explanation has to be found for every component. Such analyses have to take into account the simulation context (i.e. the structures of reality actually represented by the model), the validity boundary of the chosen model structure, the parameters used for the specific simulation, and deliberately tolerated approximations and inaccuracies.

- 3.21

- The following basic rule addresses how to approach the interpretation pitfall: Critically analyze simulation behavior and scrutinize its results to avoid wrong conclusions!

Acceptance Pitfall

- 3.22

- The acceptance pitfall refers to a high level of skepticism toward simulation results among third parties who were not directly involved in producing them.

- 3.23

- The following example from the armed forces shows why the addressees of a simulation model need to be involved early in the process so that they accept the uncertainty inherent in the simulation results. The simulation approach supported an effectiveness and efficiency comparison of different anti-aircraft systems. It was to be conducted based on preset battle scenarios. Many of the necessary adjustments to the existing model rested merely upon estimates and experience, which meant that any result carried unquantifiable uncertainties. After the first simulation runs it became clear that presenting results in the form of single numbers would be perceived as "pseudo-rationality" and would therefore not be accepted. The way out of the emerging acceptance pitfall was to determine a reasonable interval instead of relying on pseudo-precise numbers. With the consent of the project client, the above-mentioned adjustments were now executed for a "best-case scenario" and a "worst-case scenario". The subsequent simulation results provided the information on "it cannot be better" and on "it cannot get worse" for each air defense system. The true values naturally had to fall within those two boundaries. This approach increased the acceptance of the simulation results considerably. Instead of having to justify a seemingly accurate curve that would vary by an unpredictable percentage due to the underlying model assumptions, the simulation results represented upper and lower margins that were much easier to convey and explain. Since the client of the project as well as the domain experts had participated in bringing the best-case and worst-case assumptions into the model, they were willing to accept the simulation results.
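
The idea of reporting an interval rather than a single number can be sketched as follows. This is a toy Monte Carlo model under stated assumptions: the two probabilities, their best-case and worst-case values, and the function `simulate_effectiveness` are hypothetical and unrelated to the actual air defense model. Both scenarios reuse the same random draws, so the worst-case result can never exceed the best-case result.

```python
import random


def simulate_effectiveness(detection_prob, kill_prob, n_engagements=10000, seed=42):
    """Share of engagements in which a target is both detected and defeated."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_engagements):
        # Draw both numbers in every engagement so that all scenarios
        # run on an identical random stream.
        detect_draw = rng.random()
        kill_draw = rng.random()
        if detect_draw < detection_prob and kill_draw < kill_prob:
            hits += 1
    return hits / n_engagements


# Hypothetical expert estimates agreed on with the client.
worst_case = {"detection_prob": 0.6, "kill_prob": 0.5}
best_case = {"detection_prob": 0.9, "kill_prob": 0.8}

lower = simulate_effectiveness(**worst_case)
upper = simulate_effectiveness(**best_case)
print(f"effectiveness lies between {lower:.2f} and {upper:.2f}")
```

The reported interval is only as good as the two sets of assumptions behind it, which is why agreeing on them with the client and the domain experts matters.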

- 3.24

- Another aspect of the acceptance pitfall is that all stakeholders of a simulation have their own expectations about the results, which have to be both understood and managed. The following example from business highlights one way of dealing with different expectations. When presenting the results derived from a simulation, the feedback "I do not believe in those results!" is probably among the responses a simulation team fears most. If it happens, it is essential to quickly detect the reason for the doubt. In this case, a marketing director responded that way to the presentation of simulated product profits for a planning scenario. The first objection, that the model did not use the correct planning scenario or correct data as the starting point, could quickly be refuted. The marketing director had agreed with the input variables and outcome reports earlier, which ruled out deviations between expectations and outcome variables (such as gross margin instead of profit) as an explanation for the rejection. Reasons had to be sought in the simulation logic used and in his numerical expectations. As it turned out, his expectations were based on an estimation of how changes in sales volume would impact profit and on factoring in economies of scale and other feasible cost reductions. Once this was discovered, it was possible to explain the unexpected but correct simulation results to the marketing director. The simulation's business logic also considered the distribution logic for fixed costs. The simulated scenarios showed considerable shifts, especially towards non-focus products, and this also changed the allocation of fixed costs to focus products. This explanation, together with a clear account of the simulation logic, gained acceptance for the results and gave the marketing director new insights.
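
How a shift in the product mix can change the fixed costs allocated to a product is easy to show with invented numbers. The sketch below assumes that fixed costs are allocated in proportion to sales volume. The source does not state the allocation key that was actually used, so the key, the figures, and the function names are assumptions for illustration only.

```python
FIXED_COSTS = 1200.0        # hypothetical total fixed costs
MARGIN_PER_UNIT = 10.0      # hypothetical contribution margin per unit


def allocated_fixed_costs(volumes, product):
    """Allocate fixed costs in proportion to sales volume (assumed allocation key)."""
    return FIXED_COSTS * volumes[product] / sum(volumes.values())


def product_profit(volumes, product):
    """Contribution margin of a product minus its allocated share of fixed costs."""
    return volumes[product] * MARGIN_PER_UNIT - allocated_fixed_costs(volumes, product)


base = {"focus": 100, "non_focus": 100}
scenario = {"focus": 120, "non_focus": 280}   # strong shift toward non-focus products

# Expectation that ignores the allocation logic: the fixed-cost share stays as in the base case.
naive_profit = scenario["focus"] * MARGIN_PER_UNIT - allocated_fixed_costs(base, "focus")
simulated_profit = product_profit(scenario, "focus")

print(f"base profit of focus product: {product_profit(base, 'focus'):.0f}")
print(f"naive expectation: {naive_profit:.0f}, simulated: {simulated_profit:.0f}")
```

The difference between the two figures stems entirely from the changed fixed-cost share, which is the kind of effect that a volume-based rule of thumb does not capture.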

- 3.25

- To overcome the acceptance pitfall, it is important to increase the confidence in a tool that some third-party decision makers may not be familiar with. This is easier to accomplish if the simulation model and its underlying logic can be explained in simple terms. Excessively mathematical explanations often seem daunting. We feel that the ability to establish the model's plausibility through existing or simple examples and to demonstrate the quality of the model's data sources and parameters is a key element. Referring to relationships and patterns that are well known, commonly used and thus accepted further adds to the persuasive power of the simulation results. When preparing the results presentation, possible objections to the results as well as differing expectations of third-party decision makers should be anticipated so that clear explanations in simple terms can be provided. If simulation software is used or programmed, we also recommend ensuring user friendliness. Parameter input should be intuitive and the report of simulation results should be clearly laid out. Both allow the addressee of the simulation model to focus on the simulation model and its results (and not on technical aspects).

- 3.26

- The following basic rule summarizes how to approach the acceptance pitfall: Avoid the impression of simulation as a "black box" and consider the expectations of your audience to increase acceptance when communicating your results!

- 3.27

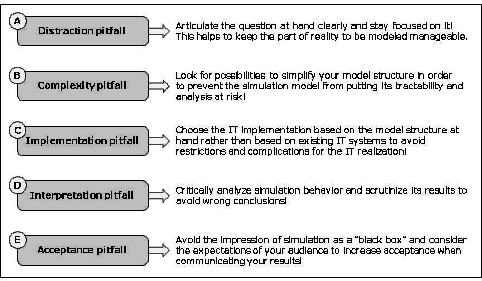

- Figure 3 summarizes the pitfalls and guidelines for addressing them.

Figure 3. Potential pitfalls when using simulations and basic rules how to avoid them

Discussion

- 4.1

- After identifying typical pitfalls in simulation modeling and suggesting how to avoid them, we can now assess them in a broader context. The practical examples given above for every pitfall are not isolated events, but can be related to the methodological discussion in social simulation. We will examine this for all five pitfalls.

- 4.2

- Both the distraction and the complexity pitfall result in increasing complexity, but the methodological discussion in social simulation seems to center predominantly on the "right level of complexity". This paper distinguishes between the questions "What are the structures and processes of the target system that should be modeled?" and "How should these be modeled?", a distinction that is often not made as explicitly. Keeping the distinction in mind could help to resolve some debates or at least clarify what really is at stake for the different groups: Are certain elements of reality needed because they are required for effectively addressing the research question at hand, or are certain simplifications and abstractions rejected because "they do not work" and/or are not acceptable?

- 4.3

- In line with the suggestions in this paper, Burton and Obel (1995) are widely recognized for emphasizing the primacy of the research question when making modeling decisions.[24] They stress that a model's validity can only be assessed with explicit reference to the purpose at hand (similarly Railsback and Grimm 2011). Accordingly, realism per se should not be the motivation to include elements in a model. Their relevance for the question to be addressed by the model should be the decisive factor. A corollary of this position (although not stated explicitly by Burton and Obel 1995) is that extending the range of questions to be addressed increases the complexity of the model. The so-called "building block approach" addresses related research questions stepwise; it is applied to engineering computational models, as is the related strategy of designing models in a modular form (Carley 1999; Orcutt 1960). The basic idea is to start with a simple model that has to be well tested and understood first. Then, a new block can be added to provide further functionality. This extended model, in turn, has to be thoroughly tested and understood before more aspects can be included.

- 4.4

- Concerning the issue of how to handle the complexity of models, two prominent positions exist in the social simulation literature (see Edmonds and Moss 2005). One is the so-called KISS principle ("Keep it simple, stupid"). According to this principle, one should start with a simple model and only add aspects as required. The other, the KIDS principle ("Keep it descriptive, stupid"), instructs modelers to start with a very descriptive model and to reduce complexity only if justified by evidence. The suggestion for avoiding the complexity pitfall shares the strong preference for simple models with the KISS principle, but acknowledges the KIDS strategy of sometimes starting with a more complex model to find adequate simplifications.

- 4.5

- To our knowledge, the implementation pitfall has not been discussed directly in the social simulation community. Nevertheless, there is a lot of discussion on the relative merits of different ABM platforms and programming languages (e.g., Nikolai and Madey 2009; Railsback, Lytinen and Jackson 2006). The relationship between different platforms in the modeling process has been addressed as well. Some suggest conducting feasibility studies first by implementing a model in NetLogo before switching to more sophisticated platforms like RePast or Swarm (e.g., Railsback, Lytinen and Jackson 2006). If there is any debate regarding the requirements for data input, it mainly refers to GIS data. One reason for this might be that many social simulation models so far do not use data directly as input but rather for validation (for an overview see Heath, Hill and Ciarallo 2009).

- 4.6

- Related to the interpretation pitfall, the methodological discussion has addressed a number of aspects and suggested several remedies. The social simulation community strongly emphasizes the importance of model replication to detect possible errors (e.g., Axelrod 1997; Edmonds and Hales 2003). The full potential of this approach has not yet been realized in many practical settings. It seems easier to accomplish in an academic setting (if at all) than in business or the armed forces. In many descriptions of the research process, verification, i.e. the process of checking whether the model structure is correctly implemented, is also considered an important task in simulation modeling (Law 2006; Railsback and Grimm 2011). The literature offers numerous suggestions and strategies for such a check of the implemented model. Among the most basic are techniques for the verification of simulation computer programs (Law 2006), while others address rather subtle and easily overlooked issues like "floating point errors" and "random number generators" (Izquierdo and Polhill 2006; Polhill, Izquierdo and Gotts 2004). Systematically scrutinizing simulation model behavior and results is seen as another effective way of controlling for accurate model implementation (Lorscheid, Heine and Meyer in press).
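
Two of the subtle issues mentioned above can be demonstrated in a few lines of Python. The snippet is a generic illustration and is not taken from the cited papers: repeated addition of 0.1 does not yield exactly 1.0 in binary floating-point arithmetic, and two random number generators started with the same seed produce identical streams, which is what makes a simulation run reproducible.

```python
import math
import random

# Floating-point accumulation: ten additions of 0.1 do not give exactly 1.0.
total = 0.0
for _ in range(10):
    total += 0.1

exact_match = (total == 1.0)               # exact comparison fails
close_match = math.isclose(total, 1.0)     # tolerance-based comparison succeeds
print(exact_match, close_match)

# Random number generators: identical seeds give identical streams.
rng_a = random.Random(123)
rng_b = random.Random(123)
stream_a = [rng_a.random() for _ in range(5)]
stream_b = [rng_b.random() for _ in range(5)]
print(stream_a == stream_b)
```

A threshold rule such as "act when the accumulated value equals 1.0" would therefore never fire in the first case, which is one way in which such errors can silently change model behavior.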

- 4.7

- As simulation researchers are frequently confronted with the problem of acceptance, several strategies for dealing with it have been derived. One of them is to involve stakeholders as early as possible in model construction, especially when defining assumptions (Law 2006), which matches the recommendation for overcoming the distraction pitfall. A more recent strategy to reduce the black-box character of simulation models is to use principles of design for simulation experiments originally created for laboratory and field experiments in order to analyze and report simulation model behavior (Lorscheid, Heine and Meyer in press). Most of the recent standards for presenting simulation research also explicitly aim at increasing the accessibility and, thereby, the acceptance of simulation models and their results (Grimm et al. 2006; Richiardi et al. 2006).

- 4.8

- Overall, a look at the methodological discussion demonstrates that several related suggestions exist that implicitly address the pitfalls identified in this paper. Although science, the armed forces and business provide very different settings for simulations, there are still some remarkable similarities that can serve as the basis for further discussion and mutual learning. By looking at the pitfalls we were able to examine them from a different perspective and to introduce some useful concepts, such as differentiating between "what are the structures and processes of the target system that should be modeled" and "how should these be modeled" when calibrating the desired level of complexity of simulation models. In addition, the "logic of failure" (Dörner 1996) leading to these pitfalls has hardly been addressed so far by the methodological discussion in social simulation.

Conclusion

- 5.1

- We identified five possible pitfalls of simulation modeling and provided suggestions for how to avoid them. The occurrence of and the logic behind the pitfalls were discussed with reference to practical experiences from military and managerial applications. The social simulation community benefits from this paper in three ways: First, we increase awareness of typical pitfalls of simulation modeling and their possible causes. Second, our paper provides guidance in avoiding the pitfalls by establishing five basic rules for modeling and fosters a more general discussion of elements that prevent the pitfalls from taking effect. Third, the paper contributes to the methodological discussion from a different perspective, as it looks at potential mistakes and develops recommendations based on actual observations in practice instead of deriving recommendations directly from a specific methodological position.

- 5.2

- Some limitations have to be acknowledged. First, although the authors have accumulated more than 35 years of experience between them with simulation modeling in very different environments, we do not claim that the identified problems and the subsequent suggestions are the "ultimate truth". We also recognize that we might have been influenced by factors such as the typical problems we analyzed, the demands of the settings we work in or our academic backgrounds. With this paper, we want to share our experiences and the examples we observed in business and the armed forces to enrich the methodological discussion in social simulation. Second, the number of possible pitfalls is not limited to those identified in this paper. We relate the five pitfalls to typical steps during a simulation modeling project, but additional obstacles might arise at other stages or even at one of the stages we already analyzed in terms of potential pitfalls. Finally, we accept that some of the pitfalls we identified can be observed in, but are not specific to, simulation modeling, while others like the interpretation or the acceptance pitfall are very typical for simulation modeling.

- 5.3

- Several avenues for further research exist. First, the extent to which simulation projects in general fall victim to the identified pitfalls could be investigated. Second, it would be equally interesting to find out which of the pitfalls are particularly prevalent in social simulation research. Addressing those first could help improve the overall quality of social simulation projects. Another possibility lies in the search for additional pitfalls that might be specific to social simulation research. Beyond that, it could be of interest to identify additional context factors that increase the probability of getting caught in a pitfall. Research at the intersection between simulation modeling pitfalls and psychology might be able to provide additional empirical support and further insights into the relations explored in this paper.

Notes

[1] For a similar process description see Railsback and Grimm (2011). Other related conceptualizations of the logic of simulation as a research method are provided by Gilbert and Troitzsch (2005) or Law (2006).

[2] All models have three elements in common. They represent something; they have a purpose; they are reduced to the key attributes that are relevant for that specific purpose (Morgan 1998; Burton and Obel 1995). The purpose is to answer the specific research question at hand.

[3] It should be mentioned that these revisions also occur for the other steps, e.g. a refined research question (Railsback and Grimm 2011).

[4] We do not claim to be exhaustive, but drawing on our own experience these are the most common pitfalls.

[5] The description of these pitfalls is based on our practical experiences. For explicit references to the methodological discussion please see section 4.

[6] Business settings in particular tend to use increasingly vague formulations, partly for political reasons.

[7] It should be noted that clients are typically less willing to accept such iterations in the armed forces or a business environment. While the positive effect of modeling on clarifying and refining the project's objective is still acknowledged, a very clear and precise understanding is needed from the very beginning of a simulation project and one should, therefore, invest time and effort in this task. Having all stakeholders agree on an explicit statement of the project objective is a good first step.

[8] According to Burton and Obel (1995:69) "this balance is realized by creating simple computational models and simple experimental designs which meet the purpose or intent of the study."

[9] The psychological literature addresses this via the concept of tolerance for uncertainty and ambiguity, which differs between individuals and cultures (see Budner 1962).

[10] While additional, albeit unused, potential is not harmful per se, it is usually accompanied by high costs. High costs also arise if programmers have to bend over backwards for the technical realization due to software that is neither too weak nor too powerful but simply unsuitable for the concrete simulation question.

[11] Other important reasons in practical settings are: corporate guidelines exist for certain IT tools; IT experts insist on certain software, even if it is not suitable for the conceptual model; or modeling experts overestimate their programming skills.

[12] In an academic setting, the confirmation bias can also work more subtly given the fact that models are often expected to produce new, counterintuitive insights, which increases the willingness to believe counterintuitive but "wrong" results.

[13] In addition, some results are simply unwanted by decision makers (because of a hidden agenda or other political reasons). In this case, the still widespread skeptical attitude towards simulation modeling makes it easier for them to raise doubts.

[14] There is also a cultural aspect to what is accepted as a result. In mathematics, for example, negative results are considered an important contribution because they show that a certain approach to solving a problem no longer requires testing in the future. In a business setting, clients typically expect a solution that actually solves a problem.

[15] This links to our discussion of the interpretation pitfall and the willingness to believe counterintuitive but "wrong" results.

[16] We intentionally created guidelines in the form of simple and concise rules to make it easy to remember them.

[17] The term "constructive simulation" is frequently used in the military domain to categorize simulation systems. A definition can be found in the M&S glossary of the US Department of Defense: "A constructive simulation includes simulated people operating simulated systems. Real people stimulate (provide inputs to) such simulations, but are not involved in determining the outcomes." (DoD 2010:107)

[18] Possibly the research question is refined during this step (Railsback and Grimm 2011; see also footnote 3). In this case it is recommended to make a focus shift, but no expansion of the research question.

[19] As an easy and fast "self-test", the modeler should always know which and how many input parameters will be needed and how they are determined.

[20] The following example illustrates this point: In a simulation period of 30 minutes (real time), weather changes can be neglected in most cases. But is that still true for a simulation period of three days? To create a realistic simulation, changes in the weather conditions should be included in that case. However, this does not simply add one more parameter to the model. A weather model has to be developed and/or linked, the influence of weather conditions on the dependent variables of the simulation has to be examined, the overall scenario becomes more comprehensive, and a variety of new rule sets have to be developed. A new "plot" has to be integrated into the model.

[21] Splitting the corporation into sub-groups (in simulation often called "modularization") also helps to reduce complexity. Because modules reflect only parts of the whole corporation, business logic within the simulation model decreases too. Unification provides an alternative approach to modularization. When unifying, the goal is to eliminate items if they differ in business logic and to only keep a flexible element that can be adapted (or customized) for the needs of the specific unit. Although this flexible element is often more complex than any of the original items, it often is much easier to understand one flexible element than to keep an overview of a large number of individual items.

[22] A production system means a fully developed software implementation of the simulation model being worked on with real data.

[23] Most organizations have introduced a professional software selection process nowadays that can support the task. Personal preferences for a specific software tool should make no difference.

[24] Meyer, Zaggl and Carley (2011) find this paper to be among the top-cited sources for both time periods investigated.

References

AXELROD, R (1997). Advancing the art of simulation in the social sciences. Complexity, 3(2), 16-22. [doi:10.1002/(SICI)1099-0526(199711/12)3:2<16::AID-CPLX4>3.0.CO;2-K]

BOLAND, L A (2001). Towards a useful methodology discipline. Journal of Economic Methodology, 8(1), 3-10. [doi:10.1080/13501780010022785]

BUDNER, S (1962). Intolerance of ambiguity as a personality variable. Journal of Personality, 30(1), 29-50. [doi:10.1111/j.1467-6494.1962.tb02303.x]

BURTON, R and OBEL, B (1995). The Validity of Computational Models in Organization Science: From Model Realism to Purpose of the Model. Computational and Mathematical Organization Theory, 1(1), 57-71.

CARLEY, K M (1999). On generating hypotheses using computer simulations. Systems Engineering, 2(2), 69-77. [doi:10.1002/(SICI)1520-6858(1999)2:2<69::AID-SYS3>3.0.CO;2-0]

DoD, Department of Defense (2010). DoD Modeling and Simulation Glossary. 5000.59-M, Department of Defense, 1998 and updates (see http://en.wikipedia.org/wiki/Glossary_of_military_modeling_and_simulation).

DÖRNER, D (1996). The logic of failure: Recognizing and avoiding error in complex situations. Basic Books.

EDMONDS, B and HALES, D (2003). Replication, replication and replication: Some hard lessons from model alignment. Journal of Artificial Societies and Social Simulation, 6(4), 11. https://www.jasss.org/6/4/11.html

EDMONDS, B and MOSS, S (2005). From KISS to KIDS – An 'Anti-simplistic' Modelling Approach. In Davidsson, P, Logan, B and Takadama, K (Ed.): Multi-Agent and Multi-Agent-Based Simulation. Berlin / Heidelberg: Springer, pp. 130-144.

EPSTEIN, J M (2008). Why model? Journal of Artificial Societies and Social Simulation, 11(4), 12. https://www.jasss.org/11/4/12.html

GILBERT, G N and TROITZSCH, K G (2005). Simulation for the social scientist. Maidenhead: Open University Press.

GRIMM, V, BERGER, U, BASTIANSEN, F, ELIASSEN, S, GINOT, V, GISKE, J, GOSS-CUSTARD, J, GRAND, T, HEINZ, S K, HUSE, G, HUTH, A, JEPSEN, J U, JORGENSEN, C, MOOIJ, W M, MÜLLER, B, PE'ER, G, PIOU, C, RAILSBACK, S F, ROBBINS, A M, ROBBINS, M M, ROSSMANITH, E, RÜGER, N, STRAND, E, SOUISSI, S, STILLMAN, R A, VABO, R, VISSER, U and DEANGELIS, D L (2006). A Standard Protocol for describing Individual-based and Agent-based Models. Ecological Modelling, 198(1-2), 115-126. [doi:10.1016/j.ecolmodel.2006.04.023]

HEATH, B, HILL, R and CIARALLO, F (2009). A survey of agent-based modeling practices (January 1998 to July 2008). Journal of Artificial Societies and Social Simulation, 12(4), 9. https://www.jasss.org/12/4/9.html

IZQUIERDO, L R and POLHILL, J G (2006). Is your model susceptible to floating-point errors? Journal of Artificial Societies and Social Simulation, 9(4), 4. https://www.jasss.org/9/4/4.html

LAW, A M (2006). Simulation modeling and analysis. 4th ed. Boston: McGraw-Hill.

LORSCHEID, I, HEINE, B-O and MEYER, M (in press). Systematic Design-of-Experiments (DOE) as a Way out for Simulation as a 'Black Box': Increasing Transparency and Effectiveness of Communication. Computational and Mathematical Organization Theory.

MEYER, M, LORSCHEID, I and TROITZSCH, K G (2009). The development of social simulation as reflected in the first ten years of JASSS: A citation and co-citation analysis. Journal of Artificial Societies and Social Simulation, 12(4), 12. https://www.jasss.org/12/4/12.html

MEYER, M, ZAGGL, M A and CARLEY, K M (2011). Measuring CMOT's intellectual structure and its development. Computational & Mathematical Organization Theory, 17(1), 1-34. [doi:10.1007/s10588-010-9076-0]

MOORE, D A and HEALY, P J (2008). The trouble with overconfidence. Psychological Review, 115(2), 502-517. [doi:10.1037/0033-295X.115.2.502]

MORGAN, M S (1998). Models. In Davis, J B, Hands, D W and Mäki, U (Ed.): The Handbook of Economic Methodology. Cheltenham: Elgar, pp. 316-321.

NICKERSON, R S (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220. [doi:10.1037/1089-2680.2.2.175]

NIKOLAI, C and MADEY, G (2009). Tools of the trade: A survey of various agent based modeling platforms. Journal of Artificial Societies and Social Simulation, 12(2), 2. https://www.jasss.org/12/2/2.html

ORCUTT, G H (1960). Simulation of economic systems. The American Economic Review, 50(5), 893-907.

PIERCE, J L, KOSTOVA, T and DIRKS, K T (2003). The state of psychological ownership: Integrating and extending a century of research. Review of General Psychology, 7(1), 84-107. [doi:10.1037/1089-2680.7.1.84]

POLHILL, G, IZQUIERDO, L and GOTTS, N (2004). The ghost in the model (and other effects of floating point arithmetic). Journal of Artificial Societies and Social Simulation, 8(1), 5. https://www.jasss.org/8/1/5.html

RAILSBACK, S F and GRIMM, V (2011). Agent-based and individual-based modeling: A practical introduction. Princeton, N.J.: Princeton University Press.

RAILSBACK, S F, LYTINEN, S L and JACKSON, S K (2006). Agent-based simulation platforms: Review and development recommendations. Simulation, 82(9), 609-623. [doi:10.1177/0037549706073695]

REISS, J (2011). A plea for (good) simulations: Nudging economics toward an experimental science. Simulation & Gaming, 42(2), 243-264. [doi:10.1177/1046878110393941]

RICHIARDI, M, LEOMBRUNI, R, SAAM, N J and SONNESSA, M (2006). A Common Protocol for Agent-Based Social Simulation. Journal of Artificial Societies and Social Simulation, 9(1), 15. https://www.jasss.org/9/1/15.html

RUSSO, J E and SCHOEMAKER, P J H (1992). Managing overconfidence. Sloan Management Review, 33(2), 7-17.

SCHMIDT, J B and CALANTONE, R J (2002). Escalation of commitment during new product development. Journal of the Academy of Marketing Science, 30(2), 103-118. [doi:10.1177/03079459994362]

STAW, B M (1981). The escalation of commitment to a course of action. Academy of Management Review, 6(4), 577-587.

VARIAN, H R (1997). How to build an economic model in your spare time. The American Economist, 41(2), 3-10.